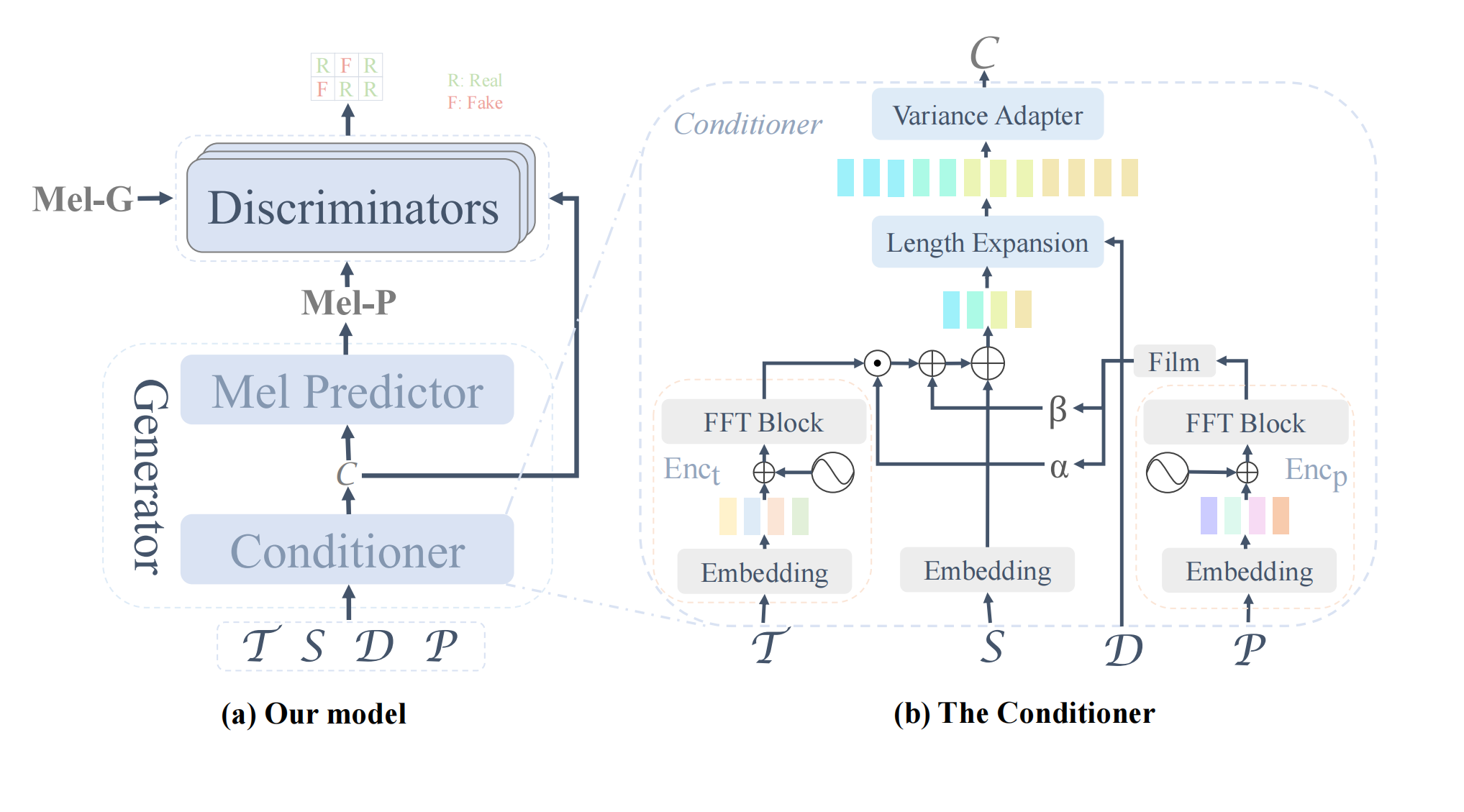

The official implementation of our AAAI-24 paper, *FT-GAN: Fine-grained Tune Modeling for Chinese Opera Synthesis*.

- Create an environment with Anaconda, for example:

  ```bash
  conda create -n gezi_opera python=3.8
  conda activate gezi_opera
  git clone https://github.com/double-blind-pseudo-user/Gezi_opera_synthesis
  cd Gezi_opera_synthesis
  pip install -r requirements.txt
  ```

- Download the pretrained vocoder and the pitch extractor, and unzip both archives into `checkpoints` before training your acoustic model.
- Download the dataset and unzip it into `data/processed`.
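Before binarizing, it can help to confirm that the archives landed in the right places. The following is a minimal bash sketch, not part of the repository: the function names `check_dir_nonempty` and `preflight` are hypothetical, and the check only verifies that `checkpoints` and `data/processed` exist and are non-empty, not that they contain the expected files.

```bash
# Hypothetical pre-flight check (not part of the repository).
# Succeeds only if the given directory exists and contains at least one entry.
check_dir_nonempty() {
  local dir="$1"
  if [ -d "$dir" ] && [ -n "$(ls -A "$dir")" ]; then
    echo "ok: $dir"
    return 0
  fi
  echo "missing or empty: $dir" >&2
  return 1
}

# Checks both directories from the download steps; returns non-zero if either
# is missing or empty. Run it from the repository root.
preflight() {
  local status=0
  check_dir_nonempty checkpoints || status=1
  check_dir_nonempty data/processed || status=1
  return "$status"
}
```

Source it from the repository root and run `preflight`; a non-zero exit status means one of the directories is still missing or empty.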

Run the following commands to binarize the data:

```bash
export PYTHONPATH=.
CUDA_VISIBLE_DEVICES=0 nohup python data_gen/tts/bin/binarize.py \
    --config usr/configs/gezixi.yaml \
    > data_processing.log 2>&1 &
```

The binarized data will be saved to `data/binary`.
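Because the binarize command runs in the background with `nohup ... &`, the prompt returns before binarization finishes. One way to block until the job is done and then check its output is sketched below. It assumes you stay in the same shell that launched the job, since `wait` only works on that shell's own children; the helper name `wait_and_check` is hypothetical.

```bash
# Hypothetical helper: wait for a background job, then check its output.
# Arguments: PID of the job, directory the job should populate, its log file.
wait_and_check() {
  local pid="$1" out_dir="$2" log_file="$3"
  # Block until the job exits; $? is then the job's exit status.
  wait "$pid"
  local rc=$?
  if [ "$rc" -ne 0 ]; then
    echo "job $pid exited with status $rc; last lines of $log_file:" >&2
    tail -n 20 "$log_file" >&2
    return "$rc"
  fi
  # A clean exit is not enough: also require that the output directory is non-empty.
  if [ -d "$out_dir" ] && [ -n "$(ls -A "$out_dir")" ]; then
    echo "done: $out_dir is populated"
    return 0
  fi
  echo "job finished but $out_dir is empty" >&2
  return 1
}
```

For example, immediately after launching the binarize command, run `pid=$!` and then `wait_and_check "$pid" data/binary data_processing.log`. The same helper can be reused for the training and inference jobs with their own log files and output directories.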

Run the following command to train the model:

```bash
CUDA_VISIBLE_DEVICES=0 nohup python tasks/run.py \
    --config usr/configs/gezixi.yaml --exp_name your_experiment_name --reset \
    > training.log 2>&1 &
```

When training is done, run the following command to generate audio:

```bash
CUDA_VISIBLE_DEVICES=0 nohup python tasks/run.py \
    --config usr/configs/gezixi.yaml --exp_name your_experiment_name --reset --infer \
    > inference.log 2>&1 &
```

Inference results will be saved in `checkpoints/your_experiment_name/generated_` by default.
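To locate the generated audio once inference finishes, a small helper like the one below can list it. This is a sketch under assumptions: the function name `list_generated` is hypothetical, it assumes the outputs are `.wav` files, and it matches any folder whose name starts with `generated_` because the rest of the folder name is not fixed here.

```bash
# Hypothetical helper: print every .wav file under
# checkpoints/<exp_name>/generated_*/ (assumption: outputs are .wav files).
# Returns non-zero if no generated_* directory exists yet.
list_generated() {
  local exp_name="$1"
  local found=0
  local dir
  for dir in "checkpoints/$exp_name"/generated_*; do
    # If the glob matches nothing, bash leaves it unexpanded; skip that case.
    [ -d "$dir" ] || continue
    find "$dir" -type f -name '*.wav' | sort
    found=1
  done
  [ "$found" -eq 1 ]
}
```

For example, `list_generated your_experiment_name | wc -l` counts the generated files; a non-zero exit status from `list_generated` means no `generated_*` folder exists yet.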