We recommend using Anaconda to install the following packages: Python 2.7, PyTorch (>0.1.12), NumPy (>1.12.1), and TensorBoard.

- Punkt Sentence Tokenizer:

import nltk

nltk.download()

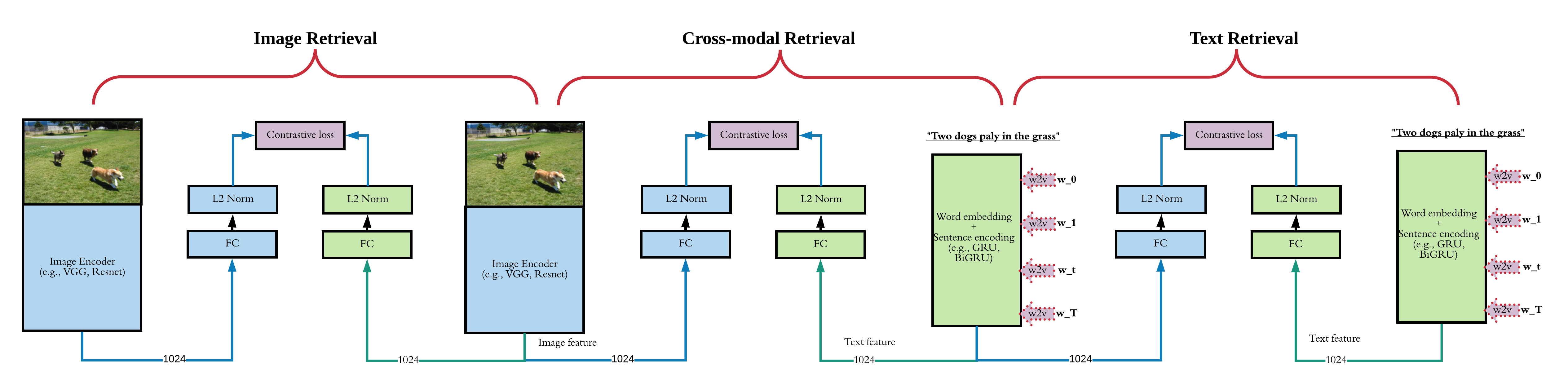

At the interactive downloader prompt, enter d punkt to download the Punkt models:

> d punkt

In these experiments, we used the MSCOCO image-caption dataset. For single-modal retrieval (image-only or text-only), you can train on just the images or just the captions; cross-modal retrieval shares the same framework as single-modal retrieval. You can download the data from the MSCOCO image captioning website, or download the precomputed image features from here and here. To use full image encoders, download the images from their original sources here, here and here.
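The shared framework for single-modal and cross-modal retrieval can be pictured as ranking in one joint embedding space: once images and captions are mapped to vectors of the same size, the same ranking code serves image-to-image, text-to-text, image-to-text and text-to-image queries. The sketch below assumes the embeddings are already computed (it does not include the repository's image or text encoders), and the helper names l2_normalize and rank_gallery are hypothetical.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    """Scale each row to unit length so dot products equal cosine similarity."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def rank_gallery(query_emb, gallery_emb):
    """Return, for each query row, gallery indices sorted from most to least similar.

    Works for any modality pair as long as queries and gallery items
    live in the same embedding space (hypothetical helper, not the repo's API).
    """
    sims = np.dot(l2_normalize(query_emb), l2_normalize(gallery_emb).T)
    # Negate so argsort (ascending) yields the highest similarity first.
    return np.argsort(-sims, axis=1)
```

For example, passing image embeddings as queries and caption embeddings as the gallery gives text retrieval for each image; swapping the two arguments gives image retrieval for each caption.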

wget http://www.cs.toronto.edu/~faghri/vsepp/vocab.tar

wget http://www.cs.toronto.edu/~faghri/vsepp/data.tar

wget http://www.cs.toronto.edu/~faghri/vsepp/runs.tar

We refer to the path of the files extracted from data.tar as $DATA_PATH and to the files extracted from runs.tar as $RUN_PATH. Extract vocab.tar to the ./vocab directory.
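The evaluation command in this README runs from vocab import Vocabulary before loading a model. The vocabulary files are most likely pickled Vocabulary objects (as in VSE++, whose download URLs are used above), so the class must be importable when they are loaded. The sketch below shows what such a word-to-index mapping might look like. The class body, build_vocab, encode, the special tokens and the regex tokenizer (a stand-in for NLTK's word_tokenize, which relies on the Punkt models) are all assumptions for illustration, not the repository's vocab.py.

```python
import re
from collections import Counter


class Vocabulary(object):
    """Minimal word <-> index mapping (hypothetical sketch, not the repo's class)."""

    def __init__(self):
        self.word2idx = {}
        self.idx2word = {}

    def add_word(self, word):
        # Assign the next free index to words not seen before.
        if word not in self.word2idx:
            idx = len(self.word2idx)
            self.word2idx[word] = idx
            self.idx2word[idx] = word

    def __call__(self, word):
        # Out-of-vocabulary words fall back to the <unk> index.
        return self.word2idx.get(word, self.word2idx["<unk>"])

    def __len__(self):
        return len(self.word2idx)


def tokenize(caption):
    # Assumed stand-in for nltk.tokenize.word_tokenize: lowercase, keep alphanumeric runs.
    return re.findall(r"[a-z0-9]+", caption.lower())


def build_vocab(captions, threshold=1):
    """Hypothetical builder: keep words that occur at least `threshold` times."""
    counts = Counter(tok for cap in captions for tok in tokenize(cap))
    vocab = Vocabulary()
    for special in ("<pad>", "<start>", "<end>", "<unk>"):
        vocab.add_word(special)
    for word, count in sorted(counts.items()):
        if count >= threshold:
            vocab.add_word(word)
    return vocab


def encode(vocab, caption):
    """Map a caption to indices, wrapped in <start> and <end> markers."""
    return [vocab("<start>")] + [vocab(t) for t in tokenize(caption)] + [vocab("<end>")]
```

A note on the import: if a vocabulary was pickled by running vocab.py as a script, pickle records the class as __main__.Vocabulary, so loading it requires Vocabulary to be defined in the running script's namespace. An explicit from vocab import Vocabulary provides exactly that.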

Run train.sh:

python train.sh 0from vocab import Vocabulary

import evaluation

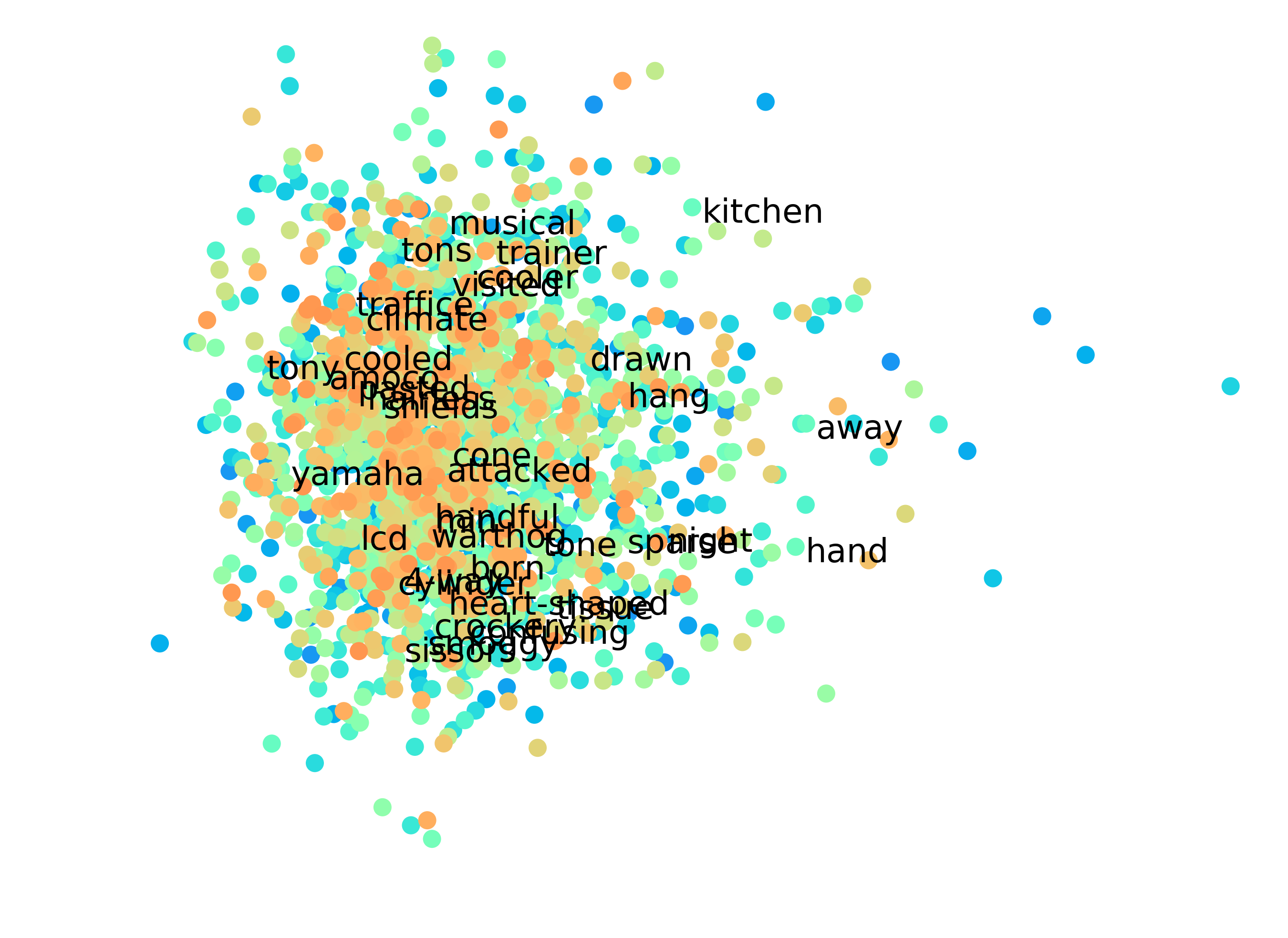

evaluation.evalrank("run/gru_cross_nlp/model_best.pth.tar", data_path="$DATA_PATH", split="test")'python visualize_w2v.py
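Cross-modal retrieval on MSCOCO is commonly reported as Recall@K: the percentage of queries for which a correct match appears among the top K results. As a hedged illustration of the kind of score an evaluation call like evaluation.evalrank presumably reports (this is not its code), the sketch below computes image-to-text Recall@K from precomputed, unit-normalized embeddings. The function name i2t_recall_at_k and the layout where image i owns captions i*captions_per_image through (i+1)*captions_per_image - 1 are assumptions; MSCOCO usually provides five captions per image.

```python
import numpy as np


def i2t_recall_at_k(img_emb, cap_emb, captions_per_image=1, ks=(1, 5, 10)):
    """Image-to-text Recall@K in percent (hypothetical helper).

    Assumes rows are L2-normalized and caption j describes
    image j // captions_per_image.
    """
    sims = np.dot(img_emb, cap_emb.T)  # cosine similarity for unit-norm rows
    n_images = img_emb.shape[0]
    best_ranks = np.empty(n_images, dtype=int)
    for i in range(n_images):
        order = np.argsort(-sims[i])  # caption indices, most similar first
        gt = np.arange(i * captions_per_image, (i + 1) * captions_per_image)
        # Rank (0-based) of the best-placed ground-truth caption for image i.
        best_ranks[i] = np.where(np.isin(order, gt))[0].min()
    # A query counts as a hit at K if a correct caption ranks within the top K.
    return {k: 100.0 * np.mean(best_ranks < k) for k in ks}
```

Taking the best rank over an image's captions means an image query is counted as correct as soon as any one of its captions is retrieved within the top K.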

@article{gu2017look,
  title={Look, Imagine and Match: Improving Textual-Visual Cross-Modal Retrieval with Generative Models},
  author={Gu, Jiuxiang and Cai, Jianfei and Joty, Shafiq and Niu, Li and Wang, Gang},
  journal={CVPR},
  year={2018}
}