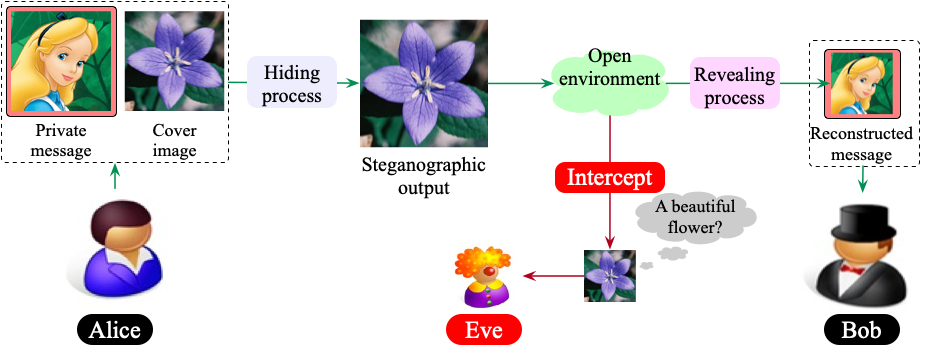

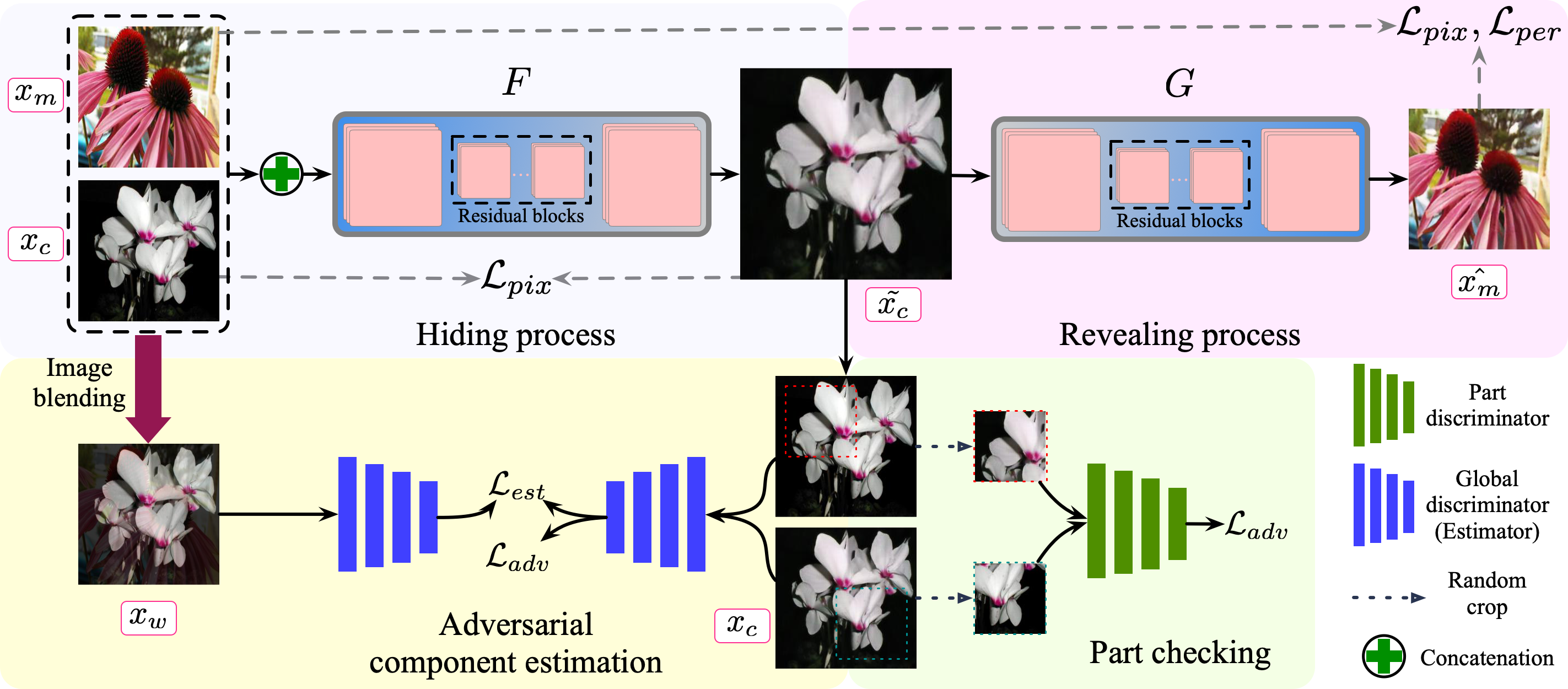

CAIS is a TensorFlow-based framework for training and testing the method from our paper: Component Aware Image Steganography via Adversarial Global-and-Part Checking.

2022.06.09 - Our paper has been accepted by IEEE Transactions on Neural Networks and Learning Systems (TNNLS).

- We use Anaconda3 as the base environment. If Anaconda3 is installed at `Conda_Path`, create a new virtual environment with `conda create -n tf114`, then activate it with `source activate tf114`. Install `tensorflow-gpu` with `conda install tensorflow-gpu==1.14.0`.
- Install the dependencies with `pip install -r requirements.txt` (if necessary). The `requirements.txt` file is provided in this package.
- Download the pre-trained VGG19 model `imagenet-vgg-verydeep-19.mat` (password: a8yv) and place it in the current directory.

Please download the original image files from this link, decompress the archive, and prepare the training and testing image files as follows:

Create the dataset directories with `mkdir datasets`, `cd datasets`, and `mkdir flower`. The directory structure of `flower` should be:

- `flower/`
  - `train_cover/`: `cover1.jpg`, ...
  - `train_message/`: `message1.jpg`, ...
  - `test_cover/`: `test_a.jpg`, ... (the test cover images you want)
  - `test_message/`: `test_b.jpg`, ... (the test message images you want)

We also provide a simple `prepare.py` script that randomly splits the images. Edit `img_path` to point to your image directory before running it.
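The logic of `prepare.py` is not reproduced here, so the following is only a hypothetical sketch of one way to randomly split a folder of images into the four subfolders above. The helper names `split_images` and `copy_split`, the `train_ratio` parameter, and the choice to halve each part into cover and message images are all assumptions, not the repository's actual scheme.

```python
import os
import random
import shutil


def split_images(filenames, train_ratio=0.8, seed=0):
    """Hypothetical split: shuffle, cut into train/test, halve each part.

    The first half of each part becomes cover images and the second half
    becomes message images. This is an assumed scheme, not prepare.py's.
    """
    names = sorted(filenames)          # sort first so the seed is reproducible
    random.Random(seed).shuffle(names)
    n_train = int(len(names) * train_ratio)
    train, test = names[:n_train], names[n_train:]
    half_train, half_test = len(train) // 2, len(test) // 2
    return {
        "train_cover": train[:half_train],
        "train_message": train[half_train:],
        "test_cover": test[:half_test],
        "test_message": test[half_test:],
    }


def copy_split(img_path, out_dir, train_ratio=0.8, seed=0):
    """Copy the images in img_path into the four subfolders of out_dir."""
    exts = (".jpg", ".jpeg", ".png")
    files = [f for f in os.listdir(img_path) if f.lower().endswith(exts)]
    for sub, names in split_images(files, train_ratio, seed).items():
        dst = os.path.join(out_dir, sub)
        os.makedirs(dst, exist_ok=True)
        for name in names:
            shutil.copy(os.path.join(img_path, name), os.path.join(dst, name))
```

For example, `copy_split("/path/to/flower_images", "datasets/flower")` would create the layout above; the source path is a placeholder. Sorting before shuffling keeps the split reproducible for a fixed seed, independent of the order `os.listdir` returns.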

Please download the caricature image dataset. We follow the dataset's own training/testing split. Prepare the training/testing images as follows:

Inside `datasets`, create the folder with `mkdir flowercari`. The directory structure of `flowercari` should be:

- `flowercari/`
  - `train_cover/`: `cover1.jpg`, ... (the same 7000 flower images)
  - `train_message/`: `message1.jpg`, ... (the training caricature images)
  - `test_cover/`: `test_a.jpg`, ... (the remaining flower images)
  - `test_message/`: `test_b.jpg`, ... (the test caricature images)

To train the model, run `sh scripts/train_flower.sh`.

You can also edit the default parameters; see `main.py` for the available options.

To test, run `sh scripts/test_flower.sh`. This generates steganographic images from two randomly selected images: one cover image and one message image.
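To illustrate the random cover/message pairing described above, here is a hypothetical Python sketch that draws (cover, message) pairs from the two test folders. It is not taken from `scripts/test_flower.sh` or `main.py`; the function name `random_pairs` and its parameters are assumptions for illustration only.

```python
import os
import random


def random_pairs(cover_dir, message_dir, n_pairs, seed=0):
    """Return n_pairs (cover_path, message_path) tuples chosen at random.

    Each pair combines one randomly chosen cover image with one randomly
    chosen message image (sampling with replacement).
    """
    covers = sorted(os.listdir(cover_dir))
    messages = sorted(os.listdir(message_dir))
    if not covers or not messages:
        raise ValueError("both directories must contain at least one image")
    rng = random.Random(seed)  # fixed seed -> reproducible pairs
    return [
        (os.path.join(cover_dir, rng.choice(covers)),
         os.path.join(message_dir, rng.choice(messages)))
        for _ in range(n_pairs)
    ]
```

For example, `random_pairs("datasets/flower/test_cover", "datasets/flower/test_message", n_pairs=4)` returns four pairs of file paths.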

To reconstruct the message images from the steganographic images, run `sh scripts/recon_flower.sh`. Please specify `stegano_dir` and `recon_dir` when running this step.

- Perceptual loss.
- LSGAN: Least Squares GAN.
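For reference, a minimal NumPy sketch of the two losses listed above. It uses one common least-squares formulation (target 1 for real, 0 for fake, with a 0.5 factor); this scaling is an assumption, and the repository's TensorFlow code may weight or scale the terms differently. The perceptual loss is shown as the mean squared distance between feature maps, such as those from the pre-trained VGG19 model; the feature-extraction step is omitted, and all function names are hypothetical.

```python
import numpy as np


def lsgan_d_loss(d_real, d_fake):
    """LSGAN discriminator loss: push real scores to 1, fake scores to 0."""
    return 0.5 * (np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2))


def lsgan_g_loss(d_fake):
    """LSGAN generator loss: push the discriminator's fake scores to 1."""
    return 0.5 * np.mean((d_fake - 1.0) ** 2)


def perceptual_loss(feats_a, feats_b):
    """Mean squared distance between paired feature maps, averaged over layers.

    feats_a and feats_b are lists of arrays, one per network layer, assumed
    to come from the same feature extractor (e.g. VGG19) applied to two images.
    """
    per_layer = [np.mean((fa - fb) ** 2) for fa, fb in zip(feats_a, feats_b)]
    return float(np.mean(per_layer))
```

Compared with the original log-loss GAN objective, the squared error keeps penalizing fake samples that are classified correctly but lie far from the decision boundary, which is the usual motivation given for LSGAN.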

- Flower: Google Drive; BaiduYun (1u8c)

- Flowercari: Google Drive; BaiduYun (ykc3)

- ImageNet: Google Drive; BaiduYun (9fto)

All models are trained at a resolution of 256×256. Place them in `./check` and unzip them.
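A small Python sketch of the unzip step, assuming the downloaded checkpoints are `.zip` archives already placed in `./check`. The helper name `unzip_checkpoints` is hypothetical; running `unzip` from the shell works just as well.

```python
import glob
import os
import zipfile


def unzip_checkpoints(check_dir="./check"):
    """Extract every .zip archive in check_dir into check_dir itself.

    Returns the base names of the archives that were extracted.
    """
    extracted = []
    for archive in sorted(glob.glob(os.path.join(check_dir, "*.zip"))):
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(check_dir)
        extracted.append(os.path.basename(archive))
    return extracted
```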

- TODO: Add the analysis tools.

If you find our work useful in your research, please consider citing:

@article{zheng2022composition,
title={Composition-Aware Image Steganography Through Adversarial Self-Generated Supervision},
author={Zheng, Ziqiang and Hu, Yuanmeng and Bin, Yi and Xu, Xing and Yang, Yang and Shen, Heng Tao},
journal={IEEE Transactions on Neural Networks and Learning Systems},
year={2022},
publisher={IEEE}
}

Our code borrows from CycleGAN and DCGAN. The network architecture is modified from DCGAN, and the generative network is adapted from neural-style with Instance Normalization.