This repository includes code for:

- our NeurIPS 2023 paper Uni3DETR: Unified 3D Detection Transformer
- our ECCV 2024 paper OV-Uni3DETR: Towards Unified Open-Vocabulary 3D Object Detection via Cycle-Modality Propagation

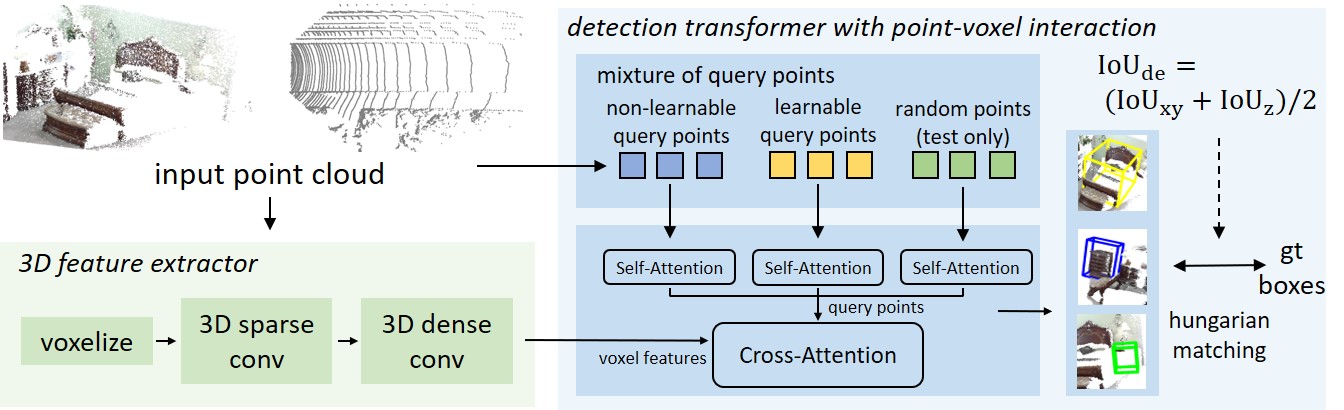

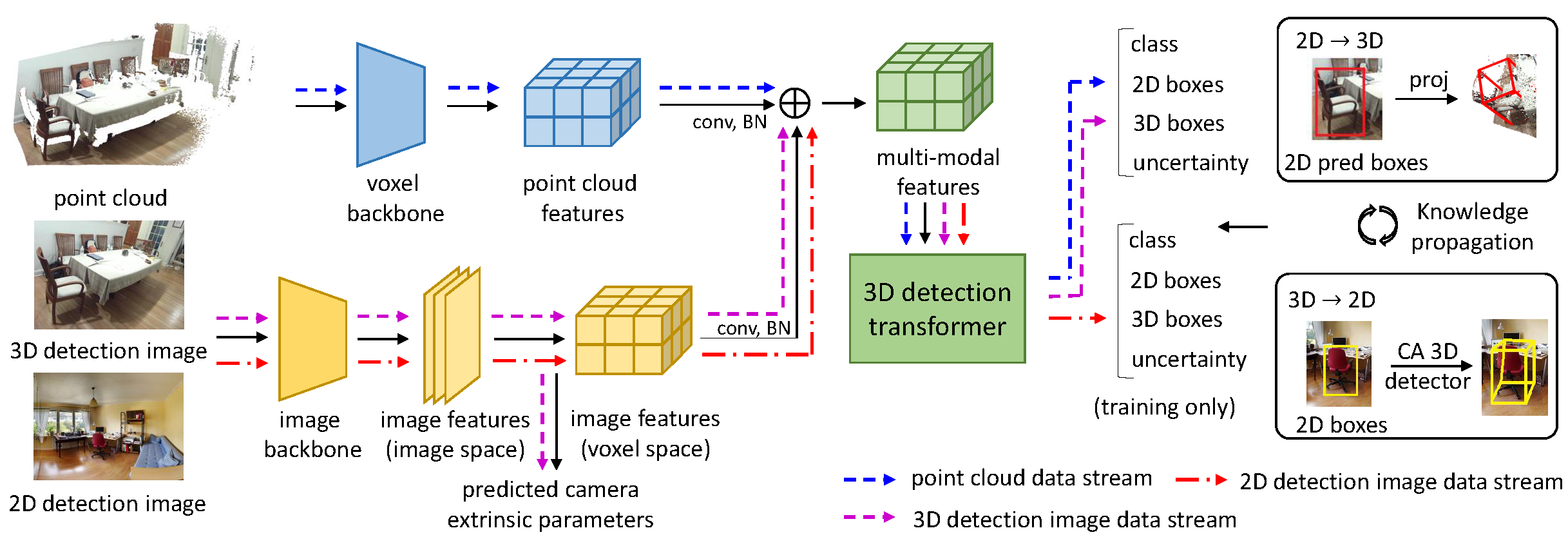

Uni3DETR provides a unified structure for both indoor and outdoor 3D object detection. Building on this architecture, OV-Uni3DETR further introduces multi-modal learning and open-vocabulary learning, unifying both modalities and categories within a single structure.

This project is built on mmDetection3D. Set up the environment as follows.

- Install mmDetection3D v1.0.0rc5 following the instructions.

- Copy our project and related files into the installed mmDetection3D directory:

cp -r projects mmdetection3d/

cp -r extra_tools mmdetection3d/

- Prepare the dataset following mmDetection3D dataset instructions.

- Uni3DETR dataset preparation:

SUN RGB-D dataset: The directory structure after processing should be as follows:

sunrgbd

├── README.md

├── matlab

│ ├── ...

├── OFFICIAL_SUNRGBD

│ ├── ...

├── sunrgbd_trainval

│ ├── ...

├── points

├── sunrgbd_infos_train.pkl

├── sunrgbd_infos_val.pkl
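A quick way to confirm the layout above before training is to check that each listed entry exists. The following is a minimal sketch, not part of the repository: the function name `missing_entries` and the `data/sunrgbd` location are assumptions made for illustration, and `README.md` is left out of the check because it is not needed for training.

```python
from pathlib import Path

# Entries taken from the SUN RGB-D tree above (README.md omitted on purpose).
SUNRGBD_EXPECTED = [
    "matlab",
    "OFFICIAL_SUNRGBD",
    "sunrgbd_trainval",
    "points",
    "sunrgbd_infos_train.pkl",
    "sunrgbd_infos_val.pkl",
]


def missing_entries(root, expected=SUNRGBD_EXPECTED):
    """Return the expected names that do not exist directly under root."""
    root = Path(root)
    return [name for name in expected if not (root / name).exists()]


if __name__ == "__main__":
    # "data/sunrgbd" is an assumed location; adjust it to your setup.
    missing = missing_entries("data/sunrgbd")
    print("missing:", missing if missing else "none")
```

An empty result only means the top-level entries are present; it does not validate their contents.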

ScanNet dataset:

After downloading the dataset following the mmDetection3D instructions, run python scripts/scannet_globalallign.py to perform global alignment in advance. Note that this operation modifies the data file, so back it up first if you want to keep the original.

The directory structure should be as follows:
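Since the alignment script changes data on disk, one option is to copy the dataset directory before running it. The sketch below is an illustration only: `backup_dir` is a hypothetical helper, and it copies the whole directory because the text does not say which files the script touches.

```python
import shutil
import time
from pathlib import Path


def backup_dir(src, backup_root=None):
    """Copy src to a timestamped directory and return the new path."""
    src = Path(src)
    if not src.is_dir():
        raise FileNotFoundError(f"{src} is not a directory")
    # Default to placing the backup next to the source directory.
    backup_root = Path(backup_root) if backup_root else src.parent
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dst = backup_root / f"{src.name}_backup_{stamp}"
    shutil.copytree(src, dst)  # raises if dst already exists
    return dst


# Example (assumed path, adjust to your setup):
# backup_dir("data/scannet")
```

A full copy can be large; if you know which files the script rewrites, copying only those is cheaper.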

scannet

├── meta_data

├── batch_load_scannet_data.py

├── load_scannet_data.py

├── scannet_utils.py

├── README.md

├── scans

├── scans_test

├── scannet_instance_data

├── points

│ ├── xxxxx.bin

├── instance_mask

│ ├── xxxxx.bin

├── semantic_mask

│ ├── xxxxx.bin

├── seg_info

│ ├── train_label_weight.npy

│ ├── train_resampled_scene_idxs.npy

│ ├── val_label_weight.npy

│ ├── val_resampled_scene_idxs.npy

├── posed_images

│ ├── scenexxxx_xx

│ │ ├── xxxxxx.txt

│ │ ├── xxxxxx.jpg

│ │ ├── intrinsic.txt

├── scannet_infos_train.pkl

├── scannet_infos_val.pkl

├── scannet_infos_test.pkl
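The tree above shows `points`, `instance_mask`, and `semantic_mask` each holding one `.bin` file per scene. The following sketch lists scenes that have a point file but no matching mask file. It assumes the three folders share file stems, which is an inference from the listing rather than something the text states, and `scenes_missing_masks` is a hypothetical helper name. Scenes from the test split may legitimately have no masks, so treat the output as a hint, not an error.

```python
from pathlib import Path


def scenes_missing_masks(root):
    """Map each mask folder to the scene names present in points/ but absent there."""
    root = Path(root)

    def stems(sub):
        # Collect file stems of all .bin files in a subfolder (empty set if absent).
        folder = root / sub
        return {p.stem for p in folder.glob("*.bin")} if folder.is_dir() else set()

    points = stems("points")
    return {
        "instance_mask": sorted(points - stems("instance_mask")),
        "semantic_mask": sorted(points - stems("semantic_mask")),
    }


# Example (assumed path, adjust to your setup):
# print(scenes_missing_masks("data/scannet"))
```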

The preparation steps for the outdoor KITTI and nuScenes datasets are identical to those in mmDetection3D.

- OV-Uni3DETR dataset preparation:

SUN RGB-D dataset:

The SUN RGB-D dataset preparation steps are the same as the Uni3DETR steps above; the only difference is the annotation file. The annotation file can be downloaded directly from GoogleDrive. We will soon upload the code for generating the training annotation files.

Training:

bash extra_tools/dist_train.sh ${CFG_FILE} ${NUM_GPUS}

Evaluation:

bash extra_tools/dist_test.sh ${CFG_FILE} ${CKPT} ${NUM_GPUS} --eval=bbox

We provide results on SUN RGB-D, ScanNet, KITTI, and nuScenes with pretrained models (for Tab. 1, Tab. 2, Tab. 3 of our paper).

| Dataset | mAP (%) | download |
|---|---|---|
| indoor | | |
| SUN RGB-D | 67.0 | GoogleDrive |
| ScanNet | 71.7 | GoogleDrive |
| outdoor | | |
| KITTI (3 classes) | 86.57 (moderate car) | GoogleDrive |
| KITTI (car) | 86.74 (moderate car) | GoogleDrive |
| nuScenes | 61.7 | GoogleDrive |
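To reproduce one of these results or train a model from a script, the `dist_train.sh` and `dist_test.sh` invocations given earlier can be assembled in Python. This is a minimal sketch: `train_cmd` and `eval_cmd` are hypothetical helper names, and the config and checkpoint paths in the example are placeholders, not files confirmed to exist in the repository.

```python
import shlex


def train_cmd(cfg_file, num_gpus):
    """Build the argument list for extra_tools/dist_train.sh."""
    return ["bash", "extra_tools/dist_train.sh", str(cfg_file), str(num_gpus)]


def eval_cmd(cfg_file, ckpt, num_gpus):
    """Build the argument list for extra_tools/dist_test.sh with bbox evaluation."""
    return [
        "bash",
        "extra_tools/dist_test.sh",
        str(cfg_file),
        str(ckpt),
        str(num_gpus),
        "--eval=bbox",
    ]


if __name__ == "__main__":
    # Placeholder paths for illustration; substitute your own config and checkpoint.
    cfg = "path/to/config.py"
    ckpt = "path/to/checkpoint.pth"
    print(shlex.join(train_cmd(cfg, 8)))
    print(shlex.join(eval_cmd(cfg, ckpt, 8)))
```

The lists can be passed to `subprocess.run(cmd, check=True)` from inside the mmdetection3d directory to launch the job.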