This repository is the official implementation of the paper:

HumanVid: Demystifying Training Data for Camera-controllable Human Image Animation

Zhenzhi Wang, Yixuan Li, Yanhong Zeng, Youqing Fang, Yuwei Guo, Wenran Liu, Jing Tan, Kai Chen, Tianfan Xue, Bo Dai, Dahua Lin

CUHK, Shanghai AI Lab

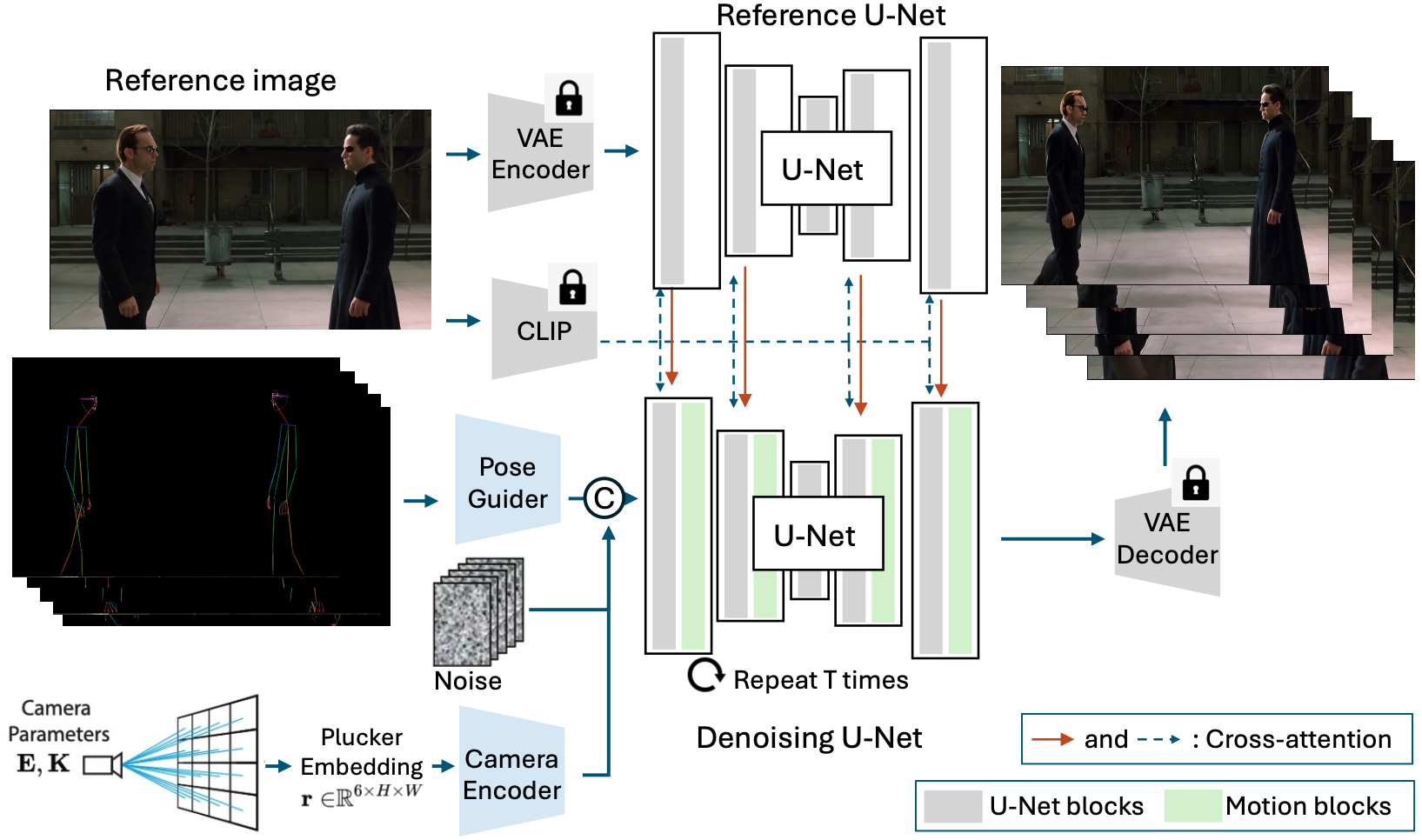

HumanVid is a new dataset for camera-controllable human image animation. It enables training video diffusion models to generate videos with both camera and subject movements, like real movie clips. As a by-product, it also makes it possible to reproduce methods like Animate-Anyone by simply setting the camera to be static at inference time. We show that models trained only on videos with camera movement can still achieve very good static-background appearance, as long as the camera annotations in the training set are accurate; this reduces the difficulty of collecting static-camera videos. To verify these claims, our proposed baseline model, CamAnimate, shows impressive results, which can be found on the project website. This repo will provide all the data and code needed to achieve the performance shown in our demo.

- 2024/10/20: The UE synthetic video part of HumanVid is released. Please download the videos, human poses and camera parameters from here (password: humanvid_ue).
- 2024/09/27: Our paper is accepted by the NeurIPS 2024 D&B Track.
- 2024/09/02: The Internet video part of HumanVid is released. Please download the video URLs and camera parameters from here.

The Pexels video data is collected from the Internet, and we cannot redistribute it. Instead, we provide the video URL and camera parameters for each video. The camera parameters are stored in camera.zip on Google Drive, and the videos can be downloaded from the URLs with a script.

Update: The Pexels website team has changed the video URLs. We have updated them in the txt files whose names end with new; please use these new URLs to download the videos.
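A download script is not included here, so the following is only a minimal sketch of one. It assumes each txt file lists one URL per line; the User-Agent header, the `.part` temporary files, and the fallback file names are our own choices, not part of the release.

```python
import os
import shutil
import urllib.request
from urllib.parse import urlparse


def parse_url_list(text):
    """Return the URLs in `text`, assuming one URL per line; skips blanks and # comments."""
    urls = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            urls.append(line)
    return urls


def local_name(url, index):
    """Use the .mp4 file name from the URL path, else fall back to a numbered name."""
    base = os.path.basename(urlparse(url).path)
    return base if base.endswith(".mp4") else f"video_{index:06d}.mp4"


def download_all(url_file, out_dir, timeout=60):
    """Download every URL in `url_file` into `out_dir`, skipping files that already exist."""
    os.makedirs(out_dir, exist_ok=True)
    with open(url_file, encoding="utf-8") as f:
        urls = parse_url_list(f.read())
    for i, url in enumerate(urls):
        dst = os.path.join(out_dir, local_name(url, i))
        if os.path.exists(dst):
            continue  # already downloaded; makes the script resumable
        tmp = dst + ".part"
        # A browser-like User-Agent is an assumption; some hosts reject the default one.
        req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
        try:
            with urllib.request.urlopen(req, timeout=timeout) as resp, open(tmp, "wb") as out:
                shutil.copyfileobj(resp, out)
            os.replace(tmp, dst)  # only publish complete files
        except Exception as err:  # keep going if a single link is dead
            print(f"failed: {url} ({err})")
            if os.path.exists(tmp):
                os.remove(tmp)
```

For example, `download_all("urls_new.txt", "videos")`, where both names are placeholders for the actual txt file and output folder.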

The UE videos are in the OneDrive link (the training_video folder). Folders named 3d_video_* contain videos rendered with 3D scene backgrounds, while generated_video_* folders contain videos rendered with HDRI images as backgrounds. The final file structure should look like this:

2d_keypoints/
training_video/
├── 3d_video_1/
│ ├── camera/
│ ├── dwpose/
│ └── mp4/
├── 3d_video_2/
│ ├── camera/
│ ├── dwpose/
│ └── mp4/
├── 3d_video_3/
├── 3d_video_4/
├── 3d_video_5/
├── 3d_video_6/
├── 3d_video_7/
├── 3d_video_8/
├── 3d_video_9/
├── 3d_video_10/
├── generated_video_1/
├── generated_video_2/
├── generated_video_3/
├── generated_video_4/
├── generated_video_5/
├── generated_video_6/
├── generated_video_7/
├── generated_video_8/
├── generated_video_9/
└── generated_video_10/

The training_video folder in the OneDrive link provides the contents of the mp4 folders. First, unzip ue_camera.zip and put each sub-folder in the corresponding location in training_video. Then unzip 2d_keypoints.zip and run python extract_pose_from_smplx_ue.py to produce the dwpose mp4 files from the 2D keypoint information saved in the 2d_keypoints folder.
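After these steps, a quick sanity check can confirm that every split has its camera, dwpose and mp4 sub-folders. This is a minimal sketch, assuming 2d_keypoints/ and training_video/ sit under one common root as in the tree; `check_layout` is a hypothetical helper, not part of the repo.

```python
from pathlib import Path

# Split names and sub-folders taken from the file structure shown above.
EXPECTED_SPLITS = [f"3d_video_{i}" for i in range(1, 11)] + [
    f"generated_video_{i}" for i in range(1, 11)
]
REQUIRED_SUBDIRS = ("camera", "dwpose", "mp4")


def check_layout(root):
    """Return {name: [missing items]}; an empty dict means the layout looks complete."""
    root = Path(root)
    problems = {}
    if not (root / "2d_keypoints").is_dir():
        problems["2d_keypoints"] = ["<missing folder>"]
    for split in EXPECTED_SPLITS:
        split_dir = root / "training_video" / split
        missing = [d for d in REQUIRED_SUBDIRS if not (split_dir / d).is_dir()]
        if missing:
            problems[split] = missing
    return problems
```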

Following Droid-SLAM and DPVO, we use the TUM camera format, timestamp tx ty tz qx qy qz qw, for camera trajectories. The timestamp is the frame index; tx, ty, tz are the camera translation in meters; and qx, qy, qz, qw are the camera rotation as a quaternion. For camera intrinsics, assuming the camera has a standard 36mm CMOS sensor, we heuristically set the focal length to 50mm (horizontal) and 75mm (vertical) and place the principal point at the image center, based on observations of Internet videos. We empirically find that this works well.
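As an illustration of the format, the sketch below turns one trajectory line into a 4x4 pose matrix. It assumes the TUM convention that the pose is camera-to-world and that the quaternion is scalar-last (qw at the end); the helper names are hypothetical. Because it reads only the first 8 values, it would also accept the longer lines described next.

```python
import math


def parse_tum_line(line):
    """Split 'timestamp tx ty tz qx qy qz qw ...' into (frame, (tx, ty, tz), (qx, qy, qz, qw))."""
    vals = [float(v) for v in line.split()]
    if len(vals) < 8:
        raise ValueError(f"expected at least 8 values, got {len(vals)}")
    return int(vals[0]), tuple(vals[1:4]), tuple(vals[4:8])


def quat_to_rotmat(qx, qy, qz, qw):
    """Scalar-last quaternion -> 3x3 rotation matrix (list of rows); normalizes first."""
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    x, y, z, w = qx / n, qy / n, qz / n, qw / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def tum_to_c2w(line):
    """Build (frame, 4x4 camera-to-world matrix) from one trajectory line."""
    frame, t, q = parse_tum_line(line)
    R = quat_to_rotmat(*q)
    c2w = [R[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]
    return frame, c2w
```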

For the Unreal Engine rendered videos, the camera parameters are provided in ue_camera.zip. The format is timestamp tx ty tz qx qy qz qw fx fy, where the first 8 values follow the TUM format and fx, fy are the normalized focal lengths (intrinsics).
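A minimal sketch of reading such a line and building a pixel-space intrinsic matrix. It assumes "normalized" means divided by image width (fx) and height (fy), as in CameraCtrl's RealEstate10K-style intrinsics, with the principal point at the image center; verify this against src/dataset/img_dataset.py before relying on it.

```python
def parse_ue_line(line):
    """Parse 'timestamp tx ty tz qx qy qz qw fx fy' (exactly 10 values)."""
    vals = [float(v) for v in line.split()]
    if len(vals) != 10:
        raise ValueError(f"expected 10 values, got {len(vals)}")
    frame = int(vals[0])
    translation = tuple(vals[1:4])
    quaternion = tuple(vals[4:8])  # qx, qy, qz, qw
    fx_norm, fy_norm = vals[8], vals[9]
    return frame, translation, quaternion, fx_norm, fy_norm


def intrinsics_matrix(fx_norm, fy_norm, width, height):
    """3x3 pixel-space K, assuming fx/fy are normalized by width/height
    and the principal point sits at the image center."""
    return [
        [fx_norm * width, 0.0, width / 2.0],
        [0.0, fy_norm * height, height / 2.0],
        [0.0, 0.0, 1.0],
    ]
```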

To help explain our camera parameter processing (e.g., our modifications over CameraCtrl), the camera processing code is provided in src/dataset/img_dataset.py. The complete code will be released later.
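CameraCtrl-style pipelines commonly re-express every camera-to-world pose relative to the first frame, so that each clip starts from an identity pose. The sketch below shows that single step in plain Python under that assumption. It is not taken from img_dataset.py, and whether HumanVid keeps or changes this step should be checked there.

```python
def rigid_inverse(T):
    """Invert a 4x4 rigid transform [R | t] as [R^T | -R^T t]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    neg = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [neg[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def matmul4(A, B):
    """Multiply two 4x4 matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def relative_to_first(c2w_list):
    """Express every camera-to-world pose in the coordinate frame of the first camera."""
    first_inv = rigid_inverse(c2w_list[0])
    return [matmul4(first_inv, c2w) for c2w in c2w_list]
```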

Please refer to the DWPose folder for scripts that extract and visualize whole-body poses. Note that we made a small modification so that the keypoints on the feet are also visualized. The folder also contains the conversion of keypoints from SMPL-X to the COCO keypoint format. For pretrained checkpoints, please refer to the DWPose repository.
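To illustrate what an SMPL-X to COCO conversion involves, the sketch below reorders joints into the COCO-WholeBody body (17) and foot (6) layout by name. It assumes the released keypoints follow the joint ordering of the `smplx` Python package; that ordering, and the hypothetical `smplx_to_coco_body_foot` helper, are our assumptions rather than the repo's code. Hands and face are omitted, and SMPL-X hip joints are joint centers, so they may not sit exactly where COCO hip keypoints do.

```python
# Subset of joint indices in the `smplx` package's JOINT_NAMES ordering
# (assumption: verify against the actual 2d_keypoints files before use).
SMPLX_INDEX = {
    "left_hip": 1, "right_hip": 2, "left_knee": 4, "right_knee": 5,
    "left_ankle": 7, "right_ankle": 8, "left_shoulder": 16, "right_shoulder": 17,
    "left_elbow": 18, "right_elbow": 19, "left_wrist": 20, "right_wrist": 21,
    "nose": 55, "right_eye": 56, "left_eye": 57, "right_ear": 58, "left_ear": 59,
    "left_big_toe": 60, "left_small_toe": 61, "left_heel": 62,
    "right_big_toe": 63, "right_small_toe": 64, "right_heel": 65,
}

# COCO-WholeBody order: 17 body keypoints followed by 6 foot keypoints.
COCO_WHOLEBODY_BODY_FOOT = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
    "left_big_toe", "left_small_toe", "left_heel",
    "right_big_toe", "right_small_toe", "right_heel",
]


def smplx_to_coco_body_foot(kpts_2d):
    """Reorder one frame of (x, y) SMPL-X joints into COCO-WholeBody body+foot order."""
    return [kpts_2d[SMPLX_INDEX[name]] for name in COCO_WHOLEBODY_BODY_FOOT]
```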

This script extracts the whole-body pose for all videos in a given folder (e.g., videos) and stores the extracted poses in the dwpose folder.

cd DWPose

python prepare_video.py
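For large batches it can help to see which videos still lack pose output, e.g., to resume after an interruption. A minimal sketch, assuming each pose video keeps the same file name as its source video; that naming and the `pending_videos` helper are hypothetical.

```python
from pathlib import Path


def pending_videos(video_dir, pose_dir):
    """List .mp4 files in video_dir that have no same-named file in pose_dir yet."""
    video_dir, pose_dir = Path(video_dir), Path(pose_dir)
    return sorted(
        p.name for p in video_dir.glob("*.mp4")
        if not (pose_dir / p.name).exists()
    )
```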

This script reads existing 2D SMPL-X keypoints (i.e., already projected into the camera's image plane), converts them to the COCO whole-body keypoint format, and visualizes them in the same style as DWPose's output. The script that projects 3D SMPL-X keypoints to 2D can be found here. The SMPL-X keypoints are in 2d_keypoints.zip and the camera parameters are in ue_camera.zip, both in the OneDrive link. Use the following command to extract the whole-body pose videos from the SMPL-X keypoints:

python extract_pose_from_smplx_ue.py
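The 3D-to-2D projection mentioned above is a standard pinhole projection. The sketch below is our own illustration, not the linked script: it assumes an OpenCV-style camera frame (x right, y down, z forward), a camera-to-world pose, focal lengths normalized by image width/height, and the principal point at the center. Unreal Engine's native axes differ (x forward, z up), so an axis conversion may be needed depending on how the released parameters are stored.

```python
def project_points(points_world, R_c2w, t_c2w, fx_norm, fy_norm, width, height):
    """Project 3D world points to pixel (u, v); points with z <= 0 in camera space give None."""
    fx, fy = fx_norm * width, fy_norm * height
    cx, cy = width / 2.0, height / 2.0
    out = []
    for X in points_world:
        d = [X[i] - t_c2w[i] for i in range(3)]
        # world -> camera: x_cam = R^T (X - t)
        xc, yc, zc = (sum(R_c2w[k][i] * d[k] for k in range(3)) for i in range(3))
        if zc <= 0:
            out.append(None)  # behind the camera
            continue
        out.append((fx * xc / zc + cx, fy * yc / zc + cy))
    return out
```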

- Release the synthetic data part.

- Release the inference code.

- Release the training code and checkpoint.

Please give us a star if you are interested in our work. Thanks!

@article{wang2024humanvid,
  title={HumanVid: Demystifying Training Data for Camera-controllable Human Image Animation},
  author={Wang, Zhenzhi and Li, Yixuan and Zeng, Yanhong and Fang, Youqing and Guo, Yuwei and Liu, Wenran and Tan, Jing and Chen, Kai and Xue, Tianfan and Dai, Bo and others},
  journal={arXiv preprint arXiv:2407.17438},
  year={2024}
}