Welcome to wechat-chatgpt 👋

Use ChatGPT on WeChat via wechaty

English | 中文文档

If you don't have a server or want a quick deployment, you can use Railway; see Usage with Railway.

Update December 27, 2022

Deployments using Railway or Docker may currently run into problems that we have not yet solved; we are working on it.

Update December 20, 2022

Thanks to @transitive-bullshit, the ChatGPT API now automates the work.

You should log in with a username and password, and configure CAPTCHA solving.

Update December 13, 2022

Yesterday (2022.12.12), OpenAI upgraded the authentication measures.

This causes the error ⚠️ No chatgpt item in pool when you use this project.

However, please rest assured that we are actively looking for an effective solution.

If you have a good solution, feel free to contribute!

🌟 Features

- Use ChatGPT on WeChat via wechaty

- Support OpenAI Accounts Pool

- Support logging in through a proxy

- Add conversation support

- Add Dockerfile

- Publish to Docker Hub

- Add Railway deploy

- Auto Reload OpenAI Accounts Pool

- Add message-sending retry for 429/503 errors
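
The retry item at the end of the feature list can be illustrated with a small shell sketch. This is not the project's implementation; it only shows the general pattern of retrying a request while the response status is 429 or 503. The function name `retry_on_429_503`, the `RETRY_DELAY_SECONDS` variable, the linear backoff, and the convention that the wrapped command prints an HTTP status code are all assumptions made for illustration.

```shell
# Hypothetical helper (not part of wechat-chatgpt): run a command that
# prints an HTTP status code, and retry while that status is 429 or 503.
# Usage: retry_on_429_503 <max_retries> <command> [args...]
# Prints the final status; returns 0 on any other status, 1 if retries run out.
retry_on_429_503() {
  max_retries=$1
  shift
  attempt=0
  while :; do
    status=$("$@")
    case "$status" in
      429|503)
        if [ "$attempt" -ge "$max_retries" ]; then
          echo "$status"
          return 1
        fi
        attempt=$((attempt + 1))
        # Linear backoff is an assumed policy; RETRY_DELAY_SECONDS is hypothetical.
        sleep $(( ${RETRY_DELAY_SECONDS:-1} * attempt ))
        ;;
      *)
        echo "$status"
        return 0
        ;;
    esac
  done
}
```

A real request command could be wrapped the same way, as long as it prints only the status code on standard output.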

Use with Docker in Linux (recommended)

cp config.yaml.example config.yaml

# edit config.yaml

# run the docker command in Linux or Windows PowerShell

docker run -d --name wechat-chatgpt -v $(pwd)/config.yaml:/app/config.yaml holegots/wechat-chatgpt:latest

# log in with the QR code

docker logs -f wechat-chatgpt

Use with Docker in Windows

# Create and modify config.yaml in the current directory

# run the docker command in Windows PowerShell

docker run -d --name wechat-chatgpt -v $(pwd)/config.yaml:/app/config.yaml holegots/wechat-chatgpt:latest

# In the Windows command line (cmd) environment, you may mount the current directory like this:

docker run -d --name wechat-chatgpt -v %cd%/config.yaml:/app/config.yaml holegots/wechat-chatgpt:latest

# log in with the QR code

docker logs -f wechat-chatgpt

Upgrade Docker image version

docker pull holegots/wechat-chatgpt:latest

docker stop wechat-chatgpt

docker rm wechat-chatgpt

# run the docker command in Linux or Windows PowerShell

docker run -d --name wechat-chatgpt -v $(pwd)/config.yaml:/app/config.yaml holegots/wechat-chatgpt:latest

# In the Windows command line (cmd) environment, you may mount the current directory like this:

docker run -d --name wechat-chatgpt -v %cd%/config.yaml:/app/config.yaml holegots/wechat-chatgpt:latest

# log in with the QR code

docker logs -f wechat-chatgpt

Install

npm install && poetry install

Config

Copy config

You need to copy the config file to set up your project.

cp config.yaml.example config.yaml

Get and configure an OpenAI account

If you don't have an OpenAI account and you live in China, you can get one here.

Use account and password

You need an OpenAI account email and password. Your config.yaml should look like this:

chatGPTAccountPool:
  - email: <your email>
    password: <your password>
# if you want only certain keywords to trigger ChatGPT in private chats, set it like this:
chatPrivateTiggerKeyword: ""

Set up a proxy:

You can configure it in config.yaml:

openAIProxy: <Your Proxy>

CAPTCHAs

The browser portions of this package use Puppeteer to automate as much as possible, including solving all CAPTCHAs. 🔥

Basic Cloudflare CAPTCHAs are handled by default, but if you want to automate the email + password Recaptchas, you'll need to sign up for one of these paid providers:

- nopecha - Uses AI to solve CAPTCHAs

  - Faster and cheaper

  - Set the NOPECHA_KEY env var to your nopecha API key

  - Demo video of nopecha solving the login Recaptcha (41 seconds)

- 2captcha - Uses real people to solve CAPTCHAs

  - More well-known solution that's been around longer

  - Set the CAPTCHA_TOKEN env var to your 2captcha API token

So you should set either NOPECHA_KEY or CAPTCHA_TOKEN in your environment variables.
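
Before starting the project, it can help to confirm that one of the two variables is actually set. The following is a minimal sketch; the function name `check_captcha_env` is hypothetical, and it only checks that a variable is non-empty, not that the key is valid.

```shell
# Hypothetical pre-flight check (not part of wechat-chatgpt).
# Prints which CAPTCHA solver key is configured.
# Returns 0 if NOPECHA_KEY or CAPTCHA_TOKEN is non-empty, 1 otherwise.
check_captcha_env() {
  if [ -n "${NOPECHA_KEY:-}" ]; then
    echo "nopecha"
  elif [ -n "${CAPTCHA_TOKEN:-}" ]; then
    echo "2captcha"
  else
    echo "none"
    return 1
  fi
}
```

For example, after `export NOPECHA_KEY=<your key>`, running `check_captcha_env && npm run dev` would start the project only when a solver key is present.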

Start Project

npm run dev

If you are logging in for the first time, you need to scan the QR code.

Usage with Railway

Railway is a deployment platform where you can provision infrastructure, develop with that infrastructure locally, and then deploy to the cloud. This section describes how to quickly deploy a wechat-chatgpt project using Railway.

First, you'll need to sign up for a Railway account and sign in using GitHub verification.

Then click the one-click deployment button below to deploy.

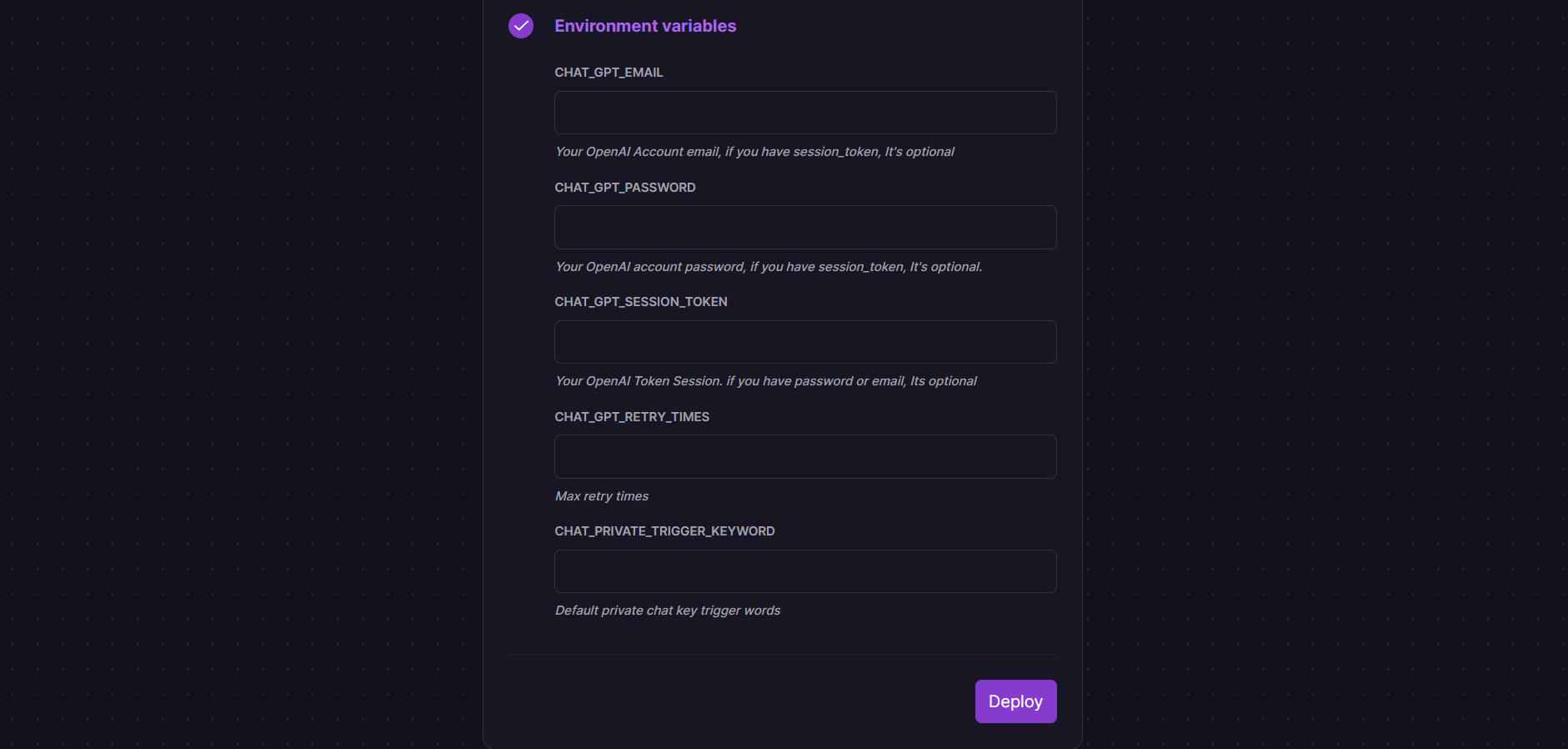

After validation completes, you can begin the deployment. You will see the following interface:

Some environment variables need to be configured:

- CHAT_GPT_EMAIL: Your OpenAI account email. Optional if you have a session_token.

- CHAT_GPT_PASSWORD: Your OpenAI account password. Optional if you have a session_token.

- CHAT_GPT_RETRY_TIMES: The number of times to retry when the OpenAI API returns 429 or 503.

- CHAT_PRIVATE_TRIGGER_KEYWORD: Set this if you want only certain keywords to trigger ChatGPT in private chats.

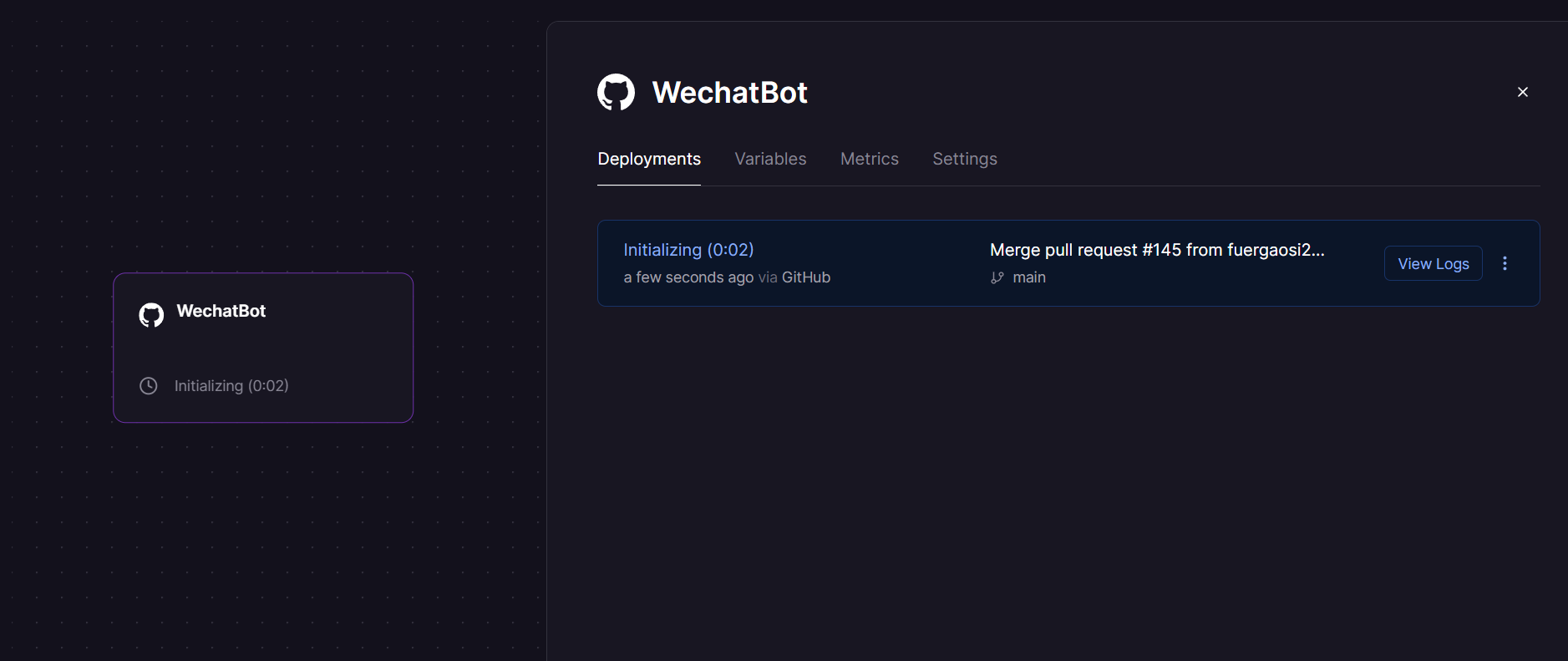
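
To illustrate what a private-chat trigger keyword means, here is a rough shell sketch. The project's actual matching rule is not described in this README, so the prefix match used here is an assumption, and `should_reply` is a hypothetical name.

```shell
# Hypothetical check (not part of wechat-chatgpt): should a private
# message be forwarded to ChatGPT?
# Usage: should_reply <keyword> <message>
# An empty keyword means every message triggers a reply.
# ASSUMPTION: the keyword must appear at the start of the message.
should_reply() {
  keyword=$1
  message=$2
  case "$message" in
    "$keyword"*) return 0 ;;   # quoted, so the keyword is matched literally
    *) return 1 ;;
  esac
}
```

With a keyword such as `/gpt`, `should_reply "/gpt" "/gpt hello"` succeeds while `should_reply "/gpt" "hello"` fails, so ordinary private messages would be ignored.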

Click the Deploy button and your service will start deploying shortly. The following interface indicates that the deployment has begun:

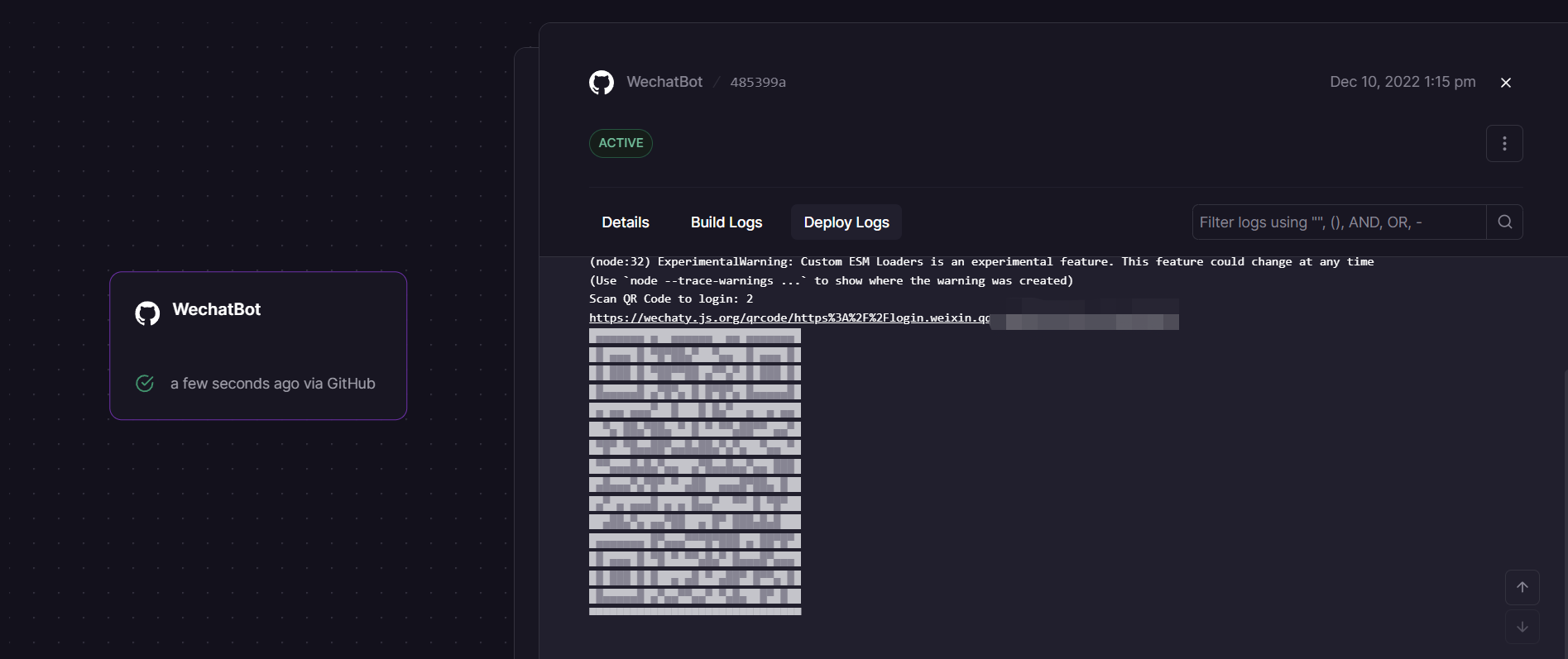

When the deployment is shown as successful, click to view the logs and find the WeChat login link in Deploy Logs.

Open the link and scan the code with the WeChat account you have prepared to log in.

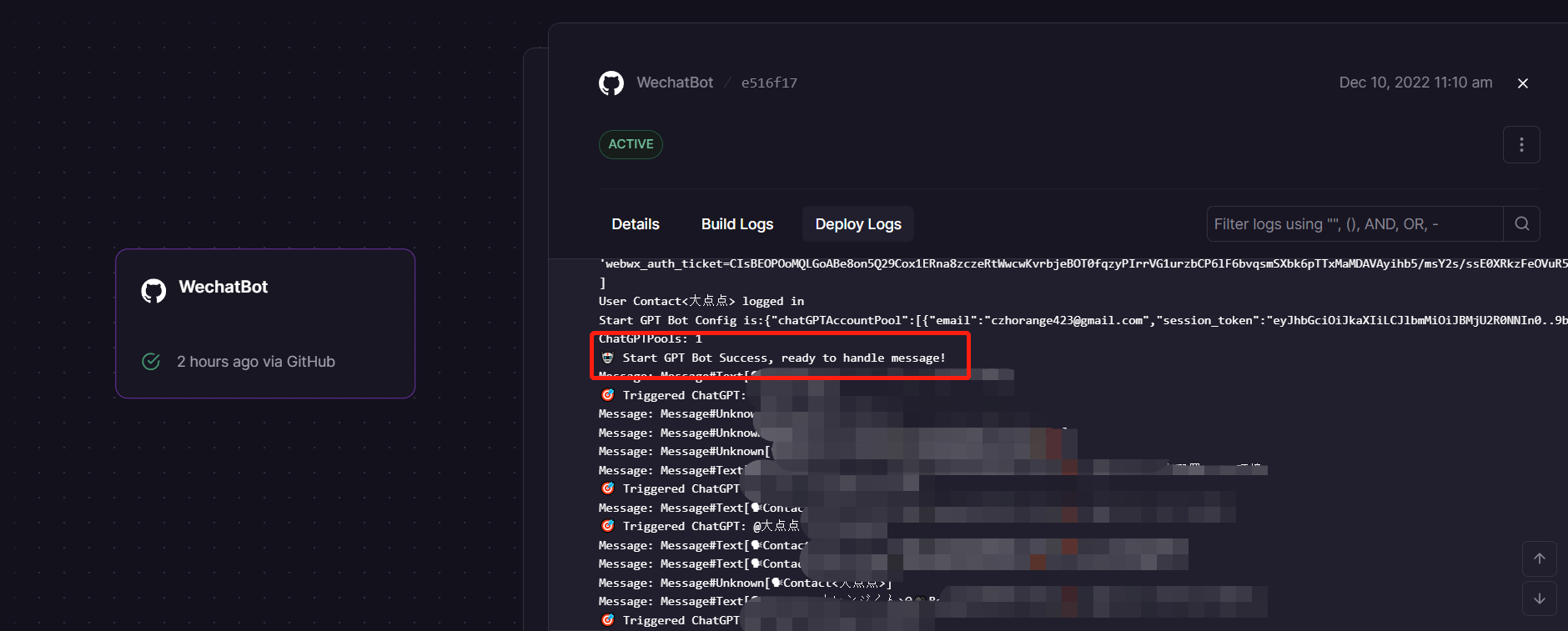

After logging in successfully, you can start sending and receiving messages (this process can take several minutes).

During deployment, you may also encounter the following issues:

- Error: ⚠️ No chatgpt item in pool. This error means that the OpenAI account information is not configured correctly. You can address it as follows:

  1. Check whether the token or the OpenAI account and password are filled in correctly.
  2. The token may have expired (in our experience, a token expires after 24 hours); you can go to the ChatGPT official website to obtain a new token.
  3. Redeploy the current service.

  Note that the above should be modified on the Variables page in the Railway Dashboard.

- After the deployment is complete, the QR code is not generated. Try refreshing the page to see whether the Deploy Logs panel has generated a link and QR code.

- The generated QR code cannot be scanned. Above the generated QR code there is a link; click it to open a QR code that can be scanned.

- Message feedback is very slow. Because Railway's servers are deployed overseas, message latency increases, but it is still within an acceptable range. If you are time sensitive, you can deploy on your own server.

Author

👤 holegots

- Twitter: @fuergaosi

- GitHub: @fuergaosi233

🤝 Contributing

Contributions, issues and feature requests are welcome!

Feel free to check the issues page.

Show your support

Give a ⭐️ if this project helped you!