VQA implementations in PyTorch for Open-Ended Question-Answering

The project comprises the following sections.

This code was tested on Ubuntu 18.04 with CUDA 10 and cuDNN 7.6.

Install the COCO Python API for data preparation.

Given the VQA dataset's annotation and question files, prepare_data.py generates a dataset file (.txt) in the following format:

image_name \t question \t answer

- image_name is the image file name from the COCO dataset

- question is a comma-separated sequence

- answer is a string (label)
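As a rough illustration of the format above, one line of the dataset file could be read back as follows. The helper name `parse_vqa_line` and the sample line are hypothetical, and splitting the question on commas is an assumption based on the field description.

```python
from typing import List, Tuple


def parse_vqa_line(line: str) -> Tuple[str, List[str], str]:
    """Split one dataset line into (image_name, question_tokens, answer)."""
    # Fields are tab-separated: image_name \t question \t answer
    image_name, question, answer = line.rstrip("\n").split("\t")
    # Assumption: the question is stored as comma-separated tokens
    question_tokens = question.split(",")
    return image_name, question_tokens, answer


# Hypothetical sample line, for illustration only
sample = "COCO_train2014_000000000009.jpg\twhat,color,is,the,bowl\tblue\n"
image_name, question_tokens, answer = parse_vqa_line(sample)
print(image_name, question_tokens, answer)
```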

Sample Execution:

$ python3 prepare_data.py --balanced_real_images -s train \
-a /home/axe/Datasets/VQA_Dataset/raw/v2_mscoco_train2014_annotations.json \
-q /home/axe/Datasets/VQA_Dataset/raw/v2_OpenEnded_mscoco_train2014_questions.json \
-o /home/axe/Datasets/VQA_Dataset/processed/vqa_train2014.txt \
-v /home/axe/Datasets/VQA_Dataset/processed/vocab_count_5_K_1000.pickle -c 5 -K 1000 # vocab flags (for training set)

This stores the dataset file at the path given by -o and the corresponding vocab file at the path given by -v.

For validation/test sets, remove the vocabulary flags: -v, -c, -K.
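The exact meaning of -c and -K is not spelled out here. The vocab file name (vocab_count_5_K_1000) suggests a minimum word count of 5 and K = 1000 answer classes. Under that assumption, the vocabulary step might look roughly like the sketch below. `build_vocab`, the `<pad>`/`<unk>` tokens, and the toy samples are all hypothetical and not taken from prepare_data.py.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def build_vocab(
    samples: Iterable[Tuple[List[str], str]], min_count: int, top_k: int
) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Build word and answer index maps from (question_tokens, answer) pairs."""
    word_counts: Counter = Counter()
    answer_counts: Counter = Counter()
    for tokens, answer in samples:
        word_counts.update(tokens)
        answer_counts[answer] += 1

    # Assumption: -c drops question words seen fewer than `min_count` times
    words = sorted(w for w, n in word_counts.items() if n >= min_count)
    # Hypothetical reserved indices for padding and out-of-vocabulary words
    word2idx = {"<pad>": 0, "<unk>": 1}
    word2idx.update({w: i + 2 for i, w in enumerate(words)})

    # Assumption: -K keeps only the K most frequent answers as class labels
    label2idx = {a: i for i, (a, _) in enumerate(answer_counts.most_common(top_k))}
    return word2idx, label2idx


# Toy data, for illustration only
samples = [
    (["is", "it", "red"], "yes"),
    (["is", "it", "blue"], "no"),
    (["what", "is", "it"], "yes"),
]
word2idx, label2idx = build_vocab(samples, min_count=2, top_k=2)
print(word2idx)
print(label2idx)
```

Validation and test questions would then be mapped through the training vocabulary, which is consistent with dropping the vocab flags for those splits.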

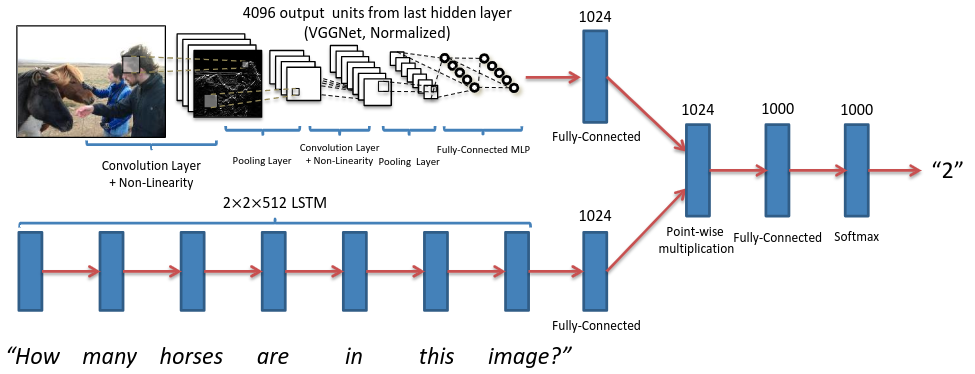

The baseline architecture [1] can be summarized as:

Image --> CNN_encoder --> image_embedding

Question --> LSTM_encoder --> question_embedding

(image_embedding * question_embedding) --> MLP_Classifier --> answer_logit
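The flow above can be sketched in PyTorch as follows. This is a minimal illustration, not the repository's model: the class name `BaselineVQA`, the layer sizes, and the tiny stand-in CNN are assumptions (a pre-trained backbone would normally serve as the encoder).

```python
import torch
import torch.nn as nn


class BaselineVQA(nn.Module):
    """Sketch: CNN image encoder, LSTM question encoder, element-wise fusion, MLP."""

    def __init__(self, vocab_size, num_classes, emb_dim=300, hidden_dim=512):
        super().__init__()
        # Stand-in CNN encoder (assumption); replace with a pre-trained backbone
        self.cnn_encoder = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
            nn.Linear(32, hidden_dim),
        )
        self.word_emb = nn.Embedding(vocab_size, emb_dim, padding_idx=0)
        self.lstm_encoder = nn.LSTM(emb_dim, hidden_dim, batch_first=True)
        self.mlp_classifier = nn.Sequential(
            nn.Linear(hidden_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, num_classes),
        )

    def forward(self, images, questions):
        # images: (B, 3, H, W); questions: (B, T) word indices
        image_embedding = torch.tanh(self.cnn_encoder(images))  # (B, hidden_dim)
        _, (h_n, _) = self.lstm_encoder(self.word_emb(questions))
        question_embedding = h_n[-1]  # final hidden state, (B, hidden_dim)
        # Element-wise product fuses the two modalities
        fused = image_embedding * question_embedding
        return self.mlp_classifier(fused)  # answer logits, (B, num_classes)


model = BaselineVQA(vocab_size=100, num_classes=1000)
logits = model(torch.randn(4, 3, 64, 64), torch.randint(1, 100, (4, 12)))
print(logits.shape)
```

The element-wise product requires both embeddings to share the same dimension, which is why the image features are projected to `hidden_dim` here.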

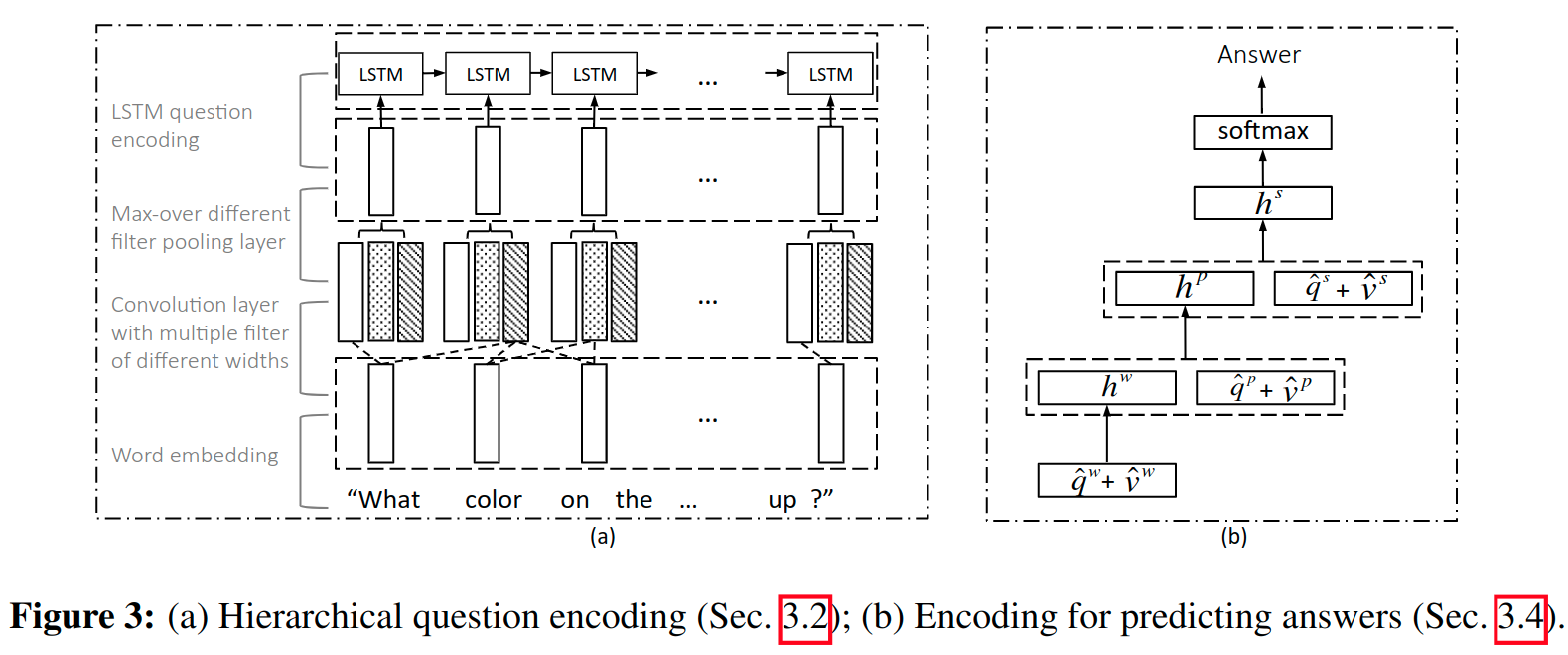

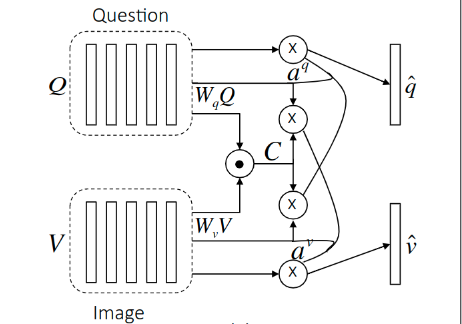

The hierarchical co-attention architecture [2] can be summarized as:

Image --> CNN_encoder --> image_embedding

Question --> Word_Emb --> Phrase_Conv_MaxPool --> Sentence_LSTM --> question_embedding

ParallelCoAttention( image_embedding, question_embedding ) --> MLP_Classifier --> answer_logit
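The parallel co-attention step could be sketched as below, following the formulation in [2]: an affinity matrix between question positions and image regions drives one attention distribution over each. This covers only the attention step, not the word/phrase/sentence hierarchy or the classifier. The layer names and dimensions are assumptions and may differ from the repository's module.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ParallelCoAttention(nn.Module):
    """Sketch of parallel co-attention over image regions and question positions."""

    def __init__(self, dim, attn_dim):
        super().__init__()
        self.W_b = nn.Linear(dim, dim, bias=False)       # affinity weights
        self.W_v = nn.Linear(dim, attn_dim, bias=False)  # image projection
        self.W_q = nn.Linear(dim, attn_dim, bias=False)  # question projection
        self.w_hv = nn.Linear(attn_dim, 1, bias=False)   # image attention scores
        self.w_hq = nn.Linear(attn_dim, 1, bias=False)   # question attention scores

    def forward(self, V, Q):
        # V: (B, N, d) image region features; Q: (B, T, d) question features
        # Affinity between every question position and image region: (B, T, N)
        C = torch.tanh(torch.bmm(self.W_b(Q), V.transpose(1, 2)))
        Wv_V = self.W_v(V)  # (B, N, k)
        Wq_Q = self.W_q(Q)  # (B, T, k)
        # Each modality's hidden map mixes in the other through the affinity
        H_v = torch.tanh(Wv_V + torch.bmm(C.transpose(1, 2), Wq_Q))  # (B, N, k)
        H_q = torch.tanh(Wq_Q + torch.bmm(C, Wv_V))                  # (B, T, k)
        a_v = F.softmax(self.w_hv(H_v).squeeze(-1), dim=1)  # (B, N)
        a_q = F.softmax(self.w_hq(H_q).squeeze(-1), dim=1)  # (B, T)
        # Attention-weighted sums give the attended feature vectors
        v_hat = torch.bmm(a_v.unsqueeze(1), V).squeeze(1)  # (B, d)
        q_hat = torch.bmm(a_q.unsqueeze(1), Q).squeeze(1)  # (B, d)
        return v_hat, q_hat, a_v, a_q


attn = ParallelCoAttention(dim=16, attn_dim=8)
V = torch.randn(2, 49, 16)  # e.g. a 7x7 grid of region features
Q = torch.randn(2, 7, 16)   # 7 question positions
v_hat, q_hat, a_v, a_q = attn(V, Q)
print(v_hat.shape, q_hat.shape)
```

In [2], the attended image and question vectors from each level of the question hierarchy are combined before classification; here they would be merged (for example, summed) and passed to the MLP classifier.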

Run the following script for training:

$ python3 main.py --mode train --expt_name K_1000_Attn --expt_dir /home/axe/Projects/VQA_baseline/results_log \
--train_img /home/axe/Datasets/VQA_Dataset/raw/train2014 --train_file /home/axe/Datasets/VQA_Dataset/processed/vqa_train2014.txt \
--val_img /home/axe/Datasets/VQA_Dataset/raw/val2014 --val_file /home/axe/Datasets/VQA_Dataset/processed/vqa_val2014.txt \
--vocab_file /home/axe/Datasets/VQA_Dataset/processed/vocab_count_5_K_1000.pickle --save_interval 1000 \
--log_interval 100 --gpu_id 0 --num_epochs 50 --batch_size 160 -K 1000 -lr 1e-4 --opt_lvl 1 --num_workers 6 \
--run_name O1_wrk_6_bs_160 --model attention

Specify --model_ckpt (filename.pth) to load a model checkpoint from disk, either to resume training or to run inference.

Select the architecture with --model ('baseline' or 'attention').

Note: Setting num_cls (K) = 2 is equivalent to the 'yes/no' setup.

For K > 2, the answer set is open-ended.

The experiment output log directory is structured as follows:

├── main.py
..
..
├── expt_dir
│   └── expt_name
│       └── run_name
│           ├── events.out.tfevents
│           ├── model_4000.pth
│           └── train_log.txt
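To locate a run's outputs from the training flags, the layout above can be reproduced with a couple of path joins. The helper names are hypothetical, and the `model_<step>.pth` pattern is inferred from `model_4000.pth` in the tree, so treat it as an assumption.

```python
import os


def run_dir(expt_dir: str, expt_name: str, run_name: str) -> str:
    """Directory holding the TensorBoard events, checkpoints and train log."""
    return os.path.join(expt_dir, expt_name, run_name)


def checkpoint_path(expt_dir: str, expt_name: str, run_name: str, step: int) -> str:
    # Assumption: checkpoints are named model_<step>.pth, as in model_4000.pth
    return os.path.join(run_dir(expt_dir, expt_name, run_name), f"model_{step}.pth")


ckpt = checkpoint_path("results_log", "K_1000_Attn", "O1_wrk_6_bs_160", 4000)
print(ckpt)
```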

- ..TO-DO..

TODO: Test with BERT embeddings (Pre-Trained & Fine-Tuned)

- Baseline & HieCoAttn

- VQA w/ BERT

- Inference & Attention Visualization

[1] VQA: Visual Question Answering

[2] Hierarchical Question-Image Co-Attention for Visual Question Answering