- Colab Demo for GFPGAN (another Colab Demo is available for the original paper model)

- We provide a clean version of GFPGAN that runs without CUDA extensions, so it can also run on Windows or in CPU mode.

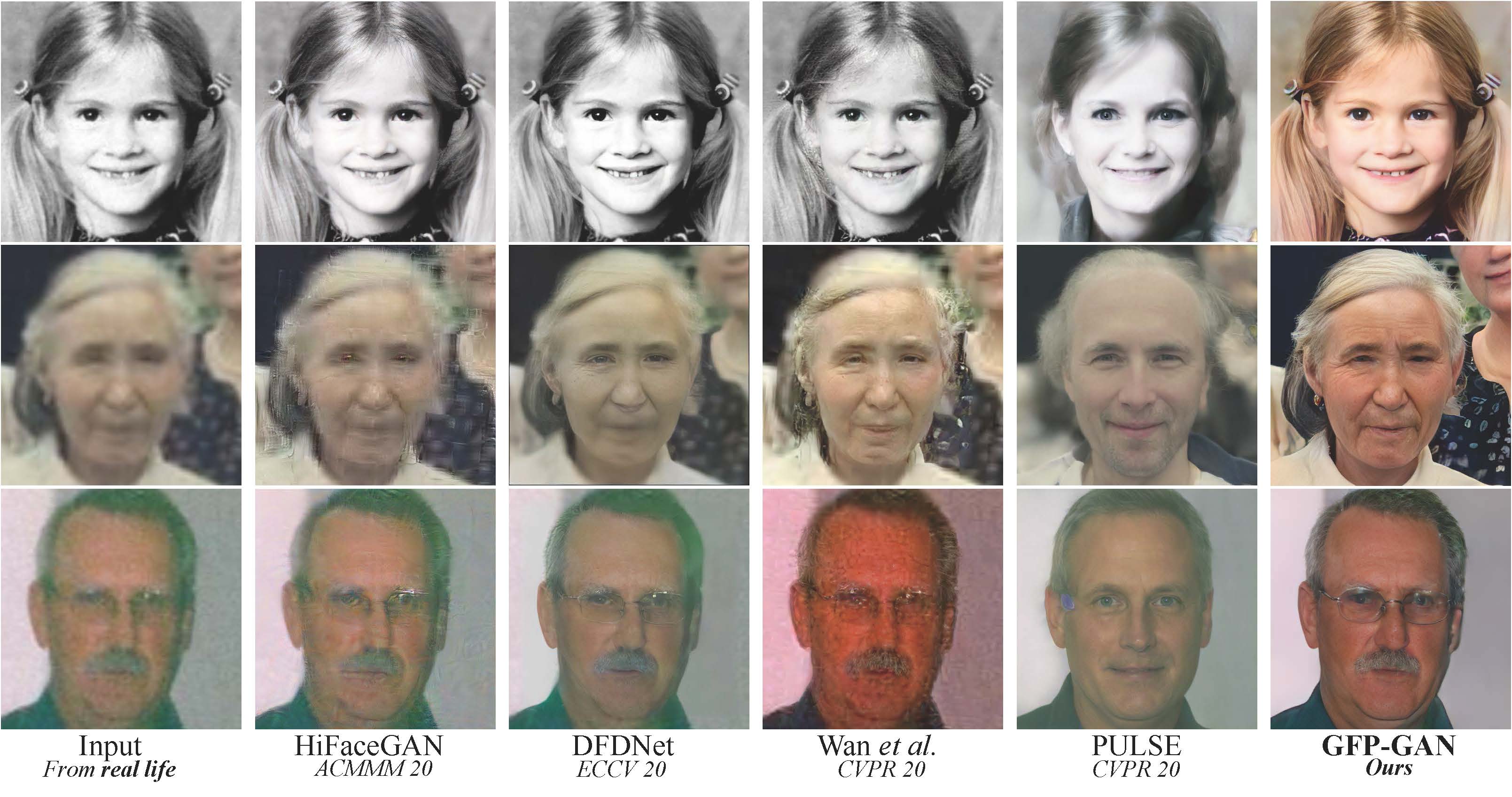

GFPGAN aims at developing a practical algorithm for real-world face restoration.

It leverages rich and diverse priors encapsulated in a pretrained face GAN (e.g., StyleGAN2) for blind face restoration.

🚩 Updates

- ✅ Support enhancing non-face regions (background) with Real-ESRGAN.

- ✅ We provide a clean version of GFPGAN, which does not require CUDA extensions.

- ✅ We provide an updated model without colorizing faces.

If GFPGAN is helpful for your photos or projects, please help to ⭐ this repo. Thanks! 😊


[Paper] [Project Page] [Demo]

Xintao Wang, Yu Li, Honglun Zhang, Ying Shan

Applied Research Center (ARC), Tencent PCG

- Python >= 3.7 (Anaconda or Miniconda recommended)

- PyTorch >= 1.7

- Optional: NVIDIA GPU + CUDA

- Optional: Linux
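The two version requirements above can be checked before installing anything else. The following is a minimal sketch that is not part of the GFPGAN repository: the helper names (`parse_version`, `meets`, `installed_torch_version`) are hypothetical, the version parsing is deliberately simplified (it keeps only the leading numeric release part, so `1.7.1+cu110` becomes `(1, 7, 1)`), and the optional GPU and Linux items are not checked.

```python
import re
import sys
from typing import Optional, Tuple

# Minimum versions from the dependency list above.
MIN_PYTHON = (3, 7)
MIN_TORCH = (1, 7)


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a string such as '1.7.1+cu110' into (1, 7, 1).

    Simplification (an assumption, not a full version parser): the local tag
    after '+' is dropped, trailing letters in a piece (e.g. '0a0') are ignored,
    and parsing stops at the first piece that does not start with a digit.
    """
    parts = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets(version: Tuple[int, ...], minimum: Tuple[int, ...]) -> bool:
    """True if `version` >= `minimum` under lexicographic tuple comparison."""
    return version >= minimum


def installed_torch_version() -> Optional[str]:
    """Return torch.__version__ if PyTorch can be imported, otherwise None."""
    try:
        import torch
    except ImportError:
        return None
    return torch.__version__


if __name__ == "__main__":
    python_ok = meets(tuple(sys.version_info[:2]), MIN_PYTHON)
    print(f"Python {sys.version.split()[0]}: {'OK' if python_ok else 'too old'}")
    torch_version = installed_torch_version()
    if torch_version is None:
        print("PyTorch: not installed")
    else:
        torch_ok = meets(parse_version(torch_version), MIN_TORCH)
        print(f"PyTorch {torch_version}: {'OK' if torch_ok else 'too old'}")
```

Comparing tuples keeps the check short: `(1, 7, 1) >= (1, 7)` holds because the shorter tuple is an equal prefix.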

We now provide a clean version of GFPGAN, which does not require customized CUDA extensions.

If you want to use the original model in our paper, please see PaperModel.md for installation.

- Clone repo

git clone https://github.com/TencentARC/GFPGAN.git
cd GFPGAN

- Install dependent packages

# Install basicsr - https://github.com/xinntao/BasicSR
# We use BasicSR for both training and inference
pip install basicsr
# Install facexlib - https://github.com/xinntao/facexlib
# We use the face detection and face restoration helpers in the facexlib package
pip install facexlib
pip install -r requirements.txt
python setup.py develop
# If you want to enhance the background (non-face) regions with Real-ESRGAN,
# you also need to install the realesrgan package
pip install realesrgan
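After these steps, one quick way to confirm the packages are visible to Python is to look them up with `importlib.util.find_spec`. This is a hedged sketch, not a script shipped with GFPGAN: it assumes the import names `basicsr`, `facexlib`, `gfpgan` (provided by `python setup.py develop`) and `realesrgan`, and the helper `check_modules` is hypothetical. Locating a module does not guarantee it imports cleanly; a PyTorch mismatch, for example, would only surface on an actual import.

```python
import importlib.util
from typing import Dict, Iterable

# Import names assumed for the packages installed above.
REQUIRED = ("basicsr", "facexlib", "gfpgan")  # gfpgan comes from setup.py develop
OPTIONAL = ("realesrgan",)  # only needed to enhance background regions


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for group, names in (("required", REQUIRED), ("optional", OPTIONAL)):
        for name, found in check_modules(names).items():
            print(f"[{group}] {name}: {'found' if found else 'missing'}")
```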

Download pre-trained models: GFPGANCleanv1-NoCE-C2.pth

wget https://github.com/TencentARC/GFPGAN/releases/download/v0.2.0/GFPGANCleanv1-NoCE-C2.pth -P experiments/pretrained_models

Inference!

python inference_gfpgan.py --upscale_factor 2 --test_path inputs/whole_imgs --save_root results

- GFPGANCleanv1-NoCE-C2.pth: No colorization; no CUDA extensions are required. It is still in training and was trained on more data with pre-processing.

- GFPGANv1.pth: The paper model, with colorization.
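The download and inference commands above can also be driven from Python, which may be handy where `wget` is not available (for example on Windows). This is a minimal sketch, assuming it is run from the repository root; the helpers `model_destination`, `download_model` and `inference_command` are hypothetical, while the URL, target folder and flags are copied from the commands above.

```python
import os
import sys
import urllib.request
from typing import List
from urllib.parse import urlparse

# URL and target folder copied from the wget command above.
MODEL_URL = ("https://github.com/TencentARC/GFPGAN/releases/download/"
             "v0.2.0/GFPGANCleanv1-NoCE-C2.pth")
MODEL_DIR = os.path.join("experiments", "pretrained_models")


def model_destination(url: str, dest_dir: str) -> str:
    """Path that `wget -P dest_dir url` would write: the URL's file name in dest_dir."""
    return os.path.join(dest_dir, os.path.basename(urlparse(url).path))


def download_model(url: str = MODEL_URL, dest_dir: str = MODEL_DIR) -> str:
    """Download the checkpoint unless it is already present; return its local path."""
    path = model_destination(url, dest_dir)
    if not os.path.exists(path):
        os.makedirs(dest_dir, exist_ok=True)
        urllib.request.urlretrieve(url, path)
    return path


def inference_command(upscale_factor: int = 2,
                      test_path: str = "inputs/whole_imgs",
                      save_root: str = "results") -> List[str]:
    """Argument list for inference_gfpgan.py, using the flags shown above.

    sys.executable is used so the script runs with the current interpreter.
    """
    return [sys.executable, "inference_gfpgan.py",
            "--upscale_factor", str(upscale_factor),
            "--test_path", test_path,
            "--save_root", save_root]
```

Typical use would be `download_model()` followed by `subprocess.run(inference_command(), check=True)`. Unlike `wget`, which saves a second copy with a numbered suffix when the file already exists, `download_model` simply skips the download if the checkpoint is already in place.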

We provide the training code for GFPGAN (used in our paper).

You could improve it according to your own needs.

Tips

- More high-quality faces in the training data can improve the restoration quality.

- You may need to perform some pre-processing, such as beauty makeup.

Procedures

(You can try a simple version (options/train_gfpgan_v1_simple.yml) that does not require face component landmarks.)

- Dataset preparation: FFHQ

- Download pre-trained models and other data. Put them in the experiments/pretrained_models folder.

- Modify the configuration file options/train_gfpgan_v1.yml accordingly.

- Training

python -m torch.distributed.launch --nproc_per_node=4 --master_port=22021 gfpgan/train.py -opt options/train_gfpgan_v1.yml --launcher pytorch
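On a machine with a different number of GPUs, the training command above can be assembled programmatically. This is a hedged sketch, not part of the repository: `train_command` is a hypothetical helper whose defaults simply mirror the command above (4 processes, port 22021, `options/train_gfpgan_v1.yml`). `--nproc_per_node` is the number of processes launched on the node, usually one per GPU.

```python
import sys
from typing import List


def train_command(num_gpus: int = 4,
                  master_port: int = 22021,
                  opt: str = "options/train_gfpgan_v1.yml") -> List[str]:
    """Build the distributed training command shown above.

    num_gpus becomes --nproc_per_node (typically one process per GPU).
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return [sys.executable, "-m", "torch.distributed.launch",
            f"--nproc_per_node={num_gpus}",
            f"--master_port={master_port}",
            "gfpgan/train.py", "-opt", opt, "--launcher", "pytorch"]


if __name__ == "__main__":
    # Print the command for a 2-GPU machine using the simple configuration.
    print(" ".join(train_command(num_gpus=2,
                                 opt="options/train_gfpgan_v1_simple.yml")))
```

Passing `opt="options/train_gfpgan_v1_simple.yml"` selects the simple configuration that does not need face component landmarks; the returned list can be run with `subprocess.run(..., check=True)` from the repository root.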

GFPGAN is released under Apache License Version 2.0.

@InProceedings{wang2021gfpgan,
    author = {Xintao Wang and Yu Li and Honglun Zhang and Ying Shan},
    title = {Towards Real-World Blind Face Restoration with Generative Facial Prior},
    booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
    year = {2021}
}

If you have any questions, please email xintao.wang@outlook.com or xintaowang@tencent.com.