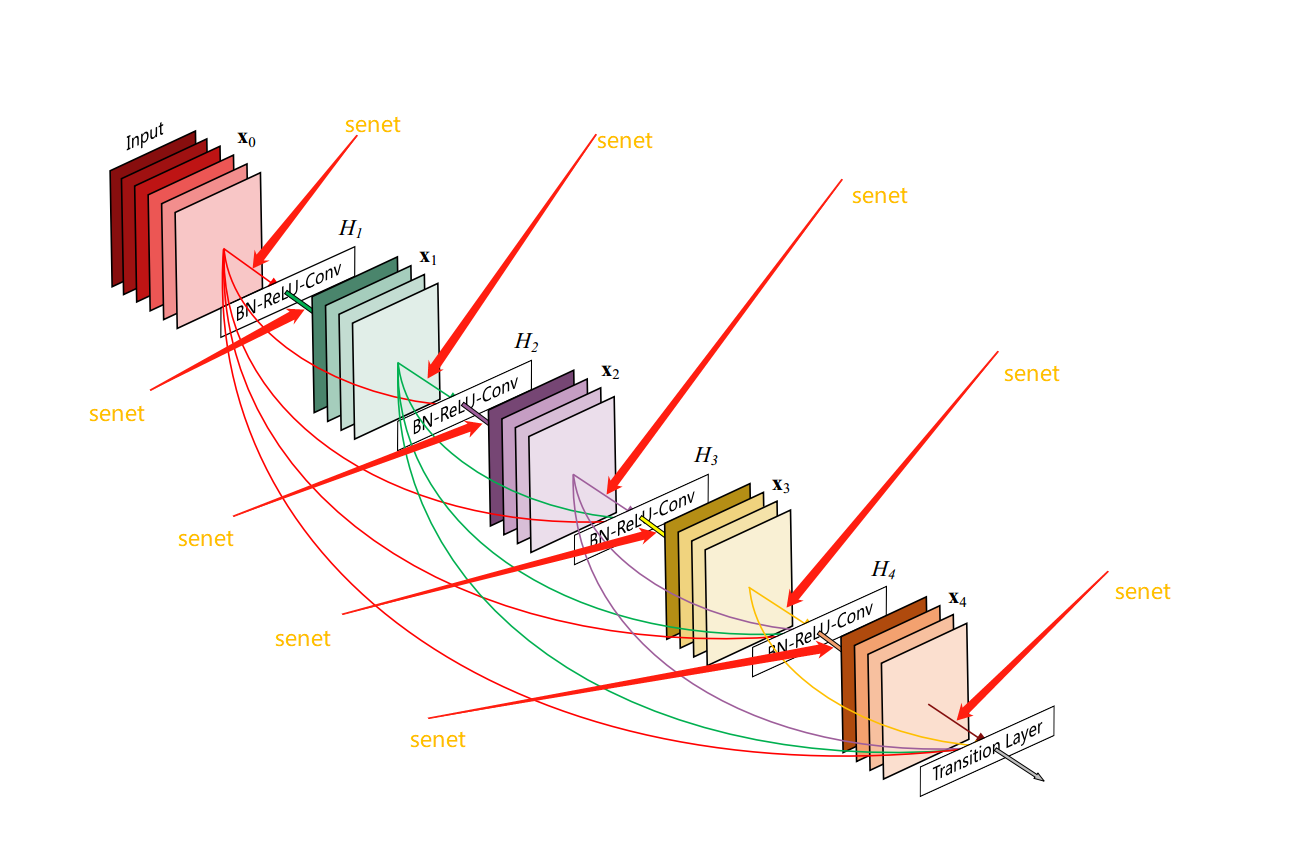

This is a DenseNet that contains an SE module (Squeeze-and-Excitation Networks by Jie Hu, Li Shen and Gang Sun).

Using DenseNet as the backbone, I add SENet modules into DenseNet as the picture shows, but the picture is not the whole structure of se_densenet.
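To make the SE module concrete, here is a minimal NumPy sketch of one SE layer's forward pass as described in the SE paper: squeeze by global average pooling, excitation by two fully connected layers (ReLU, then sigmoid), and channel-wise rescaling. It is not this repo's PyTorch code. The function and weight names are illustrative, and the reduction ratio r = 16 is an assumed, commonly used value. In PyTorch the same layer is usually built from `nn.AdaptiveAvgPool2d(1)` and two `nn.Linear` layers.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation forward pass (illustrative sketch).

    x:  feature map of shape (N, C, H, W)
    w1: (C // r, C), b1: (C // r,)   reduction FC layer
    w2: (C, C // r), b2: (C,)        expansion FC layer
    """
    # Squeeze: global average pooling over H and W gives one value per channel, (N, C)
    z = x.mean(axis=(2, 3))
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one weight in (0, 1) per channel
    s = sigmoid(relu(z @ w1.T + b1) @ w2.T + b2)
    # Scale: multiply every channel of x by its own weight
    return x * s[:, :, None, None]


rng = np.random.default_rng(0)
N, C, H, W, r = 2, 64, 8, 8, 16  # r = 16 is an assumed reduction ratio
x = rng.standard_normal((N, C, H, W))
w1, b1 = rng.standard_normal((C // r, C)) * 0.1, np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)) * 0.1, np.zeros(C)
y = se_block(x, w1, b1, w2, b2)
print(y.shape)
```

The SE layer never changes the tensor shape, which is why it can be dropped into a dense block or a transition without touching the surrounding layers.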


Please see my blog if you want more details about the modified se_densenet. A Chinese version of the blog is here.

- Experiment on CIFAR dataset

- Experiment on my own dataset

- How to train model

- Conclusion

- Todo

Before we start, let's see how to test se_densenet.

```bash
cd core
python3 se_densenet.py
```

It will print the structure of se_densenet.

Let's feed a tensor of shape (32, 3, 224, 224) into se_densenet:

```bash
cd core
python3 test_se_densenet.py
```

It will print `torch.Size([32, 1000])`.
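Why the output is (32, 1000): the batch dimension passes through unchanged, and the network reduces each 3×224×224 image to 1000 class logits. The pure-Python sketch below traces channels and spatial size stage by stage, assuming se_densenet121 keeps the standard DenseNet-121 configuration (64 initial features, growth rate 32, blocks of 6/12/24/16 layers, compression 0.5, 1000 classes). SE layers are left out because they do not change shapes.

```python
def densenet121_shape_trace(input_size=224, num_init_features=64, growth_rate=32,
                            block_config=(6, 12, 24, 16), compression=0.5,
                            num_classes=1000):
    """Return (stage, channels, spatial_size) after each stage of a DenseNet-121-style net."""
    channels = num_init_features
    # Stem: 7x7 stride-2 conv, then 3x3 stride-2 max-pool, so 224 -> 112 -> 56.
    size = input_size // 4
    trace = [("stem", channels, size)]
    for i, num_layers in enumerate(block_config):
        # Each dense layer concatenates growth_rate new feature maps.
        channels += num_layers * growth_rate
        trace.append((f"denseblock{i + 1}", channels, size))
        if i < len(block_config) - 1:
            # Transition: 1x1 conv compresses channels, 2x2 avg-pool halves H and W.
            channels = int(channels * compression)
            size //= 2
            trace.append((f"transition{i + 1}", channels, size))
    # Global average pooling to 1x1, then a linear layer to num_classes logits.
    trace.append(("classifier", num_classes, 1))
    return trace


for stage, channels, size in densenet121_shape_trace():
    print(f"{stage:12s} channels={channels:5d} spatial={size}x{size}")
```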

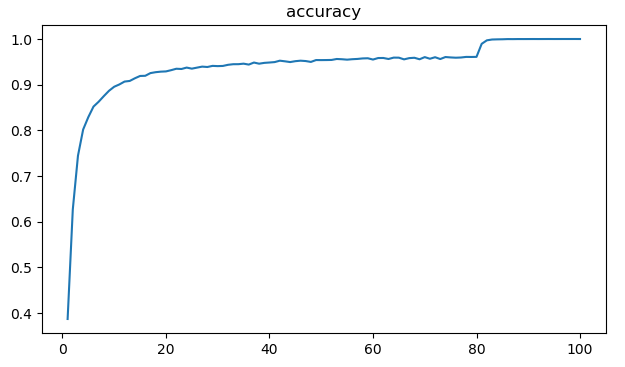

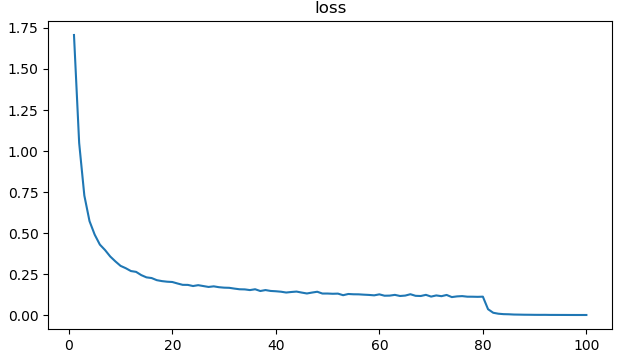

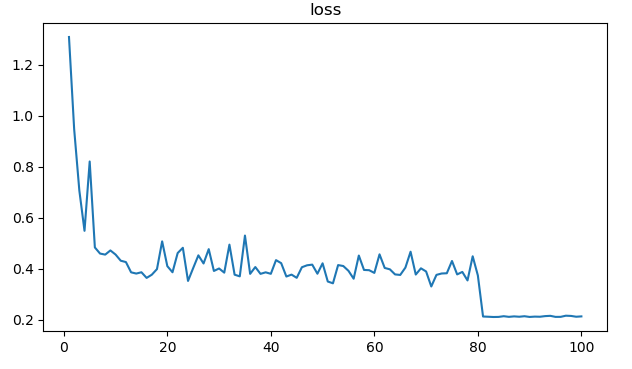

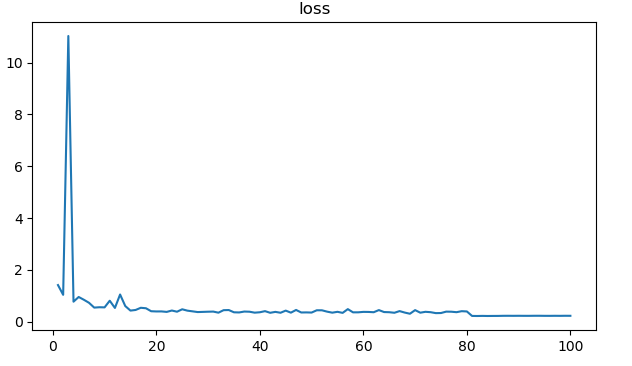

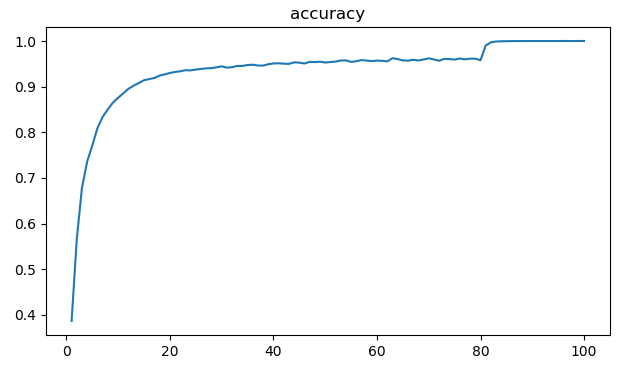

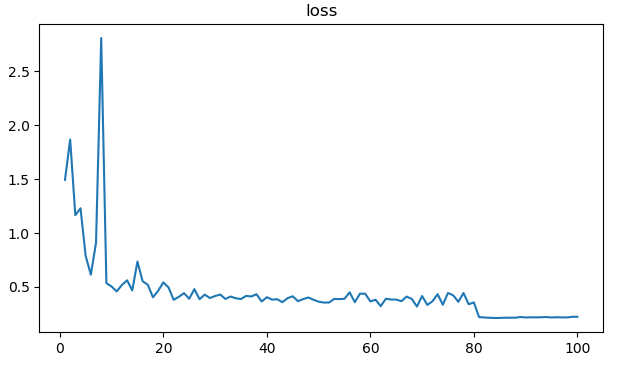

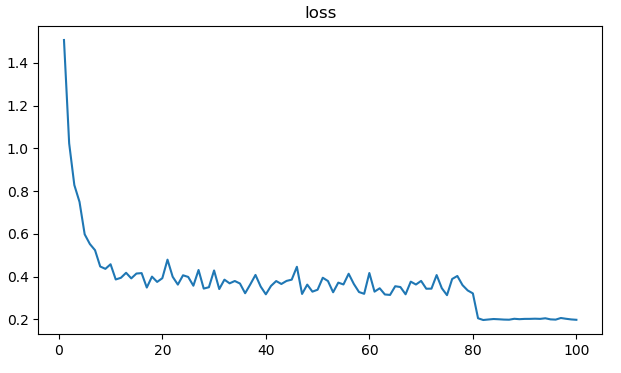

The best val acc of the baseline densenet is 0.9406 at epoch 98.

In this part, I removed some SE layers from DenseNet's transition layers. Please check se_densenet_w_block.py; you will find some commented-out code that points to the SE layers mentioned above.

- train

- val

The best acc is 0.9381 at epoch 98.

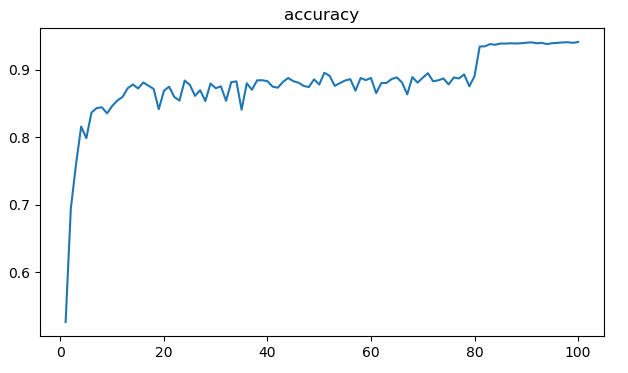

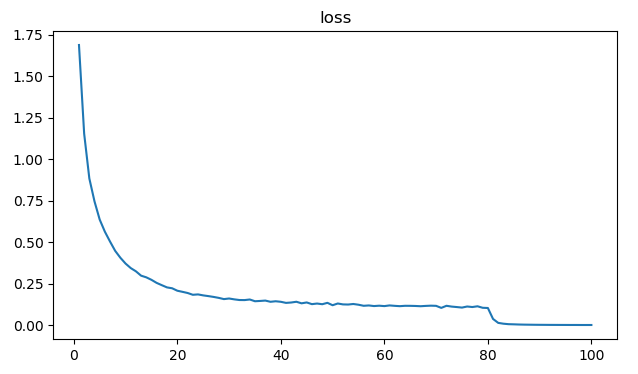

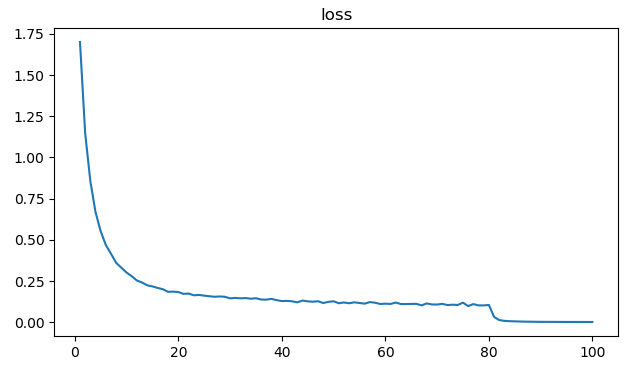

Please check se_densenet_full.py for more details. Here I add SENet modules to both the dense blocks and the transitions. Thanks to @john1231983's issue, I removed some redundant code from se_densenet_full.py; check that issue to see what I mean. Here is the train-val result on CIFAR-10:

- train

- val

The best acc is 0.9407 at epoch 86.
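Adding SE layers to the transitions as well as the dense blocks costs extra parameters. An SE layer on C channels with reduction ratio r has two fully connected layers (C to C/r and C/r to C), so it adds 2·C·(C/r) weights plus C/r + C biases. The sketch below counts that overhead. The placement (one SE layer on the input of each DenseNet-121 transition, i.e. 256, 512 and 1024 channels) and r = 16 are hypothetical choices for illustration, not read from se_densenet_full.py.

```python
def se_param_count(channels, reduction=16, bias=True):
    """Parameters of one SE layer: FC(C -> C/r) followed by FC(C/r -> C)."""
    hidden = channels // reduction
    weights = 2 * channels * hidden
    biases = (hidden + channels) if bias else 0
    return weights + biases


# Hypothetical placement: one SE layer on the input of each DenseNet-121 transition.
transition_channels = (256, 512, 1024)
extra = sum(se_param_count(c) for c in transition_channels)
print(f"extra parameters from transition SE layers: {extra:,}")
```

Because the count grows with C², the SE layer in front of the widest transition dominates the overhead.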

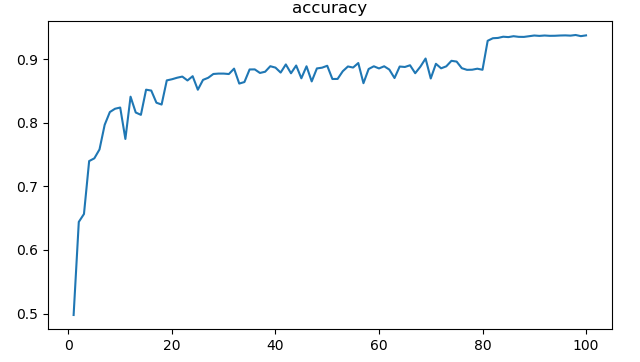

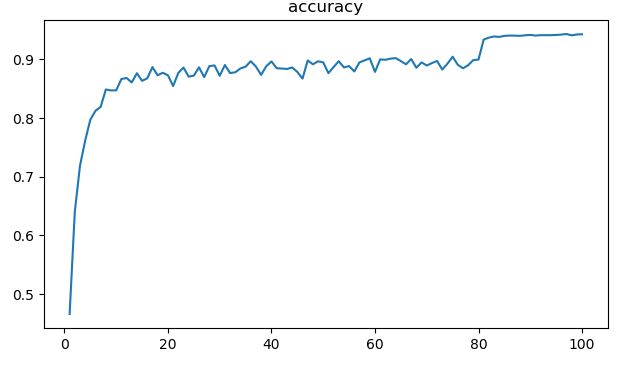

Please check se_densenet_full_in_loop.py for more details; this issue illustrates what I have changed. Here is the train-val result on CIFAR-10:

- train

- val

The best acc is 0.9434 at epoch 97.

| network | best val acc | epoch |
|---|---|---|
| densenet | 0.9406 | 98 |
| se_densenet_w_block | 0.9381 | 98 |
| se_densenet_full | 0.9407 | 86 |
| se_densenet_full_in_loop | 0.9434 | 97 |
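To read the CIFAR-10 table as gains over the plain densenet baseline, this small sketch converts each best val acc into a difference in percentage points. The numbers are copied from the table; the helper name is illustrative.

```python
# Best val acc and epoch per network, copied from the CIFAR-10 table.
results = {
    "densenet": (0.9406, 98),
    "se_densenet_w_block": (0.9381, 98),
    "se_densenet_full": (0.9407, 86),
    "se_densenet_full_in_loop": (0.9434, 97),
}


def gains_vs_baseline(results, baseline="densenet"):
    """Accuracy difference to the baseline, in percentage points (rounded to 0.01)."""
    base_acc = results[baseline][0]
    return {name: round((acc - base_acc) * 100, 2) for name, (acc, _) in results.items()}


for name, gain in gains_vs_baseline(results).items():
    acc, epoch = results[name]
    print(f"{name:26s} {acc:.4f} at epoch {epoch:3d}  ({gain:+.2f} pp)")
```

On these runs, only se_densenet_full_in_loop clearly beats the baseline (+0.28 pp), while se_densenet_w_block falls 0.25 pp behind.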

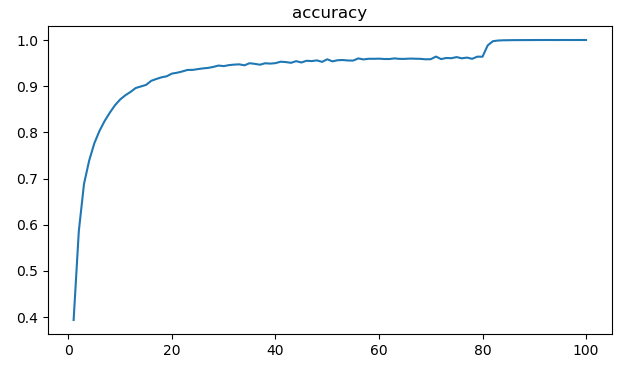

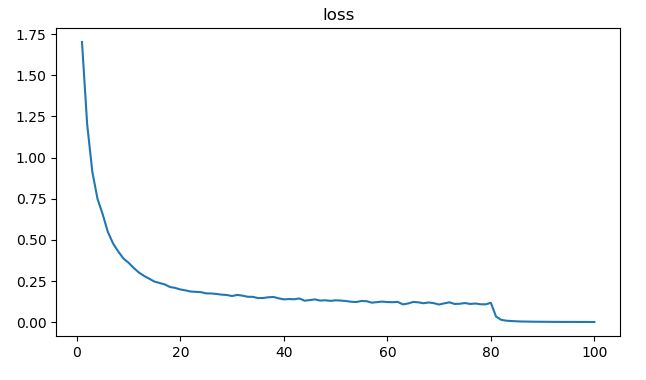

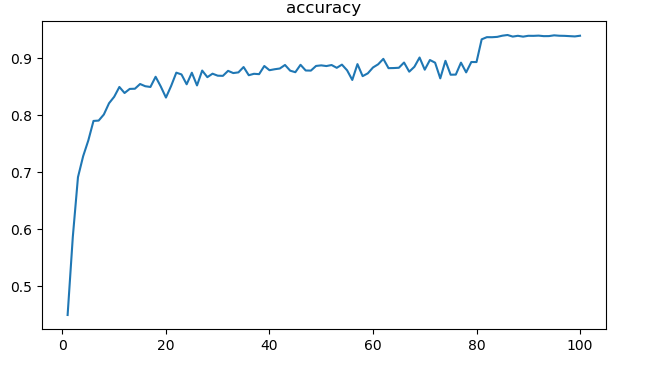

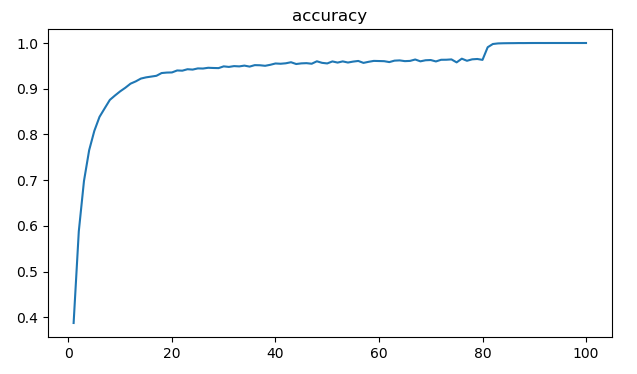

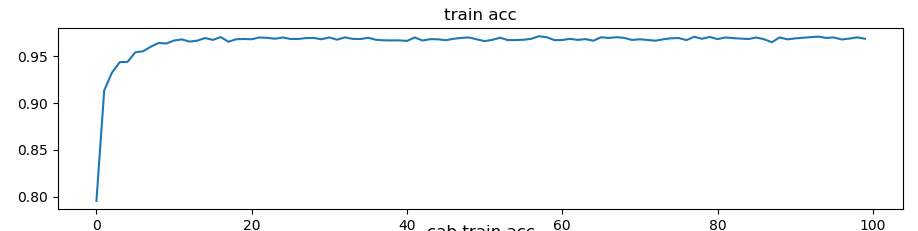

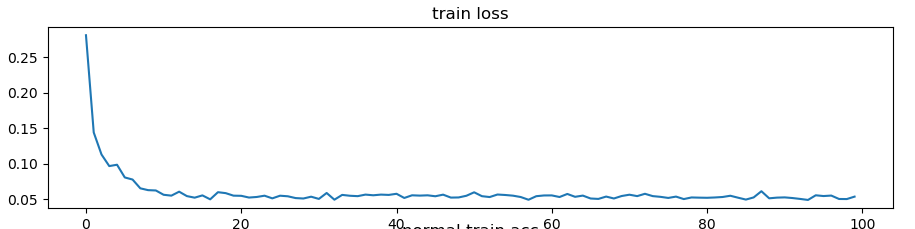

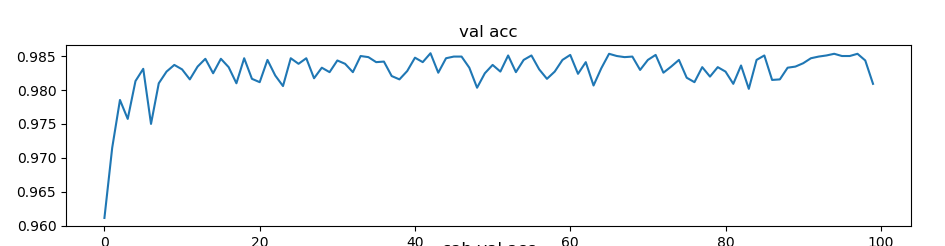

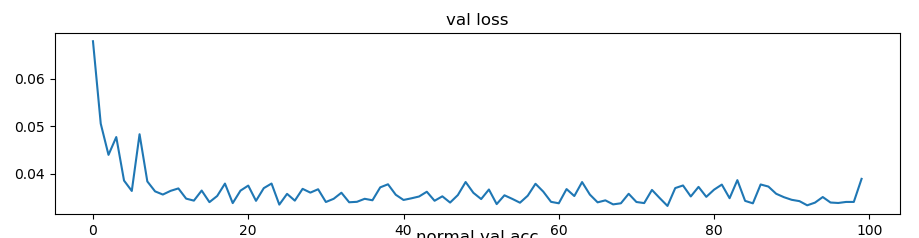

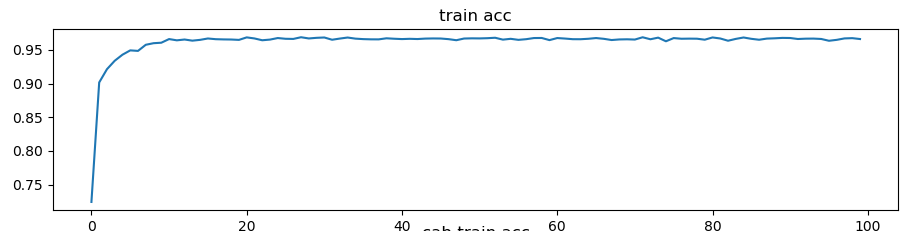

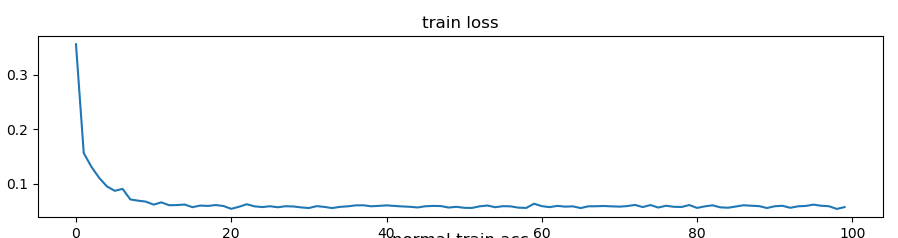

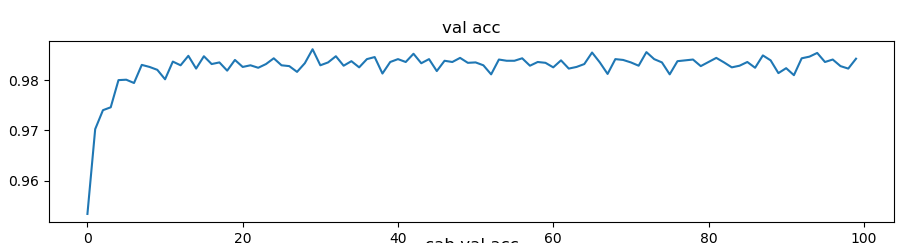

The best acc is: 98.5417%

- train

- val

The best acc is: 98.6154%

| network | best train acc | best val acc |
|---|---|---|
| densenet | 0.966953 | 0.985417 |
| se_densenet | 0.967772 | 0.986154 |

se_densenet reaches a 0.0737 percentage point higher val accuracy than densenet (98.6154% vs. 98.5417%). I didn't train and test on public datasets such as CIFAR and COCO here because of my machine's limited computing capacity; you can train and test on CIFAR or COCO yourself if you like.
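A 0.0737 pp gain looks tiny at 98.5% accuracy, so it can help to also express it as a relative reduction of the error rate. This sketch computes both from the val accuracies in the table above; the function name is illustrative.

```python
def compare_accuracy(base_acc, new_acc):
    """Return (absolute gain in percentage points, relative error-rate reduction in %)."""
    gain = new_acc - base_acc
    base_err = 1.0 - base_acc
    return gain * 100, gain / base_err * 100


# Best val acc of densenet and se_densenet on my own dataset.
gain_pp, err_reduction = compare_accuracy(0.985417, 0.986154)
print(f"+{gain_pp:.4f} pp accuracy, {err_reduction:.2f}% fewer val errors")
```

Measured this way, se_densenet removes roughly 5% of densenet's validation errors on this dataset.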

- Download dataset

The CIFAR-10 dataset is easy to get from its website; download it into data/cifar10. You can refer to the official PyTorch tutorials for how to train on the CIFAR-10 dataset.
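If you prefer not to download by hand, torchvision can fetch CIFAR-10 into data/cifar10 itself. The sketch below uses `torchvision.datasets.CIFAR10` with the same normalization as the official PyTorch CIFAR-10 tutorial; the helper name is hypothetical and this is not necessarily how cifar10.py loads its data.

```python
def make_cifar10_datasets(root="data/cifar10", download=True):
    """Build CIFAR-10 train/test datasets with torchvision (downloads on first use)."""
    # Imported inside the function so this file can be read without torchvision installed.
    import torchvision.transforms as transforms
    from torchvision.datasets import CIFAR10

    # Same normalization as the official PyTorch CIFAR-10 tutorial.
    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
    ])
    train_set = CIFAR10(root=root, train=True, download=download, transform=transform)
    test_set = CIFAR10(root=root, train=False, download=download, transform=transform)
    return train_set, test_set
```

Usage: `train_set, test_set = make_cifar10_datasets()`, then wrap each in `torch.utils.data.DataLoader` with the batch size you train with.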

- Training

There are several modules in the core folder. Before you start training, edit cifar10.py and change the import line `from core.se_densenet_xxxx import se_densenet121` so that `se_densenet_xxxx` names the variant you want to train.
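As an alternative to editing the import by hand, the variant could be chosen by name at runtime with `importlib`. This is a hypothetical helper, not part of the repo: it assumes you run from the repository root so that `core` is importable, and that each variant module exposes `se_densenet121`, as the import line above suggests.

```python
import importlib

# Variant modules named in this README; each is assumed to expose se_densenet121.
VARIANTS = ("se_densenet_w_block", "se_densenet_full", "se_densenet_full_in_loop")


def variant_module_name(variant):
    """Map a variant name to its module path inside the core package."""
    if variant not in VARIANTS:
        raise ValueError(f"unknown variant {variant!r}; choose one of {VARIANTS}")
    return f"core.{variant}"


def load_se_densenet121(variant):
    """Hypothetical helper: import core.<variant> and return its se_densenet121 constructor."""
    module = importlib.import_module(variant_module_name(variant))
    return module.se_densenet121
```

Usage: `se_densenet121 = load_se_densenet121("se_densenet_full_in_loop")`; a typo in the name fails fast with a `ValueError` instead of an `ImportError` deep inside training.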

Then, open your terminal, type:

```bash
python cifar10.py
```

- Visualize training

In your terminal, type:

```bash
python visual/viz.py
```

Note: please change the state file path in /visual/viz.py to your own.
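The "best acc at epoch N" numbers in this README are read off the per-epoch validation curves. The format of the state file that viz.py loads isn't described here, so this sketch assumes you already have a plain list of per-epoch val accuracies. Whether epochs count from 0 or 1 is also an assumption, hence the `first_epoch` parameter.

```python
def best_epoch(val_accs, first_epoch=1):
    """Return (epoch, acc) for the highest validation accuracy.

    val_accs holds one accuracy per epoch, in order; ties go to the earliest epoch.
    """
    if not val_accs:
        raise ValueError("val_accs is empty")
    best_idx = max(range(len(val_accs)), key=val_accs.__getitem__)
    return best_idx + first_epoch, val_accs[best_idx]


# Made-up accuracies, only to show the call.
print(best_epoch([0.71, 0.85, 0.91, 0.90]))
```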

- `se_densenet_full_in_loop` gets the best accuracy, 0.9434, at epoch 97.

- `se_densenet_full` gets the second-best accuracy, 0.9407, and reaches it earliest, at epoch 86.

- In contrast, both `densenet` and `se_densenet_w_block` reach their highest accuracy at epoch 98.

I will release my training code on github as quickly as possible.

- Usage of my code

- Test result on my own dataset

- Train and test on cifar-10 dataset

- Release train and test code