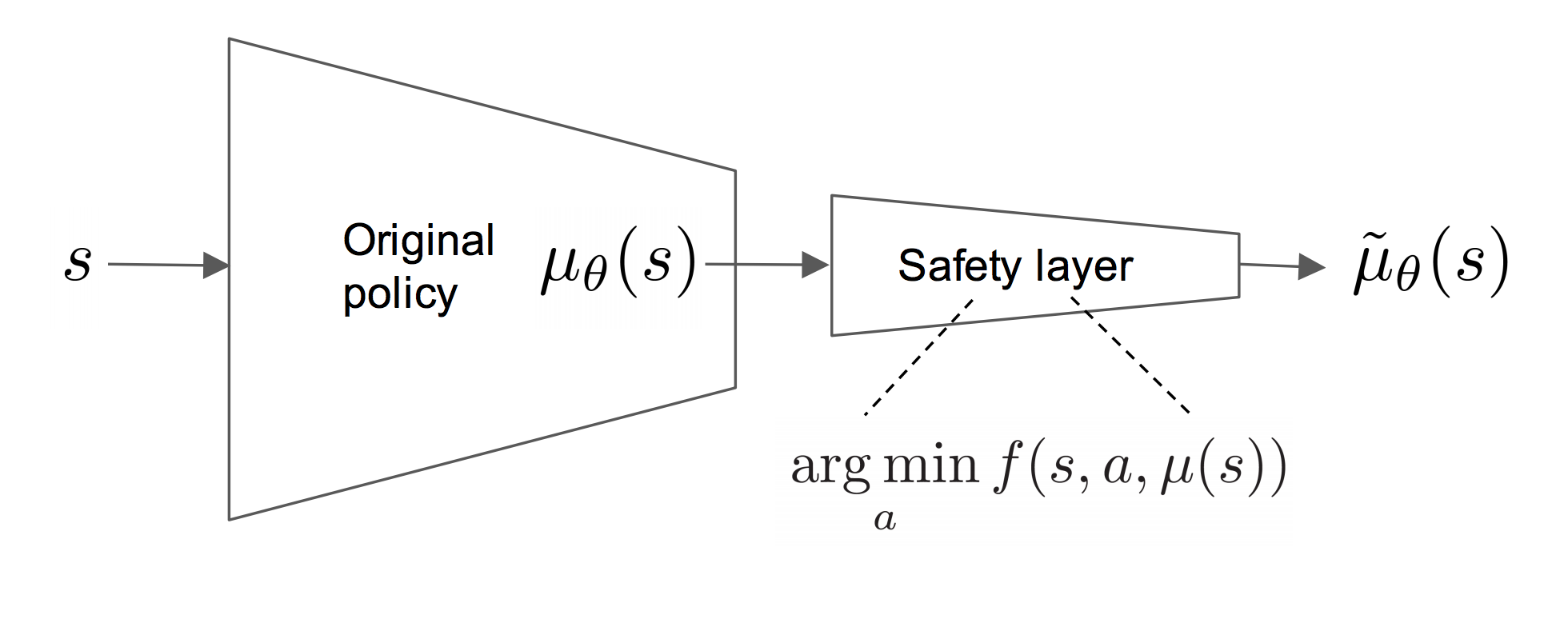

This repository contains a PyTorch implementation of the paper "Safe Exploration in Continuous Action Spaces" [Dalal et al.], along with DDPG from "Continuous Control With Deep Reinforcement Learning" [Lillicrap et al.]. Dalal et al. present a closed-form, analytically optimal solution for ensuring safety in continuous action spaces. The proposed "safety layer" makes the smallest possible perturbation to the original action such that the safety constraints are satisfied.

Dalal et al. also propose two new domains, BallND and Spaceship, which are governed by first- and second-order dynamics, respectively. In the Spaceship domain, the agent receives a reward only on task completion, while BallND has a continuous reward based on the distance from the target. The implementations of both tasks extend OpenAI Gym's environment interface (`gym.Env`).
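The difference between the two kinds of dynamics and rewards can be illustrated with a simplified NumPy sketch. The step functions, the time step `DT`, the tolerance `tol`, and the reward formulas below are hypothetical simplifications for illustration only; they do not reproduce the repository's `gym.Env` implementations or their exact rewards.

```python
import numpy as np

DT = 0.1  # hypothetical integration time step


def ball_step(position, action, target, dt=DT):
    """First-order dynamics (BallND-like): the action sets the velocity directly."""
    new_position = position + dt * action
    # Dense reward: grows continuously as the ball approaches the target.
    reward = -np.linalg.norm(target - new_position)
    return new_position, reward


def spaceship_step(position, velocity, action, target, dt=DT, tol=0.05):
    """Second-order dynamics (Spaceship-like): the action sets the acceleration."""
    # Semi-implicit Euler: update velocity first, then position.
    new_velocity = velocity + dt * action
    new_position = position + dt * new_velocity
    # Sparse reward: nonzero only once the target is reached.
    reached = np.linalg.norm(target - new_position) < tol
    reward = 1.0 if reached else 0.0
    return new_position, new_velocity, reward


target = np.array([1.0, 0.0])
print(ball_step(np.zeros(2), np.array([1.0, 0.0]), target))
print(spaceship_step(np.zeros(2), np.zeros(2), np.array([1.0, 0.0]), target))
```

With the same push, the ball moves immediately and its reward improves at once, while the spaceship only gathers velocity and earns nothing until it actually arrives, which is why Spaceship is the harder exploration problem.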

The code requires Python 3.6+ and has been tested with torch 1.1.0. To install the dependencies, run:

```bash
pip install -r requirements.txt
```

To obtain the list of parameters and their default values, run:

```bash
python -m safe_explorer.main --help
```

To train the model, run one of the following:

```bash
python -m safe_explorer.main --main_trainer_task ballnd
python -m safe_explorer.main --main_trainer_task spaceship
```

Monitor training with TensorBoard:

```bash
tensorboard --logdir=runs
```

To be updated.

Some modifications in the DDPG implementation are based on the OpenAI Spinning Up implementation.
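The README does not say which modifications were adopted. For orientation only, two standard DDPG building blocks (soft target-network updates and the critic's Bellman target) can be sketched in NumPy as below. The function names, the use of plain arrays as parameters, and the default values are illustrative assumptions, not this repository's code.

```python
import numpy as np


def soft_update(target_params, source_params, polyak=0.995):
    """In-place Polyak averaging: theta_targ <- polyak * theta_targ + (1 - polyak) * theta."""
    for t, s in zip(target_params, source_params):
        # Augmented assignment mutates each NumPy array in place.
        t *= polyak
        t += (1.0 - polyak) * s


def critic_target(reward, done, next_q_target, gamma=0.99):
    """Bellman target y = r + gamma * (1 - d) * Q_targ(s', mu_targ(s'))."""
    return reward + gamma * (1.0 - done) * next_q_target


target = [np.zeros(2)]
source = [np.ones(2)]
soft_update(target, source, polyak=0.9)
print(target[0])
print(critic_target(1.0, 0.0, 2.0, gamma=0.5))
```

A `polyak` close to 1 makes the target networks trail the learned networks slowly, which stabilizes the bootstrapped critic target; the `(1 - done)` factor stops bootstrapping past terminal states.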

- Lillicrap, Timothy P., et al. "Continuous control with deep reinforcement learning." arXiv preprint arXiv:1509.02971 (2015).
- Dalal, Gal, et al. "Safe exploration in continuous action spaces." arXiv preprint arXiv:1801.08757 (2018).