Cho-Ying Wu, Jialiang Wang, Michael Hall, Ulrich Neumann, Shuochen Su

[arXiv] [CVF open access] [project site: data, supplementary]

Because this project contributes new datasets, please refer to the project page for data download instructions (SimSIN, UniSIN, and VA) and for participating in the UniSIN leaderboard.

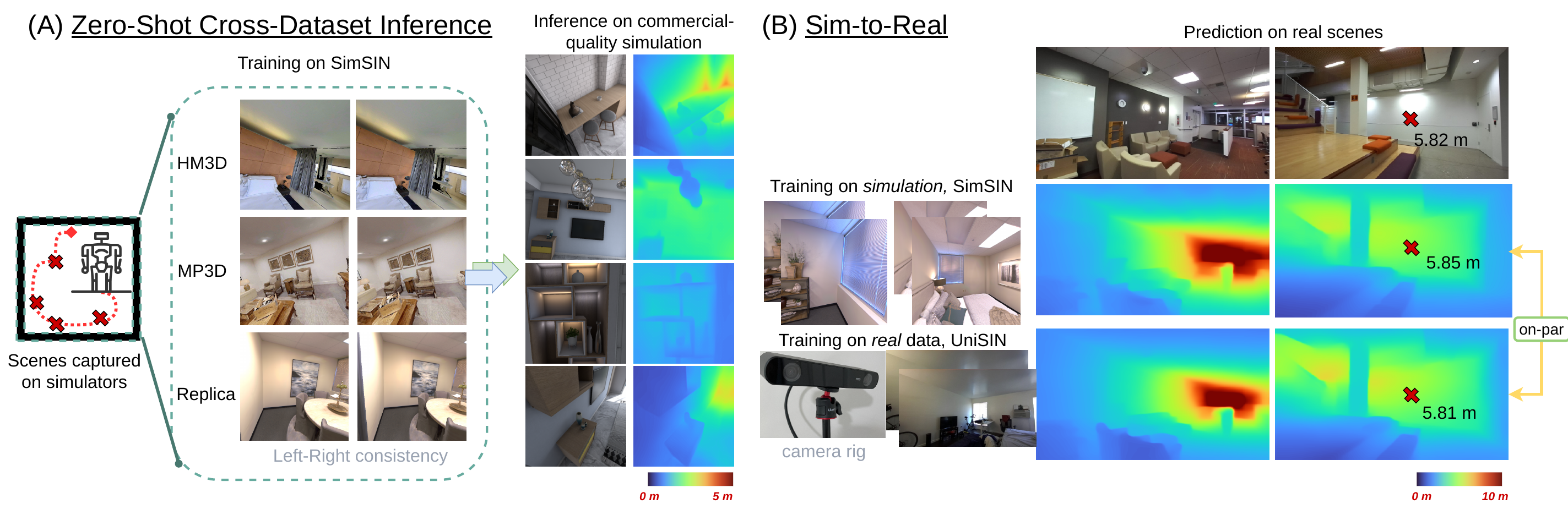

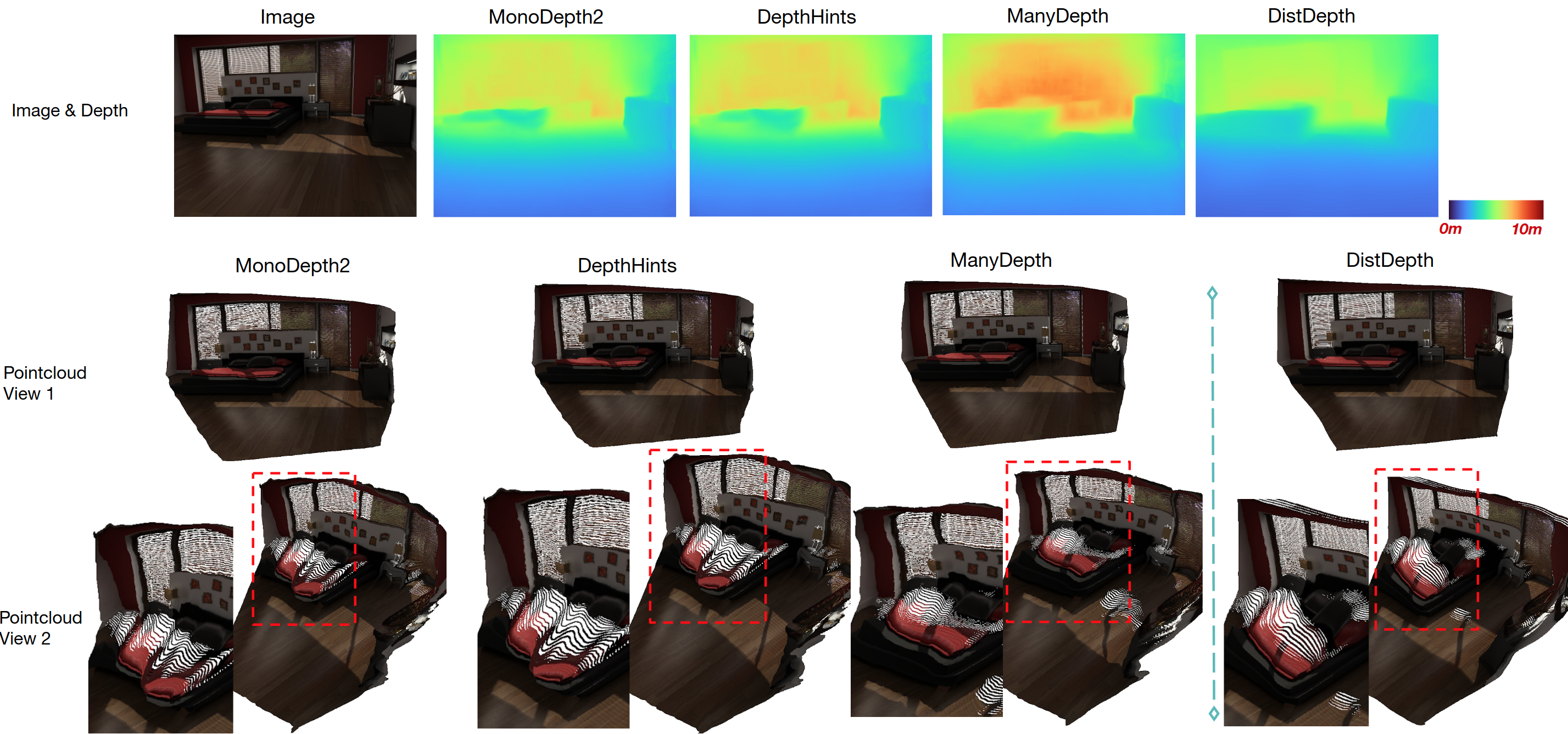

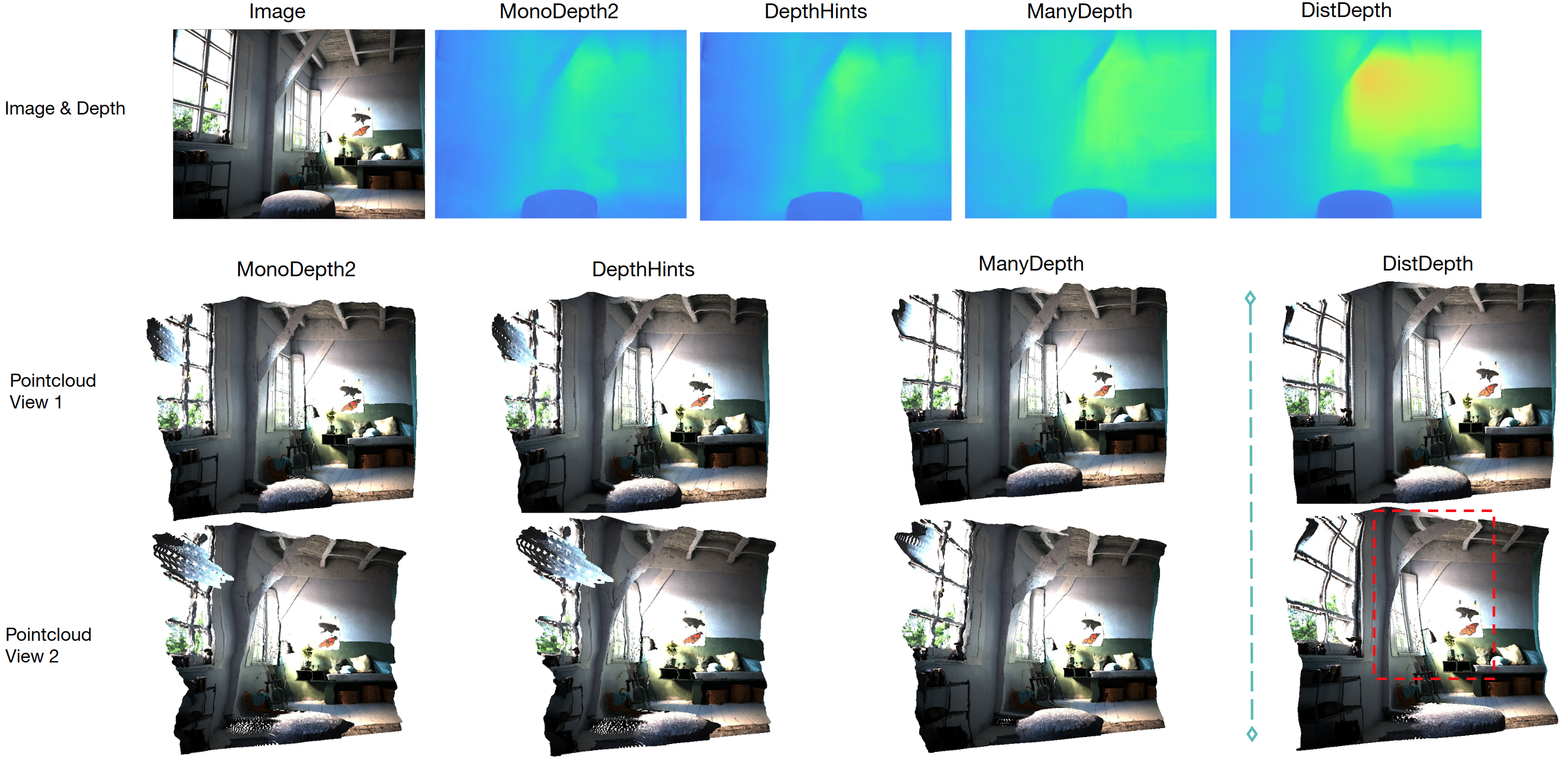

DistDepth is a highly robust monocular depth estimation approach for generic indoor scenes.

- Trained on stereo sequences without ground-truth depth
- Structured and metric-accurate
- Runs at an interactive rate on a laptop GPU
- Sim-to-real: trained on simulated data and transfers to real scenes

We test on Ubuntu 20.04 LTS with an NVIDIA 2080 laptop GPU.

Install packages

- Use conda:

conda create --name distdepth python=3.8

conda activate distdepth

- Install common prerequisite packages. Go to https://pytorch.org/get-started/locally/ and install a PyTorch build compatible with your system. We test on PyTorch v1.9.0 with cudatoolkit-11.1 (the code should work with other v1.0+ versions).

- Install the other dependencies: opencv-python, matplotlib, imageio, Pillow, augly, and tensorboardX.

pip install opencv-python matplotlib imageio Pillow augly tensorboardX
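As an optional sanity check (not part of the repository), the short script below reports which of the packages listed above cannot be imported in the active environment. It only uses the standard library; note that some pip names differ from their import names (opencv-python is imported as cv2, Pillow as PIL).

```python
import importlib.util

# Map import name -> pip package name for the dependencies listed above.
REQUIRED_MODULES = {
    "torch": "torch (PyTorch)",
    "cv2": "opencv-python",
    "matplotlib": "matplotlib",
    "imageio": "imageio",
    "PIL": "Pillow",
    "augly": "augly",
    "tensorboardX": "tensorboardX",
}


def missing_modules(modules=REQUIRED_MODULES):
    """Return the pip package names whose top-level module cannot be found."""
    return [pkg for name, pkg in modules.items()
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```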

Download pretrained models

- Download the pretrained models [here] (ResNet152, 246MB; provided for illustration, with reasonably good performance on in-the-wild indoor scenes).

- Unzip the file under the repository root. This creates a 'ckpts' folder containing the pretrained models.

- Run

python demo.py

Results will be stored under results/.
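If you want a quick grayscale view of a metric depth map of your own (for example, one you saved as a numpy array), the sketch below clips depth to a maximum range and maps it to 8-bit, with near pixels bright. This is a hypothetical helper, not the code demo.py uses; depth is assumed to be in meters, and the max_depth default only mirrors the --max_depth 10.0 used for SimSIN and VA in this README.

```python
import numpy as np


def depth_to_uint8(depth, max_depth=10.0):
    """Map a metric depth map to an 8-bit image (near = bright, far = dark).

    Depths are clipped to [0, max_depth] before normalization.
    """
    depth = np.clip(np.asarray(depth, dtype=np.float32), 0.0, max_depth)
    inverted = 1.0 - depth / max_depth  # near -> 1.0, far -> 0.0
    return np.round(inverted * 255.0).astype(np.uint8)
```

The result can be written with any image library, for example imageio.imwrite("depth_vis.png", depth_to_uint8(depth)).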

Some sample data are provided in data/sample_pc. To visualize them as point clouds, run

python visualize_pc.py
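We have not checked how visualize_pc.py builds its point clouds. The sketch below only illustrates the standard pinhole back-projection that turns a metric depth map into 3D points: a pixel (u, v) with depth z maps to X = (u - cx) z / fx, Y = (v - cy) z / fy, Z = z. The intrinsics fx, fy, cx, cy are placeholders that you must replace with your camera's values.

```python
import numpy as np


def backproject(depth, fx, fy, cx, cy):
    """Lift an HxW metric depth map to an (N, 3) point cloud (pinhole model).

    Pixels with non-positive depth are treated as invalid and dropped.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    points = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]
```

Points come out in row-major pixel order, so colors from the matching RGB image can be attached with the same reshape and mask.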

Download SimSIN [here]. For UniSIN and VA, please download them from the [project site].

To generate stereo data with depth using Habitat, we provide a snippet here. Install Habitat first.
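When a rectified stereo pair is rendered together with depth, disparity and depth are tied by d = f B / Z (f: focal length in pixels, B: baseline in meters, Z: depth in meters). The sketch below applies that relation; it is not taken from the Habitat snippet, and focal_px and baseline_m are hypothetical parameters that depend on how you configure the Habitat sensors.

```python
import numpy as np


def depth_to_disparity(depth, focal_px, baseline_m):
    """Convert metric depth to disparity in pixels for a rectified stereo pair.

    Uses d = f * B / Z; pixels with non-positive depth get disparity 0 (invalid).
    """
    depth = np.asarray(depth, dtype=np.float64)
    disparity = np.zeros_like(depth)
    valid = depth > 0
    disparity[valid] = focal_px * baseline_m / depth[valid]
    return disparity
```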


For a quick taste of training, download a smaller replica set [here], create the folder './SimSIN-simple', and put the data under it.

mkdir weights

wget -O weights/dpt_hybrid_nyu-2ce69ec7.pt https://github.com/intel-isl/DPT/releases/download/1_0/dpt_hybrid_nyu-2ce69ec7.pt

python execute.py --exe train --model_name distdepth-distilled --frame_ids 0 --log_dir='./tmp' --data_path SimSIN-simple --dataset SimSIN --batch_size 12 --width 256 --height 256 --max_depth 10.0 --num_epochs 10 --scheduler_step_size 8 --learning_rate 0.0001 --use_stereo --thre 0.95 --num_layers 152

Training takes about 18 GB of memory and about 20 minutes on an RTX 3090 GPU.
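Several of the options above (--frame_ids, --scheduler_step_size, --models_to_load, --num_layers) match those of Monodepth2. Assuming, without having verified it against execute.py, that DistDepth also maps the network's sigmoid output to depth the Monodepth2 way, --max_depth bounds the predicted range as in the sketch below. The min_depth default of 0.1 is Monodepth2's and is an assumption here.

```python
def disp_to_depth(disp, min_depth=0.1, max_depth=10.0):
    """Map a sigmoid output in [0, 1] to depth in [min_depth, max_depth].

    Monodepth2-style scaling: interpolate linearly in inverse depth between
    1 / max_depth and 1 / min_depth, then invert. Works on floats or arrays.
    """
    min_disp = 1.0 / max_depth
    max_disp = 1.0 / min_depth
    scaled_disp = min_disp + (max_disp - min_disp) * disp
    return 1.0 / scaled_disp
```

With this scheme an output of 0 gives max_depth and an output of 1 gives min_depth, so a larger --max_depth (such as 12 for NYUv2 below) widens the representable range.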

SimSIN-trained models, evaluated on VA

| Name | Arch | Expert | MAE | AbsRel | RMSE | acc@1.25 | acc@1.25^2 | acc@1.25^3 | Download |
|---|---|---|---|---|---|---|---|---|---|
| DistDepth | ResNet152 | DPT Large | 0.252 | 0.175 | 0.371 | 75.1 | 93.9 | 98.4 | model |
| DistDepth | ResNet152 | DPT Legacy | 0.270 | 0.186 | 0.386 | 73.2 | 93.2 | 97.9 | model |
| DistDepth-Multi | ResNet101 | DPT Legacy | 0.243 | 0.169 | 0.362 | 77.1 | 93.7 | 97.9 | model |

Download VA (8 GB) first and extract it under the root folder.

    .
    ├── VA
    │   ├── camera_0
    │   │   ├── 00000000.png
    │   │   └── ......
    │   ├── camera_1
    │   │   ├── 00000000.png
    │   │   └── ......
    │   └── gt_depth_rectify
    │       ├── cam0_frame0000.depth.pfm
    │       └── ......
    └── VA_left_all.txt
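The VA ground truth in gt_depth_rectify is stored as .pfm files. eval.sh reads them for you; if you want to inspect them yourself, below is a minimal reader following the common PFM layout ("Pf" or "PF" header, a "width height" line, a scale whose sign gives the byte order, then rows stored bottom to top). It is our own sketch, not the repository's loader, and we have not verified the depth units stored in the VA files.

```python
import numpy as np


def read_pfm(path):
    """Read a PFM (Portable Float Map) file into a float32 numpy array."""
    with open(path, "rb") as f:
        kind = f.readline().strip()
        if kind == b"Pf":
            channels = 1  # grayscale, e.g. a depth map
        elif kind == b"PF":
            channels = 3  # color
        else:
            raise ValueError(f"not a PFM file: {path}")
        width, height = map(int, f.readline().split())
        scale = float(f.readline().strip())
        dtype = "<f4" if scale < 0 else ">f4"  # negative scale = little-endian
        raw = f.read(4 * width * height * channels)
    data = np.frombuffer(raw, dtype=dtype)
    shape = (height, width) if channels == 1 else (height, width, 3)
    # PFM stores the bottom row first, so flip to top-to-bottom order.
    return np.flipud(data.reshape(shape)).astype(np.float32)
```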

Run

bash eval.sh

The performance results will be saved under the root folder.
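The tables report MAE, AbsRel, RMSE, and threshold accuracies. We have not reproduced eval.sh itself; the sketch below uses the conventional definitions of these metrics, with accuracies given as percentages to match the tables. The valid-pixel mask (0 < gt <= max_depth) and the clipping of predictions to [1e-3, max_depth] are assumptions that many evaluation scripts share but that may differ from this repository.

```python
import numpy as np


def depth_metrics(pred, gt, max_depth=10.0):
    """Standard monocular depth metrics computed over valid ground-truth pixels."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    valid = (gt > 0) & (gt <= max_depth)
    p, g = pred[valid], gt[valid]
    p = np.clip(p, 1e-3, max_depth)  # avoid division by zero in the ratio
    ratio = np.maximum(p / g, g / p)
    return {
        "MAE": float(np.mean(np.abs(p - g))),
        "AbsRel": float(np.mean(np.abs(p - g) / g)),
        "RMSE": float(np.sqrt(np.mean((p - g) ** 2))),
        # Percentage of pixels whose ratio is below 1.25, 1.25^2, 1.25^3.
        "acc@1.25": 100.0 * float(np.mean(ratio < 1.25)),
        "acc@1.25^2": 100.0 * float(np.mean(ratio < 1.25 ** 2)),
        "acc@1.25^3": 100.0 * float(np.mean(ratio < 1.25 ** 3)),
    }
```

For NYUv2, the evaluation command in this README uses --max_depth 12, which would correspond to depth_metrics(pred, gt, max_depth=12.0) here.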

To visualize the predicted depth maps in a minibatch:

python execute.py --exe eval_save --log_dir='./tmp' --data_path VA --dataset VA --batch_size 1 --load_weights_folder <path to weights> --models_to_load encoder depth --width 256 --height 256 --max_depth 10 --frame_ids 0 --num_layers 152

To visualize the predicted depth maps for all test data in the list:

python execute.py --exe eval_save_all --log_dir='./tmp' --data_path VA --dataset VA --batch_size 1 --load_weights_folder <path to weights> --models_to_load encoder depth --width 256 --height 256 --max_depth 10 --frame_ids 0 --num_layers 152

Evaluation on NYUv2

Prepare the NYUv2 data in the following layout:

    .
    ├── NYUv2
    │   ├── img_val
    │   │   ├── 00001.png
    │   │   └── ......
    │   ├── depth_val
    │   │   ├── 00001.npy
    │   │   └── ......
    │   └── ......
    └── NYUv2.txt
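A hypothetical helper for checking your NYUv2 preparation: it pairs each RGB image in img_val with its depth file in depth_val using the shared file stem (00001.png with 00001.npy). We do not know the exact format of NYUv2.txt, so the sketch assumes each non-empty line names a frame either as a bare ID or as a file name; adapt the parsing if your list differs.

```python
from pathlib import Path


def nyu_pairs(list_path, data_root):
    """Return (image_path, depth_path) pairs for every frame in a list file.

    Hypothetical list format: one frame per line, such as "00001" or
    "00001.png"; only the file stem is used to build both paths.
    """
    data_root = Path(data_root)
    pairs = []
    for line in Path(list_path).read_text().splitlines():
        frame_id = Path(line.strip()).stem
        if not frame_id:  # skip blank lines
            continue
        pairs.append((data_root / "img_val" / f"{frame_id}.png",
                      data_root / "depth_val" / f"{frame_id}.npy"))
    return pairs
```

Each depth path can then be loaded with numpy.load and checked for a shape that matches the corresponding image.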

| Name | Arch | Expert | MAE | AbsRel | RMSE | acc@1.25 | acc@1.25^2 | acc@1.25^3 | Download |
|---|---|---|---|---|---|---|---|---|---|
| DistDepth-finetuned | ResNet152 | DPT on NYUv2 | 0.308 | 0.113 | 0.444 | 87.3 | 97.3 | 99.3 | model |
| DistDepth-SimSIN | ResNet152 | DPT | 0.411 | 0.163 | 0.563 | 78.0 | 93.6 | 98.1 | model |

Change train_filenames (dummy) and val_filenames in execute_func.py to NYUv2. Then,

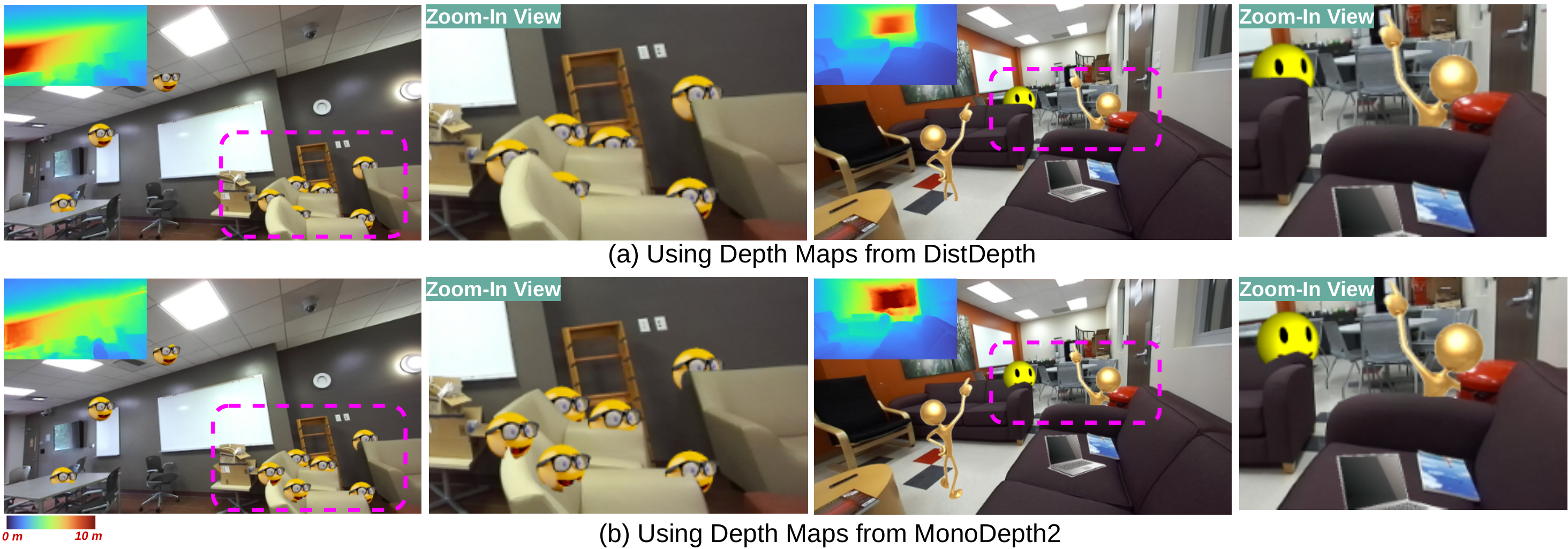

python execute.py --exe eval_measure --log_dir='./tmp' --data_path NYUv2 --dataset NYUv2 --batch_size 1 --load_weights_folder <path to weights> --models_to_load encoder depth --width 256 --height 256 --max_depth 12 --frame_ids 0 --num_layers 152Virtual object insertion:

Dragging objects along a trajectory:
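We do not know how these AR demos are implemented. A common way to make inserted objects respect real geometry is a per-pixel depth test against the predicted scene depth, sketched below. The sketch assumes the virtual object has already been rendered into the same camera view, giving hypothetical inputs obj_rgb and obj_depth (with np.inf wherever the object does not cover a pixel).

```python
import numpy as np


def composite_with_occlusion(scene_rgb, scene_depth, obj_rgb, obj_depth):
    """Insert a rendered virtual object into a photo using a per-pixel z-test.

    The object is drawn only where it is closer to the camera than the
    predicted scene depth, so real surfaces in front of it occlude it.
    obj_depth should be np.inf where the object is absent.
    """
    obj_rgb = np.asarray(obj_rgb)
    visible = np.asarray(obj_depth) < np.asarray(scene_depth)  # HxW boolean mask
    out = np.array(scene_rgb, copy=True)  # leave the input image untouched
    out[visible] = obj_rgb[visible]
    return out
```

Dragging an object along a trajectory amounts to re-rendering obj_rgb and obj_depth at each new position and repeating the same test per frame.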

    @inproceedings{wu2022toward,
      title={Toward Practical Monocular Indoor Depth Estimation},
      author={Wu, Cho-Ying and Wang, Jialiang and Hall, Michael and Neumann, Ulrich and Su, Shuochen},
      booktitle={CVPR},
      year={2022}
    }

DistDepth is CC-BY-NC licensed, as found in the LICENSE file.