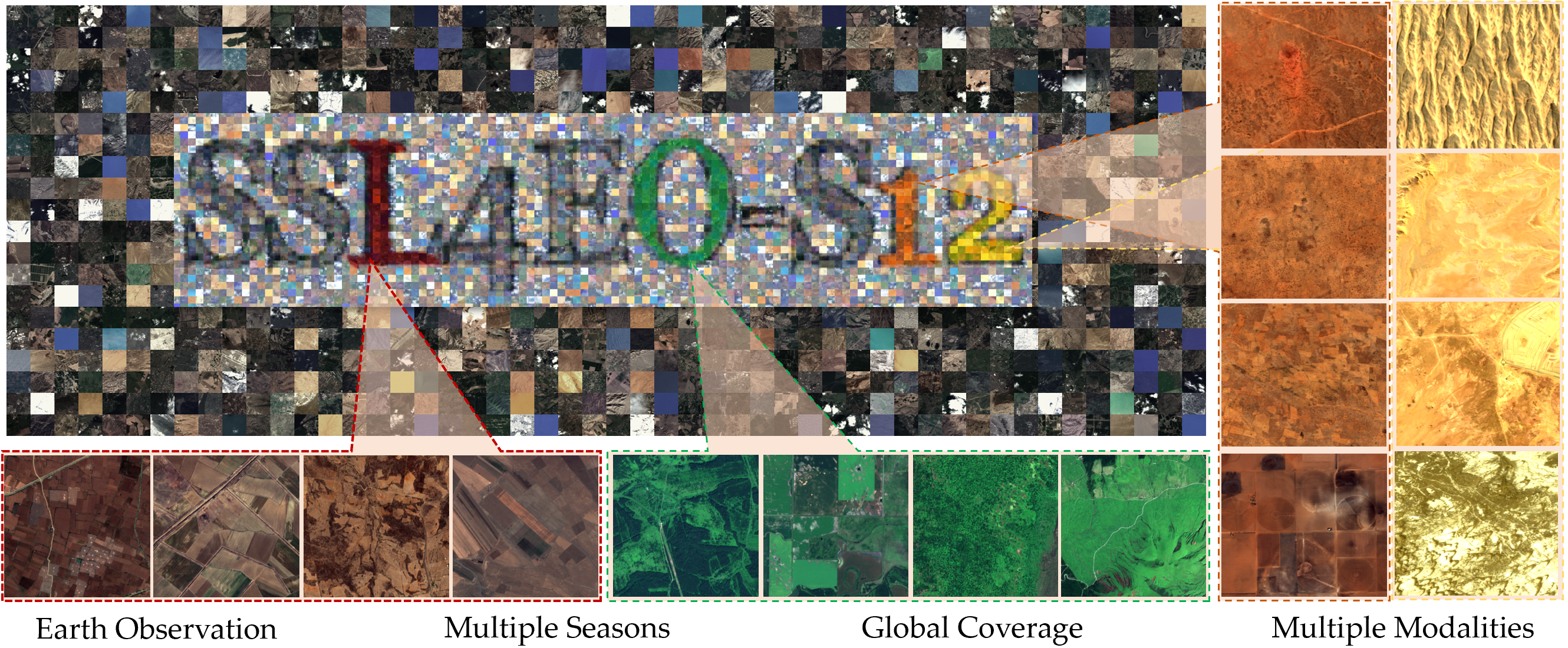

The SSL4EO-S12 dataset is a large-scale, multimodal, multitemporal dataset for unsupervised/self-supervised pre-training in Earth observation. The dataset consists of unlabeled patch triplets (Sentinel-1 dual-pol SAR, Sentinel-2 top-of-atmosphere multispectral, Sentinel-2 surface reflectance multispectral) from 251079 locations across the globe, each patch covering 2640 m × 2640 m and including four seasonal time stamps.
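As a rough sanity check on the numbers above, the sketch below derives the patch side length in pixels and an upper bound on the total patch count. It assumes the standard Sentinel-2 band resolutions of 10 m, 20 m and 60 m (not stated in this README) and that every location has all four seasons in all three modalities. The function names are illustrative only.

```python
# Figures taken from the dataset description above.
NUM_LOCATIONS = 251079
NUM_SEASONS = 4
NUM_MODALITIES = 3  # S1 SAR, S2 top-of-atmosphere, S2 surface reflectance
PATCH_SIDE_M = 2640


def patch_side_pixels(resolution_m: int) -> int:
    """Pixels along one side of a patch at the given ground resolution (assumed)."""
    return PATCH_SIDE_M // resolution_m


def total_patches() -> int:
    """Upper bound on single-date, single-modality patches in the dataset."""
    return NUM_LOCATIONS * NUM_SEASONS * NUM_MODALITIES


if __name__ == "__main__":
    for res in (10, 20, 60):  # assumed Sentinel-2 band resolutions in metres
        print(f"{res} m -> {patch_side_pixels(res)} px per side")
    print(f"total patches (upper bound): {total_patches()}")
```

Under these assumptions a patch is 264 pixels wide at 10 m resolution, and the dataset holds at most 3,012,948 individual patches.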

- Raw dataset: The full SSL4EO-S12 dataset (1.5TB, 500GB for each modality) is accessible at mediaTUM. There are some void IDs (gaps in folder names); see `data/void_ids.csv`. Center coordinates of all locations are available here.

- Example subset: An example 100-patch subset (600MB) is available at Google Drive.

- Compressed dataset: A compressed 8-bit version (20-50GB for each modality, including an RGB version) is available at mediaTUM. The raw 16/32-bit values are normalized by mean and std and converted to uint8, then stored as GeoTIFF with the default JPEG compression at quality 75. Note: in our experiments, 8-bit input (without JPEG compression) performs comparably to 16-bit input.

- A 50k (random) RGB subset (18GB) is available here (link broken). For sample IDs, see `data/50k_ids_random.csv`.
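The 8-bit conversion described for the compressed dataset (normalize by mean and std, then cast to uint8) can be sketched as follows. This is a minimal illustration, not the exact script used to build the release: the window of two standard deviations around the mean (`n_std=2.0`), the function name `to_uint8`, and the example statistics are all assumptions. The JPEG compression step is not shown.

```python
import numpy as np


def to_uint8(band, mean, std, n_std=2.0):
    """Map raw band values to uint8 using per-band mean/std statistics.

    Values in [mean - n_std*std, mean + n_std*std] are stretched linearly
    to [0, 255]; values outside that window are clipped.
    The n_std=2.0 window is an assumption made for this sketch.
    """
    band = np.asarray(band, dtype=np.float32)
    lo = mean - n_std * std
    hi = mean + n_std * std
    scaled = (band - lo) / (hi - lo) * 255.0
    return np.clip(scaled, 0, 255).astype(np.uint8)


if __name__ == "__main__":
    # Hypothetical statistics for one band, chosen only for illustration.
    raw = np.array([-500, 0, 1000, 2000, 5000], dtype=np.float32)
    print(to_uint8(raw, mean=1000.0, std=500.0))
```

Clipping to a fixed window around the mean trades a small loss of dynamic range at the extremes for better use of the 256 available levels on typical values.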

Updates

- For faster access in some regions, we host a copy of the data on HuggingFace. Note that only the original data on mediaTUM has a proper DOI.

- We've received feedback that the compressed dataset (with JPEG compression) shows a performance drop compared to the raw data, possibly because the compression is lossy. We plan to update it with a lossless version (at the cost of larger files). Also, does your server have an inode limit (a cap on the number of individual files)? We could consider providing a single resampled GeoTIFF containing all bands (as in SSL4EO-L). If you have any issues or requests for updates, let us know!

See `src/download_data` for instructions on downloading Sentinel or other products from Google Earth Engine.

Pre-trained models for different SSL methods are provided below (13 bands of S2-L1C, 100 epochs, input divided by 10000 and clipped to [0,1]).

| SSL method | Arch | BigEarthNet* | EuroSAT | So2Sat-LCZ42 | Download | Usage |
|---|---|---|---|---|---|---|
| MoCo | ResNet50 | 91.8% | 99.1% | 60.9% | full ckpt, backbone, logs | define model, load weights |
| MoCo | ViT-S/16 | 89.9% | 98.6% | 61.6% | full ckpt, backbone, logs | define model, load weights |
| DINO | ResNet50 | 90.7% | 99.1% | 63.6% | full ckpt, backbone, logs | define model, load weights |
| DINO | ViT-S/16 | 90.5% | 99.0% | 62.2% | full ckpt, backbone, logs | define model, load weights |
| MAE | ViT-S/16 | 88.9% | 98.7% | 63.9% | full ckpt, backbone, logs | define model, load weights |
| Data2vec | ViT-S/16 | 90.3% | 99.1% | 64.8% | full ckpt, backbone, logs | define model, load weights |

\* Note: the BigEarthNet results are based on the train/val split used in SeCo and in "In-domain representation learning for RS".
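The input preprocessing noted for these models (divide by 10000, clip to [0,1]) can be sketched as below. This is one reading of that note rather than the repository's own transform: the function name `scale_s2_l1c` and the assumed `(13, H, W)` array layout are illustrative.

```python
import numpy as np


def scale_s2_l1c(patch):
    """Scale raw S2-L1C values to [0, 1] by dividing by 10000 and clipping.

    `patch` is assumed to be an array of raw integer values, e.g. with
    shape (13, H, W); the layout is an assumption made for this sketch.
    """
    scaled = np.asarray(patch, dtype=np.float32) / 10000.0
    return np.clip(scaled, 0.0, 1.0)


if __name__ == "__main__":
    # Tiny synthetic example; real patches would be read from GeoTIFF files.
    raw = np.array([0, 5000, 10000, 20000], dtype=np.uint16)
    print(scale_s2_l1c(raw))
```

Values above 10000 saturate at 1.0 after clipping, so the model sees a bounded input range.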

Other pre-trained models:

| SSL method | Arch | Input | Download |
|---|---|---|---|
| MoCo | ResNet18 | S2-L1C 13 bands | full ckpt, backbone, logs |
| MoCo | ResNet18 | S2-L1C RGB | full ckpt, full ckpt ep200, backbone, logs |
| MoCo | ResNet50 | S2-L1C RGB | full ckpt, backbone, logs |
| MoCo | ResNet50 | S1 SAR 2 bands | full ckpt, backbone, logs |
| MAE | ViT-S/16 | S1 SAR 2 bands | full ckpt, backbone |
| MAE | ViT-B/16 | S1 SAR 2 bands | full ckpt, backbone |
| MAE | ViT-L/16 | S1 SAR 2 bands | full ckpt, backbone |
| MAE | ViT-H/14 | S1 SAR 2 bands | full ckpt, backbone |
| MAE | ViT-B/16 | S2-L1C 13 bands | full ckpt, backbone |
| MAE | ViT-L/16 | S2-L1C 13 bands | full ckpt, backbone |
| MAE | ViT-H/14 | S2-L1C 13 bands | full ckpt, backbone |

* The pretrained models are also available in TorchGeo.

This repository is released under the Apache 2.0 license. The dataset and pretrained model weights are released under the CC-BY-4.0 license.

@article{wang2022ssl4eo,
  title={SSL4EO-S12: A Large-Scale Multi-Modal, Multi-Temporal Dataset for Self-Supervised Learning in Earth Observation},
  author={Wang, Yi and Braham, Nassim Ait Ali and Xiong, Zhitong and Liu, Chenying and Albrecht, Conrad M and Zhu, Xiao Xiang},
  journal={arXiv preprint arXiv:2211.07044},
  year={2022}
}