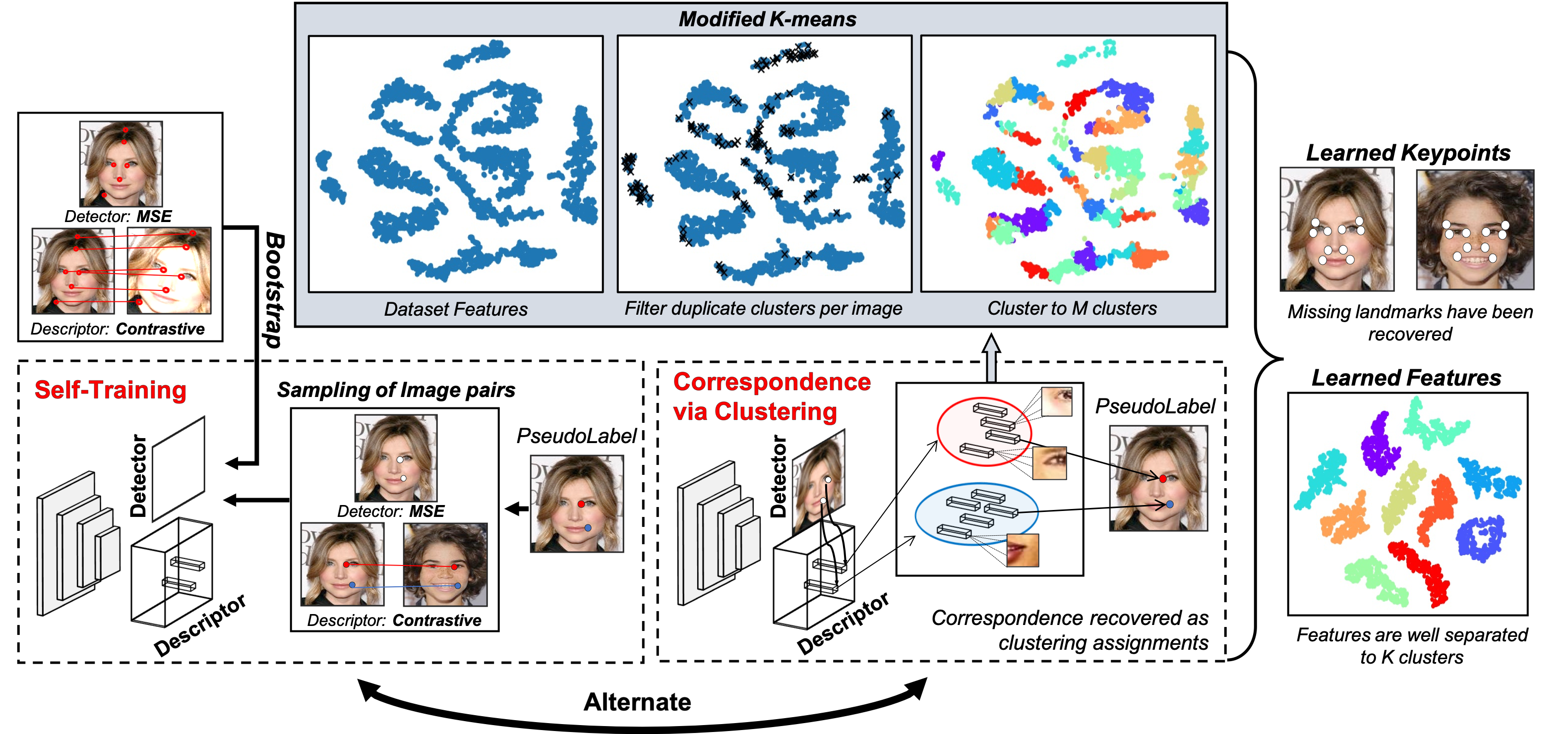

From Keypoints to Object Landmarks via Self-Training Correspondence: A novel approach to Unsupervised Landmark Discovery

Dimitrios Mallis, Enrique Sanchez, Matt Bell, Georgios Tzimiropoulos

This repository contains the training and evaluation code for "From Keypoints to Object Landmarks via Self-Training Correspondence: A novel approach to Unsupervised Landmark Discovery". This software learns a deep landmark detector directly from raw images of a specific object category, without requiring any manual annotations.

Dataloaders and pretrained models for more datasets will be released soon!

Data Preparation

CelebA

CelebA can be found here. Download the .zip file into an empty directory and unzip it. We provide precomputed bounding boxes and 68-point annotations (for evaluation only) in data/CelebA.

Installation

You need a reasonably powerful CUDA-capable GPU. This project was developed on Linux.

Create a new conda environment and activate it:

conda create -n KeypToLandEnv python=3.8

conda activate KeypToLandEnv

Install PyTorch and the faiss library:

conda install pytorch torchvision cudatoolkit=10.2 -c pytorch

conda install -c pytorch faiss-gpu cudatoolkit=10.2

Install the remaining external dependencies using pip and create the results directory:

pip install -r requirements.txt

mkdir Results
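Before moving on, it can help to confirm that the packages installed above are importable in the active environment. The snippet below is a minimal sketch, not part of the repository: the module list is an assumption based on the install commands above (`faiss` is the import name of the faiss-gpu package), and it only reports what is missing.

```python
import importlib.util

# Modules the install steps above should provide (assumed list; "faiss" comes from faiss-gpu).
REQUIRED_MODULES = ["torch", "torchvision", "faiss"]


def missing_modules(names):
    """Return the subset of top-level module `names` that cannot be found on the Python path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        import torch  # imported only after confirming it is installed

        print("All modules found. CUDA available:", torch.cuda.is_available())
```

Run it from inside the activated conda environment; a `False` for CUDA usually means the PyTorch build or driver does not match the GPU.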

Our method is bootstrapped by SuperPoint. Download the weights for a pretrained SuperPoint model from here.

Before running the code, update paths/main.yaml so that it includes all the required paths. Edit the following entries in paths/main.yaml:

CelebA_datapath: <pathToCelebA_database>/celeb/Img/img_align_celeba_hq/

path_to_superpoint_checkpoint: <pathToSuperPointCheckPoint>/superpoint_v1.pth
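A quick way to catch typos in these entries is to check that every configured path exists. The sketch below is hypothetical and not shipped with the repository; to avoid a PyYAML dependency it assumes paths/main.yaml is a flat list of `key: value` lines, so it is not a general YAML parser.

```python
import os

# Entries this README asks you to set in paths/main.yaml.
REQUIRED_KEYS = ["CelebA_datapath", "path_to_superpoint_checkpoint"]


def read_flat_yaml(path):
    """Parse flat `key: value` lines (assumption: no nesting, lists, or '#' inside values)."""
    entries = {}
    with open(path) as f:
        for line in f:
            line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
            if ":" in line:
                key, value = line.split(":", 1)
                entries[key.strip()] = value.strip().strip("'\"")
    return entries


def check_paths(config_path, keys=REQUIRED_KEYS):
    """Return one message per key that is unset or points to a non-existent path."""
    entries = read_flat_yaml(config_path)
    problems = []
    for key in keys:
        if key not in entries:
            problems.append(f"{key}: not set")
        elif not os.path.exists(entries[key]):
            problems.append(f"{key}: {entries[key]} does not exist")
    return problems


if __name__ == "__main__":
    config = "paths/main.yaml"
    if not os.path.exists(config):
        print(f"{config} not found; run this from the repository root")
    else:
        problems = check_paths(config)
        print("\n".join(problems) if problems else "All configured paths exist.")
```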

Testing

Stage 1

To evaluate the first stage of the algorithm execute:

python eval.py --experiment_name <experiment_name> --dataset_name <dataset_name> --K <K> --stage 1

The last checkpoint stored in _Results/\<experiment\_name\>/CheckPoints/_ will be loaded automatically. If you want to evaluate a particular checkpoint or pretrained model, use the path_to_checkpoint argument.
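The selection rule described above (an explicit checkpoint wins, otherwise the last one in the experiment's CheckPoints directory) can be sketched as follows. This is a hypothetical helper, not the repository's code: it assumes "last" means most recently modified, whereas eval.py may instead order checkpoints by epoch number in the file name.

```python
import os


def resolve_checkpoint(experiment_name, path_to_checkpoint=None, results_dir="Results"):
    """Hypothetical helper: return an explicit checkpoint if given, else the newest file
    in <results_dir>/<experiment_name>/CheckPoints/ (newest = latest modification time)."""
    if path_to_checkpoint is not None:
        return path_to_checkpoint
    ckpt_dir = os.path.join(results_dir, experiment_name, "CheckPoints")
    files = [os.path.join(ckpt_dir, name) for name in os.listdir(ckpt_dir)]
    files = [f for f in files if os.path.isfile(f)]
    if not files:
        raise FileNotFoundError(f"no checkpoints found in {ckpt_dir}")
    return max(files, key=os.path.getmtime)
```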

Stage 2

Similarly, to evaluate the second stage of the algorithm, execute:

python eval.py --experiment_name <experiment_name> --dataset_name <dataset_name> --K <K> --stage 2

Cumulative forward and backward error curves will be stored in _Results/\<experiment\_name\>/Logs/_.
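For readers unfamiliar with cumulative error curves: each point gives the fraction of test images whose error is at or below a threshold. The minimal sketch below assumes the per-image errors are already computed and normalized (how eval.py computes and normalizes them is not covered here); a forward and a backward curve differ only in which errors are passed in. The toy values are illustrative, not results from the paper.

```python
def cumulative_error_curve(errors, thresholds):
    """Return, for each threshold, the fraction of images with error <= threshold."""
    if not errors:
        raise ValueError("errors must be non-empty")
    n = len(errors)
    return [sum(e <= t for e in errors) / n for t in thresholds]


if __name__ == "__main__":
    errors = [0.02, 0.05, 0.05, 0.08, 0.12]  # toy per-image errors
    thresholds = [0.0, 0.05, 0.10, 0.15]
    print(cumulative_error_curve(errors, thresholds))  # → [0.0, 0.6, 0.8, 1.0]
```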

Training

To execute the first step of our method, run:

python Train_Step1.py --dataset_name <dataset_name> --experiment_name <experiment_name> --K <K>

Similarly, to execute the second step, run:

python Train_Step2.py --dataset_name <dataset_name> --experiment_name <experiment_name> --K <K>

where \<dataset_name\> is "CelebA" and \<experiment_name\> is a custom name you choose for each experiment. Use the same experiment name for both the first and the second step: the software will automatically initialize the second step with the ground truth discovered in step one.
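Because both steps (and both evaluation stages) must share one experiment name, it can be convenient to generate the commands from a single set of arguments. The sketch below is a hypothetical wrapper, not part of the repository; it only uses the script names and flags shown above, and `"celeba_K10"` is an illustrative experiment name. By default it performs a dry run and just prints the commands.

```python
import shlex
import subprocess


def pipeline_commands(dataset_name, experiment_name, K):
    """Build the training and evaluation commands from this README, sharing one experiment name."""
    common = ["--dataset_name", dataset_name, "--experiment_name", experiment_name, "--K", str(K)]
    return [
        ["python", "Train_Step1.py", *common],
        ["python", "eval.py", *common, "--stage", "1"],
        ["python", "Train_Step2.py", *common],
        ["python", "eval.py", *common, "--stage", "2"],
    ]


def run_pipeline(dataset_name, experiment_name, K, dry_run=True):
    for cmd in pipeline_commands(dataset_name, experiment_name, K):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raise and stop at the first failing step


if __name__ == "__main__":
    run_pipeline("CelebA", "celeba_K10", 10)  # dry run: prints the four commands only
```

Set `dry_run=False` from the repository root to actually execute the steps in order.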

Pretrained Models

We also provide pretrained models, which can be used with the testing script to produce visual results.

| Dataset | K | Stage | Model |
|---|---|---|---|
| CelebA | 10 | 1 | link |
| CelebA | 10 | 2 | link |
| CelebA | 30 | 1 | link |
| CelebA | 30 | 2 | link |

Citation

If you find this work useful, please consider citing:

@article{Mallis2022FromKT,
  title={From Keypoints to Object Landmarks via Self-Training Correspondence: A novel approach to Unsupervised Landmark Discovery},
  author={Dimitrios Mallis and Enrique Sanchez and Matt Bell and Georgios Tzimiropoulos},
  journal={ArXiv},
  year={2022},
  volume={abs/2205.15895}
}