TextBox (妙笔)

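“When Li Taibai was young, he dreamed that flowers bloomed from the tip of his writing brush; afterwards his talent flourished and his name became known throughout the land.” (Wang Renyu, Kaiyuan Tianbao Yishi, "Dreaming of Flowers Blooming from the Brush Tip")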


TextBox is developed based on Python and PyTorch for reproducing and developing text generation algorithms in a unified, comprehensive and efficient framework for research purposes. Our library includes 21 text generation algorithms, covering two major tasks:

- Unconditional (input-free) Generation

- Conditional (Seq2Seq) Generation, including Machine Translation, Text Summarization, Attribute-to-Text, and Dialogue Systems

We support 9 benchmark text generation datasets. Users can apply our library to process the original data, or simply download the datasets already processed by our team.

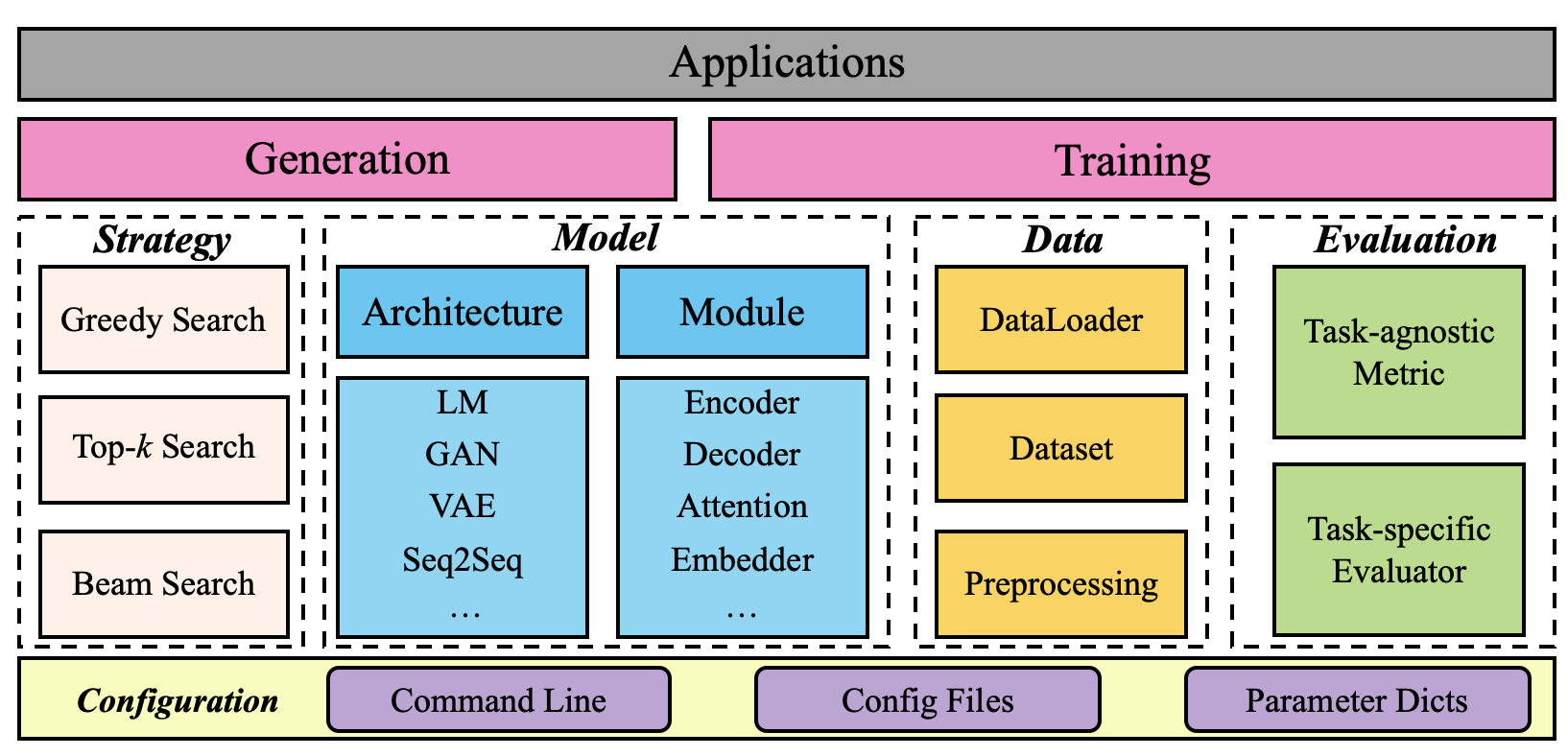

Figure: The Overall Architecture of TextBox

Feature

- Unified and modularized framework. TextBox is built upon PyTorch and designed to be highly modularized, by decoupling diverse models into a set of highly reusable modules.

- Comprehensive models, benchmark datasets and standardized evaluations. TextBox also contains a wide range of text generation models, covering the categories of VAE, GAN, RNN or Transformer based models, and pre-trained language models (PLM).

- Extensible and flexible framework. TextBox provides convenient interfaces to common functions and modules used in text generation models, such as the RNN encoder-decoder, the Transformer encoder-decoder and pre-trained language models.

- Easy and convenient to get started. TextBox provides flexible configuration files, which allow newcomers to run experiments without modifying source code, and allow researchers to conduct qualitative analysis by modifying a few configurations.

Installation

TextBox requires:

- Python >= 3.6.2

- torch >= 1.6.0. Please follow the official instructions to install the appropriate version according to your CUDA version and NVIDIA driver version.

- GCC >= 5.1.0

Install from pip

pip install textbox

If you face a problem when installing fast_bleu on Linux, please ensure GCC >= 5.1.0. On Windows, you can use the wheels in fast_bleu_wheel4windows for installation. On macOS, you can install it with the following command:

pip install fast-bleu --install-option="--CC=<path-to-gcc>" --install-option="--CXX=<path-to-g++>"

After installing fast_bleu successfully, just reinstall textbox.

Install from source

git clone https://github.com/RUCAIBox/TextBox.git && cd TextBox

pip install -e . --verbose

Quick-Start

Start from source

With the source code, you can use the provided script for initial usage of our library:

python run_textbox.py

This script runs the RNN model on the COCO dataset to conduct unconditional generation. Typically, this example takes a few minutes. The output log will look like example.log.

If you want to change the parameters, such as rnn_type, max_vocab_size, just set the additional command parameters as you need:

python run_textbox.py --rnn_type=lstm --max_vocab_size=4000

You can also modify the YAML configuration files in the corresponding dataset and model properties folders and include them in the command line.

If you want to change the model, the dataset or the task type, just run the script by modifying corresponding command parameters:

python run_textbox.py --model=[model_name] --dataset=[dataset_name]

model_name is the model to be run, such as RNN and BART. The models we have implemented can be found in Model.

If you want to change the datasets, please refer to Dataset.
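The command-line overrides above all follow the same `--key=value` pattern, so a series of runs can be scripted. The helper below is a minimal sketch and not part of TextBox: `build_command` is a hypothetical name, and it only assembles an argument list for `run_textbox.py` from the flags shown above.

```python
import sys


def build_command(model, dataset, script="run_textbox.py", **overrides):
    """Assemble an argument list for run_textbox.py (hypothetical helper).

    Only the --key=value pattern shown in this README is assumed.
    """
    cmd = [sys.executable, script, f"--model={model}", f"--dataset={dataset}"]
    # Sort the extra parameters so the generated command is deterministic.
    for key, value in sorted(overrides.items()):
        cmd.append(f"--{key}={value}")
    return cmd


if __name__ == "__main__":
    cmd = build_command("RNN", "COCO", rnn_type="lstm", max_vocab_size=4000)
    print(" ".join(cmd))
    # To actually launch the run from the TextBox source directory:
    # import subprocess; subprocess.run(cmd, check=True)
```

Returning a list instead of a single string lets the result be passed directly to `subprocess.run` without shell quoting concerns.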

Start from API

If TextBox is installed from pip, you can create a new Python file, download the dataset, and then write and run the following code:

from textbox.quick_start import run_textbox

run_textbox(config_dict={'model': 'RNN',
                         'dataset': 'COCO',
                         'data_path': './dataset'})

This will train and test the RNN model on the COCO dataset.

If you want to run different models, parameters or datasets, the operations are the same as in Start from source.

Use Pretrained Language Model

In most cases, only the following two parameters need to be passed in addition:

--tokenize_strategy=none

--pretrained_model_path='<model_name_or_path>'

For example, to use T5 for a summarization task such as CNNDM, run:

python run_textbox.py --model=t5 --dataset=CNNDM --tokenize_strategy=none --pretrained_model_path='<model_name_or_path>'

The list of model names can be found in enum_type.py.

If you want to add a task prefix or suffix, just add two additional parameters:

--prefix_prompt='<prefix>'

--suffix_prompt='<suffix>'

Note: the prompts will be added to the beginning and end of the source ids.
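To make the note concrete, the following minimal sketch shows one way prompt ids could wrap a tokenized source sequence. It is an illustration only: `add_prompts` is a hypothetical function, the token ids are made up, and the truncation rule (shorten the source, keep both prompts) is an assumption, since this README does not say how TextBox handles length limits or special tokens.

```python
def add_prompts(source_ids, prefix_ids=(), suffix_ids=(), max_length=None):
    """Wrap tokenized source ids with prompt ids (illustrative only)."""
    prefix_ids, suffix_ids = list(prefix_ids), list(suffix_ids)
    source_ids = list(source_ids)
    if max_length is not None:
        # Assumption: truncate the source, not the prompts, so both prompts survive.
        budget = max(max_length - len(prefix_ids) - len(suffix_ids), 0)
        source_ids = source_ids[:budget]
    return prefix_ids + source_ids + suffix_ids


if __name__ == "__main__":
    # Made-up ids: 1 stands for a prefix token, 2 for a suffix token.
    print(add_prompts([5, 6, 7], prefix_ids=[1], suffix_ids=[2]))
    print(add_prompts([5, 6, 7], prefix_ids=[1], suffix_ids=[2], max_length=4))
```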

If you want to add label smoothing during training, add one additional parameter:

--label_smoothing=<smooth loss weight>

For some translation models, such as m2m100 and mbart, you need to specify the source language and the target language:

m2m100: en -> zh

--src_lang='en'

--tgt_lang='zh'

mbart: en -> zh

--src_lang='en_XX'

--tgt_lang='zh_CN'

Train with Distributed Data Parallel (DDP)

TextBox supports training models on multiple GPUs. You don't need to modify the model, just run the following command:

python -m torch.distributed.launch --nproc_per_node=[gpu_num] \
       run_textbox.py --model=[model_name] \
       --dataset=[dataset_name] --gpu_id=[gpu_ids] --DDP=True

gpu_num is the number of GPUs you want to train with (such as 4), and gpu_ids is the list of usable GPU ids (such as 0,1,2,3).

Note: we currently support DDP only for end-to-end models. We will add support for non-end-to-end models, such as GANs, in the future.
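Because gpu_num and gpu_ids must agree, it can help to derive one from the other when scripting launches. The sketch below is a hypothetical helper (`ddp_command` is not part of TextBox) that builds the command shown above from a list of GPU ids and sets `--nproc_per_node` to the length of that list.

```python
def ddp_command(model, dataset, gpu_ids):
    """Build the DDP launch command from a list of GPU ids (hypothetical helper)."""
    gpu_ids = list(gpu_ids)
    if not gpu_ids:
        raise ValueError("at least one GPU id is required")
    if len(set(gpu_ids)) != len(gpu_ids):
        raise ValueError("GPU ids must be unique")
    return [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={len(gpu_ids)}",  # gpu_num is derived from gpu_ids
        "run_textbox.py",
        f"--model={model}",
        f"--dataset={dataset}",
        "--gpu_id=" + ",".join(str(i) for i in gpu_ids),
        "--DDP=True",
    ]


if __name__ == "__main__":
    print(" ".join(ddp_command("RNN", "COCO", [0, 1, 2, 3])))
```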

Architecture

The figure above presents the overall architecture of our library. A run is driven by an experimental configuration, which can be obtained from files, the command line, or parameter dictionaries. The dataset and model are prepared and initialized according to the configured settings, and the execution module is responsible for training and evaluating models. Details of the interfaces can be found in our documentation.
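As a rough illustration of combining the three configuration sources, the sketch below merges a file-based configuration, command-line arguments and a parameter dictionary into one settings dict. The function names are hypothetical, and the precedence used (file, then command line, then dictionary, with later sources overriding earlier ones) is an assumption; TextBox's actual order of precedence is not stated here.

```python
def parse_cli(args):
    """Turn ['--key=value', ...] into a dict of strings."""
    parsed = {}
    for arg in args:
        if arg.startswith("--") and "=" in arg:
            key, value = arg[2:].split("=", 1)
            parsed[key] = value
    return parsed


def merge_config(file_config=None, cli_args=None, config_dict=None):
    """Merge settings from three sources; later sources override earlier ones.

    Assumed precedence (not confirmed by TextBox): file < command line < dict.
    """
    merged = {}
    for source in (file_config, parse_cli(cli_args or []), config_dict):
        merged.update(source or {})
    return merged


if __name__ == "__main__":
    settings = merge_config(
        file_config={"model": "RNN", "rnn_type": "gru"},
        cli_args=["--rnn_type=lstm"],
        config_dict={"dataset": "COCO"},
    )
    print(settings)
```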

Model

We implement 21 text generation models, covering unconditional generation and sequence-to-sequence generation, in the following table:

| Category | Model | Reference |
|---|---|---|
| VAE | LSTMVAE | (Bowman et al., 2016) |
| VAE | CNNVAE | (Yang et al., 2017) |
| VAE | HybridVAE | (Semeniuta et al., 2017) |
| VAE | CVAE | (Li et al., 2018) |
| GAN | SeqGAN | (Yu et al., 2017) |
| GAN | TextGAN | (Zhang et al., 2017) |
| GAN | RankGAN | (Lin et al., 2017) |
| GAN | MaliGAN | (Che et al., 2017) |
| GAN | LeakGAN | (Guo et al., 2018) |
| GAN | MaskGAN | (Fedus et al., 2018) |
| PLM | GPT-2 | (Radford et al., 2019) |
| PLM | XLNet | (Yang et al., 2019) |
| PLM | BERT2BERT | (Rothe et al., 2020) |
| PLM | BART | (Lewis et al., 2020) |
| PLM | T5 | (Raffel et al., 2020) |
| PLM | ProphetNet | (Qi et al., 2020) |
| Seq2Seq | RNN | (Sutskever et al., 2014) |
| Seq2Seq | Transformer | (Vaswani et al., 2017b) |
| Seq2Seq | Context2Seq | (Tang et al., 2016) |
| Seq2Seq | Attr2Seq | (Dong et al., 2017) |
| Seq2Seq | HRED | (Serban et al., 2016) |

Dataset

We have also collected 9 datasets that are commonly used for six tasks, which can be downloaded from Google Drive and Baidu Wangpan (Password: lwy6), including raw data and processed data.

We list the 9 datasets in the following table:

| Task | Dataset |
|---|---|
| Unconditional | Image COCO Caption |
| Unconditional | EMNLP2017 WMT News |
| Unconditional | IMDB Movie Review |
| Translation | IWSLT2014 German-English |
| Translation | WMT2014 English-German |
| Summarization | GigaWord |
| Dialog | Persona Chat |
| Attribute to Text | Amazon Electronic |
| Poem Generation | Chinese Classical Poetry Corpus |

You can also run our models on your own dataset. Just follow these three steps:

- Create a new folder under the dataset folder to hold your own corpus file, which should contain one sequence per line, e.g. dataset/YOUR_DATASET;

- Write a YAML configuration file with the same name to set the hyper-parameters of your dataset, e.g. textbox/properties/dataset/YOUR_DATASET.yaml.

  If your dataset is already split, set split_strategy: "load_split" in the YAML, as in the COCO yaml or IWSLT14_DE_EN yaml.

  If you want to split the dataset by ratio automatically, set split_strategy: "by_ratio" and your desired split_ratio in the YAML, as in the IMDB yaml.

- For unconditional generation, name the corpus file corpus.txt if you set "by_ratio", or name the corpus files train.txt, valid.txt, dev.txt if you set "load_split".

  For sequence-to-sequence generation, name the corpus files train.[xx/yy], valid.[xx/yy], dev.[xx/yy], where xx and yy are the suffixes of the source and target files, which should be consistent with source_suffix and target_suffix in the YAML.
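To illustrate what a ratio-based split does conceptually, here is a minimal sketch that divides the lines of a single corpus into three parts. It is not TextBox's implementation: `split_by_ratio` is a hypothetical function, and the three-fraction format of the ratio, the shuffling and the fixed seed are all assumptions made for the example.

```python
import random


def split_by_ratio(lines, split_ratio=(0.8, 0.1, 0.1), seed=0):
    """Split a list of sequences into three parts by ratio (illustrative only)."""
    if len(split_ratio) != 3 or abs(sum(split_ratio) - 1.0) > 1e-8:
        raise ValueError("split_ratio must contain three fractions that sum to 1")
    lines = list(lines)
    # Assumption: shuffle with a fixed seed so the split is reproducible.
    random.Random(seed).shuffle(lines)
    n_first = int(len(lines) * split_ratio[0])
    n_second = int(len(lines) * split_ratio[1])
    first = lines[:n_first]
    second = lines[n_first:n_first + n_second]
    third = lines[n_first + n_second:]  # the remainder absorbs rounding
    return first, second, third


if __name__ == "__main__":
    corpus = [f"sentence {i}" for i in range(10)]
    parts = split_by_ratio(corpus)
    print([len(p) for p in parts])
```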

Experiment Results

We have implemented various text generation models, and compared their performance on unconditional and conditional text generation tasks. We also show a few generated examples, and more examples can be found in generated_examples.

The following results were obtained with TextBox in preliminary experiments. Note that these algorithms were implemented and tuned based on our own understanding and experience, so they may not achieve their optimal performance. If you obtain a better result for a specific algorithm, please let us know. We will update this table after the results are verified.

Unconditional Generation

Image COCO Caption

Negative Log-Likelihood (NLL), BLEU and Self-BLEU (SBLEU) on test dataset:

| Model | NLL | BLEU-2 | BLEU-3 | BLEU-4 | BLEU-5 | SBLEU-2 | SBLEU-3 | SBLEU-4 | SBLEU-5 |

|---|---|---|---|---|---|---|---|---|---|

| RNNVAE | 33.02 | 80.46 | 51.5 | 25.89 | 11.55 | 89.18 | 61.58 | 32.69 | 14.03 |

| CNNVAE | 36.61 | 0.63 | 0.27 | 0.28 | 0.29 | 3.10 | 0.28 | 0.29 | 0.30 |

| HybridVAE | 56.44 | 31.96 | 3.75 | 1.61 | 1.76 | 77.79 | 26.77 | 5.71 | 2.49 |

| SeqGAN | 30.56 | 80.15 | 49.88 | 24.95 | 11.10 | 84.45 | 54.26 | 27.42 | 11.87 |

| TextGAN | 32.46 | 77.47 | 45.74 | 21.57 | 9.18 | 82.93 | 51.34 | 24.41 | 10.01 |

| RankGAN | 31.07 | 77.36 | 45.05 | 21.46 | 9.41 | 83.13 | 50.62 | 23.79 | 10.08 |

| MaliGAN | 31.50 | 80.08 | 49.52 | 24.03 | 10.36 | 84.85 | 55.32 | 28.28 | 12.09 |

| LeakGAN | 25.11 | 93.49 | 82.03 | 62.59 | 42.06 | 89.73 | 64.57 | 35.60 | 14.98 |

| MaskGAN | 95.93 | 58.07 | 21.22 | 5.07 | 1.88 | 76.10 | 43.41 | 20.06 | 9.37 |

| GPT-2 | 26.82 | 75.51 | 58.87 | 38.22 | 21.66 | 92.78 | 75.47 | 51.74 | 32.39 |

Some generated examples:

| Model | Examples |

|---|---|

| RNNVAE | people playing polo to eat in the woods . |

| LeakGAN | a man is standing near a horse on a lush green grassy field . |

| GPT-2 | cit a large zebra lays down on the ground. |

EMNLP2017 WMT News

NLL, BLEU and SBLEU on test dataset:

| Model | NLL | BLEU-2 | BLEU-3 | BLEU-4 | BLEU-5 | SBLEU-2 | SBLEU-3 | SBLEU-4 | SBLEU-5 |

|---|---|---|---|---|---|---|---|---|---|

| RNNVAE | 142.23 | 58.81 | 19.70 | 5.57 | 2.01 | 72.79 | 27.04 | 7.85 | 2.73 |

| CNNVAE | 164.79 | 0.82 | 0.17 | 0.18 | 0.18 | 2.78 | 0.19 | 0.19 | 0.20 |

| HybridVAE | 177.75 | 29.58 | 1.62 | 0.47 | 0.49 | 59.85 | 10.3 | 1.43 | 1.10 |

| SeqGAN | 142.22 | 63.90 | 20.89 | 5.64 | 1.81 | 70.97 | 25.56 | 7.05 | 2.18 |

| TextGAN | 140.90 | 60.37 | 18.86 | 4.82 | 1.52 | 68.32 | 23.24 | 6.10 | 1.84 |

| RankGAN | 142.27 | 61.28 | 19.81 | 5.58 | 1.82 | 67.71 | 23.15 | 6.63 | 2.09 |

| MaliGAN | 149.93 | 45.00 | 12.69 | 3.16 | 1.17 | 65.10 | 20.55 | 5.41 | 1.91 |

| LeakGAN | 162.70 | 76.61 | 39.14 | 15.84 | 6.08 | 85.04 | 54.70 | 29.35 | 14.63 |

| MaskGAN | 303.00 | 63.08 | 21.14 | 5.40 | 1.80 | 83.92 | 47.79 | 19.96 | 7.51 |

| GPT-2 | 88.01 | 55.88 | 21.65 | 5.34 | 1.40 | 75.67 | 36.71 | 12.67 | 3.88 |

Some generated examples:

| Model | Examples |

|---|---|

| RNNVAE | lewis holds us in total because they have had a fighting opportunity to hold any bodies when companies on his assault . |

| LeakGAN | we ' re a frustration of area , then we do coming out and play stuff so that we can be able to be ready to find a team in a game , but I know how we ' re going to say it was a problem . |

| GPT-2 | russ i'm trying to build a house that my kids can live in, too, and it's going to be a beautiful house. |

IMDB Movie Review

NLL, BLEU and SBLEU on test dataset:

| Model | NLL | BLEU-2 | BLEU-3 | BLEU-4 | BLEU-5 | SBLEU-2 | SBLEU-3 | SBLEU-4 | SBLEU-5 |

|---|---|---|---|---|---|---|---|---|---|

| RNNVAE | 445.55 | 29.14 | 13.73 | 4.81 | 1.85 | 38.77 | 14.39 | 6.61 | 5.16 |

| CNNVAE | 552.09 | 1.88 | 0.11 | 0.11 | 0.11 | 3.08 | 0.13 | 0.13 | 0.13 |

| HybridVAE | 318.46 | 38.65 | 2.53 | 0.34 | 0.31 | 70.05 | 17.27 | 1.57 | 0.59 |

| SeqGAN | 547.09 | 66.33 | 26.89 | 6.80 | 1.79 | 72.48 | 35.48 | 11.60 | 3.31 |

| TextGAN | 488.37 | 63.95 | 25.82 | 6.81 | 1.51 | 72.11 | 30.56 | 8.20 | 1.96 |

| RankGAN | 518.10 | 58.08 | 23.71 | 6.84 | 1.67 | 69.93 | 31.68 | 11.12 | 3.78 |

| MaliGAN | 552.45 | 44.50 | 15.01 | 3.69 | 1.23 | 57.25 | 22.04 | 7.36 | 3.26 |

| LeakGAN | 499.57 | 78.93 | 58.96 | 32.58 | 12.65 | 92.91 | 79.21 | 60.10 | 39.79 |

| MaskGAN | 509.58 | 56.61 | 21.41 | 4.49 | 0.86 | 92.09 | 77.88 | 59.62 | 42.36 |

| GPT-2 | 348.67 | 72.52 | 41.75 | 15.40 | 4.22 | 86.21 | 58.26 | 30.03 | 12.56 |

Some generated examples (with max_length=100):

| Model | Examples |

|---|---|

| RNNVAE | best brilliant known plot , sound movie , although unfortunately but it also like . the almost five minutes i will have done its bad numbers . so not yet i found the difference from with |

| LeakGAN | i saw this film shortly when I storms of a few concentration one before it all time . It doesn t understand the fact that it is a very good example of a modern day , in the <|unk|> , I saw it . It is so bad it s a <|unk|> . the cast , the stars , who are given a little |

| GPT-2 | be a very bad, low budget horror flick that is not worth watching and, in my humble opinion, not worth watching any time. the acting is atrocious, there are scenes that you could laugh at and the story, if you can call it that, was completely lacking in logic and |

Sequence-to-Sequence Generation

GigaWord (Summarization)

ROUGE metric on test dataset using beam search (with beam_size=5):

| Model | ROUGE-1 | ROUGE-2 | ROUGE-L | ROUGE-W |

|---|---|---|---|---|

| RNN with Attention | 36.32 | 17.63 | 38.36 | 25.08 |

| Transformer | 36.21 | 17.64 | 38.10 | 24.89 |

| BART | 39.34 | 20.07 | 41.25 | 27.13 |

| BERT2BERT | 38.16 | 18.89 | 40.06 | 26.21 |

| ProphetNet | 38.49 | 18.41 | 39.84 | 26.12 |

| T5 | 38.83 | 19.68 | 40.76 | 26.73 |

Some generated examples:

| Input / Model | Text |
|---|---|
| Article | japan 's nec corp. and computer corp. of the united states said wednesday they had agreed to join forces in supercomputer sales . |
| Gold Summary | nec in computer sales tie-up |
| RNN with Attention | nec computer corp . |
| Transformer | nec computer to join forces in chip sales |
| BART | nec computer corp. |
| BERT2BERT | nec computer form alliance for supercomputer sales |
| ProphetNet | nec computer to join forces in supercomputer sales |
| T5 | nec computer to join forces in supercomputer sales |

IWSLT2014 German-English (Translation)

BLEU metric on test dataset with three decoding strategies: top-k sampling, greedy search and beam search (with beam_size=5):

| Model | Strategy | BLEU-2 | BLEU-3 | BLEU-4 | BLEU |
|---|---|---|---|---|---|
| RNN with Attention | Top-k sampling | 26.68 | 16.95 | 10.85 | 19.66 |
| RNN with Attention | Greedy search | 33.74 | 23.03 | 15.79 | 26.23 |
| RNN with Attention | Beam search | 35.68 | 24.94 | 17.42 | 28.23 |
| Transformer | Top-k sampling | 30.96 | 20.83 | 14.16 | 23.91 |
| Transformer | Greedy search | 35.48 | 24.76 | 17.41 | 28.10 |
| Transformer | Beam search | 36.88 | 26.10 | 18.54 | 29.49 |
| BART | Beam search | 29.02 | 19.58 | 13.48 | 22.42 |
| BERT2BERT | Beam search | 27.61 | 18.41 | 12.46 | 21.07 |

Some generated examples:

| Input / Model | Text |
|---|---|
| Source (German) | wissen sie , eines der großen < unk > beim reisen und eine der freuden bei der < unk > forschung ist , gemeinsam mit den menschen zu leben , die sich noch an die alten tage erinnern können . die ihre vergangenheit noch immer im wind spüren , sie auf vom regen < unk > steinen berühren , sie in den bitteren blättern der pflanzen schmecken . |
| Gold Target (English) | you know , one of the intense pleasures of travel and one of the delights of < unk > research is the opportunity to live amongst those who have not forgotten the old ways , who still feel their past in the wind , touch it in stones < unk > by rain , taste it in the bitter leaves of plants . |
| RNN with Attention | you know , one of the great < unk > trips is a travel and one of the friends in the world & apos ; s investigation is located on the old days that you can remember the past day , you & apos ; re < unk > to the rain in the < unk > chamber of plants . |
| Transformer | you know , one of the great < unk > about travel , and one of the pleasure in the < unk > research is to live with people who remember the old days , and they still remember the wind in the wind , but they & apos ; re touching the < unk > . |
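For readers unfamiliar with the decoding strategies compared in this section, the sketch below contrasts a single step of greedy search with a single step of top-k sampling over a toy next-token distribution. It is a generic illustration with made-up probabilities, not TextBox's decoder; beam search, which keeps several partial hypotheses at once, is omitted for brevity.

```python
import random


def greedy_step(probs):
    """Pick the index of the most probable token."""
    return max(range(len(probs)), key=probs.__getitem__)


def top_k_step(probs, k, rng=random):
    """Sample one token index from the k most probable tokens.

    Sampling weights are the original probabilities of the kept tokens,
    which is equivalent to renormalizing over the top k.
    """
    top = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)[:k]
    weights = [probs[i] for i in top]
    return rng.choices(top, weights=weights, k=1)[0]


if __name__ == "__main__":
    probs = [0.10, 0.60, 0.25, 0.05]  # toy distribution over 4 tokens
    print("greedy:", greedy_step(probs))
    print("top-k (k=2):", top_k_step(probs, k=2, rng=random.Random(0)))
```

Greedy search is deterministic, while top-k sampling trades some likelihood for diversity, which is consistent with its lower BLEU scores in the table above.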

Persona Chat (Dialogue)

BLEU and distinct metrics on test dataset using beam search (with beam_size=5):

| Model | Distinct-1 | Distinct-2 | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |

|---|---|---|---|---|---|---|

| RNN with Attention | 0.24 | 0.72 | 17.51 | 4.65 | 2.11 | 1.47 |

| Transformer | 0.38 | 2.28 | 17.29 | 4.85 | 2.32 | 1.65 |

| HRED | 0.22 | 0.63 | 17.29 | 4.72 | 2.20 | 1.60 |
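The Distinct-n metric reported here measures lexical diversity. A common definition is the number of unique n-grams divided by the total number of n-grams across all generated responses; the sketch below implements that definition. Whether TextBox uses exactly this formula, and how the values in the table are scaled, is not stated here, so treat it as an assumption.

```python
def distinct_n(sentences, n):
    """Ratio of unique n-grams to total n-grams over tokenized sentences."""
    total = 0
    unique = set()
    for tokens in sentences:
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


if __name__ == "__main__":
    responses = [["i", "like", "tea"], ["i", "like", "coffee"]]
    print("Distinct-1:", distinct_n(responses, 1))
    print("Distinct-2:", distinct_n(responses, 2))  # 3 unique of 4 bigrams: 0.75
```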

Amazon Electronic (Attribute to text)

BLEU and distinct metrics on test dataset using beam search (with beam_size=5):

| Model | Distinct-1 | Distinct-2 | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 |

|---|---|---|---|---|---|---|

| Context2Seq | 0.07 | 0.39 | 17.21 | 2.80 | 0.83 | 0.43 |

| Attr2Seq | 0.14 | 2.81 | 17.14 | 2.81 | 0.87 | 0.48 |

Releases

| Releases | Date | Features |

|---|---|---|

| v0.2.1 | 15/04/2021 | TextBox |

| v0.1.5 | 01/11/2021 | Basic TextBox |

Contributing

Please let us know if you encounter a bug or have any suggestions by filing an issue.

We welcome all contributions from bug fixes to new features and extensions.

We expect all contributions to be discussed in the issue tracker and submitted through PRs.

We thank @LucasTsui0725 for contributing the HRED model and @Richar-Du for the CVAE model.

We thank @wxDai for contributing PointerNet and more than 20 language models in the transformers API.

Reference

If you find TextBox useful for your research or development, please cite the following paper:

@article{textbox,
  title={TextBox: A Unified, Modularized, and Extensible Framework for Text Generation},
  author={Junyi Li and Tianyi Tang and Gaole He and Jinhao Jiang and Xiaoxuan Hu and Puzhao Xie and Wayne Xin Zhao and Ji-Rong Wen},
  year={2021},
  journal={arXiv preprint arXiv:2101.02046}
}

The Team

TextBox is developed and maintained by AI Box.

License

TextBox is released under the MIT License.