HugeCTR, a component of NVIDIA Merlin Open Beta, is a GPU-accelerated recommender framework. It is designed to distribute training across multiple GPUs and nodes and to estimate Click-Through Rates (CTRs). HugeCTR supports model-parallel embedding tables and data-parallel neural networks and their variants such as Wide and Deep Learning (WDL), Deep Cross Network (DCN), DeepFM, and Deep Learning Recommendation Model (DLRM). For additional information, see the HugeCTR User Guide.

Design Goals:

- Fast: HugeCTR is a speed-of-light CTR model framework.

- Dedicated: HugeCTR provides the essentials so that you can train your CTR model in an efficient manner.

- Easy: Regardless of whether you are a data scientist or machine learning practitioner, we've made it easy for anybody to use HugeCTR.

We've tested HugeCTR's performance on the following systems:

- DGX-2 and DGX A100

- Versus TensorFlow (TF)

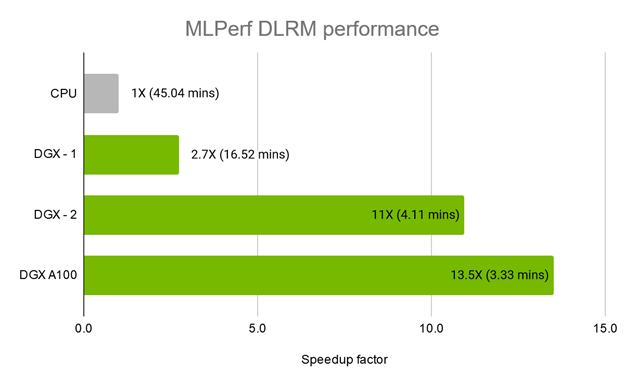

We submitted the DLRM benchmark with HugeCTR version 2.2 to MLPerf Training v0.7. The dataset was Criteo Terabyte Click Logs, which contains 4 billion user and item interactions over 24 days. The target machines were DGX-2 with 16 V100 GPUs and DGX A100 with eight A100 GPUs. Fig. 1 summarizes the performance. For more details, see this blog post.

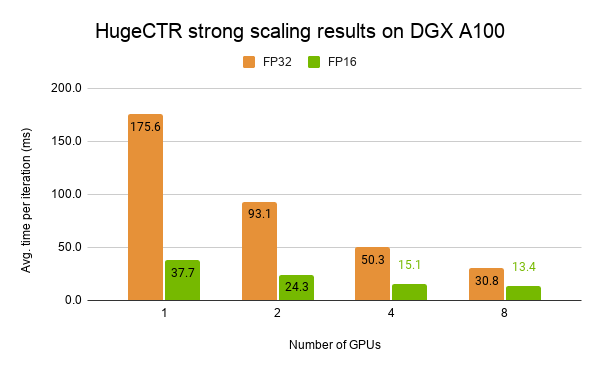

Fig. 2 shows the strong scaling result for both the full precision mode (FP32) and mixed-precision mode (FP16) on a single NVIDIA DGX A100. Bars represent the average iteration time in ms.
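To make the strong-scaling measurement concrete, the sketch below turns average iteration times into speedup and parallel efficiency. It is only an illustration: the function name `strong_scaling` is made up for this example, and the timings are placeholders, not values read from Fig. 2.

```python
def strong_scaling(iter_times_ms):
    """Map each GPU count to (speedup, efficiency) relative to the smallest count.

    iter_times_ms: dict of {gpu_count: average iteration time in ms}
    for a fixed global problem size (the strong-scaling setting).
    """
    base_gpus = min(iter_times_ms)
    base_time = iter_times_ms[base_gpus]
    results = {}
    for gpus, time_ms in sorted(iter_times_ms.items()):
        speedup = base_time / time_ms
        # Ideal speedup equals the factor by which the GPU count grew.
        efficiency = speedup / (gpus / base_gpus)
        results[gpus] = (speedup, efficiency)
    return results


if __name__ == "__main__":
    # Placeholder timings (ms); NOT measurements from this document.
    placeholder_times = {1: 80.0, 2: 44.0, 4: 25.0, 8: 16.0}
    for gpus, (speedup, eff) in strong_scaling(placeholder_times).items():
        print(f"{gpus} GPU(s): speedup {speedup:.2f}x, efficiency {eff:.0%}")
```

An efficiency close to 1.0 means that adding GPUs reduces the iteration time almost proportionally.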

In the TensorFlow test case below, HugeCTR running on only one V100 GPU achieves a speedup of up to 114x over TensorFlow running on a CPU server, with almost the same loss curve.

- Test environment:

  - CPU Server: Dual 20-core Intel(R) Xeon(R) CPU E5-2698 v4 @ 2.20GHz

  - TensorFlow version 2.0.0

  - V100 16GB: NVIDIA DGX1 servers

- Network:

  - Wide and Deep Learning: Nx 1024-unit FC layers with ReLU and dropout, emb_dim: 16; Optimizer: Adam for both Linear and DNN models

  - Deep Cross Network: Nx 1024-unit FC layers with ReLU and dropout, emb_dim: 16, 6x cross layers; Optimizer: Adam for both Linear and DNN models

- Dataset:

  - The data is provided by CriteoLabs. The original training set contains 45,840,617 examples. Each example contains a label (0 by default, or 1 if the ad was clicked) and 39 features, of which 13 are integer and 26 are categorical.

- Preprocessing:

  - Common: Preprocessed by using the scripts available in `tools/criteo_script`.

  - HugeCTR: Converted to the HugeCTR data format with `criteo2hugectr`.

  - TF: Converted to the TFRecord format for efficient training on TensorFlow.
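The dataset layout described above (one label, 13 integer features, 26 categorical features) can be sketched as a small parser. This is not the code in `tools/criteo_script`. It assumes the raw Criteo text layout of tab-separated fields with hexadecimal categorical values, and it fills missing values with 0, which is an arbitrary choice for this example. The name `parse_criteo_line` is hypothetical.

```python
NUM_INT_FEATURES = 13
NUM_CAT_FEATURES = 26
NUM_FIELDS = 1 + NUM_INT_FEATURES + NUM_CAT_FEATURES  # label + 39 features


def parse_criteo_line(line):
    """Split one raw record into (label, integer features, categorical keys).

    Assumptions (not stated in this README): fields are tab-separated,
    categorical values are hexadecimal strings, and empty fields mean
    "missing". Missing values are replaced with 0 here.
    """
    # Strip only the newline; trailing tabs mark empty (missing) fields.
    fields = line.rstrip("\n").split("\t")
    if len(fields) != NUM_FIELDS:
        raise ValueError(f"expected {NUM_FIELDS} fields, got {len(fields)}")

    label = int(fields[0])
    int_features = [int(v) if v else 0 for v in fields[1:1 + NUM_INT_FEATURES]]
    cat_features = [int(v, 16) if v else 0 for v in fields[1 + NUM_INT_FEATURES:]]
    return label, int_features, cat_features


if __name__ == "__main__":
    sample = "\t".join(["1"] + ["3"] * 13 + ["a1b2"] * 26) + "\n"
    label, ints, cats = parse_criteo_line(sample)
    print(label, len(ints), len(cats))
```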

We’ve implemented the following enhancements to improve usability and performance:

- Python Interface: To enhance the interoperability with NVTabular and other Python-based libraries, we're introducing a new Python interface for HugeCTR. If you are already using HugeCTR with JSON, the transition to Python will be seamless for you as you'll only have to locate the `hugectr.so` file and set the `PYTHONPATH` environment variable. You can still configure your model in your JSON config file, but the training options such as `batch_size` must be specified through `hugectr.solver_parser_helper()` in Python. For additional information regarding how to use the HugeCTR Python API and comprehend its API signature, see our Jupyter Notebook tutorial.

- HugeCTR Embedding with TensorFlow: To help users easily integrate HugeCTR's optimized embedding into their TensorFlow workflow, we now offer the HugeCTR embedding layer as a TensorFlow plugin. To better understand how to install, use, and verify it, see our Jupyter notebook tutorial. It also demonstrates how you can create a new Keras layer, `EmbeddingLayer`, based on the `hugectr_tf_ops.py` helper code that we provide.

- Model Oversubscription: To enable a model with large embedding tables that exceed a single GPU's memory limit, we added a new model prefetching feature, giving you the ability to load a subset of an embedding table into the GPU in a coarse-grained, on-demand manner during the training stage. To use this feature, you need to split your dataset into multiple sub-datasets while extracting the unique key sets from them. This feature can currently be used only with the `Norm` dataset format and its corresponding file list. This feature will eventually support all embedding types and dataset formats. We revised our `criteo2hugectr` tool to support the key set extraction for the Criteo dataset. For additional information, see our Python Jupyter Notebook to learn how to use this feature with the Criteo dataset. Note that the Criteo dataset is a common use case, but model prefetching is not limited to this dataset.

- TF32 Support: The third-generation Tensor Cores on Ampere support a novel math mode: TensorFloat-32 (TF32). TF32 uses the same 10-bit mantissa as FP16 to ensure accuracy while sporting the same range as FP32, thanks to using an 8-bit exponent. Because TF32 is an internal data type that accelerates FP32 GEMM computations with Tensor Cores, a user can simply turn it on with a newly added configuration option. For more details, see this link.

- Enhanced AUC Implementation: To enhance the performance of our AUC computation on multi-node environments, we redesigned our AUC implementation to improve how the computational load gets distributed across nodes.

  - NOTE: When using AUC as your evaluation metric, you may encounter a warning message like the one below, which implies that the specified GPU (GPU 3 in this example) suffers from a load imbalance. This can happen when the generated predictions are very far from a uniform distribution. This behavior is normal, although performance can be affected.

        [02d04h58m05s][HUGECTR][INFO]: GPU 3 has no samples in the AUC computation due to strongly uneven distribution of the scores. Performance may be impacted

- Epoch-Based Training: In addition to `max_iter`, a HugeCTR user can set `num_epochs` in the Solver clause of their JSON config file. This mode can currently be used only with `Norm` dataset formats and their corresponding file lists. All dataset formats will be supported in the future.

- Multi-Node Training Tutorial: To better support multi-node training use cases, we added a new step-by-step tutorial.

- Power Law Distribution Support with Data Generator: Because of the increased need for generating a random dataset whose categorical features follow the power-law distribution, we revised our data generation tool to support this use case. For additional information, refer to the `--long-tail` description here.

- Multi-GPU Preprocessing Script for Criteo Samples: Multiple GPUs can now be used when preparing the dataset for our samples. For additional information, see how `preprocess_nvt.py` is used to preprocess the Criteo dataset for DCN, DeepFM, and W&D samples.
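To illustrate the TF32 format mentioned in the list above (8-bit exponent, 10-bit mantissa), the sketch below emulates the reduced precision in plain Python by clearing the low 13 of the 23 FP32 mantissa bits. It is only an approximation for building intuition: it truncates instead of reproducing the rounding done by the hardware, it does not use HugeCTR or a GPU, and the name `truncate_to_tf32` is made up for this example.

```python
import struct

# FP32 layout: 1 sign bit, 8 exponent bits, 23 mantissa bits.
# Keeping the top 10 mantissa bits means clearing the low 13 bits.
TF32_MASK = 0xFFFFE000


def truncate_to_tf32(value):
    """Return `value` with its FP32 mantissa truncated to 10 bits.

    The exponent field is untouched, so the representable range stays
    the same as FP32 while the precision drops to roughly that of FP16.
    """
    bits = struct.unpack("<I", struct.pack("<f", value))[0]
    return struct.unpack("<f", struct.pack("<I", bits & TF32_MASK))[0]


if __name__ == "__main__":
    for x in (1.0, 1.0 + 2**-10, 1.0 + 2**-11, 3.14159265, 1e38):
        print(x, "->", truncate_to_tf32(x))
```

In this emulation an increment of `2**-10` next to 1.0 survives while `2**-11` is dropped, and a value as large as `1e38` still stays finite, because the exponent width matches FP32.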

Known Issues:

- Since the automatic plan file generator is not able to handle systems that contain one GPU, a user must manually create a JSON plan file with the following parameters and rename it using the name listed in the HugeCTR configuration file: `{"type": "all2all", "num_gpus": 1, "main_gpu": 0, "num_steps": 1, "num_chunks": 1, "plan": [[0, 0]], "chunks": [1]}`.

- If using a system that contains two GPUs with two NVLink connections, the auto plan file generator will print the following warning message: `RuntimeWarning: divide by zero encountered in true_divide`. This is an erroneous warning message and should be ignored.

- The current plan file generator doesn't support a system where the NVSwitch or a full peer-to-peer connection between all nodes is unavailable.

- Users need to set the `CUDA_DEVICE_ORDER` environment variable with `export CUDA_DEVICE_ORDER=PCI_BUS_ID` to ensure that the CUDA runtime and driver have a consistent GPU numbering.

- `LocalizedSlotSparseEmbeddingOneHot` only supports a single-node machine where all the GPUs are fully connected, such as through NVSwitch.

- HugeCTR version 2.2.1 crashes when running our DLRM sample on DGX2 due to a CUDA Graph issue. To run the sample on DGX2, disable the use of CUDA Graph with `"cuda_graph": false`, even if it degrades the performance a bit. We are working on fixing this issue. This issue doesn't exist when using the DGX A100.

- The model prefetching feature is only available in Python. Currently, a user can only use this feature with the `DistributedSlotSparseEmbeddingHash` embedding and the `Norm` dataset format on single GPUs. This feature will eventually support all embedding types and dataset formats.

- The HugeCTR embedding TensorFlow plugin only works with single-node machines.

- The HugeCTR embedding TensorFlow plugin assumes that the input keys are in `int64` and its output is in `float`.

- When using our embedding plugin, please note that the `fprop_v3` function, which is available in `tools/embedding_plugin/python/hugectr_tf_ops.py`, only works with `DistributedSlotSparseEmbeddingHash`.
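For the single-GPU plan file mentioned in the list above, one way to create the file is a few lines of Python. This is a minimal sketch. The parameters are copied from the text, but the helper name `write_single_gpu_plan` and the output file name are hypothetical. Use whatever plan file name your HugeCTR configuration file actually lists.

```python
import json

# Parameters copied from the single-GPU plan described in this document.
SINGLE_GPU_PLAN = {
    "type": "all2all",
    "num_gpus": 1,
    "main_gpu": 0,
    "num_steps": 1,
    "num_chunks": 1,
    "plan": [[0, 0]],
    "chunks": [1],
}


def write_single_gpu_plan(path):
    """Write the single-GPU plan to `path` and return the path."""
    with open(path, "w") as plan_file:
        json.dump(SINGLE_GPU_PLAN, plan_file)
    return path


if __name__ == "__main__":
    # Hypothetical file name; it must match the name referenced in
    # your HugeCTR configuration file.
    print(write_single_gpu_plan("all2all_plan.json"))
```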

To get started, see the HugeCTR User Guide.

If you'd like to quickly train a model using the Python interface, follow these five steps:

- Start a HugeCTR container from NVIDIA NGC by running the following command:

      docker run --runtime=nvidia --rm -it nvcr.io/nvidia/hugectr:v2.3

- Inside the container, copy the DCN JSON config file to your home directory or anywhere you want.

  This config file specifies the DCN model architecture and its optimizer. With any Python use case, the solver clause within the config file is not used at all.

- Generate a synthetic dataset based on the config file by running the following command:

      data_generator ./dcn.json ./dataset_dir 434428 1

  The following set of files is created: `./file_list.txt`, `./file_list_test.txt`, and `./dataset_dir/*`.

- Write a simple Python script that uses the `hugectr` module, as shown here:

      # train.py
      import sys
      import hugectr
      # Kept from the original example; importing mpi4py initializes MPI.
      from mpi4py import MPI

      def train(json_config_file):
          # Training options are set here rather than in the JSON solver clause.
          solver_config = hugectr.solver_parser_helper(batchsize = 16384,
                                                       batchsize_eval = 16384,
                                                       vvgpu = [[0,1,2,3,4,5,6,7]],
                                                       repeat_dataset = True)
          sess = hugectr.Session(solver_config, json_config_file)
          sess.start_data_reading()
          for i in range(10000):
              sess.train()
              # Report the loss every 100 iterations.
              if (i % 100 == 0):
                  loss = sess.get_current_loss()
                  print("[HUGECTR][INFO] iter: {}; loss: {}".format(i, loss))

      if __name__ == "__main__":
          json_config_file = sys.argv[1]
          train(json_config_file)

  NOTE: Update the `vvgpu` (the active GPUs), `batchsize`, and `batchsize_eval` parameters according to your GPU system.

- Train the model by running the following command:

      python train.py dcn.json
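When adapting `vvgpu` and `batchsize` to a different system, as the NOTE in the steps above asks, a small helper can reduce mistakes. The sketch below is plain Python and does not call HugeCTR. It assumes that each inner list of `vvgpu` holds the GPU indices of one node and that the global batch is split evenly across all GPUs. Both are assumptions made for this example, and the helper names are hypothetical.

```python
def make_vvgpu(gpus_per_node, num_nodes=1):
    """Build a nested GPU list such as [[0, 1, 2, 3]] for one 4-GPU node.

    Assumption: one inner list per node, using local GPU indices.
    """
    if gpus_per_node < 1 or num_nodes < 1:
        raise ValueError("gpus_per_node and num_nodes must be positive")
    return [list(range(gpus_per_node)) for _ in range(num_nodes)]


def per_gpu_batchsize(batchsize, vvgpu):
    """Return the per-GPU share of the global batch.

    Assumption: the global batch is divided evenly across GPUs, so a
    batch size that is not a multiple of the GPU count is rejected.
    """
    total_gpus = sum(len(node_gpus) for node_gpus in vvgpu)
    if batchsize % total_gpus != 0:
        raise ValueError(
            f"batchsize {batchsize} is not divisible by {total_gpus} GPUs"
        )
    return batchsize // total_gpus


if __name__ == "__main__":
    vvgpu = make_vvgpu(gpus_per_node=8)
    print(vvgpu, per_gpu_batchsize(16384, vvgpu))
```

The resulting list can then be passed as the `vvgpu` argument in `train.py` in place of the hard-coded eight-GPU list.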

If you encounter any issues and/or have questions, please file an issue here so that we can provide you with the necessary resolutions and answers. To further advance the Merlin/HugeCTR Roadmap, we encourage you to share all the details regarding your recommender system pipeline using this survey.