A list of useful data augmentation resources. Here you will find links to more or less popular GitHub repos ✨, libraries, papers 📚 and other information.

Do you like it? Feel free to ⭐ the repository or open a pull request!

- Introduction

- Repositories

- Papers

- AutoAugment - repos and papers

- Other - challenges, workshops, tutorials, books

Data augmentation can be simply described as any method that makes our dataset larger. To create more images, for example, we could zoom in on an image and save the result, change its brightness, or rotate it. To get a bigger sound dataset, we could raise or lower the pitch of an audio sample, or slow it down or speed it up. Example data augmentation techniques are presented on the diagram below.

-

- Affine transformations

- Rotation

- Scaling

- Random cropping

- Reflection

- Elastic transformations

- Contrast shift

- Brightness shift

- Blurring

- Channel shuffle

- Advanced transformations

- Random erasing

- Adding rain effects, sun flare...

- Image blending

- Neural-based transformations

- Adversarial noise

- Neural Style Transfer

- Generative Adversarial Networks

-

- Noise injection

- Time shift

- Time stretching

- Random cropping

- Pitch scaling

- Dynamic range compression

- Simple gain

- Equalization

-

- Thesaurus

- Text Generation

- Back Translation

- Word Embeddings

- Contextualized Word Embeddings

- Voice conversion

-

- Basic approaches

- Warping

- Jittering

- Perturbing

- Advanced approaches

- Embedding space

- GAN/Adversarial

- RL/Meta-Learning

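A few of the basic image techniques listed above (reflection, brightness shift, random cropping) can be sketched in plain Python. This is only an illustrative sketch: the function names and the nested-list grayscale image are assumptions made for the example, and a real project would normally use one of the libraries listed below.

```python
import random

def horizontal_flip(image):
    """Reflection: mirror every row of the image."""
    return [row[::-1] for row in image]

def brightness_shift(image, delta):
    """Add delta to every pixel and clamp the result to 0..255."""
    return [[max(0, min(255, p + delta)) for p in row] for row in image]

def random_crop(image, crop_h, crop_w, rng=random):
    """Cut a crop_h x crop_w window at a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

# A tiny 3x4 grayscale "image" used only for illustration.
image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 250],
]

flipped = horizontal_flip(image)
brighter = brightness_shift(image, 20)
crop = random_crop(image, 2, 2, random.Random(0))
```

Each function returns a new image and leaves the input untouched, so one source image can produce several augmented copies.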

If you wish to cite us, you can cite either of the following papers: Style transfer-based image synthesis as an efficient regularization technique in deep learning or Data augmentation for improving deep learning in image classification problem.

- albumentations is a Python library with a large and diverse set of useful data augmentation methods. It offers over 30 different types of augmentations that are easy and ready to use. Moreover, as the authors show, the library is faster than other libraries on most of the transformations.

Example jupyter notebooks:

- All in one showcase notebook

- Classification,

- Object detection, image segmentation and keypoints

- Others - Weather transforms, Serialization, Replay/Deterministic mode, Non-8-bit images

- imgaug - is another very useful and widely used Python library. As the authors describe: it helps you with augmenting images for your machine learning projects. It converts a set of input images into a new, much larger set of slightly altered images. It offers many augmentation techniques such as affine transformations, perspective transformations, contrast changes, Gaussian noise, dropout of regions, hue/saturation changes, cropping/padding, and blurring.

Example jupyter notebooks:

- Load and Augment an Image

- Multicore Augmentation

- Augment and work with: Keypoints/Landmarks, Bounding Boxes, Polygons, Line Strings, Heatmaps, Segmentation Maps

- UDA  - a simple data augmentation tool for image files, intended for use with machine learning data sets. The tool scans a directory containing image files, and generates new images by performing a specified set of augmentation operations on each file that it finds. This process multiplies the number of training examples that can be used when developing a neural network, and should significantly improve the resulting network's performance, particularly when the number of training examples is relatively small.

The details are available here: UNSUPERVISED DATA AUGMENTATION FOR CONSISTENCY TRAINING

- Data augmentation for object detection - The repository contains the code for the Paperspace tutorial series on adapting data augmentation methods for object detection tasks. It supports many data augmentations, such as Horizontal Flipping, Scaling, Translation, Rotation, Shearing, and Resizing.

- FMix - Understanding and Enhancing Mixed Sample Data Augmentation - This repository contains the official implementation of the paper 'Understanding and Enhancing Mixed Sample Data Augmentation'.

- Super-AND - This repository is the PyTorch implementation of "A Comprehensive Approach to Unsupervised Embedding Learning based on AND Algorithm".

- vidaug  - This python library helps you with augmenting videos for your deep learning architectures. It converts input videos into a new, much larger set of slightly altered videos.

- Image augmentor  - This is a simple python data augmentation tool for image files, intended for use with machine learning data sets. The tool scans a directory containing image files, and generates new images by performing a specified set of augmentation operations on each file that it finds. This process multiplies the number of training examples that can be used when developing a neural network, and should significantly improve the resulting network's performance, particularly when the number of training examples is relatively small.

- torchsample  - this python package provides High-Level Training, Data Augmentation, and Utilities for Pytorch. This toolbox provides data augmentation methods, regularizers and other utility functions. These transforms work directly on torch tensors:

- Compose()

- AddChannel()

- SwapDims()

- RangeNormalize()

- StdNormalize()

- Slice2D()

- RandomCrop()

- SpecialCrop()

- Pad()

- RandomFlip()

- Random erasing - The code is based on the paper: https://arxiv.org/abs/1708.04896. The abstract:

In this paper, we introduce Random Erasing, a new data augmentation method for training the convolutional neural network (CNN). In training, Random Erasing randomly selects a rectangle region in an image and erases its pixels with random values. In this process, training images with various levels of occlusion are generated, which reduces the risk of over-fitting and makes the model robust to occlusion. Random Erasing is parameter learning free, easy to implement, and can be integrated with most of the CNN-based recognition models. Albeit simple, Random Erasing is complementary to commonly used data augmentation techniques such as random cropping and flipping, and yields consistent improvement over strong baselines in image classification, object detection and person re-identification. Code is available at: this https URL.
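The core step described in the abstract (pick a rectangle, overwrite it with random values) can be sketched as follows. This is a simplified illustration, not the authors' implementation: the rectangle size is passed in as fixed `erase_h` and `erase_w` arguments (hypothetical names), and the image is assumed to be a nested list of grayscale values.

```python
import random

def random_erase(image, erase_h, erase_w, rng=random):
    """Return a copy of image with one erase_h x erase_w rectangle
    overwritten by random pixel values in 0..255, plus its position."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - erase_h)
    left = rng.randint(0, width - erase_w)
    erased = [row[:] for row in image]  # leave the input untouched
    for y in range(top, top + erase_h):
        for x in range(left, left + erase_w):
            erased[y][x] = rng.randint(0, 255)
    return erased, (top, left)

# A uniform 4x6 image, used only to make the erased region visible.
image = [[128] * 6 for _ in range(4)]
erased, (top, left) = random_erase(image, 2, 3, random.Random(0))
```

Applying the function with a new random rectangle each epoch produces training images with different occluded regions.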

- data augmentation in C++ - A simple image augmentation program that transforms input images with rotation, slide, blur, and noise to create training data for image recognition.

- Data augmentation with GANs - This repository contains files with a Generative Adversarial Network that can be used to successfully augment a dataset. This is an implementation of DAGAN as described in https://arxiv.org/abs/1711.04340. The implementation provides data loaders, model builders, model trainers, and synthetic data generators for the Omniglot and VGG-Face datasets.

- Joint Discriminative and Generative Learning  - This repo is for Joint Discriminative and Generative Learning for Person Re-identification (CVPR2019 Oral). The author proposes an end-to-end training network that simultaneously generates more training samples and conducts representation learning. Given N real samples, the network could generate O(NxN) high-fidelity samples.

[Project] [Paper] [YouTube] [Bilibili] [Poster]

[Supp]

- White-Balance Emulator for Color Augmentation  - Our augmentation method can accurately emulate realistic color constancy degradation. Existing color augmentation methods often generate unrealistic colors which rarely happen in reality (e.g., green skin or purple grass). More importantly, the visual appearance of existing color augmentation techniques does not well represent the color casts produced by incorrect WB applied onboard cameras, as shown below. [python] [matlab]

- DocCreator  - is an open source, cross-platform software allowing to generate synthetic document images and the accompanying groundtruth. Various degradation models can be applied on original document images to create virtually unlimited amounts of different images.

A multi-platform and open-source software able to create synthetic image documents with ground truth.

- OnlineAugment  - implementation in PyTorch

- More automatic than AutoAugment and related

- Towards fully automatic (STN and VAE, No need to specify the image primitives).

- Broad domains (natural, medical images, etc).

- Diverse tasks (classification, segmentation, etc).

- Easy to use

- One-stage training (user-friendly).

- Simple code (single GPU training, no need for parallel optimization).

- Orthogonal to AutoAugment and related

- Contextual data augmentation - Contextual augmentation is a domain-independent data augmentation for text classification tasks. Texts in supervised dataset are augmented by replacing words with other words which are predicted by a label-conditioned bi-directional language model.

This repository contains a collection of scripts for an experiment of Contextual Augmentation.

- nlpaug - This Python library helps you with augmenting NLP data for your machine learning projects. Visit this introduction to understand Data Augmentation in NLP. Augmenter is the basic element of augmentation, while Flow is a pipeline that orchestrates multiple augmenters together.

Features:

- Generate synthetic data for improving model performance without manual effort

- Simple, easy-to-use and lightweight library. Augment data in 3 lines of code

- Plug and play to any neural network frameworks (e.g. PyTorch, TensorFlow)

- Support textual and audio input

- EDA NLP - EDA is a set of easy data augmentation techniques for boosting performance on text classification tasks. These are a generalized set of data augmentation techniques that are easy to implement and have shown improvements on five NLP classification tasks, with substantial improvements on datasets of size N < 500. While other techniques require you to train a language model on an external dataset just to get a small boost, we found that simple text editing operations using EDA result in good performance gains. Given a sentence in the training set, we perform the following operations:

- Synonym Replacement (SR): Randomly choose n words from the sentence that are not stop words. Replace each of these words with one of its synonyms chosen at random.

- Random Insertion (RI): Find a random synonym of a random word in the sentence that is not a stop word. Insert that synonym into a random position in the sentence. Do this n times.

- Random Swap (RS): Randomly choose two words in the sentence and swap their positions. Do this n times.

- Random Deletion (RD): For each word in the sentence, randomly remove it with probability p.
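The four operations above can be sketched in a few lines of Python. This is an illustrative sketch rather than the EDA repository's code: `SYNONYMS` and `STOP_WORDS` are tiny hypothetical stand-ins for a real thesaurus and stop-word list, and sentences are assumed to be pre-tokenized lists of words.

```python
import random

# Hypothetical toy resources; a real setup would use a proper thesaurus
# and a full stop-word list.
SYNONYMS = {"quick": ["fast", "speedy"], "happy": ["glad", "cheerful"]}
STOP_WORDS = {"the", "a", "is", "and"}

def synonym_replacement(words, n, rng=random):
    """SR: replace up to n non-stop words that have a known synonym."""
    words = list(words)
    candidates = [i for i, w in enumerate(words)
                  if w not in STOP_WORDS and w in SYNONYMS]
    for i in rng.sample(candidates, min(n, len(candidates))):
        words[i] = rng.choice(SYNONYMS[words[i]])
    return words

def random_insertion(words, n, rng=random):
    """RI: n times, insert a synonym of a random non-stop word
    at a random position."""
    words = list(words)
    for _ in range(n):
        candidates = [w for w in words
                      if w not in STOP_WORDS and w in SYNONYMS]
        if not candidates:
            break
        synonym = rng.choice(SYNONYMS[rng.choice(candidates)])
        words.insert(rng.randint(0, len(words)), synonym)
    return words

def random_swap(words, n, rng=random):
    """RS: n times, swap the words at two randomly chosen positions."""
    words = list(words)
    if len(words) < 2:
        return words
    for _ in range(n):
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return words

def random_deletion(words, p, rng=random):
    """RD: drop each word independently with probability p."""
    kept = [w for w in words if rng.random() >= p]
    # Keep at least one word so the sentence never becomes empty.
    return kept if kept else [rng.choice(words)]

sentence = "the quick dog is happy".split()
augmented = random_swap(sentence, 1, random.Random(0))
```

Keeping at least one word in `random_deletion` is a design choice of this sketch, so that a high `p` never yields an empty training example.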

- SpecAugment with PyTorch - SpecAugment (https://ai.googleblog.com/2019/04/specaugment-new-data-augmentation.html) is a state-of-the-art data augmentation approach for speech recognition. The repository supports augmentations such as time warp, time mask, frequency mask, or all of them combined.
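The two masking operations can be sketched in plain Python as below. This is a minimal illustration, not the repository's API: the spectrogram is assumed to be a nested list indexed as `spec[frequency][time]`, the function and parameter names are hypothetical, and time warping is left out.

```python
import random

def frequency_mask(spec, max_width, rng=random):
    """Zero out a random band of consecutive frequency bins (rows)."""
    width = rng.randint(0, max_width)
    start = rng.randint(0, len(spec) - width)
    return [[0.0] * len(row) if start <= f < start + width else row[:]
            for f, row in enumerate(spec)]

def time_mask(spec, max_width, rng=random):
    """Zero out a random span of consecutive time steps (columns)."""
    n_steps = len(spec[0])
    width = rng.randint(0, max_width)
    start = rng.randint(0, n_steps - width)
    return [[0.0 if start <= t < start + width else v
             for t, v in enumerate(row)] for row in spec]

# An 8-bin x 10-step dummy spectrogram filled with ones.
spec = [[1.0] * 10 for _ in range(8)]
masked = time_mask(frequency_mask(spec, 3, random.Random(1)), 4, random.Random(2))
```

Chaining the two functions gives the combined policy; both assume `max_width` does not exceed the corresponding spectrogram dimension.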

- Audiomentations  - A Python library for audio data augmentation. Inspired by albumentations. Useful for machine learning. It allows to use effects such as: Compose, AddGaussianNoise, TimeStretch, PitchShift and Shift.

- MUDA  - A library for Musical Data Augmentation. Muda package implements annotation-aware musical data augmentation, as described in the muda paper.

The goal of this package is to make it easy for practitioners to consistently apply perturbations to annotated music data for the purpose of fitting statistical models.

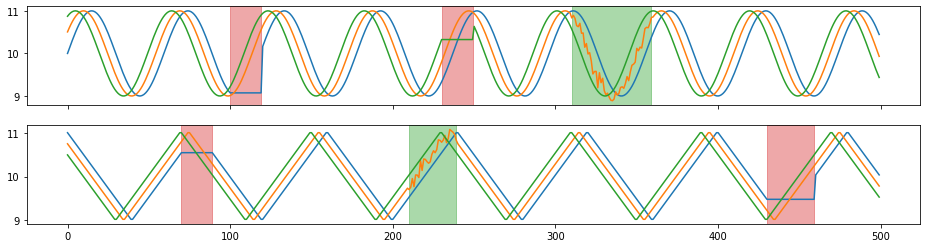

- tsaug - is a Python package for time series augmentation. It offers a set of augmentation methods for time series, as well as a simple API to connect multiple augmenters into a pipeline. Can be used for audio augmentation.

- wav-augment  - performs data augmentation on audio data.

The audio data is represented as pytorch tensors. It is particularly useful for speech data. Among others, it implements the augmentations that we found to be most useful for self-supervised learning (Data Augmenting Contrastive Learning of Speech Representations in the Time Domain, E. Kharitonov, M. Rivière, G. Synnaeve, L. Wolf, P.-E. Mazaré, M. Douze, E. Dupoux. [arxiv]):

- Pitch randomization,

- Reverberation,

- Additive noise,

- Time dropout (temporal masking),

- Band reject,

- Clipping
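Three of the augmentations listed above (additive noise, time dropout, clipping) are simple enough to sketch without any audio library. The code below is an assumption-laden illustration and does not use the wav-augment API: the waveform is a plain Python list of floats, and all function and parameter names are hypothetical.

```python
import math
import random

def additive_noise(samples, noise_std, rng=random):
    """Add zero-mean Gaussian noise to every sample."""
    return [s + rng.gauss(0.0, noise_std) for s in samples]

def clip(samples, threshold):
    """Hard-clip the waveform to the range [-threshold, threshold]."""
    return [max(-threshold, min(threshold, s)) for s in samples]

def time_dropout(samples, max_len, rng=random):
    """Silence one random contiguous span of at most max_len samples."""
    length = rng.randint(0, min(max_len, len(samples)))
    start = rng.randint(0, len(samples) - length)
    return samples[:start] + [0.0] * length + samples[start + length:]

# A short sine wave used as dummy audio.
wave = [math.sin(2 * math.pi * 5 * t / 100) for t in range(100)]

rng = random.Random(0)
augmented = time_dropout(clip(additive_noise(wave, 0.05, rng), 0.8), 20, rng)
```

The order of the operations is arbitrary here; in practice each one would typically be applied with its own random parameters per training example.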

- tsaug - is a Python package for time series augmentation. It offers a set of augmentation methods for time series, as well as a simple API to connect multiple augmenters into a pipeline.

Example augmenters:

- random time warping 5 times in parallel,

- random crop subsequences with length 300,

- random quantize to 10-, 20-, or 30- level sets,

- with 80% probability, random drift the signal up to 10% - 50%,

- with 50% probability, reverse the sequence.
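To make the idea of chaining such augmenters concrete, here is a library-free sketch of three of them (random crop, quantize, probabilistic reverse). It does not use the tsaug API; the function names, the uniform-bin quantization, and the `with_probability` wrapper are assumptions made for illustration.

```python
import math
import random

def random_crop(series, size, rng=random):
    """Take a random contiguous subsequence of the given length."""
    start = rng.randint(0, len(series) - size)
    return series[start:start + size]

def quantize(series, n_levels):
    """Round values to the midpoints of n_levels equal-width bins."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return list(series)
    step = (hi - lo) / n_levels
    out = []
    for v in series:
        k = min(int((v - lo) / step), n_levels - 1)
        out.append(lo + (k + 0.5) * step)
    return out

def reverse(series):
    """Reverse the sequence in time."""
    return series[::-1]

def with_probability(p, augment, rng=random):
    """Wrap an augmenter so that it is applied with probability p."""
    def wrapped(series):
        return augment(series) if rng.random() < p else list(series)
    return wrapped

rng = random.Random(0)
series = [math.sin(i / 20) for i in range(1000)]

x = random_crop(series, 300, rng)
x = quantize(x, rng.choice([10, 20, 30]))
x = with_probability(0.5, reverse, rng)(x)
```

Each step returns a new list, so the same source series can be passed through the pipeline repeatedly to generate different augmented copies.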

-

CopyPaste: An Augmentation Method for Speech Emotion Recognition;Raghavendra Pappagari, Jesús Villalba, Piotr Żelasko, Laureano Moro-Velazquez, Najim Dehak; Data augmentation is a widely used strategy for training robust machine learning models. It partially alleviates the problem of limited data for tasks like speech emotion recognition (SER), where collecting data is expensive and challenging. This study proposes CopyPaste, a perceptually motivated novel augmentation procedure for SER. Assuming that the presence of emotions other than neutral dictates a speaker's overall perceived emotion in a recording, concatenation of an emotional (emotion E) and a neutral utterance can still be labeled with emotion E. We hypothesize that SER performance can be improved using these concatenated utterances in model training. To verify this, three CopyPaste schemes are tested on two deep learning models: one trained independently and another using transfer learning from an x-vector model, a speaker recognition model. We observed that all three CopyPaste schemes improve SER performance on all the three datasets considered: MSP-Podcast, Crema-D, and IEMOCAP. Additionally, CopyPaste performs better than noise augmentation and, using them together improves the SER performance further. Our experiments on noisy test sets suggested that CopyPaste is effective even in noisy test conditions
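The central idea of the abstract, concatenating an emotional and a neutral utterance and keeping the emotional label, can be sketched as below. The abstract does not spell out the three CopyPaste schemes, so the `emotional_first` flag and the list-of-samples representation are assumptions of this sketch.

```python
def copy_paste(emotional, neutral, emotion_label, emotional_first=True):
    """Concatenate an emotional and a neutral utterance.

    The result keeps the label of the emotional utterance.
    """
    if emotional_first:
        audio = emotional + neutral
    else:
        audio = neutral + emotional
    return audio, emotion_label

# Dummy waveforms: short lists of samples stand in for real recordings.
angry_utterance = [0.4, -0.6, 0.5]
neutral_utterance = [0.1, 0.0]

audio, label = copy_paste(angry_utterance, neutral_utterance, "angry")
```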

-

Seen and Unseen emotional style transfer for voice conversion with a new emotional speech dataset;Kun Zhou, Berrak Sisman, Rui Liu, Haizhou Li; Emotional voice conversion aims to transform emotional prosody in speech while preserving the linguistic content and speaker identity. Prior studies show that it is possible to disentangle emotional prosody using an encoder-decoder network conditioned on discrete representation, such as one-hot emotion labels. Such networks learn to remember a fixed set of emotional styles. In this paper, we propose a novel framework based on variational auto-encoding Wasserstein generative adversarial network (VAW-GAN), which makes use of a pre-trained speech emotion recognition (SER) model to transfer emotional style during training and at run-time inference. In this way, the network is able to transfer both seen and unseen emotional style to a new utterance. We show that the proposed framework achieves remarkable performance by consistently outperforming the baseline framework. This paper also marks the release of an emotional speech dataset (ESD) for voice conversion, which has multiple speakers and languages.

-

OnlineAugment: Online Data Augmentation with Less Domain Knowledge;Zhiqiang Tang, Yunhe Gao, Leonid Karlinsky, Prasanna Sattigeri, Rogerio Feris, Dimitris Metaxas; Data augmentation is one of the most important tools in training modern deep neural networks. Recently, great advances have been made in searching for optimal augmentation policies in the image classification domain. However, two key points related to data augmentation remain uncovered by the current methods. First is that most if not all modern augmentation search methods are offline and learning policies are isolated from their usage. The learned policies are mostly constant throughout the training process and are not adapted to the current training model state. Second, the policies rely on class-preserving image processing functions. Hence applying current offline methods to new tasks may require domain knowledge to specify such kind of operations. In this work, we offer an orthogonal online data augmentation scheme together with three new augmentation networks, co-trained with the target learning task. It is both more efficient, in the sense that it does not require expensive offline training when entering a new domain, and more adaptive as it adapts to the learner state. Our augmentation networks require less domain knowledge and are easily applicable to new tasks. Extensive experiments demonstrate that the proposed scheme alone performs on par with the state-of-the-art offline data augmentation methods, as well as improving upon the state-of-the-art in combination with those methods. Code is available at this https URL .

-

Time Series Data Augmentation for Deep Learning: A Survey;Qingsong Wen, Liang Sun, Xiaomin Song, Jingkun Gao, Xue Wang, Huan Xu; Deep learning performs remarkably well on many time series analysis tasks recently. The superior performance of deep neural networks relies heavily on a large number of training data to avoid overfitting. However, the labeled data of many real-world time series applications may be limited such as classification in medical time series and anomaly detection in AIOps. As an effective way to enhance the size and quality of the training data, data augmentation is crucial to the successful application of deep learning models on time series data. In this paper, we systematically review different data augmentation methods for time series. We propose a taxonomy for the reviewed methods, and then provide a structured review for these methods by highlighting their strengths and limitations. We also empirically compare different data augmentation methods for different tasks including time series anomaly detection, classification and forecasting. Finally, we discuss and highlight future research directions, including data augmentation in time-frequency domain, augmentation combination, and data augmentation and weighting for imbalanced class.

-

Attribute Mix: Semantic Data Augmentation for Fine Grained Recognition;Hao Li, Xiaopeng Zhang, Hongkai Xiong, Qi Tian ; Collecting fine-grained labels usually requires expert-level domain knowledge and is prohibitive to scale up. In this paper, we propose Attribute Mix, a data augmentation strategy at attribute level to expand the fine-grained samples. The principle lies in that attribute features are shared among fine-grained sub-categories, and can be seamlessly transferred among images. Toward this goal, we propose an automatic attribute mining approach to discover attributes that belong to the same super-category, and Attribute Mix is operated by mixing semantically meaningful attribute features from two images. Attribute Mix is a simple but effective data augmentation strategy that can significantly improve the recognition performance without increasing the inference budgets. Furthermore, since attributes can be shared among images from the same super-category, we further enrich the training samples with attribute level labels using images from the generic domain. Experiments on widely used fine-grained benchmarks demonstrate the effectiveness of our proposed method. Specifically, without any bells and whistles, we achieve accuracies of 90.2%, 93.1% and 94.9% on CUB-200-2011, FGVC-Aircraft and Standford Cars, respectively.

-

Dictionary-based Data Augmentation for Cross-Domain Neural Machine Translation; Wei Peng, Chongxuan Huang, Tianhao Li, Yun Chen, Qun Liu ; Existing data augmentation approaches for neural machine translation (NMT) have predominantly relied on back-translating in-domain (IND) monolingual corpora. These methods suffer from issues associated with a domain information gap, which leads to translation errors for low frequency and out-of-vocabulary terminology. This paper proposes a dictionary-based data augmentation (DDA) method for cross-domain NMT. DDA synthesizes a domain-specific dictionary with general domain corpora to automatically generate a large-scale pseudo-IND parallel corpus. The generated pseudo-IND data can be used to enhance a general domain trained baseline. The experiments show that the DDA-enhanced NMT models demonstrate consistent significant improvements, outperforming the baseline models by 3.75-11.53 BLEU. The proposed method is also able to further improve the performance of the back-translation based and IND-finetuned NMT models. The improvement is associated with the enhanced domain coverage produced by DDA.

-

Imbalanced Data Learning by Minority Class Augmentation using Capsule Adversarial Networks;Pourya Shamsolmoali, Masoumeh Zareapoor, Linlin Shen, Abdul Hamid Sadka, Jie Yang ; The fact that image datasets are often imbalanced poses an intense challenge for deep learning techniques. In this paper, we propose a method to restore the balance in imbalanced images, by coalescing two concurrent methods, generative adversarial networks (GANs) and capsule network. In our model, generative and discriminative networks play a novel competitive game, in which the generator generates samples towards specific classes from multivariate probabilities distribution. The discriminator of our model is designed in a way that while recognizing the real and fake samples, it is also requires to assign classes to the inputs. Since GAN approaches require fully observed data during training, when the training samples are imbalanced, the approaches might generate similar samples which leading to data overfitting. This problem is addressed by providing all the available information from both the class components jointly in the adversarial training. It improves learning from imbalanced data by incorporating the majority distribution structure in the generation of new minority samples. Furthermore, the generator is trained with feature matching loss function to improve the training convergence. In addition, prevents generation of outliers and does not affect majority class space. The evaluations show the effectiveness of our proposed methodology; in particular, the coalescing of capsule-GAN is effective at recognizing highly overlapping classes with much fewer parameters compared with the convolutional-GAN.

-

DADA: Differentiable Automatic Data Augmentation;Yonggang Li, Guosheng Hu, Yongtao Wang, Timothy Hospedales, Neil M. Robertson, Yongxing Yang; Data augmentation (DA) techniques aim to increase data variability, and thus train deep networks with better generalisation. The pioneering AutoAugment automated the search for optimal DA policies with reinforcement learning. However, AutoAugment is extremely computationally expensive, limiting its wide applicability. Followup work such as PBA and Fast AutoAugment improved efficiency, but optimization speed remains a bottleneck. In this paper, we propose Differentiable Automatic Data Augmentation (DADA) which dramatically reduces the cost. DADA relaxes the discrete DA policy selection to a differentiable optimization problem via Gumbel-Softmax. In addition, we introduce an unbiased gradient estimator, RELAX, leading to an efficient and effective one-pass optimization strategy to learn an efficient and accurate DA policy. We conduct extensive experiments on CIFAR-10, CIFAR-100, SVHN, and ImageNet datasets. Furthermore, we demonstrate the value of Auto DA in pre-training for downstream detection problems. Results show our DADA is at least one order of magnitude faster than the state-of-the-art while achieving very comparable accuracy.

-

Data Augmentation using Pre-trained Transformer Models; Varun Kumar, Ashutosh Choudhary, Eunah Cho; Language model based pre-trained models such as BERT have provided significant gains across different NLP tasks. In this paper, we study different types of pre-trained transformer based models such as auto-regressive models (GPT-2), auto-encoder models (BERT), and seq2seq models (BART) for conditional data augmentation. We show that prepending the class labels to text sequences provides a simple yet effective way to condition the pre-trained models for data augmentation. On three classification benchmarks, pre-trained Seq2Seq model outperforms other models. Further, we explore how different pre-trained model based data augmentation differs in terms of data diversity, and how well such methods preserve the class-label information.

-

SuperMix: Supervising the Mixing Data Augmentation; Ali Dabouei, Sobhan Soleymani, Fariborz Taherkhani, Nasser M. Nasrabadi ; In this paper, we propose a supervised mixing augmentation method, termed SuperMix, which exploits the knowledge of a teacher to mix images based on their salient regions. SuperMix optimizes a mixing objective that considers: i) forcing the class of input images to appear in the mixed image, ii) preserving the local structure of images, and iii) reducing the risk of suppressing important features. To make the mixing suitable for large-scale applications, we develop an optimization technique, 65× faster than gradient descent on the same problem. We validate the effectiveness of SuperMix through extensive evaluations and ablation studies on two tasks of object classification and knowledge distillation. On the classification task, SuperMix provides the same performance as the advanced augmentation methods, such as AutoAugment. On the distillation task, SuperMix sets a new state-of-the-art with a significantly simplified distillation method. Particularly, in six out of eight teacher-student setups from the same architectures, the students trained on the mixed data surpass their teachers with a notable margin.

-

Fast Cross-domain Data Augmentation through Neural Sentence Editing; Guillaume Raille, Sandra Djambazovska, Claudiu Musat; Data augmentation promises to alleviate data scarcity. This is most important in cases where the initial data is in short supply. This is, for existing methods, also where augmenting is the most difficult, as learning the full data distribution is impossible. For natural language, sentence editing offers a solution - relying on small but meaningful changes to the original ones. Learning which changes are meaningful also requires large amounts of training data. We thus aim to learn this in a source domain where data is abundant and apply it in a different, target domain, where data is scarce - cross-domain augmentation. We create the Edit-transformer, a Transformer-based sentence editor that is significantly faster than the state of the art and also works cross-domain. We argue that, due to its structure, the Edit-transformer is better suited for cross-domain environments than its edit-based predecessors. We show this performance gap on the Yelp-Wikipedia domain pairs. Finally, we show that due to this cross-domain performance advantage, the Edit-transformer leads to meaningful performance gains in several downstream tasks

-

GridMask Data Augmentation; Pengguang Chen; We propose a novel data augmentation method GridMask in this paper. It utilizes information removal to achieve state-of-the-art results in a variety of computer vision tasks. We analyze the requirement of information dropping. Then we show the limitations of existing information dropping algorithms and propose our structured method, which is simple and yet very effective. It is based on the deletion of regions of the input image. Our extensive experiments show that our method outperforms the latest AutoAugment, which is far more computationally expensive due to the use of reinforcement learning to find the best policies. On ImageNet recognition, COCO2017 object detection, and Cityscapes semantic segmentation, our method notably improves performance over the baselines. The extensive experiments demonstrate the effectiveness and generality of the new method.
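
The idea of deleting regions in a regular, structured pattern can be illustrated with a small sketch. This is not the authors' implementation: the parameter names `cell` and `keep`, the zero fill value, and the use of plain Python lists instead of image tensors are all assumptions made to keep the example dependency-free.

```python
import random


def grid_mask(image, cell=4, keep=2, rng=None):
    """Zero out one square block inside every cell of a regular grid.

    image: 2D list of pixel values (rows of equal length).
    cell:  side length of one grid unit in pixels (hypothetical name).
    keep:  pixels per unit side that are left untouched (hypothetical name).
    """
    rng = rng or random.Random()
    # A random grid offset moves the deleted blocks between calls.
    dy, dx = rng.randrange(cell), rng.randrange(cell)
    out = []
    for y, row in enumerate(image):
        new_row = []
        for x, value in enumerate(row):
            # A pixel is dropped when both in-cell coordinates lie past
            # the kept margin, which yields one square hole per cell.
            drop = (y + dy) % cell >= keep and (x + dx) % cell >= keep
            new_row.append(0 if drop else value)
        out.append(new_row)
    return out


image = [[1] * 8 for _ in range(8)]
masked = grid_mask(image, cell=4, keep=2, rng=random.Random(0))
for row in masked:
    print(row)
```

With `cell=4` and `keep=2`, a quarter of the pixels is removed regardless of the offset, so the amount of dropped information is controlled by the two parameters rather than by chance.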

-

Mel-spectrogram augmentation for sequence to sequence voice conversion; Yeongtae Hwang, Hyemin Cho, Hongsun Yang, Insoo Oh, Seong-Whan Lee; When training the sequence-to-sequence voice conversion model, we need to handle an issue of insufficient data about the number of speech tuples which consist of the same utterance. This study experimentally investigated the effects of Mel-spectrogram augmentation on the sequence-to-sequence voice conversion model. For Mel-spectrogram augmentation, we adopted the policies proposed in SpecAugment. In addition, we propose new policies for more data variations. To find the optimal hyperparameters of augmentation policies for voice conversion, we experimented based on the new metric, namely deformation per deteriorating ratio. We observed the effect of these through experiments based on various sizes of training set and combinations of augmentation policy. In the experimental results, the time axis warping based policies showed better performance than other policies.

-

Data Augmentation by AutoEncoders for Unsupervised Anomaly Detection; Kasra Babaei, ZhiYuan Chen, Tomas Maul; This paper proposes an autoencoder (AE) that is used for improving the performance of one-class classifiers for the purpose of detecting anomalies. Traditional one-class classifiers (OCCs) perform poorly under certain conditions such as high-dimensionality and sparsity. Also, the size of the training set plays an important role in the performance of one-class classifiers. Autoencoders have been widely used for obtaining useful latent variables from high-dimensional datasets. In the proposed approach, the AE is capable of deriving meaningful features from high-dimensional datasets while doing data augmentation at the same time. The augmented data is used for training the OCC algorithms. The experimental results show that the proposed approach enhances the performance of OCC algorithms and also outperforms other well-known approaches.

-

Effective Data Augmentation with Multi-Domain Learning GANs; Shin'ya Yamaguchi, Sekitoshi Kanai, Takeharu Eda; For deep learning applications, the massive data development (e.g., collecting, labeling), which is an essential process in building practical applications, still incurs seriously high costs. In this work, we propose an effective data augmentation method based on generative adversarial networks (GANs), called Domain Fusion. Our key idea is to import the knowledge contained in an outer dataset to a target model by using a multi-domain learning GAN. The multi-domain learning GAN simultaneously learns the outer and target dataset and generates new samples for the target tasks. The simultaneous learning process makes GANs generate the target samples with high fidelity and variety. As a result, we can obtain accurate models for the target tasks by using these generated samples even if we only have an extremely low volume target dataset. We experimentally evaluate the advantages of Domain Fusion in image classification tasks on 3 target datasets: CIFAR-100, FGVC-Aircraft, and Indoor Scene Recognition. When each target dataset is reduced to 5,000 images, Domain Fusion achieves better classification accuracy than data augmentation using fine-tuned GANs. Furthermore, we show that Domain Fusion improves the quality of generated samples, and the improvements can contribute to higher accuracy.

-

Adversarial AutoAugment;Xinyu Zhang, Qiang Wang, Jian Zhang, Zhao Zhong ; Data augmentation (DA) has been widely utilized to improve generalization in training deep neural networks. Recently, human-designed data augmentation has been gradually replaced by automatically learned augmentation policy. Through finding the best policy in well-designed search space of data augmentation, AutoAugment can significantly improve validation accuracy on image classification tasks. However, this approach is not computationally practical for large-scale problems. In this paper, we develop an adversarial method to arrive at a computationally-affordable solution called Adversarial AutoAugment, which can simultaneously optimize target related object and augmentation policy search loss. The augmentation policy network attempts to increase the training loss of a target network through generating adversarial augmentation policies, while the target network can learn more robust features from harder examples to improve the generalization. In contrast to prior work, we reuse the computation in target network training for policy evaluation, and dispense with the retraining of the target network. Compared to AutoAugment, this leads to about 12x reduction in computing cost and 11x shortening in time overhead on ImageNet. We show experimental results of our approach on CIFAR-10/CIFAR-100, ImageNet, and demonstrate significant performance improvements over state-of-the-art. On CIFAR-10, we achieve a top-1 test error of 1.36%, which is the currently best performing single model. On ImageNet, we achieve a leading performance of top-1 accuracy 79.40% on ResNet-50 and 80.00% on ResNet-50-D without extra data.

-

Imperfect ImaGANation: Implications of GANs Exacerbating Biases on Facial Data Augmentation and Snapchat Selfie Lenses; Niharika Jain, Alberto Olmo, Sailik Sengupta, Lydia Manikonda, Subbarao Kambhampati; Recently, the use of synthetic data generated by GANs has become a popular method to do data augmentation for many applications. While practitioners celebrate this as an economical way to obtain synthetic data for training data-hungry machine learning models, it is not clear that they recognize the perils of such an augmentation technique when applied to an already-biased dataset. Although one expects GANs to replicate the distribution of the original data, in real-world settings with limited data and finite network capacity, GANs suffer from mode collapse. This is especially true when the data comes from online social media platforms or the web, which are never balanced. In this paper, we show that in settings where data exhibits bias along some axes (e.g., gender, race), failure modes of Generative Adversarial Networks (GANs) exacerbate the biases in the generated data. More often than not, this bias is unavoidable; we empirically demonstrate that, given a dataset of headshots of engineering faculty collected from 47 online university directory webpages in the United States that is biased toward white males, a state-of-the-art (unconditional variant of) GAN "imagines" faces of synthetic engineering professors that have masculine facial features and white skin color (inferred using human studies and a state-of-the-art gender recognition system). We also conduct a preliminary case study to highlight how Snapchat's explosively popular "female" filter (widely accepted to use a conditional variant of GAN), ends up consistently lightening the skin tones in women of color when trying to make face images appear more feminine. Our study is meant to serve as a cautionary tale for the lay practitioners who may unknowingly increase the bias in their training data by using GAN-based augmentation techniques with web data and to showcase the dangers of using biased datasets for facial applications.

-

Understanding and Enhancing Mixed Sample Data Augmentation; Ethan Harris, Antonia Marcu, Matthew Painter, Mahesan Niranjan, Adam Prügel-Bennett, Jonathon Hare; Mixed Sample Data Augmentation (MSDA) has received increasing attention in recent years, with many successful variants such as MixUp and CutMix. Following insight on the efficacy of CutMix in particular, we propose FMix, an MSDA that uses binary masks obtained by applying a threshold to low frequency images sampled from Fourier space. FMix improves performance over MixUp and CutMix for a number of state-of-the-art models across a range of data sets and problem settings. We go on to analyse MixUp, CutMix, and FMix from an information theoretic perspective, characterising learned models in terms of how they progressively compress the input with depth. Ultimately, our analyses allow us to decouple two complementary properties of augmentations, and present a unified framework for reasoning about MSDA. The authors note that code for all experiments is available.

-

Improving Generalization by Controlling Label-Noise Information in Neural Network Weights;Hrayr Harutyunyan, Kyle Reing, Greg Ver Steeg, Aram Galstyan; In the presence of noisy or incorrect labels, neural networks have the undesirable tendency to memorize information about the noise. Standard regularization techniques such as dropout, weight decay or data augmentation sometimes help, but do not prevent this behavior. If one considers neural network weights as random variables that depend on the data and stochasticity of training, the amount of memorized information can be quantified with the Shannon mutual information between weights and the vector of all training labels given inputs, I(w:y∣x). We show that for any training algorithm, low values of this term correspond to reduction in memorization of label-noise and better generalization bounds. To obtain these low values, we propose training algorithms that employ an auxiliary network that predicts gradients in the final layers of a classifier without accessing labels. We illustrate the effectiveness of our approach on versions of MNIST, CIFAR-10, and CIFAR-100 corrupted with various noise models, and on a large-scale dataset Clothing1M that has noisy labels.

-

Learning Robust Representations via Multi-View Information Bottleneck; Marco Federici, Anjan Dutta, Patrick Forré, Nate Kushman, Zeynep Akata; The information bottleneck principle provides an information-theoretic method for representation learning, by training an encoder to retain all information which is relevant for predicting the label while minimizing the amount of other, excess information in the representation. The original formulation, however, requires labeled data to identify the superfluous information. In this work, we extend this ability to the multi-view unsupervised setting, where two views of the same underlying entity are provided but the label is unknown. This enables us to identify superfluous information as that not shared by both views. A theoretical analysis leads to the definition of a new multi-view model that produces state-of-the-art results on the Sketchy dataset and label-limited versions of the MIR-Flickr dataset. We also extend our theory to the single-view setting by taking advantage of standard data augmentation techniques, empirically showing better generalization capabilities when compared to common unsupervised approaches for representation learning.

-

Small energy masking for improved neural network training for end-to-end speech recognition; Chanwoo Kim, Kwangyoun Kim, Sathish Reddy Indurthi; In this paper, we present a Small Energy Masking (SEM) algorithm, which masks inputs having values below a certain threshold. More specifically, a time-frequency bin is masked if the filterbank energy in this bin is less than a certain energy threshold. A uniform distribution is employed to randomly generate the ratio of this energy threshold to the peak filterbank energy of each utterance in decibels. The unmasked feature elements are scaled so that the total sum of the feature values remains the same through this masking procedure. This very simple algorithm shows relative Word Error Rate (WER) improvements of 11.2% and 13.5% on the standard LibriSpeech test-clean and test-other sets over the baseline end-to-end speech recognition system. Additionally, compared to the input dropout algorithm, the SEM algorithm shows relative improvements of 7.7% and 11.6% on the same LibriSpeech test-clean and test-other sets. With a modified shallow-fusion technique with a Transformer LM, we obtained a 2.62% WER on the LibriSpeech test-clean set and a 7.87% WER on the LibriSpeech test-other set.
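
The three steps described in this abstract (sample a threshold ratio in decibels, mask bins below the threshold, rescale the rest) can be sketched as follows. This is a minimal illustration and not the authors' code: the bounds `min_db` and `max_db` of the uniform distribution, the zero fill value, and the list-of-lists layout are assumptions.

```python
import random


def small_energy_masking(energies, min_db=-80.0, max_db=0.0, rng=None):
    """Mask low-energy time-frequency bins of a single utterance.

    energies: 2D list [frame][channel] of non-negative filterbank energies.
    min_db, max_db: bounds (illustrative values) of the uniform distribution
        for the threshold, in dB relative to the utterance peak.
    """
    rng = rng or random.Random()
    peak = max(max(frame) for frame in energies)
    # Energy ratio in decibels, converted back to a linear threshold.
    ratio_db = rng.uniform(min_db, max_db)
    threshold = peak * 10.0 ** (ratio_db / 10.0)

    # Bins below the threshold are masked (set to zero here).
    masked = [[e if e >= threshold else 0.0 for e in frame]
              for frame in energies]

    # Rescale the surviving bins so the total sum is unchanged.
    total = sum(map(sum, energies))
    kept = sum(map(sum, masked))
    scale = total / kept if kept > 0 else 1.0
    return [[e * scale for e in frame] for frame in masked]


energies = [[1.0, 0.001, 0.5], [0.0001, 0.2, 0.9]]
augmented = small_energy_masking(
    energies, min_db=-30.0, max_db=-10.0, rng=random.Random(0)
)
print(augmented)
```

Because the threshold never exceeds the peak energy, at least the peak bin always survives, so the rescaling step is well defined for any non-silent utterance.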

-

Occlusions for Effective Data Augmentation in Image Classification; Ruth Fong, Andrea Vedaldi; Deep networks for visual recognition are known to leverage "easy to recognise" portions of objects such as faces and distinctive texture patterns. The lack of a holistic understanding of objects may increase fragility and overfitting. In recent years, several papers have proposed to address this issue by means of occlusions as a form of data augmentation. However, successes have been limited to tasks such as weak localization and model interpretation, but no benefit was demonstrated on image classification on large-scale datasets. In this paper, we show that, by using a simple technique based on batch augmentation, occlusions as data augmentation can result in better performance on ImageNet for high-capacity models (e.g., ResNet50). We also show that varying amounts of occlusions used during training can be used to study the robustness of different neural network architectures.

-

CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features; Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, Youngjoon Yoo; Regional dropout strategies have been proposed to enhance the performance of convolutional neural network classifiers. They have proved to be effective for guiding the model to attend on less discriminative parts of objects (e.g. leg as opposed to head of a person), thereby letting the network generalize better and have better object localization capabilities. On the other hand, current methods for regional dropout remove informative pixels on training images by overlaying a patch of either black pixels or random noise. Such removal is not desirable because it leads to information loss and inefficiency during training. We therefore propose the CutMix augmentation strategy: patches are cut and pasted among training images where the ground truth labels are also mixed proportionally to the area of the patches. By making efficient use of training pixels and retaining the regularization effect of regional dropout, CutMix consistently outperforms the state-of-the-art augmentation strategies on CIFAR and ImageNet classification tasks, as well as on the ImageNet weakly-supervised localization task. Moreover, unlike previous augmentation methods, our CutMix-trained ImageNet classifier, when used as a pretrained model, results in consistent performance gains in Pascal detection and MS-COCO image captioning benchmarks. We also show that CutMix improves the model robustness against input corruptions and its out-of-distribution detection performances.
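
The cut-and-paste operation with area-proportional label mixing can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the reference implementation: images are plain 2D lists, labels are one-hot lists, and drawing the target area ratio from a Beta distribution (parameter `alpha`) is an assumption that the abstract itself does not specify.

```python
import math
import random


def cutmix(image_a, label_a, image_b, label_b, alpha=1.0, rng=None):
    """Paste a random box from image_b into image_a and mix the labels.

    Images are 2D lists of identical shape; labels are one-hot lists.
    """
    rng = rng or random.Random()
    height, width = len(image_a), len(image_a[0])

    # Target share of image_a that should survive (assumed Beta sampling).
    lam = rng.betavariate(alpha, alpha)
    cut_h = int(height * math.sqrt(1.0 - lam))
    cut_w = int(width * math.sqrt(1.0 - lam))

    # The box centre is uniform; the box is clipped to the image borders.
    cy, cx = rng.randrange(height), rng.randrange(width)
    top, bottom = max(cy - cut_h // 2, 0), min(cy + cut_h // 2, height)
    left, right = max(cx - cut_w // 2, 0), min(cx + cut_w // 2, width)

    mixed = [row[:] for row in image_a]
    for y in range(top, bottom):
        mixed[y][left:right] = image_b[y][left:right]

    # Recompute the mixing weight from the area that was actually pasted.
    lam = 1.0 - (bottom - top) * (right - left) / (height * width)
    mixed_label = [lam * a + (1.0 - lam) * b
                   for a, b in zip(label_a, label_b)]
    return mixed, mixed_label


image_a = [[0] * 8 for _ in range(8)]
image_b = [[1] * 8 for _ in range(8)]
mixed, mixed_label = cutmix(
    image_a, [1.0, 0.0], image_b, [0.0, 1.0], rng=random.Random(0)
)
print(mixed_label)
```

Recomputing the weight after clipping keeps the label mix consistent with the pixels: the weight of the second class always equals the fraction of the image that came from `image_b`.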

-

Style transfer-based image synthesis as an efficient regularization technique in deep learning; Agnieszka Mikołajczyk, Michał Grochowski; These days deep learning is the fastest-growing area in the field of Machine Learning. Convolutional Neural Networks are currently the main tool used for image analysis and classification purposes. Despite great achievements and perspectives, deep neural networks and accompanying learning algorithms have some relevant challenges to tackle. In this paper, we have focused on the most frequently mentioned problem in the field of machine learning, that is, relatively poor generalization abilities. Partial remedies for this are regularization techniques, e.g., dropout, batch normalization, weight decay, transfer learning, early stopping and data augmentation. In this paper, we have focused on data augmentation. We propose to use a method based on a neural style transfer, which allows generating new unlabeled images of a high perceptual quality that combine the content of a base image with the appearance of another one. In the proposed approach, the newly created images are described with pseudo-labels, and then used as a training dataset. Real, labeled images are divided into the validation and test set. We validated the proposed method on a challenging skin lesion classification case study. Four representative neural architectures are examined. Obtained results show the strong potential of the proposed approach.

-

Generative adversarial network in medical imaging: A review; Xin Yi, Ekta Walia, Paul Babyn; Generative adversarial networks have gained a lot of attention in the computer vision community due to their capability of data generation without explicitly modelling the probability density function. The adversarial loss brought by the discriminator provides a clever way of incorporating unlabeled samples into training and imposing higher order consistency. This has proven to be useful in many cases, such as domain adaptation, data augmentation, and image-to-image translation. These properties have attracted researchers in the medical imaging community, and we have seen rapid adoption in many traditional and novel applications, such as image reconstruction, segmentation, detection, classification, and cross-modality synthesis. Based on our observations, this trend will continue and we therefore conducted a review of recent advances in medical imaging using the adversarial training scheme with the hope of benefiting researchers interested in this technique.

-

Data Augmentation via Dependency Tree Morphing for Low-Resource Languages; Gözde Gül Şahin, Mark Steedman; Neural NLP systems achieve high scores in the presence of sizable training datasets. Lack of such datasets leads to poor system performance in the case of low-resource languages. We present two simple text augmentation techniques using dependency trees, inspired by image processing. We crop sentences by removing dependency links, and we rotate sentences by moving the tree fragments around the root. We apply these techniques to augment the training sets of low-resource languages in the Universal Dependencies project. We implement a character-level sequence tagging model and evaluate the augmented datasets on the part-of-speech tagging task. We show that crop and rotate provide improvements over the models trained with non-augmented data for the majority of the languages, especially for languages with rich case marking systems.
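
A simplified sketch of the two operations, crop and rotate, is given below. It is an illustration only and not the authors' method: the sentence is assumed to be a token list with 0-based head indices (`-1` marks the root), the tree is assumed to be projective, and the fragments are simply the subtrees of the root's children, without the dependency-label rules a real implementation would need.

```python
import random


def subtree(heads, node):
    """Return the sorted token indices of the subtree rooted at node."""
    nodes = [node]
    for i, head in enumerate(heads):
        if head == node:
            nodes.extend(subtree(heads, i))
    return sorted(nodes)


def fragments(heads):
    """Return the root index and the subtree of each child of the root."""
    root = heads.index(-1)
    children = [i for i, head in enumerate(heads) if head == root]
    return root, [subtree(heads, child) for child in children]


def crop(tokens, heads, child_position):
    """Keep the root and one of its child subtrees, in original order."""
    root, frags = fragments(heads)
    keep = sorted([root] + frags[child_position])
    return [tokens[i] for i in keep]


def rotate(tokens, heads, rng=None):
    """Shuffle the root's child subtrees and place them around the root."""
    rng = rng or random.Random()
    root, frags = fragments(heads)
    rng.shuffle(frags)
    split = rng.randint(0, len(frags))  # fragments placed before the root
    order = frags[:split] + [[root]] + frags[split:]
    return [tokens[i] for frag in order for i in frag]


tokens = ["She", "wrote", "a", "long", "letter", "yesterday"]
heads = [1, -1, 4, 4, 1, 1]  # "wrote" is the root

print(crop(tokens, heads, 0))  # subject fragment plus the root
print(crop(tokens, heads, 1))  # root plus the object fragment
rotated = rotate(tokens, heads, rng=random.Random(0))
print(rotated)
```

Each fragment stays contiguous after rotation, so word-level labels such as part-of-speech tags can be carried along with the tokens unchanged.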

-

Data augmentation for instrument classification robust to audio effects; António Ramires, Xavier Serra; Reusing recorded sounds (sampling) is a key component in Electronic Music Production (EMP), which has been present since its early days and is at the core of genres like hip-hop or jungle. Commercial and non-commercial services allow users to obtain collections of sounds (sample packs) to reuse in their compositions. Automatic classification of one-shot instrumental sounds allows automatically categorising the sounds contained in these collections, allowing easier navigation and better characterisation. Automatic instrument classification has mostly targeted the classification of unprocessed isolated instrumental sounds or detecting predominant instruments in mixed music tracks. For this classification to be useful in audio databases for EMP, it has to be robust to the audio effects applied to unprocessed sounds. In this paper we evaluate how a state of the art model trained with a large dataset of one-shot instrumental sounds performs when classifying instruments processed with audio effects. In order to evaluate the robustness of the model, we use data augmentation with audio effects and evaluate how each effect influences the classification accuracy.

-

Hyperspectral Image Classification Using Random Occlusion Data Augmentation; Juan Mario Haut, Mercedes E. Paoletti, Javier Plaza, Antonio Plaza, Jun Li ; Convolutional neural networks (CNNs) have become a powerful tool for remotely sensed hyperspectral image (HSI) classification due to their great generalization ability and high accuracy. However, owing to the huge amount of parameters that need to be learned and to the complex nature of HSI data itself, these approaches must deal with the important problem of overfitting, which can lead to inadequate generalization and loss of accuracy. In order to mitigate this problem, in this letter, we adopt random occlusion, a recently developed data augmentation (DA) method for training CNNs, in which the pixels of different rectangular spatial regions in the HSI are randomly occluded, generating training images with various levels of occlusion and reducing the risk of overfitting. Our results with two well-known HSIs reveal that the proposed method helps to achieve better classification accuracy with low computational cost.

-

Learning Data Manipulation for Augmentation and Weighting; Zhiting Hu, Bowen Tan, Ruslan Salakhutdinov, Tom Mitchell, Eric P. Xing; Manipulating data, such as weighting data examples or augmenting with new instances, has been increasingly used to improve model training. Previous work has studied various rule- or learning-based approaches designed for specific types of data manipulation. In this work, we propose a new method that supports learning different manipulation schemes with the same gradient-based algorithm. Our approach builds upon a recent connection of supervised learning and reinforcement learning (RL), and adapts an off-the-shelf reward learning algorithm from RL for joint data manipulation learning and model training. Different parameterization of the "data reward" function instantiates different manipulation schemes. We showcase data augmentation that learns a text transformation network, and data weighting that dynamically adapts the data sample importance. Experiments show the resulting algorithms significantly improve the image and text classification performance in low data regime and class-imbalance problems.

-

Anatomically-Informed Data Augmentation for functional MRI with Applications to Deep Learning; Kevin P. Nguyen, Cherise Chin Fatt, Alex Treacher, Cooper Mellema, Madhukar H. Trivedi, Albert Montillo; The application of deep learning to build accurate predictive models from functional neuroimaging data is often hindered by limited dataset sizes. Though data augmentation can help mitigate such training obstacles, most data augmentation methods have been developed for natural images as in computer vision tasks such as CIFAR, not for medical images. This work helps to fill this gap by proposing a method for generating new functional Magnetic Resonance Images (fMRI) with realistic brain morphology. This method is tested on a challenging task of predicting antidepressant treatment response from pre-treatment task-based fMRI and demonstrates a 26% improvement in performance in predicting response using augmented images. This improvement compares favorably to state-of-the-art augmentation methods for natural images. Through an ablative test, augmentation is also shown to substantively improve performance when applied before hyperparameter optimization. These results suggest the optimal order of operations and support the role of the data augmentation method in improving predictive performance in tasks using fMRI.

-

Image based fruit category classification by 13-layer deep convolutional neural network and data augmentation; Yu-Dong Zhang, Zhengchao Dong, Xianqing Chen, Wenjuan Jia, Sidan Du, Khan Muhammad, Shui-Hua Wang; Fruit category identification is important in factories, supermarkets, and other fields. Current computer vision systems used handcrafted features, and did not get good results. In this study, our team designed a 13-layer convolutional neural network (CNN). Three types of data augmentation methods were used: image rotation, Gamma correction, and noise injection. We also compared max pooling with average pooling. Stochastic gradient descent with momentum was used to train the CNN with a minibatch size of 128. The overall accuracy of our method is 94.94%, at least 5 percentage points higher than state-of-the-art approaches. We validated that this 13-layer structure is optimal. The GPU can achieve a 177× acceleration on training data, and a 175× acceleration on test data. We observed that using data augmentation can increase the overall accuracy. Our method is effective in image-based fruit classification.
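
The three augmentation types named in this abstract (rotation, gamma correction, noise injection) can be sketched as small functions. This is a hedged illustration and not the authors' pipeline: images are assumed to be 2D lists with intensities in [0, 1], rotation is restricted to 90-degree steps to avoid interpolation, and the Gaussian noise level `sigma` is an illustrative value.

```python
import random


def rotate90(image):
    """Rotate a 2D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def gamma_correct(image, gamma):
    """Apply out = in ** gamma to pixel intensities in [0, 1]."""
    return [[pixel ** gamma for pixel in row] for row in image]


def add_gaussian_noise(image, sigma=0.05, rng=None):
    """Add zero-mean Gaussian noise and clip the result back to [0, 1]."""
    rng = rng or random.Random()
    return [
        [min(1.0, max(0.0, pixel + rng.gauss(0.0, sigma))) for pixel in row]
        for row in image
    ]


image = [[0.0, 0.25], [0.5, 1.0]]
augmented = [
    rotate90(image),
    gamma_correct(image, 0.5),  # gamma < 1 brightens mid-tones
    add_gaussian_noise(image, rng=random.Random(0)),
]
for variant in augmented:
    print(variant)
```

Each function returns a new image and leaves the input untouched, so the variants can be generated independently from the same source image and added to the training set.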

-

Data augmentation using learned transformations for one-shot medical image segmentation; Amy Zhao, Guha Balakrishnan, Frédo Durand, John V. Guttag, Adrian V. Dalca ; Image segmentation is an important task in many medical applications. Methods based on convolutional neural networks attain state-of-the-art accuracy; however, they typically rely on supervised training with large labeled datasets. Labeling medical images requires significant expertise and time, and typical hand-tuned approaches for data augmentation fail to capture the complex variations in such images. We present an automated data augmentation method for synthesizing labeled medical images. We demonstrate our method on the task of segmenting magnetic resonance imaging (MRI) brain scans. Our method requires only a single segmented scan, and leverages other unlabeled scans in a semi-supervised approach. We learn a model of transformations from the images, and use the model along with the labeled example to synthesize additional labeled examples. Each transformation is comprised of a spatial deformation field and an intensity change, enabling the synthesis of complex effects such as variations in anatomy and image acquisition procedures. We show that training a supervised segmenter with these new examples provides significant improvements over state-of-the-art methods for one-shot biomedical image segmentation.

-

Face-Specific Data Augmentation for Unconstrained Face Recognition; Iacopo Masi, Anh Tuấn Trần, Tal Hassner, Gozde Sahin, Gérard Medioni ; We identify two issues as key to developing effective face recognition systems: maximizing the appearance variations of training images and minimizing appearance variations in test images. The former is required to train the system for whatever appearance variations it will ultimately encounter and is often addressed by collecting massive training sets with millions of face images. The latter involves various forms of appearance normalization for removing distracting nuisance factors at test time and making test faces easier to compare. We describe novel, efficient face-specific data augmentation techniques and show them to be ideally suited for both purposes. By using knowledge of faces, their 3D shapes, and appearances, we show the following: (a) We can artificially enrich training data for face recognition with face-specific appearance variations. (b) This synthetic training data can be efficiently produced online, thereby reducing the massive storage requirements of large-scale training sets and simplifying training for many appearance variations. Finally, (c) The same, fast data augmentation techniques can be applied at test time to reduce appearance variations and improve face representations. Together, with additional technical novelties, we describe a highly effective face recognition pipeline which, at the time of submission, obtains state-of-the-art results across multiple benchmarks. Portions of this paper were previously published by Masi et al. (European conference on computer vision, Springer, pp 579–596, 2016b, International conference on automatic face and gesture recognition, 2017).

-

Hyperspectral Data Augmentation; Jakub Nalepa, Michal Myller, Michal Kawulok ; Data augmentation is a popular technique which helps improve generalization capabilities of deep neural networks. It plays a pivotal role in remote-sensing scenarios in which the amount of high-quality ground truth data is limited, and acquiring new examples is costly or impossible. This is a common problem in hyperspectral imaging, where manual annotation of image data is difficult, expensive, and prone to human bias. In this letter, we propose online data augmentation of hyperspectral data which is executed during the inference rather than before the training of deep networks. This is in contrast to all other state-of-the-art hyperspectral augmentation algorithms which increase the size (and representativeness) of training sets. Additionally, we introduce a new principal component analysis based augmentation. The experiments revealed that our data augmentation algorithms improve generalization of deep networks, work in real-time, and the online approach can be effectively combined with offline techniques to enhance the classification accuracy.
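
Augmentation executed at inference time can be sketched as classifying several perturbed copies of a test sample and combining the answers. The sketch below is an assumption-laden illustration rather than the authors' algorithm: the Gaussian noise perturbation, the majority vote, the parameter names, and the toy `classify` rule are all hypothetical.

```python
import random
from collections import Counter


def predict_with_online_augmentation(classify, spectrum, n_copies=5,
                                     sigma=0.01, rng=None):
    """Classify a spectrum and its noisy copies, then return the majority label.

    classify: any callable mapping a list of band values to a label
        (hypothetical stand-in for a trained network).
    spectrum: list of band values for one hyperspectral pixel.
    """
    rng = rng or random.Random()
    # The original sample always takes part in the vote.
    candidates = [spectrum] + [
        [value + rng.gauss(0.0, sigma) for value in spectrum]
        for _ in range(n_copies)
    ]
    votes = Counter(classify(candidate) for candidate in candidates)
    return votes.most_common(1)[0][0]


def toy_classifier(spectrum):
    """Toy rule used only for this example."""
    return "vegetation" if spectrum[-1] > spectrum[0] else "soil"


label = predict_with_online_augmentation(
    toy_classifier, [0.1, 0.2, 0.6], rng=random.Random(0)
)
print(label)
```

No retraining is involved in this scheme: the training set keeps its size, and the extra cost is `n_copies` additional forward passes per test sample.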

-

Disentangling Correlated Speaker and Noise for Speech Synthesis via Data Augmentation and Adversarial Factorization; Wei-Ning Hsu, Yu Zhang, Ron J. Weiss, Yu-An Chung, Yuxuan Wang, Yonghui Wu, James Glass; To leverage crowd-sourced data to train multi-speaker text-to-speech (TTS) models that can synthesize clean speech for all speakers, it is essential to learn disentangled representations which can independently control the speaker identity and background noise in generated signals. However, learning such representations can be challenging, due to the lack of labels describing the recording conditions of each training example, and the fact that speakers and recording conditions are often correlated, e.g. since users often make many recordings using the same equipment. This paper proposes three components to address this problem by: (1) formulating a conditional generative model with factorized latent variables, (2) using data augmentation to add noise that is not correlated with speaker identity and whose label is known during training, and (3) using adversarial factorization to improve disentanglement. Experimental results demonstrate that the proposed method can disentangle speaker and noise attributes even if they are correlated in the training data, and can be used to consistently synthesize clean speech for all speakers. Ablation studies verify the importance of each proposed component.

-

Generative Image Translation for Data Augmentation of Bone Lesion Pathology; Anant Gupta, Srivas Venkatesh, Sumit Chopra, Christian Ledig ; Insufficient training data and severe class imbalance are often limiting factors when developing machine learning models for the classification of rare diseases. In this work, we address the problem of classifying bone lesions from X-ray images by increasing the small number of positive samples in the training set. We propose a generative data augmentation approach based on a cycle-consistent generative adversarial network that synthesizes bone lesions on images without pathology. We pose the generative task as an image-patch translation problem that we optimize specifically for distinct bones (humerus, tibia, femur). In experimental results, we confirm that the described method mitigates the class imbalance problem in the binary classification task of bone lesion detection. We show that the augmented training sets enable the training of superior classifiers achieving better performance on a held-out test set. Additionally, we demonstrate the feasibility of transfer learning and apply a generative model that was trained on one body part to another.

-

Towards highly accurate coral texture images classification using deep convolutional neural networks and data augmentation; Anabel Gómez-Ríos, Siham Tabik, Julián Luengo, ASM Shihavuddin, Bartosz Krawczyk, Francisco Herrera; The recognition of coral species based on underwater texture images poses a significant difficulty for machine learning algorithms, due to the three following challenges embedded in the nature of this data: (1) datasets do not include information about the global structure of the coral; (2) several species of coral have very similar characteristics; and (3) defining the spatial borders between classes is difficult as many corals tend to appear together in groups. For these reasons, the classification of coral species has always required an aid from a domain expert. The objective of this paper is to develop an accurate classification model for coral texture images. Current datasets contain a large number of imbalanced classes, while the images are subject to inter-class variation. We have focused on the current small datasets and analyzed (1) several Convolutional Neural Network (CNN) architectures, (2) data augmentation techniques and (3) transfer learning approaches. We have achieved state-of-the-art accuracies using different variations of ResNet on the two small coral texture datasets, EILAT and RSMAS.

-

Data Augmentation using GANs for Speech Emotion Recognition; Aggelina Chatziagapi, Georgios Paraskevopoulos, Dimitris Sgouropoulos, Georgios Pantazopoulos, Malvina Nikandrou, Theodoros Giannakopoulos, Athanasios Katsamanis, Alexandros Potamianos, Shrikanth Narayanan ; In this work, we address the problem of data imbalance for the task of Speech Emotion Recognition (SER). We investigate conditioned data augmentation using Generative Adversarial Networks (GANs), in order to generate samples for underrepresented emotions. We adapt and improve a conditional GAN architecture to generate synthetic spectrograms for the minority class. For comparison purposes, we implement a series of signal-based data augmentation methods. The proposed GAN-based approach is evaluated on two datasets, namely IEMOCAP and FEEL-25k, a large multi-domain dataset. Results demonstrate a 10% relative performance improvement in IEMOCAP and 5% in FEEL-25k, when augmenting the minority classes. Index Terms: Generative Adversarial Networks, Speech Emotion Recognition, data augmentation, data imbalance

-

SpecAugment: A Simple Data Augmentation Method for Automatic Speech Recognition; Daniel S. Park, William Chan, Yu Zhang, Chung-Cheng Chiu, Barret Zoph, Ekin D. Cubuk, Quoc V. Le ; We present SpecAugment, a simple data augmentation method for speech recognition. SpecAugment is applied directly to the feature inputs of a neural network (i.e., filter bank coefficients). The augmentation policy consists of warping the features, masking blocks of frequency channels, and masking blocks of time steps. We apply SpecAugment on Listen, Attend and Spell networks for end-to-end speech recognition tasks. We achieve state-of-the-art performance on the LibriSpeech 960h and Switchboard 300h tasks, outperforming all prior work. On LibriSpeech, we achieve 6.8% WER on test-other without the use of a language model, and 5.8% WER with shallow fusion with a language model. This compares to the previous state-of-the-art hybrid system of 7.5% WER. For Switchboard, we achieve 7.2%/14.6% on the Switchboard/CallHome portion of the Hub5'00 test set without the use of a language model, and 6.8%/14.1% with shallow fusion, which compares to the previous state-of-the-art hybrid system at 8.3%/17.3% WER.
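
The two masking steps mentioned in the abstract can be sketched in a few lines of NumPy. This is only an illustration, not the authors' implementation: the function name `mask_spectrogram`, its parameters and default widths are hypothetical, the time-warping step is left out, and masked cells are filled with zeros on the assumption that the features were mean-normalized beforehand.

```python
import numpy as np

def mask_spectrogram(spec, max_freq_width=8, max_time_width=20, rng=None):
    """Apply one frequency mask and one time mask to a spectrogram.

    spec is assumed to have shape (num_freq_bins, num_time_steps).
    The input array is not modified; a masked copy is returned.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = spec.copy()
    n_freq, n_time = out.shape

    # Frequency mask: pick a width, then a start so the band fits.
    f = int(rng.integers(0, min(max_freq_width, n_freq) + 1))
    f0 = int(rng.integers(0, n_freq - f + 1))
    out[f0:f0 + f, :] = 0.0

    # Time mask: same idea along the time axis.
    t = int(rng.integers(0, min(max_time_width, n_time) + 1))
    t0 = int(rng.integers(0, n_time - t + 1))
    out[:, t0:t0 + t] = 0.0
    return out
```

Calling `mask_spectrogram(features)` once per training example, with a fresh random draw each time, gives every epoch a differently masked view of the same utterance.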

-

Learning More with Less: Conditional PGGAN-based Data Augmentation for Brain Metastases Detection Using Highly-Rough Annotation on MR Images; Changhee Han, Kohei Murao, Tomoyuki Noguchi, Yusuke Kawata, Fumiya Uchiyama, Leonardo Rundo, Hideki Nakayama, Shin'ichi Satoh ; Accurate Computer-Assisted Diagnosis, associated with proper data wrangling, can alleviate the risk of overlooking the diagnosis in a clinical environment. Towards this, as a Data Augmentation (DA) technique, Generative Adversarial Networks (GANs) can synthesize additional training data to handle the small/fragmented medical imaging datasets collected from various scanners; those images are realistic but completely different from the original ones, filling the data lack in the real image distribution. However, we cannot easily use them to locate disease areas, considering expert physicians' expensive annotation cost. Therefore, this paper proposes Conditional Progressive Growing of GANs (CPGGANs), incorporating highly-rough bounding box conditions incrementally into PGGANs to place brain metastases at desired positions/sizes on 256 X 256 Magnetic Resonance (MR) images, for Convolutional Neural Network-based tumor detection; this first GAN-based medical DA using automatic bounding box annotation improves the training robustness. The results show that CPGGAN-based DA can boost 10% sensitivity in diagnosis with clinically acceptable additional False Positives. Surprisingly, further tumor realism, achieved with additional normal brain MR images for CPGGAN training, does not contribute to detection performance, while even three physicians cannot accurately distinguish them from the real ones in Visual Turing Test.

-

Training Data Augmentation for Context-Sensitive Neural Lemmatization Using Inflection Tables and Raw Text; Toms Bergmanis, Sharon Goldwater ; Lemmatization aims to reduce the sparse data problem by relating the inflected forms of a word to its dictionary form. Using context can help, both for unseen and ambiguous words. Yet most context-sensitive approaches require full lemma-annotated sentences for training, which may be scarce or unavailable in low-resource languages. In addition (as shown here), in a low-resource setting, a lemmatizer can learn more from n labeled examples of distinct words (types) than from n (contiguous) labeled tokens, since the latter contain far fewer distinct types. To combine the efficiency of type-based learning with the benefits of context, we propose a way to train a context-sensitive lemmatizer with little or no labeled corpus data, using inflection tables from the UniMorph project and raw text examples from Wikipedia that provide sentence contexts for the unambiguous UniMorph examples. Despite these being unambiguous examples, the model successfully generalizes from them, leading to improved results (both overall, and especially on unseen words) in comparison to a baseline that does not use context.

-

Data Augmentation for BERT Fine-Tuning in Open-Domain Question Answering; Wei Yang, Yuqing Xie, Luchen Tan, Kun Xiong, Ming Li, Jimmy Lin ; Recently, a simple combination of passage retrieval using off-the-shelf IR techniques and a BERT reader was found to be very effective for question answering directly on Wikipedia, yielding a large improvement over the previous state of the art on a standard benchmark dataset. In this paper, we present a data augmentation technique using distant supervision that exploits positive as well as negative examples. We apply a stage-wise approach to fine tuning BERT on multiple datasets, starting with data that is "furthest" from the test data and ending with the "closest". Experimental results show large gains in effectiveness over previous approaches on English QA datasets, and we establish new baselines on two recent Chinese QA datasets.

-

What Else Can Fool Deep Learning? Addressing Color Constancy Errors on Deep Neural Network Performance; Mahmoud Afifi, Michael S Brown ; There is active research targeting local image manipulations that can fool deep neural networks (DNNs) into producing incorrect results. This paper examines a type of global image manipulation that can produce similar adverse effects. Specifically, we explore how strong color casts caused by incorrectly applied computational color constancy - referred to as white balance (WB) in photography - negatively impact the performance of DNNs targeting image segmentation and classification. In addition, we discuss how existing image augmentation methods used to improve the robustness of DNNs are not well suited for modeling WB errors. To address this problem, a novel augmentation method is proposed that can emulate accurate color constancy degradation. We also explore pre-processing training and testing images with a recent WB correction algorithm to reduce the effects of incorrectly white-balanced images. We examine both augmentation and pre-processing strategies on different datasets and demonstrate notable improvements on the CIFAR-10, CIFAR-100, and ADE20K datasets. [repo](https://github.com/mahmoudnafifi/WB_color_augmenter)

-

Data augmentation for improving deep learning in image classification problem; Agnieszka Mikołajczyk, Michał Grochowski; These days deep learning is the fastest-growing field within Machine Learning (ML) and Deep Neural Networks (DNN). Among the many DNN structures, Convolutional Neural Networks (CNN) are currently the main tool used for image analysis and classification. Despite great achievements and perspectives, deep neural networks and the accompanying learning algorithms still have some relevant challenges to tackle. In this paper, we have focused on the most frequently mentioned problem in the field of machine learning, that is, the lack of a sufficient amount of training data or uneven class balance within the datasets. One of the ways of dealing with this problem is so-called data augmentation. In the paper we have compared and analyzed multiple methods of data augmentation in the task of image classification, starting from classical image transformations like rotating, cropping, zooming, and histogram-based methods and finishing at Style Transfer and Generative Adversarial Networks, along with representative examples. Next, we presented our own method of data augmentation based on image style transfer. The method makes it possible to generate new images of high perceptual quality that combine the content of a base image with the appearance of another one. The newly created images can be used to pre-train the given neural network in order to improve the efficiency of the training process. The proposed method is validated on three medical case studies: skin melanoma diagnosis, histopathological images and breast magnetic resonance imaging (MRI) scan analysis, utilizing image classification in order to provide a diagnosis. In such problems data deficiency is one of the most relevant issues. Finally, we discuss the advantages and disadvantages of the methods being analyzed.

-

Synthetic data augmentation using GAN for improved liver lesion classification; Maayan Frid-Adar, Eyal Klang, Michal Amitai, Jacob Goldberger, Hayit Greenspan; In this paper, we present a data augmentation method that generates synthetic medical images using Generative Adversarial Networks (GANs). We propose a training scheme that first uses classical data augmentation to enlarge the training set and then further enlarges the data size and its diversity by applying GAN techniques for synthetic data augmentation. Our method is demonstrated on a limited dataset of computed tomography (CT) images of 182 liver lesions (53 cysts, 64 metastases and 65 hemangiomas). The classification performance using only classic data augmentation yielded 78.6% sensitivity and 88.4% specificity. By adding the synthetic data augmentation the results significantly increased to 85.7% sensitivity and 92.4% specificity.

-

Alcoholism Detection by Data Augmentation and Convolutional Neural Network with Stochastic Pooling; Shui-Hua Wang, Yi-Ding Lv, Yuxiu Sui, Shuai Liu, Su-Jing Wang, Yu-Dong Zhang; Alcohol use disorder (AUD) is an important brain disease. It alters the brain structure. Recently, scholars have tended to use computer-vision-based techniques to detect AUD. We collected 235 subjects, 114 alcoholic and 121 non-alcoholic. Among the 235 images, 100 were used as the training set, and a data augmentation method was applied. The remaining 135 images were used as the test set. Further, we chose the latest powerful technique, a convolutional neural network (CNN) based on convolutional layer, rectified linear unit layer, pooling layer, fully connected layer, and softmax layer. We also compared three different pooling techniques: max pooling, average pooling, and stochastic pooling. The results showed that our method achieved a sensitivity of 96.88%, a specificity of 97.18%, and an accuracy of 97.04%. Our method was better than three state-of-the-art approaches. Besides, stochastic pooling performed better than max pooling and average pooling. We validated that a CNN with five convolution layers and two fully connected layers performed the best. The GPU yielded a 149× acceleration in training and a 166× acceleration in test, compared to CPU.

-

mixup: Beyond Empirical Risk Minimization; Hongyi Zhang, Moustapha Cisse, Yann N. Dauphin, David Lopez-Paz; Large deep neural networks are powerful, but exhibit undesirable behaviors such as memorization and sensitivity to adversarial examples. In this work, we propose mixup, a simple learning principle to alleviate these issues. In essence, mixup trains a neural network on convex combinations of pairs of examples and their labels. By doing so, mixup regularizes the neural network to favor simple linear behavior in-between training examples. Our experiments on the ImageNet-2012, CIFAR-10, CIFAR-100, Google commands and UCI datasets show that mixup improves the generalization of state-of-the-art neural network architectures. We also find that mixup reduces the memorization of corrupt labels, increases the robustness to adversarial examples, and stabilizes the training of generative adversarial networks.
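
The "convex combinations of pairs of examples and their labels" can be illustrated with a short NumPy sketch. It is a minimal example under stated assumptions, not the authors' code: the helper name `mixup_batch` is hypothetical, labels are assumed to be one-hot encoded, pairs are formed by shuffling the batch against itself, and a single mixing weight drawn from Beta(alpha, alpha) is shared by the whole batch.

```python
import numpy as np

def mixup_batch(x, y_onehot, alpha=0.2, rng=None):
    """Mix a batch with a shuffled copy of itself.

    x        : array of shape (batch, ...) holding the inputs
    y_onehot : array of shape (batch, num_classes) holding one-hot labels
    alpha    : Beta distribution parameter controlling mixing strength
    Returns the mixed inputs, the mixed labels and the weight lam.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)       # mixing weight in [0, 1]
    perm = rng.permutation(len(x))     # partner index for each example

    # Convex combination of each example (and label) with its partner.
    x_mixed = lam * x + (1.0 - lam) * x[perm]
    y_mixed = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mixed, y_mixed, lam
```

Because both inputs and labels use the same weight, each mixed label row still sums to one and can be fed to a cross-entropy loss that accepts soft targets.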

-