Code and models for the paper Smooth Adversarial Training.

- Releasing pretrained ResNet and EfficientNet models

- ResNet single-GPU inference

- ResNet multi-GPU inference

- ResNet adversarial robustness evaluation

- ResNet adversarial training

- EfficientNet single-GPU inference

- EfficientNet multi-GPU inference

- EfficientNet adversarial robustness evaluation

- EfficientNet adversarial training

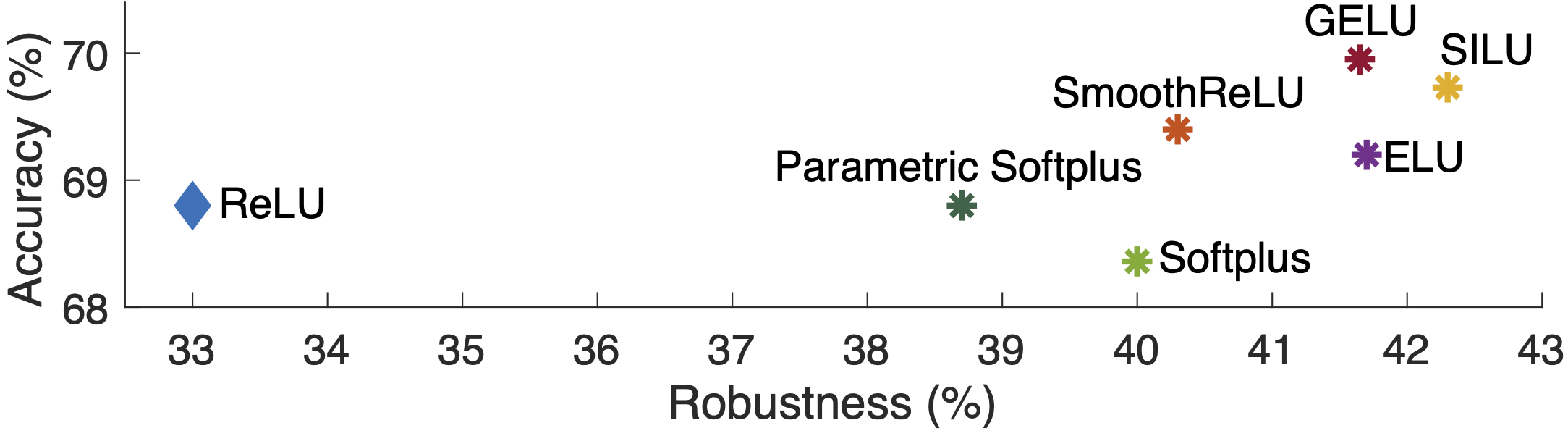

The widely used ReLU activation function significantly weakens adversarial training because of its non-smooth nature. In this project, we developed smooth adversarial training (SAT), which replaces ReLU with its smooth approximations (e.g., SiLU, softplus, SmoothReLU) to strengthen adversarial training.
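To make the smoothness argument concrete, here is a small pure-Python sketch that compares ReLU's gradient with the gradients of softplus, SiLU, and SmoothReLU just left and right of zero. ReLU's gradient jumps from 0 to 1 at the origin, while the smooth approximations change continuously. This is an illustration, not code from this repository; in particular, the SmoothReLU form used here (x − log(αx + 1)/α for x ≥ 0, and 0 otherwise) and the value α = 1.0 are assumptions made for the example.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


# Activation functions and their analytic derivatives.
def relu(x: float) -> float:
    return max(x, 0.0)


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0  # jumps from 0 to 1 at x = 0


def softplus(x: float) -> float:
    # log(1 + e^x), rewritten to avoid overflow for large |x|
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softplus_grad(x: float) -> float:
    return sigmoid(x)


def silu(x: float) -> float:
    return x * sigmoid(x)


def silu_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


ALPHA = 1.0  # hypothetical SmoothReLU parameter, chosen only for illustration


def smoothrelu(x: float, alpha: float = ALPHA) -> float:
    # Assumed form: x - log(alpha * x + 1) / alpha for x >= 0, else 0
    return x - math.log1p(alpha * x) / alpha if x >= 0 else 0.0


def smoothrelu_grad(x: float, alpha: float = ALPHA) -> float:
    return alpha * x / (alpha * x + 1.0) if x >= 0 else 0.0


def gradient_jump(grad_fn, eps: float = 1e-6) -> float:
    """Gradient just right of zero minus gradient just left of zero."""
    return grad_fn(eps) - grad_fn(-eps)


if __name__ == "__main__":
    for name, g in [("relu", relu_grad), ("softplus", softplus_grad),
                    ("silu", silu_grad), ("smoothrelu", smoothrelu_grad)]:
        print(f"{name:>10}: gradient jump at 0 = {gradient_jump(g):.2e}")
```

A jump of 1 for ReLU versus roughly 1e-6 for the smooth variants is the discontinuity that smooth activations remove from the input gradients used by the attacker during adversarial training.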

On ImageNet with ResNet-50, the best result reported for SAT is 69.7% accuracy and 42.3% robustness, beating its ReLU counterpart by 0.9% in accuracy and 9.3% in robustness.

We also explore the limits of SAT with larger networks. We obtain the best result by using EfficientNet-L1, which achieves 82.2% accuracy and 58.6% robustness on ImageNet.

Dependencies:

- TensorFlow ≥ 1.6 with GPU support

- Tensorpack ≥ 0.9.8

- OpenCV ≥ 3

- ImageNet data in its standard directory structure for ResNet

- ImageNet data in TFRecord format for EfficientNet

- [gdown](https://pypi.org/project/gdown/) for downloading pretrained checkpoints
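Before running anything, it can help to confirm that the installed library versions meet the minimums listed above. The sketch below is a hypothetical helper, not part of this repository: it assumes the usual import names (`tensorflow`, `tensorpack`, `cv2`) and that each module exposes a `__version__` string, and it does not check GPU support or the ImageNet data layout.

```python
import importlib
import re
from typing import Dict, Optional, Tuple

# Minimum versions from the dependency list; keys are assumed import names.
MINIMUM_VERSIONS = {
    "tensorflow": "1.6",
    "tensorpack": "0.9.8",
    "cv2": "3",
}


def parse_version(version: str, width: int = 3) -> Tuple[int, ...]:
    """Turn '1.15.0-rc1' into (1, 15, 0); missing components become 0."""
    parts = []
    for piece in version.split(".")[:width]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    parts += [0] * (width - len(parts))
    return tuple(parts)


def meets_minimum(installed: str, required: str) -> bool:
    return parse_version(installed) >= parse_version(required)


def check_environment() -> Dict[str, Tuple[Optional[str], bool]]:
    """Map each module to (installed version or None, whether it is new enough)."""
    report = {}
    for module_name, required in MINIMUM_VERSIONS.items():
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            report[module_name] = (None, False)
            continue
        installed = getattr(module, "__version__", "0")
        report[module_name] = (installed, meets_minimum(installed, required))
    return report


if __name__ == "__main__":
    for name, (version, ok) in check_environment().items():
        status = "OK" if ok else "missing or too old"
        print(f"{name}: {version or 'not installed'} -> {status}")
```

Padding every version to three numeric components keeps comparisons such as `"2"` versus `"2.0"` consistent, and pre-release suffixes like `-rc1` are ignored.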

Note:

- Scripts are provided for downloading the pretrained ResNet and EfficientNet checkpoints from Google Drive.

- For robustness evaluation, the maximum perturbation per pixel is 4 (on the [0, 255] pixel scale, matching `--attack-epsilon=4.0`), and the attack is untargeted.

- The ResNet numbers reported here differ slightly from those in the paper because the image loader used here differs slightly from the one in the original training/evaluation code.
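The evaluation setting in these notes (a per-pixel budget of 4, an untargeted attack, and the `--attack-step-size=1.0` used in the evaluation commands) corresponds to an L∞ projected gradient descent (PGD) attack. The following is a minimal pure-Python sketch of untargeted L∞ PGD on a flat list of pixel values, not this repository's attack code. It assumes the loss gradient with respect to the input is supplied as a callable, pixels lie in [0, 255], and the attack starts from the clean image (whether the repository uses a random start is not stated here).

```python
from typing import Callable, List

Vector = List[float]


def sign(v: float) -> float:
    return float((v > 0) - (v < 0))


def pgd_linf_untargeted(
    x: Vector,
    loss_grad: Callable[[Vector], Vector],
    epsilon: float = 4.0,
    step_size: float = 1.0,
    num_steps: int = 10,
    pixel_min: float = 0.0,
    pixel_max: float = 255.0,
) -> Vector:
    """Untargeted L-infinity PGD: step along the sign of the input gradient of
    the loss on the true label (to increase it), then project back into the
    epsilon-ball around x intersected with the valid pixel range."""
    x_adv = list(x)  # start from the clean image (no random start in this sketch)
    for _ in range(num_steps):
        grad = loss_grad(x_adv)
        stepped = [xa + step_size * sign(g) for xa, g in zip(x_adv, grad)]
        # Clip to [max(x - eps, pixel_min), min(x + eps, pixel_max)].
        x_adv = [
            min(max(s, xo - epsilon, pixel_min), xo + epsilon, pixel_max)
            for s, xo in zip(stepped, x)
        ]
    return x_adv


if __name__ == "__main__":
    # Toy example: a linear "loss" w . x, whose input gradient is the constant w.
    w = [1.0, -1.0, 1.0]
    clean = [100.0, 100.0, 254.0]
    print(pgd_linf_untargeted(clean, lambda _: w, num_steps=10))
    # prints [104.0, 96.0, 255.0]
```

With a step size of 1 and a budget of 4, a pixel needs at least four steps to reach the edge of the budget; the 10, 20, and 50-step attacks in the tables below spend the remaining steps searching inside that ball, which is why robustness typically drops slightly as the step count grows.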

- To run ResNet-50 with different activation functions

```bash
python main.py --activation-name=$YOUR_ACTIVATION_FUNCTION --load=$YOUR_CKPT_PATH --data=$PATH_TO_IMAGENET --eval-attack-iter=$YOUR_ATTACK_ITERATION_FOR_EVAL --batch=$YOUR_EVALUATION_BATCH_SIZE --eval --attack-epsilon=4.0 -d=50 --attack-step-size=1.0
```

| ResNet-50 | Flag | Clean accuracy (%) | 10-step PGD robustness (%) | 20-step PGD robustness (%) | 50-step PGD robustness (%) |
|---|---|---|---|---|---|
| ReLU | `--activation-name=relu` | 68.7 | 35.3 | 33.7 | 33.1 |
| SiLU | `--activation-name=silu` | 69.7 | 43.0 | 42.2 | 41.9 |
| ELU | `--activation-name=elu` | 69.0 | 41.6 | 40.9 | 40.7 |
| GELU | `--activation-name=gelu` | 69.9 | 42.6 | 41.8 | 41.5 |
| SmoothReLU | `--activation-name=smoothrelu` | 69.3 | 41.3 | 40.4 | 40.1 |
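Reproducing a table like this means running the same evaluation once per activation and per PGD iteration count. The sketch below only generates the argument lists, using the flags from the ResNet-50 command shown above; the checkpoint paths, ImageNet path, and batch size are hypothetical placeholders, and each activation is assumed to have its own checkpoint.

```python
import shlex
from typing import Dict, List

# Flags copied from the ResNet-50 evaluation command.
FIXED_FLAGS = ["--eval", "--attack-epsilon=4.0", "-d=50", "--attack-step-size=1.0"]

ACTIVATIONS = ["relu", "silu", "elu", "gelu", "smoothrelu"]
ATTACK_ITERS = [10, 20, 50]  # the 10/20/50-step PGD columns


def build_eval_commands(ckpt_paths: Dict[str, str], data_dir: str,
                        batch_size: int) -> List[List[str]]:
    """Return one argv list per (activation, PGD iteration count) pair."""
    commands = []
    for activation in ACTIVATIONS:
        for iters in ATTACK_ITERS:
            commands.append([
                "python", "main.py",
                f"--activation-name={activation}",
                f"--load={ckpt_paths[activation]}",
                f"--data={data_dir}",
                f"--eval-attack-iter={iters}",
                f"--batch={batch_size}",
                *FIXED_FLAGS,
            ])
    return commands


if __name__ == "__main__":
    # Hypothetical paths: replace with your own checkpoints and ImageNet copy.
    ckpts = {a: f"/path/to/ckpts/resnet50_{a}" for a in ACTIVATIONS}
    for argv in build_eval_commands(ckpts, "/path/to/imagenet", batch_size=64):
        print(" ".join(shlex.quote(arg) for arg in argv))
```

Printing the commands rather than launching them keeps the sketch side-effect free; each argv list could instead be passed to `subprocess.run`.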

- To run ResNet with SAT at different scales

```bash
python main.py -d=$NETWORK_DEPTH --res2-bottleneck=$RESNEXT_BOTTLENECK --group=$RESNEXT_GROUP --input-size=$INPUT_SIZE --load=$YOUR_CKPT_PATH --data=$PATH_TO_IMAGENET --eval-attack-iter=$YOUR_ATTACK_ITERATION_FOR_EVAL --batch=$YOUR_EVALUATION_BATCH_SIZE --eval --attack-epsilon=4.0 --attack-step-size=1.0 --activation-name=silu
```

| SAT ResNet | Flags | Clean accuracy (%) | 10-step PGD robustness (%) | 20-step PGD robustness (%) | 50-step PGD robustness (%) |
|---|---|---|---|---|---|
| ResNet-50 | `-d=50 --res2-bottleneck=64 --group=1 --input-size=224` | 69.7 | 43.0 | 42.2 | 41.9 |
| (2X deeper) ResNet-101 | `-d=101 --res2-bottleneck=64 --group=1 --input-size=224` | 72.9 | 46.4 | 45.5 | 45.2 |
| (3X deeper) ResNet-152 | `-d=152 --res2-bottleneck=64 --group=1 --input-size=224` | 74.0 | 47.3 | 46.2 | 45.8 |
| (2X wider) ResNeXt-32x4d | `-d=50 --res2-bottleneck=4 --group=32 --input-size=224` | 71.1 | 43.3 | 42.5 | 42.2 |
| (4X wider) ResNeXt-32x8d | `-d=50 --res2-bottleneck=8 --group=32 --input-size=224` | 73.3 | 46.3 | 45.2 | 44.8 |
| (299 resolution) ResNet-50 | `-d=50 --res2-bottleneck=64 --group=1 --input-size=299` | 70.7 | 44.6 | 43.8 | 43.6 |
| (380 resolution) ResNet-50 | `-d=50 --res2-bottleneck=64 --group=1 --input-size=380` | 71.6 | 44.9 | 44.1 | 43.8 |
| (3X deeper & 4X wider & 380 resolution) ResNeXt152-32x8d | `-d=152 --res2-bottleneck=8 --group=32 --input-size=380` | 78.0 | 53.0 | 51.7 | 51.0 |
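The scaled-up models differ only in the four architecture flags listed per row. One way to avoid typos is to keep those rows as data and merge them with the shared evaluation flags, as in this hypothetical sketch; it emits exactly one `-d` value per command, because with argparse-style parsing a repeated option usually keeps its last value and would silently override the intended depth. The model keys, paths, and batch size are illustrative.

```python
import shlex
from typing import Dict, List

# Architecture flags per model, copied from the SAT ResNet table.
SCALE_CONFIGS: Dict[str, Dict[str, int]] = {
    "ResNet-50": {"d": 50, "res2-bottleneck": 64, "group": 1, "input-size": 224},
    "ResNet-101": {"d": 101, "res2-bottleneck": 64, "group": 1, "input-size": 224},
    "ResNet-152": {"d": 152, "res2-bottleneck": 64, "group": 1, "input-size": 224},
    "ResNeXt-32x4d": {"d": 50, "res2-bottleneck": 4, "group": 32, "input-size": 224},
    "ResNeXt-32x8d": {"d": 50, "res2-bottleneck": 8, "group": 32, "input-size": 224},
    "ResNet-50 (299)": {"d": 50, "res2-bottleneck": 64, "group": 1, "input-size": 299},
    "ResNet-50 (380)": {"d": 50, "res2-bottleneck": 64, "group": 1, "input-size": 380},
    "ResNeXt152-32x8d": {"d": 152, "res2-bottleneck": 8, "group": 32, "input-size": 380},
}

SHARED_FLAGS = ["--eval", "--attack-epsilon=4.0", "--attack-step-size=1.0",
                "--activation-name=silu"]


def arch_flags(cfg: Dict[str, int]) -> List[str]:
    # "-d" is a short option; the remaining keys are long options.
    return [f"-d={cfg['d']}"] + [f"--{k}={v}" for k, v in cfg.items() if k != "d"]


def build_command(model: str, ckpt: str, data_dir: str,
                  attack_iter: int, batch_size: int) -> List[str]:
    return (["python", "main.py"] + arch_flags(SCALE_CONFIGS[model]) + [
        f"--load={ckpt}",
        f"--data={data_dir}",
        f"--eval-attack-iter={attack_iter}",
        f"--batch={batch_size}",
    ] + SHARED_FLAGS)


if __name__ == "__main__":
    # Hypothetical checkpoint path, data path, and batch size.
    argv = build_command("ResNeXt152-32x8d", "/path/to/ckpt", "/path/to/imagenet",
                         attack_iter=10, batch_size=16)
    print(" ".join(shlex.quote(arg) for arg in argv))
```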

- To run EfficientNet with SAT at different scales

```bash
python main.py --model_name=$EFFICIENTNET_NAME --moving_average_decay=$EMA_VALUE --ckpt_path=$YOUR_CKPT_PATH --data_dir=$PATH_TO_IMAGENET_TFRECORDS --eval_batch_size=$YOUR_EVALUATION_BATCH_SIZE --use_tpu=false --mode=eval --use_bfloat16=false --transpose_input=false --strategy=gpus --batch_norm_momentum=0.9 --batch_norm_epsilon=1e-5 --sat_preprocessing=True
```

| SAT EfficientNet | Flags | Clean accuracy (%) | 10-step PGD robustness (%) | 20-step PGD robustness (%) | 50-step PGD robustness (%) |
|---|---|---|---|---|---|
| EfficientNet-B0 | `--model_name=efficientnet-b0 --moving_average_decay=0` | 65.2 | TODO | TODO | TODO |
| EfficientNet-B1 | `--model_name=efficientnet-b1 --moving_average_decay=0` | 68.6 | TODO | TODO | TODO |
| EfficientNet-B2 | `--model_name=efficientnet-b2 --moving_average_decay=0` | 70.3 | TODO | TODO | TODO |
| EfficientNet-B3 | `--model_name=efficientnet-b3 --moving_average_decay=0` | 73.1 | TODO | TODO | TODO |
| EfficientNet-B4 | `--model_name=efficientnet-b4 --moving_average_decay=0` | 75.9 | TODO | TODO | TODO |
| EfficientNet-B5 | `--model_name=efficientnet-b5 --moving_average_decay=0` | 77.8 | TODO | TODO | TODO |
| EfficientNet-B6 | `--model_name=efficientnet-b6 --moving_average_decay=0` | 79.2 | TODO | TODO | TODO |
| EfficientNet-B7 | `--model_name=efficientnet-b7 --moving_average_decay=0` | 79.8 | TODO | TODO | TODO |
| EfficientNet-L1 | `--model_name=efficientnet-bL1 --moving_average_decay=0` | 80.7 | TODO | TODO | TODO |
| EfficientNet-L1-Enhanced | `--model_name=efficientnet-bL1 --moving_average_decay=0.9999` | 82.2 | TODO | TODO | TODO |
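In the commands, the two EfficientNet-L1 rows differ only in `--moving_average_decay` (0 versus 0.9999). As we understand the upstream EfficientNet code, this is the decay of an exponential moving average (EMA) of the model weights, and a nonzero value makes evaluation use the averaged weights. The sketch below illustrates only the EMA update rule, shadow ← decay · shadow + (1 − decay) · weight, which is the rule documented for TensorFlow's `tf.train.ExponentialMovingAverage`; the toy weight trajectory is hypothetical, and the sketch says nothing about how the Enhanced checkpoint itself was trained.

```python
from typing import Dict, List


def ema_update(shadow: Dict[str, float], weights: Dict[str, float],
               decay: float) -> Dict[str, float]:
    """One EMA step: shadow <- decay * shadow + (1 - decay) * weights."""
    return {name: decay * shadow[name] + (1.0 - decay) * weights[name]
            for name in shadow}


def run_ema(weight_history: List[Dict[str, float]], decay: float) -> Dict[str, float]:
    """Track the EMA over a sequence of weight snapshots, one per training step."""
    shadow = dict(weight_history[0])  # the shadow copy starts at the first weights
    for weights in weight_history[1:]:
        shadow = ema_update(shadow, weights, decay)
    return shadow


if __name__ == "__main__":
    # Toy trajectory oscillating between 0.5 and 1.5 (hypothetical values).
    history = [{"w": 1.0 + (0.5 if step % 2 else -0.5)} for step in range(1000)]
    print(run_ema(history, decay=0.0))   # decay 0: shadow equals the last weights
    print(run_ema(history, decay=0.99))  # decay near 1: oscillation is averaged out
```

With decay 0 the shadow simply tracks the latest weights, which matches evaluating the raw weights; with decay close to 1 the shadow settles near the center of the oscillation. Larger decays such as 0.9999 average over more steps and therefore need many more updates before the shadow forgets its initial value.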

Most of this code comes from ImageNet-Adversarial-Training and EfficientNet. We thank Yingwei Li and Jieru Mei for helping open-source the code and models.

If you use our code or models, or wish to refer to our results, please use the following BibTeX entry:

```bibtex
@article{Xie_2020_SAT,
  author  = {Xie, Cihang and Tan, Mingxing and Gong, Boqing and Yuille, Alan and Le, Quoc V},
  title   = {Smooth Adversarial Training},
  journal = {arXiv preprint arXiv:2006.14536},
  year    = {2020}
}
```