This repository contains a reproduction and extension of "Diffusion Models Already Have a Semantic Latent Space" by Kwon et al. (2023).

To read the full report with detailed information on our reproduction experiments and extension study, please refer to our blog post.

To install the requirements:

```bash
conda env create -f environment.yml
```

To download the CelebA-HQ dataset (to src/data/) and the pretrained weights of its diffusion model (to src/lib/asyrp/pretrained/):

```bash
bash src/lib/utils/data_download.sh celeba_hq src/
rm a.zip
mkdir src/lib/asyrp/pretrained/
python src/lib/utils/download_weights.py
```

Training and inference scripts for all models are located in the src/lib/asyrp/scripts/ folder.

To evaluate and reproduce our results, refer to the corresponding notebooks in the demos/ folder.

You can download our pre-trained models and pre-computed results via our Google Drive directory.

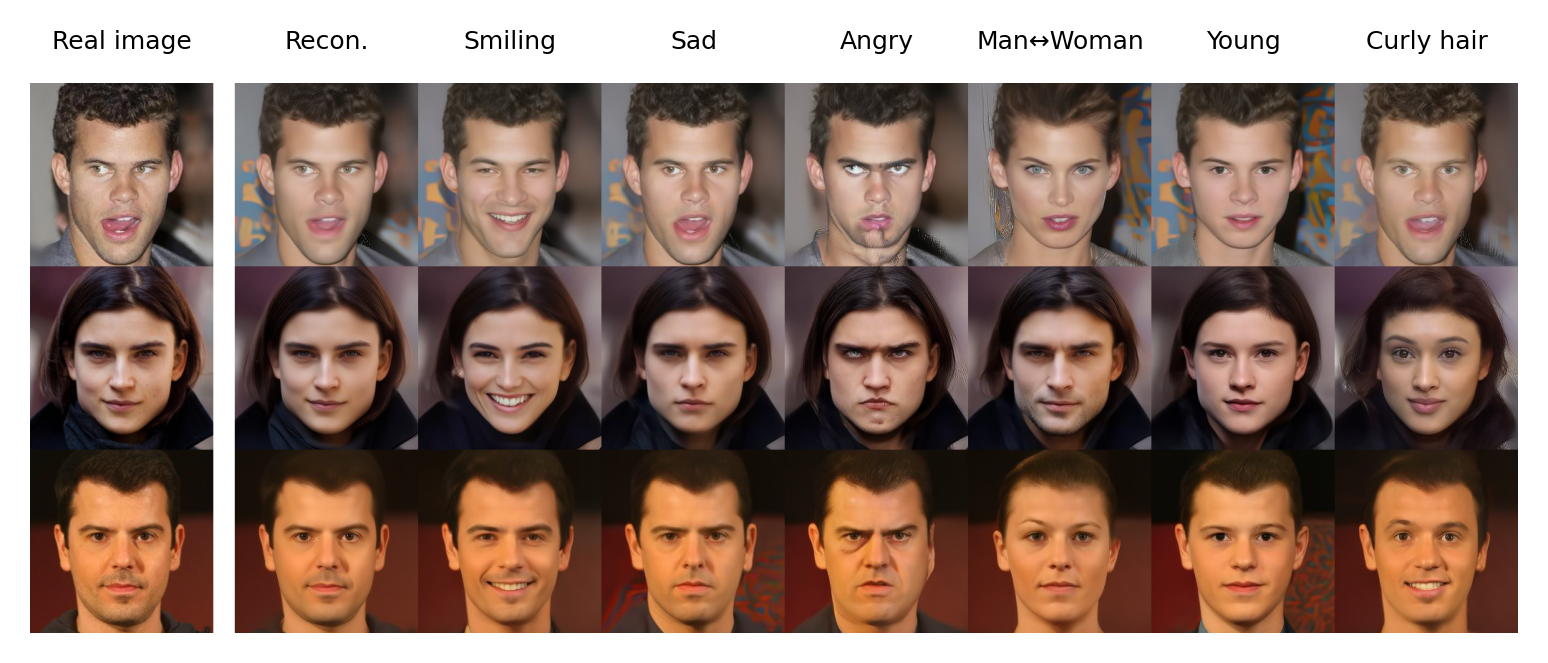

As part of our reproduction study, we successfully replicate the results achieved by the original authors for in-domain attributes:

*Editing results for in-domain attributes.*

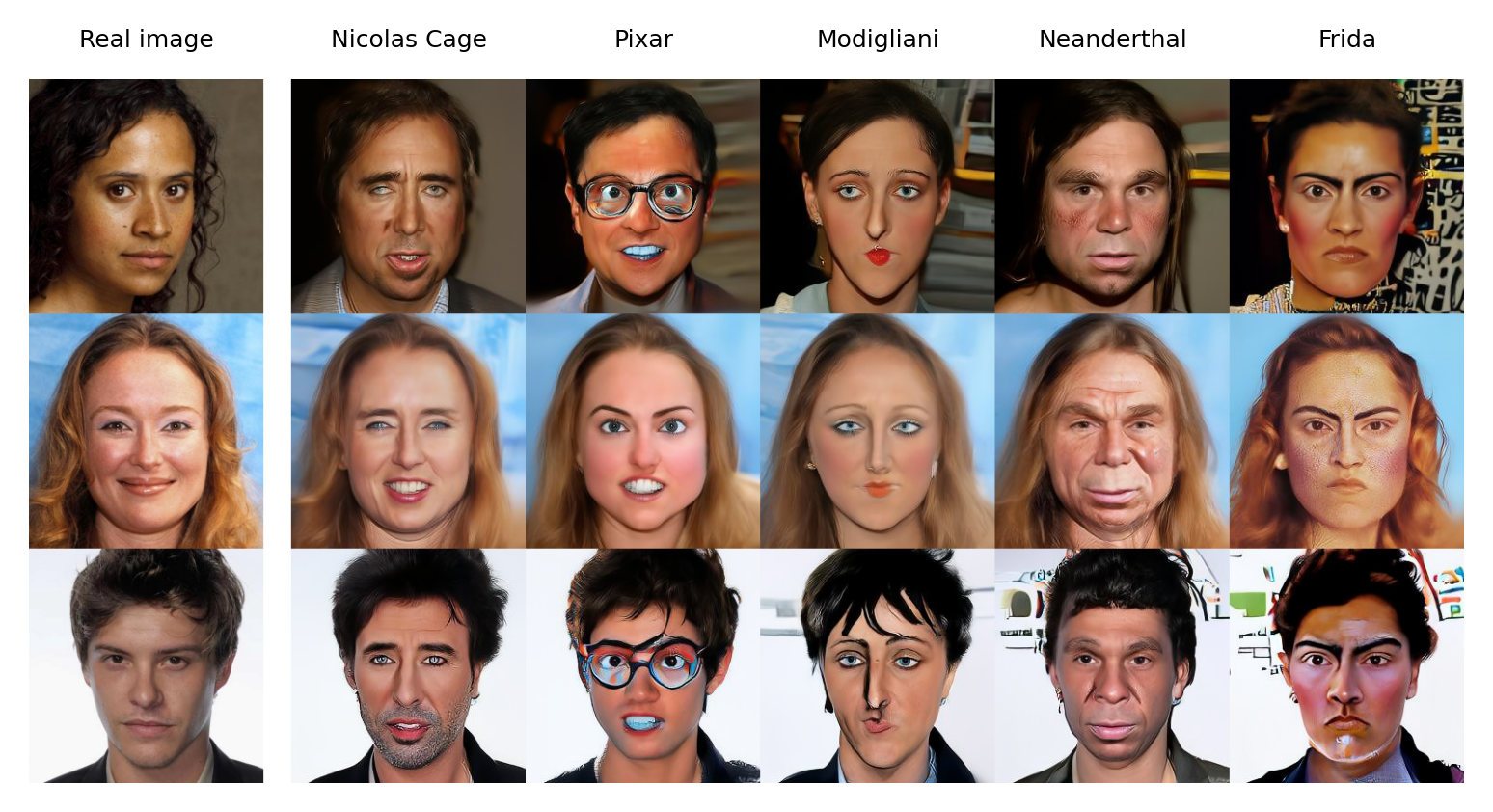

In the same manner, we replicate the results for unseen-domain attributes:

*Editing results for unseen-domain attributes.*

Nevertheless, we are unable to replicate the directional CLIP scores reported by the original authors:

| Model | Smiling (IN) | Sad (IN) | Tanned (IN) | Pixar (UN) | Neanderthal (UN) |
|---|---|---|---|---|---|
| Original | 0.921 | 0.964 | 0.991 | 0.956 | 0.805 |
| Reproduced | 0.955 (0.048) | 0.993 (0.037) | 0.933 (0.040) | 0.931 (0.032) | 0.913 (0.035) |
| Reproduced | 0.969 (0.047) | 0.999 (0.035) | 0.973 (0.036) | 0.942 (0.031) | 0.952 (0.035) |

*Directional CLIP score for in-domain (IN) and unseen-domain (UN) attributes. Standard deviations are reported in parentheses.*
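For readers who want to recompute a score of this kind, below is a minimal NumPy sketch of the directional CLIP similarity as it is commonly defined: the cosine similarity between the change in CLIP image embeddings (edited minus source image) and the change in CLIP text embeddings (target minus source prompt). It assumes the CLIP embeddings are already computed; the function names are hypothetical and not taken from this repository, and whether the numbers in the table use exactly this variant (rather than, for example, 1 - cosine) is an assumption. Reporting the mean and population standard deviation over a set of edits yields entries in the table's "mean (std)" format.

```python
import numpy as np


def directional_clip_similarity(img_src, img_edit, txt_src, txt_tgt):
    """Cosine similarity between the image-space and text-space edit directions.

    All four inputs are 1-D CLIP embeddings of equal length, assumed to be
    precomputed by a CLIP image/text encoder (hypothetical helper).
    """
    delta_img = np.asarray(img_edit, dtype=float) - np.asarray(img_src, dtype=float)
    delta_txt = np.asarray(txt_tgt, dtype=float) - np.asarray(txt_src, dtype=float)
    denom = np.linalg.norm(delta_img) * np.linalg.norm(delta_txt)
    if denom == 0:
        raise ValueError("edit direction has zero length")
    return float(delta_img @ delta_txt / denom)


def format_mean_std(scores):
    """Format per-image scores as 'mean (std)'; population std (ddof=0) is an assumption."""
    scores = np.asarray(scores, dtype=float)
    return f"{scores.mean():.3f} ({scores.std():.3f})"


# Toy 2-D "embeddings": the image moved in the same direction as the text.
print(directional_clip_similarity([0, 0], [1, 0], [0, 0], [2, 0]))  # 1.0
print(format_mean_std([1.0, 3.0]))  # 2.000 (1.000)
```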

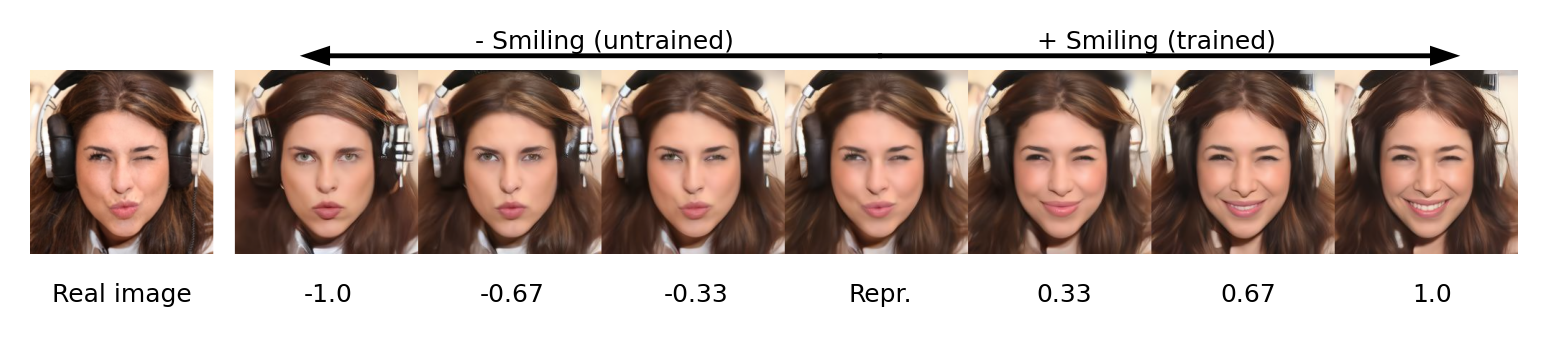

However, our results successfully demonstrate the linearity of edits with respect to the editing strength:

*Image edits for the "smiling" attribute with editing strength in the range from -1 to 1.*
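To make the notion of linearity concrete, here is a toy NumPy sketch. It assumes, following the formulation in the Asyrp paper, that an edit shifts the U-Net bottleneck feature map h (the "h-space") by a learned direction Δh, and that the editing strength γ simply scales that direction: h' = h + γΔh. The shapes and random arrays are placeholders rather than real model features, and `edit_h` is a hypothetical helper, not a function from this repository.

```python
import numpy as np


def edit_h(h, delta_h, strength):
    """Shift feature map h along direction delta_h, scaled by the editing strength.

    Assumption: edits are applied additively, h' = h + strength * delta_h.
    """
    return h + strength * delta_h


rng = np.random.default_rng(0)
h = rng.standard_normal((4, 8, 8))        # placeholder h-space features (C, H, W)
delta_h = rng.standard_normal((4, 8, 8))  # placeholder editing direction

strengths = np.linspace(-1.0, 1.0, 5)     # -1.0, -0.5, 0.0, 0.5, 1.0
shifts = [np.linalg.norm(edit_h(h, delta_h, s) - h) for s in strengths]

# The size of the shift grows linearly with |strength|, and negative
# strengths move h in the opposite direction along delta_h.
for s, d in zip(strengths, shifts):
    print(f"strength {s:+.1f}: |h' - h| = {d:.3f}")
```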

We also examine the effect of using additional time steps during generation:

*Comparison of generated images for the "smiling" attribute with 40 and 1000 time steps during generation.*
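Assuming the diffusion model was trained with 1000 timesteps, generating with 40 steps means sampling on a subsequence of those timesteps. A minimal sketch, assuming the common DDIM-style "uniform" skipping scheme (the exact schedule used by the scripts in src/lib/asyrp/scripts/ is not stated here, and `uniform_timesteps` is a hypothetical helper):

```python
import numpy as np


def uniform_timesteps(num_train_steps=1000, num_gen_steps=40):
    """Evenly spaced timesteps for sampling, from noisiest to cleanest.

    Assumes a DDIM-style 'uniform' skip: every (num_train_steps // num_gen_steps)-th
    training timestep is visited, in descending order.
    """
    if not 1 <= num_gen_steps <= num_train_steps:
        raise ValueError("num_gen_steps must be in [1, num_train_steps]")
    skip = num_train_steps // num_gen_steps
    return (np.arange(num_gen_steps) * skip)[::-1]


coarse = uniform_timesteps(1000, 40)   # 40 steps: 975, 950, ..., 25, 0
full = uniform_timesteps(1000, 1000)   # 1000 steps: 999, 998, ..., 0
print(len(coarse), coarse[:3], len(full), full[:3])
```

With 40 steps, each denoising update therefore covers 25 training timesteps instead of one.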

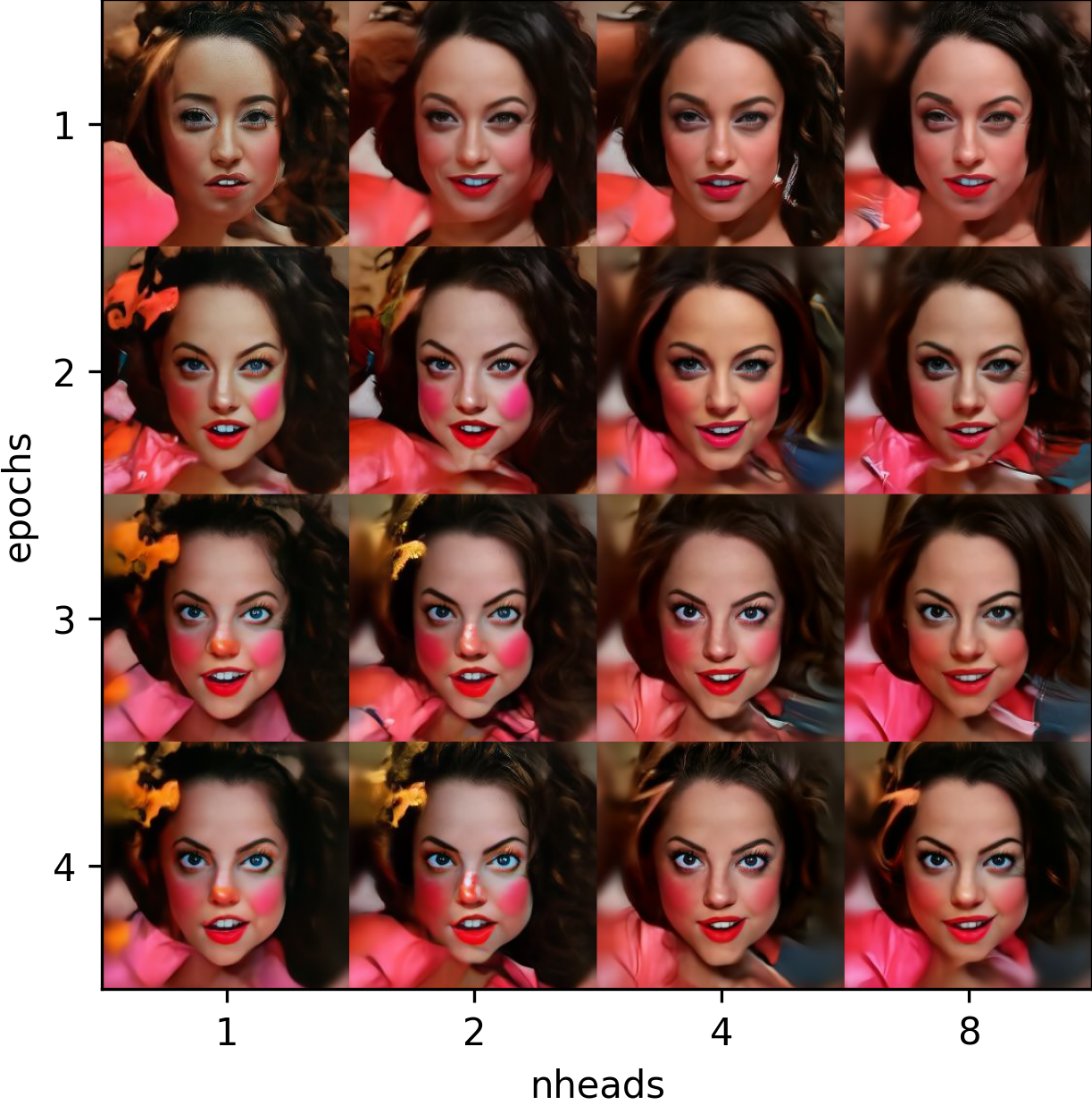

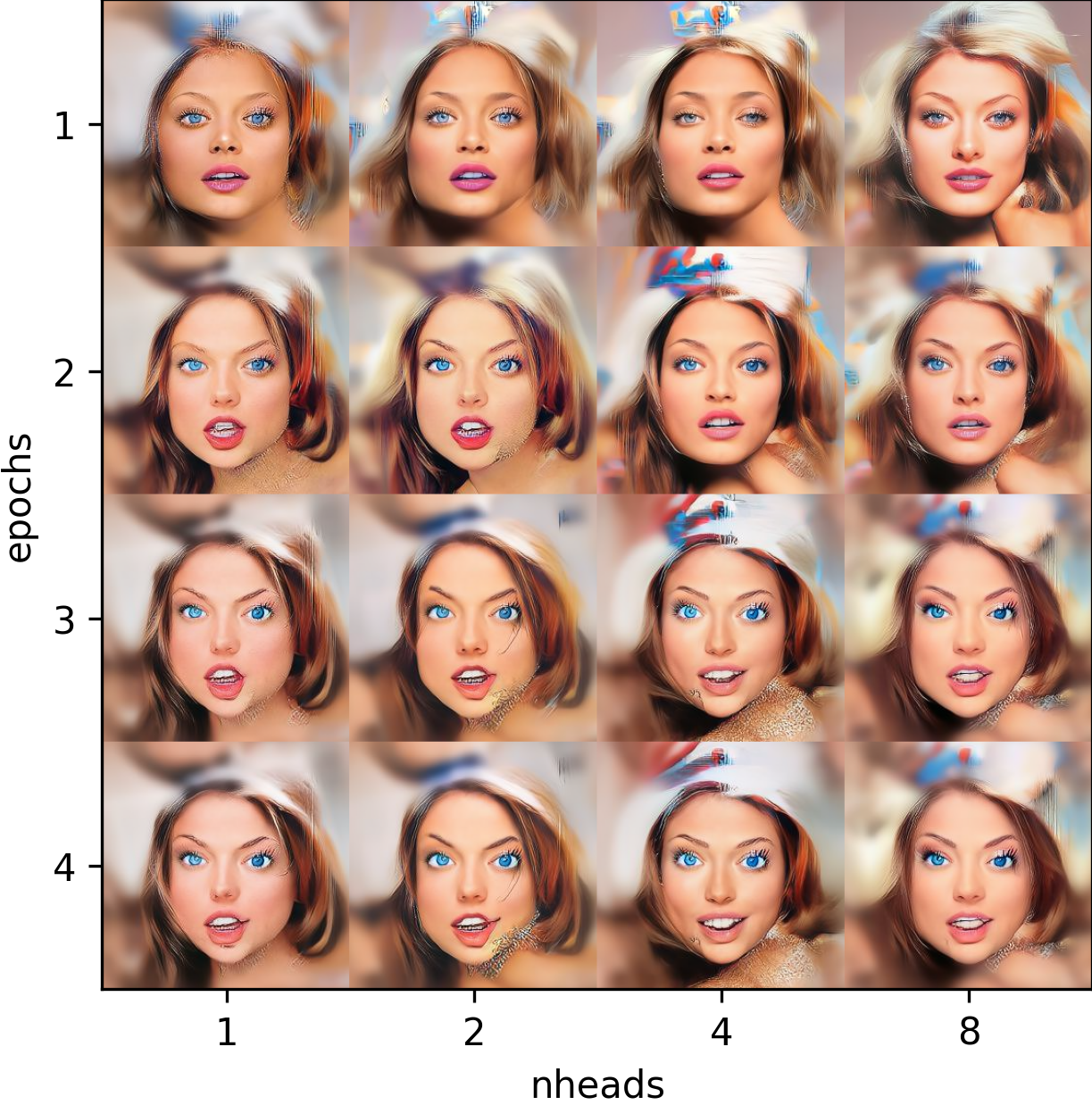

By replacing the convolutional layers in the original Asyrp architecture with transformer-based blocks, we obtain the following qualitative results:

*The effect of the number of transformer heads on the "pixar" attribute for the pixel-channel transformer architecture.*
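The following is only a generic NumPy sketch of multi-head self-attention applied to an h-space feature map; it is not the architecture used in this repository. We assume that a "pixel" variant treats each spatial position as a token (features = channels) and a "channel" variant treats each channel as a token (features = flattened spatial positions); all names, shapes, and weights are hypothetical. The sketch also shows why the number of heads is constrained: the token dimension must be divisible by it.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(tokens, w_q, w_k, w_v, w_o, num_heads):
    """Scaled dot-product self-attention over a (num_tokens, d_model) array."""
    n, d = tokens.shape
    if d % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d // num_heads

    def split_heads(x):
        # (n, d) -> (num_heads, n, d_head)
        return x.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(tokens @ w_q)
    k = split_heads(tokens @ w_k)
    v = split_heads(tokens @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, n, n)
    heads = softmax(scores) @ v                           # (num_heads, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d)       # concatenate heads
    return concat @ w_o


def pixel_tokens(h):
    """(C, H, W) -> (H*W, C): one token per spatial position."""
    c, height, width = h.shape
    return h.reshape(c, height * width).T


def channel_tokens(h):
    """(C, H, W) -> (C, H*W): one token per channel."""
    c, height, width = h.shape
    return h.reshape(c, height * width)


rng = np.random.default_rng(0)
h = rng.standard_normal((16, 8, 8))   # toy feature map: C=16, 8x8 spatial
tokens = pixel_tokens(h)              # (64, 16)
d = tokens.shape[1]
w_q, w_k, w_v, w_o = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4))
attended = multi_head_self_attention(tokens, w_q, w_k, w_v, w_o, num_heads=4)
h_out = (tokens + attended).T.reshape(h.shape)  # residual, back to (C, H, W)
print(attended.shape, h_out.shape)
```

For the channel variant, the same function can be applied to `channel_tokens(h)` with projection matrices of size (H*W, H*W).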

In terms of the Fréchet Inception Distance (FID, lower is better), our best model achieves a lower score than the original model for all five attributes:

| Model | Smiling (IN) | Sad (IN) | Tanned (IN) | Pixar (UN) | Neanderthal (UN) |
|---|---|---|---|---|---|
| Original | 89.2 | 92.9 | 100.5 | 125.8 | 125.8 |
| Ours | 84.3 | 88.8 | 82.2 | 83.7 | 87.0 |

*Comparison of Fréchet Inception Distance (FID) for in-domain (IN) and unseen-domain (UN) attributes between the original model and our best model.*
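FID fits a Gaussian to Inception-v3 features of real images and another to features of generated images, then measures the Fréchet distance between the two; lower values mean the generated feature statistics are closer to the real data. As a sketch of the formula only, assuming the Inception features have already been extracted (this is not the evaluation code behind the table, and both function names are hypothetical), the distance can be computed with NumPy alone. Rather than calling `scipy.linalg.sqrtm`, it obtains the trace of the matrix square root from the eigenvalues of a symmetric matrix:

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Squared Fréchet distance between Gaussians N(mu1, sigma1) and N(mu2, sigma2):

    ||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 (sigma1 sigma2)^(1/2))
    """
    mu1, mu2 = np.asarray(mu1, dtype=float), np.asarray(mu2, dtype=float)
    sigma1 = np.atleast_2d(np.asarray(sigma1, dtype=float))
    sigma2 = np.atleast_2d(np.asarray(sigma2, dtype=float))
    # Symmetric square root of sigma1 via its eigendecomposition.
    evals, evecs = np.linalg.eigh(sigma1)
    sqrt_sigma1 = (evecs * np.sqrt(np.clip(evals, 0.0, None))) @ evecs.T
    # sigma1 @ sigma2 has the same eigenvalues as the symmetric PSD matrix
    # sqrt_sigma1 @ sigma2 @ sqrt_sigma1, so the trace of its square root is
    # the sum of the square roots of those eigenvalues.
    m = sqrt_sigma1 @ sigma2 @ sqrt_sigma1
    m = (m + m.T) / 2.0  # remove round-off asymmetry
    tr_covmean = np.sqrt(np.clip(np.linalg.eigvalsh(m), 0.0, None)).sum()
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(feats_a, feats_b):
    """FID between two (N, D) arrays of Inception features (extraction not shown)."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    sigma_a = np.atleast_2d(np.cov(feats_a, rowvar=False))
    sigma_b = np.atleast_2d(np.cov(feats_b, rowvar=False))
    return frechet_distance(mu_a, sigma_a, mu_b, sigma_b)


# 1-D check: the distance reduces to (mu1 - mu2)^2 + (s1 - s2)^2 for std devs s1, s2.
print(frechet_distance([0.0], [[4.0]], [1.0], [[9.0]]))  # (0-1)^2 + (2-3)^2, about 2.0
```

FID estimates also depend on the number of images and on the feature extractor, so values are only comparable under identical evaluation settings.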

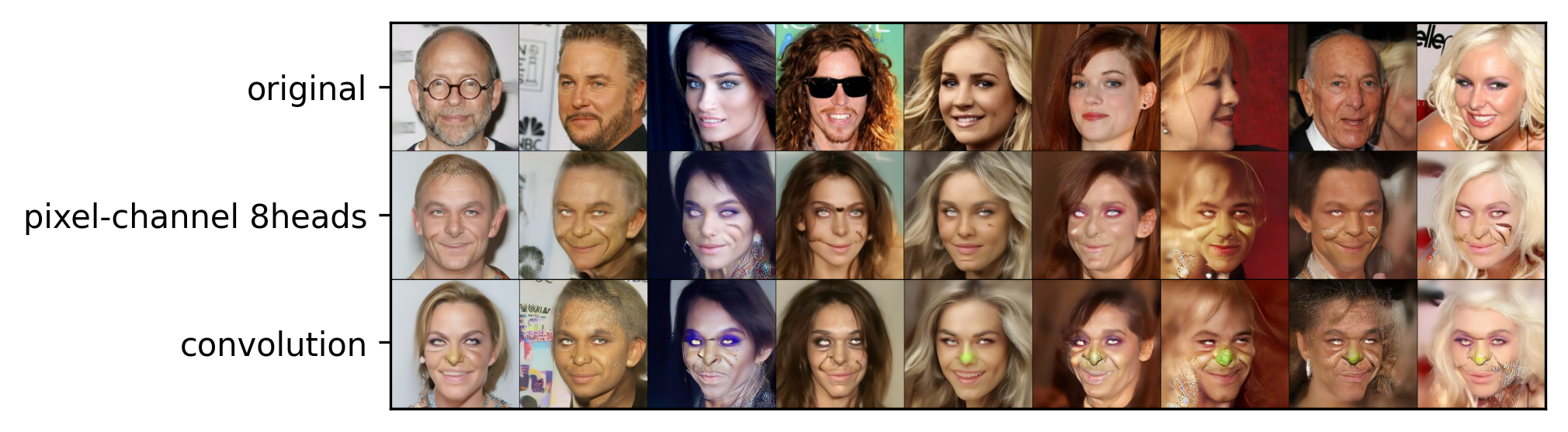

The improved quality can be observed, for example, when qualitatively comparing editing results for an entirely new "goblin" direction:

*Comparison of convolution-based and transformer-based architecture output for a new "goblin" attribute without hyperparameter tuning.*

Additionally, we show that the cost of training a new model for each new editing direction can be reduced by finetuning a pre-trained model, which converges much faster than training from scratch:

*Comparison of results achieved by training from scratch and by finetuning a pre-trained model, after training for 2000 steps.*

Besides reproducing the results locally as described above, you can use the set of .job files in src/jobs/, which we used to run the code on the Lisa Compute Cluster. If used elsewhere, these files must be adjusted to the particular server requirements and compute access. To replicate the results in full, execute the following steps in the specified order:

To retrieve the repository and move to the corresponding folder, run the following:

```bash
git clone git@github.com:zilaeric/DL2-2023-group-15.git
cd DL2-2023-group-15/
```

To install the requirements, run the following:

```bash
sbatch src/jobs/install_env.job
```

To download datasets (to src/data/) and pretrained models (to src/lib/asyrp/pretrained/), run the following:

```bash
sbatch src/jobs/download_data.job
```

Subsequently, the other .job files located in src/jobs/ can be used to run the scripts found in the src/lib/asyrp/scripts/ folder.