OpenAI Gym environment based on PyBullet for multi-agent reinforcement learning with quadrotors

-

The default

DroneModel.CF2Xdynamics are based on Bitcraze's Crazyflie 2.x nano-quadrotor -

Everything after a

$is entered on a terminal, everything after>>>is passed to a Python interpreter -

Suggestions and corrections are very welcome in the form of issues and pull requests, respectively

gym-pybullet-drones |

AirSim | Flightmare | |

|---|---|---|---|

| Physics | PyBullet | FastPhysicsEngine/PhysX | Ad hoc/Gazebo |

| Rendering | PyBullet | Unreal Engine 4 | Unity |

| Language | Python | C++/C# | C++/Python |

| RGB/Depth/Segm. views | Yes | Yes | Yes |

| Multi-agent control | Yes | Yes | Yes |

| ROS interface | No | Yes | Yes |

| Hardware-In-The-Loop | No | Yes | No |

| Fully steppable physics | Yes | No | Yes |

| Aerodynamic effects | Drag, downwash, ground | Drag | Drag |

OpenAI Gym interface |

Yes | No | Yes |

RLlib MultiAgentEnv interface |

Yes | No | No |

Simulation speed-up with respect to the wall-clock when using

- 240Hz (in simulation clock) PyBullet physics for EACH drone

- AND 48Hz (in simulation clock) PID control of EACH drone

- AND nearby obstacles AND a mildly complex background (see .GIFs)

- AND 24FPS (in sim. clock), 64x48 pixel capture of 6 channels (RGBA, depth, segm.) on EACH drone

| Lenovo P52 (i7-8850H/Quadro P2000) | 2020 MacBook Pro (i7-1068NG7) | |

|---|---|---|

| Rendering | OpenGL *** | CPU-based TinyRenderer |

| Single drone, no vision | 15.5x | 16.8x |

| Single drone with vision | 10.8x | 1.3x |

| Multi-drone (10), no vision | 2.1x | 2.3x |

| Multi-drone (5) with vision | 2.5x | 0.2x |

*** on Ubuntu only, uncomment the line after

self.CLIENT = p.connect(p.DIRECT)inBaseAviary.py

While it is easy to—consciously or not—cherry pick statistics, ~5kHz PyBullet physics (CPU-only) is faster than AirSim (1kHz) and more accurate than Flightmare's 35kHz simple single quadcopter dynamics

Multi-agent 6-ch. video capture at ~750kB/s with CPU rendering (

(64*48)*(4+4+2)*24*5*0.2) is comparable to Flightmare's 240 RGB frames/s ((32*32)*3*240)—although in more complex Unity environments—and up to an order of magnitude faster on Ubuntu, with OpenGL rendering

The repo was written using Python 3.7.6 on macOS 10.15 and tested on Ubuntu 18.04.05

Major dependencies are gym, pybullet,

stable-baselines3, rllib and ffmpeg (for video recording only)

$ pip install gym

$ pip install pybullet

$ pip install stable-baselines3

$ pip install 'ray[rllib]'

$ brew install ffmpeg # on macOS

$ sudo apt install ffmpeg # on Linux

Using a conda environment,

dependencies (except ffmpeg), can be installed from file

$ cd gym-pybullet-drones/

$ conda create -n myenv --file /files/conda_req_list.txt

The repo is structured as a Gym Environment

and can be installed with pip install --editable

$ git clone https://github.com/JacopoPan/gym-pybullet-drones.git

$ cd gym-pybullet-drones/

$ pip install -e .

There are 2 basic scripts in examples/: fly.py and learn.py

fly.pyruns an independent flight using PID control implemented in classDSLPIDControl

$ conda activate myenv # If using a conda environment

$ cd gym-pybullet-drones/examples/

$ python fly.py

Tip: use the GUI's sliders and button

Use GUI RPMto override the control with interactive inputs

$ conda activate myenv # If using a conda environment

$ cd gym-pybullet-drones/examples/

$ python learn.py

Other scripts in folder examples/ are:

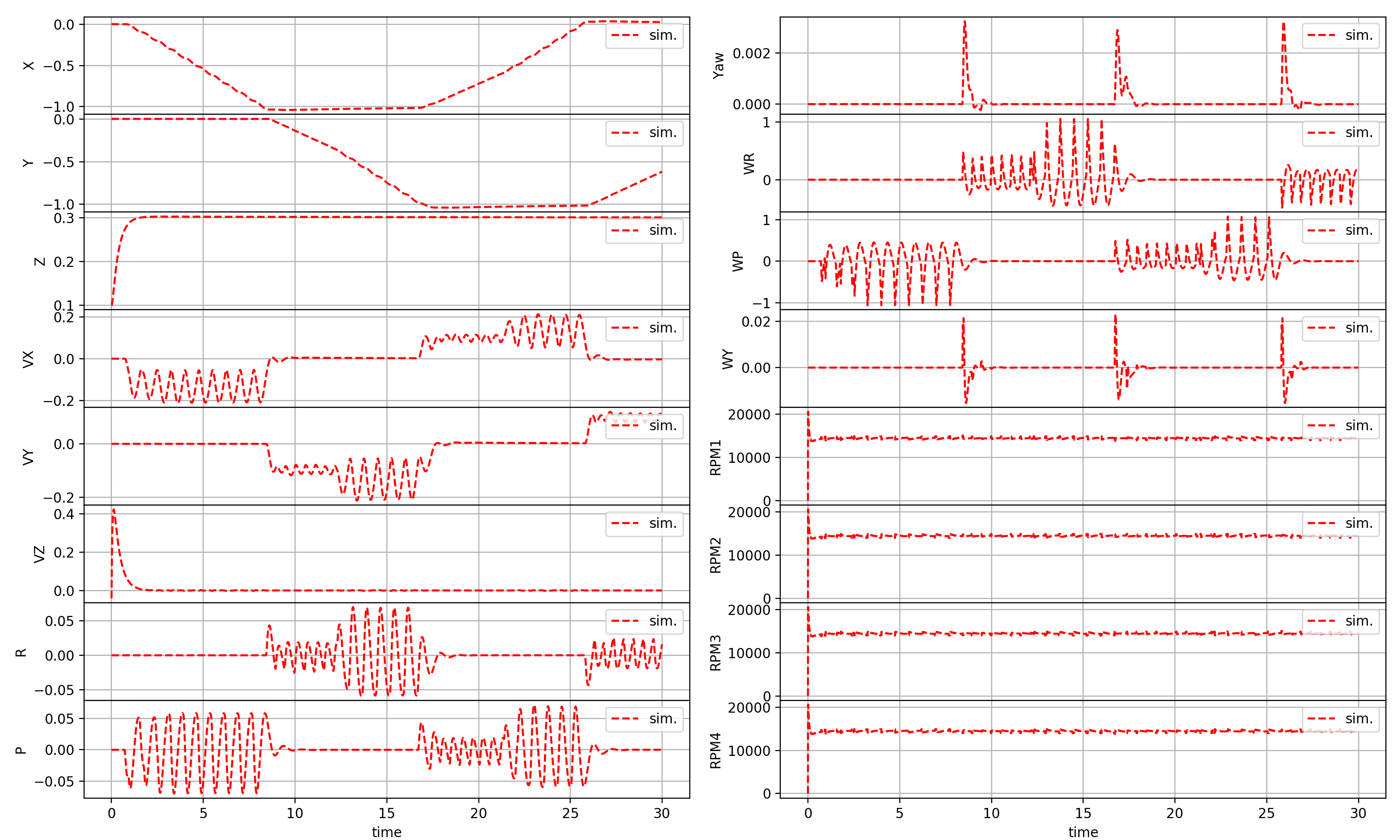

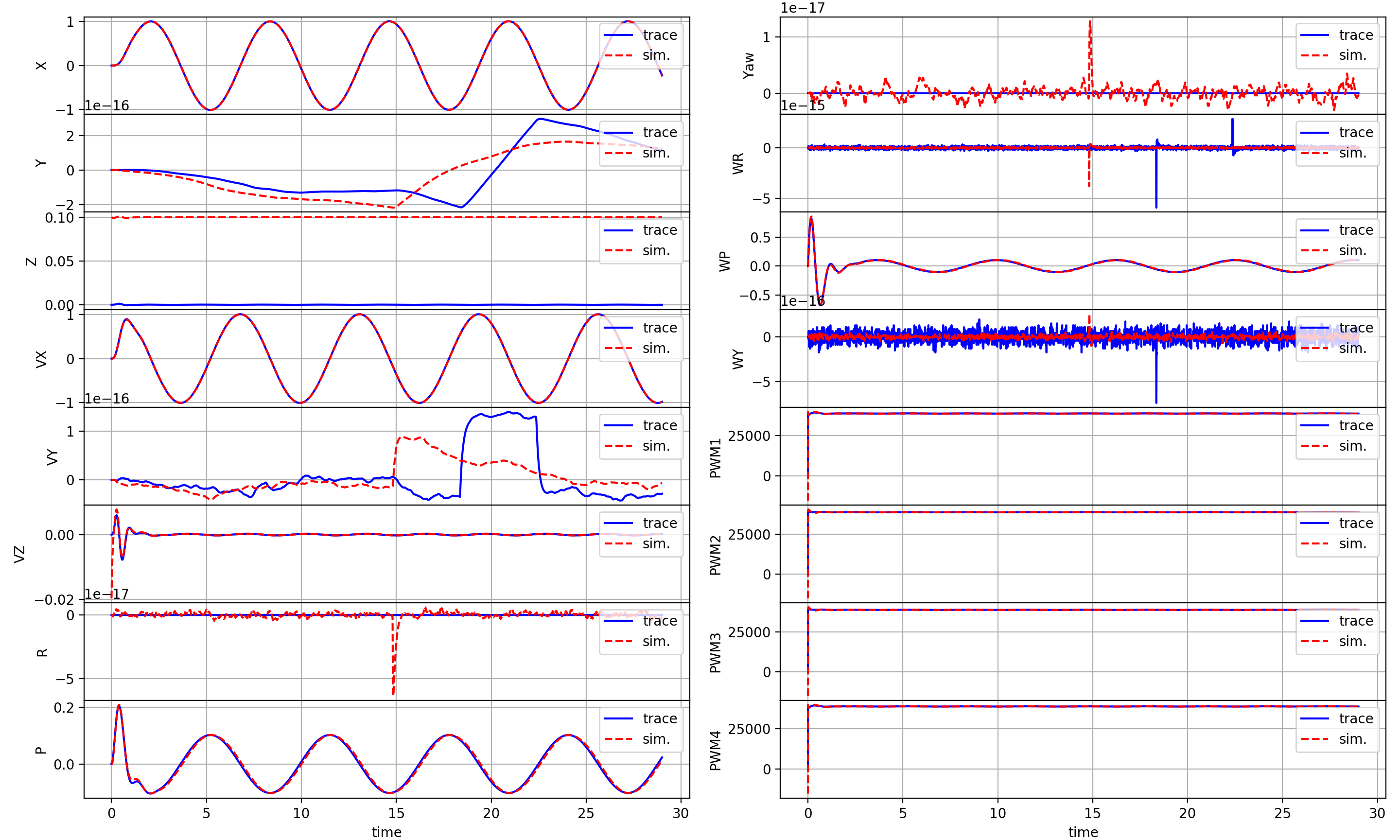

compare.pywhich replays and compare to a trace saved inexample_trace.pkl

$ conda activate myenv # If using a conda environment

$ cd gym-pybullet-drones/examples/

$ python compare.py

downwash.pyis a flight script with only 2 drones, to test the downwash model

$ conda activate myenv # If using a conda environment

$ cd gym-pybullet-drones/examples/

$ python downwash.py

physics.pyis an accessory script that can be used to understand PyBullet's force and torque APIs for different URDF links inp.WORLD_FRAMEandp.LINK_FRAME

$ conda activate myenv # If using a conda environment

$ cd gym-pybullet-drones/examples/

$ python physics.py

Tip: also check the examples in pybullet-examples

_dev.pyis a script continuously updated with the latest features ofgym-pybullet-droneslike RGB, depth and segmentation views from each drone's POV or compatibility with RLlibs'sMultiAgentEnvclass

$ conda activate myenv # If using a conda environment

$ cd gym-pybullet-drones/examples/

$ python _dev.py

A flight arena for one (ore more) quadrotor can be created as a child class of BaseAviary()

>>> env = BaseAviary(

>>> drone_model=DroneModel.CF2X, # See DroneModel Enum class for other quadcopter models

>>> num_drones=1, # Number of drones

>>> visibility_radius=np.inf, # Distance at which drones are considered neighbors, only used for multiple drones

>>> initial_xyzs=None, # Initial XYZ positions of the drones

>>> initial_rpys=None, # Initial roll, pitch, and yaw of the drones in radians

>>> physics: Physics=Physics.PYB, # Choice of (PyBullet) physics implementation

>>> freq=240, # Stepping frequency of the simulation

>>> aggregate_phy_steps=1, # Number of physics updates within each call to BaseAviary.step()

>>> gui=True, # Whether to display PyBullet's GUI, only use this for debbuging

>>> record=False, # Whether to save a .mp4 video (if gui=True) or .png frames (if gui=False) in gym-pybullet-drones/files/, see script /files/ffmpeg_png2mp4.sh for encoding

>>> obstacles=False) # Whether to add obstacles to the environment

And instantiated with gym.make()—see learn.py for an example

>>> env = gym.make('rl-takeoff-aviary-v0') # See learn.py

Then, the environment can be stepped with

>>> obs = env.reset()

>>> for _ in range(10*240):

>>> obs, reward, done, info = env.step(env.action_space.sample())

>>> env.render()

>>> if done: obs = env.reset()

>>> env.close()

The action space's definition of an environment must be implemented in each child of BaseAviary by function

>>> def _actionSpace(self):

>>> ...

In CtrlAviary and VisionCtrlAviary, it is a Dict() of Box(4,) containing the drones' commanded RPMs

The dictionary's keys are "0", "1", .., "n"—where n is the number of drones

The action space of MARLFlockAviary has the same structure but values are normalized in range [-1, 1]

The action space of RLTakeoffAviary is a single Box(4,) normalized to the [-1, 1] range

Each child of BaseAviary also needs to implement a preprocessing step translating actions into RPMs

>>> def _preprocessAction(self, action):

>>> ...

CtrlAviary, VisionCtrlAviary, MARLFlockAviary, and RLTakeoffAviary all simply clip the inputs to MAX_RPM

The observation space's definition of an environment must be implemented by every child of BaseAviary

>>> def _observationSpace(self):

>>> ...

In CtrlAviary, it is a Dict() of pairs {"state": Box(20,), "neighbors": MultiBinary(num_drones)}

The dictionary's keys are "0", "1", .., "n"—where n is the number of drones

Each Box(20,) contains the drone's

- X, Y, Z position in

WORLD_FRAME(3 value, meters unless normalized) - Quaternion orientation in

WORLD_FRAME(4 values) - Roll, pitch and yaw angles in

WORLD_FRAME(3 values, radians unless normalized) - The velocity vector in

WORLD_FRAME(3 values, m/s unless normalized) - Angular velocities in

WORLD_FRAME(3 values, rad/s unless normalized) - Motors' speeds (4 values, RPM)

Each MultiBinary(num_drones) contains the drone's own row of the multi-robot system adjacency matrix

The observation space of MARLFlockAviary has the same structure but normalized to the [-1, 1] range

The observation space of RLTakeoffAviary is a single Box(20,), normalized to the [-1, 1] range

The observation space of VisionCtrlAviary is the same asCtrlAviary but also includes keys rgb, dep, and seg (in each drone's dictionary) for the matrices containing the drone's RGB, depth, and segmentation views

To fill/customize the content of each obs, every child of BaseAviary needs to implement

>>> def _computeObs(self, action):

>>> ...

Reward, done and info are computed by the implementation of 3 functions in every child of BaseAviary

>>> def _computeReward(self, obs):

>>> ... # float or dict of floats

>>> def _computeDone(self, obs):

>>> ... # bool or dict of bools

>>> def _computeInfo(self, obs):

>>> ... # dict or dict of dicts

See RLTakeoffAviary and MARLFlockAviary for example implementations

Simple drag, ground effect, and downwash models can be included in the simulation initializing BaseAviary() with physics=Physics.PYB_GND_DRAG_DW, these are based on the system identification of Forster (2015) (Eq. 4.2), the analytical model used as a baseline for comparison by Shi et al. (2019) (Eq. 15), and DSL's experimental work

Check the implementations of _drag(), _groundEffect(), and _downwash() in BaseAviary for more detail

Folder control contains the implementations of 2 PID controllers

DSLPIDControl (for DroneModel.CF2X/P) and SimplePIDControl (for DroneModel.HB) can be used as

>>> ctrl = [DSLPIDControl(env) for i in range(num_drones)] # Initialize "num_drones" controllers

>>> ...

>>> for i in range(num_drones): # Compute control for each drone

>>> action[str(i)], _, _ = ctrl[i].computeControlFromState(. # Write the action in a dictionary

>>> control_timestep=env.TIMESTEP,

>>> state=obs[str(i)]["state"],

>>> target_pos=TARGET_POS)

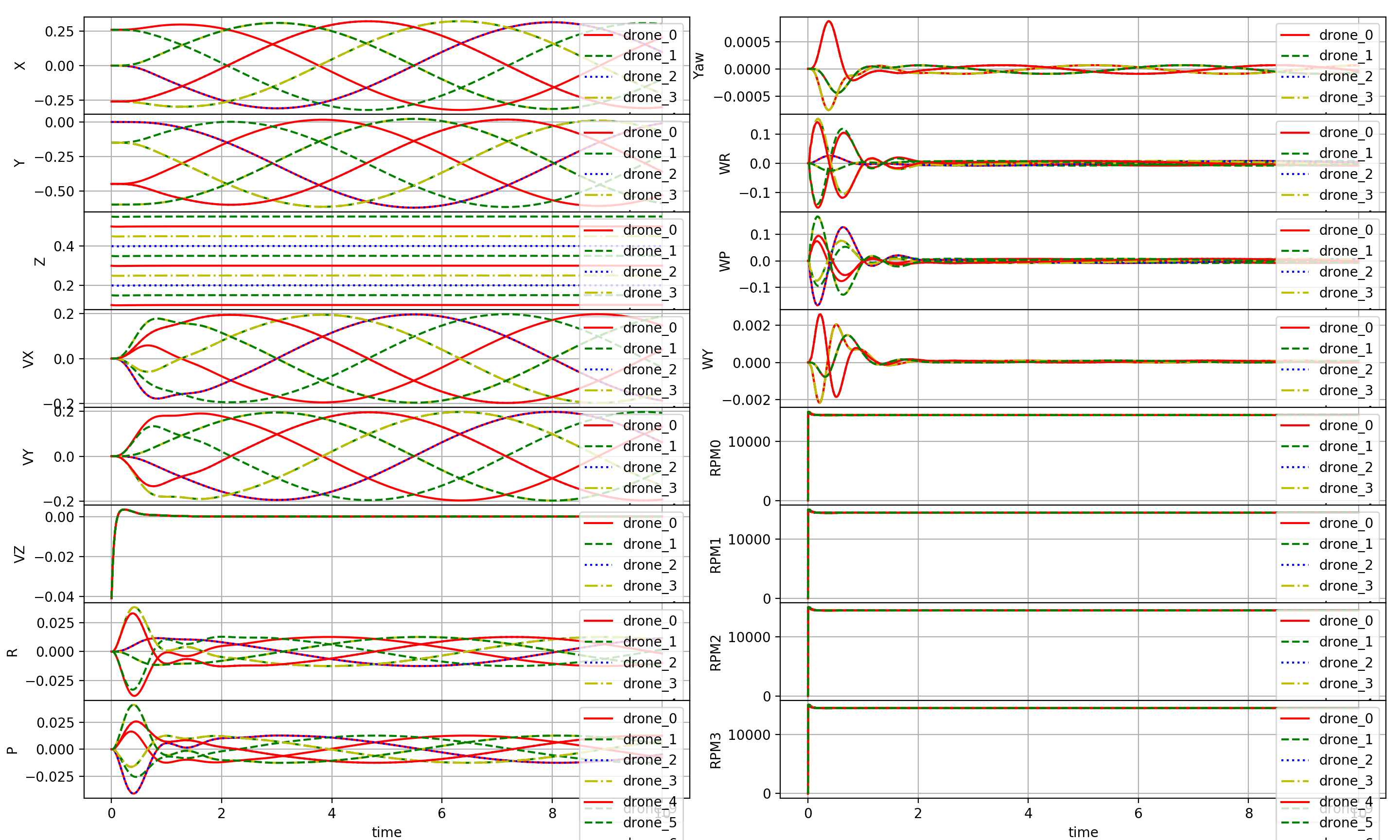

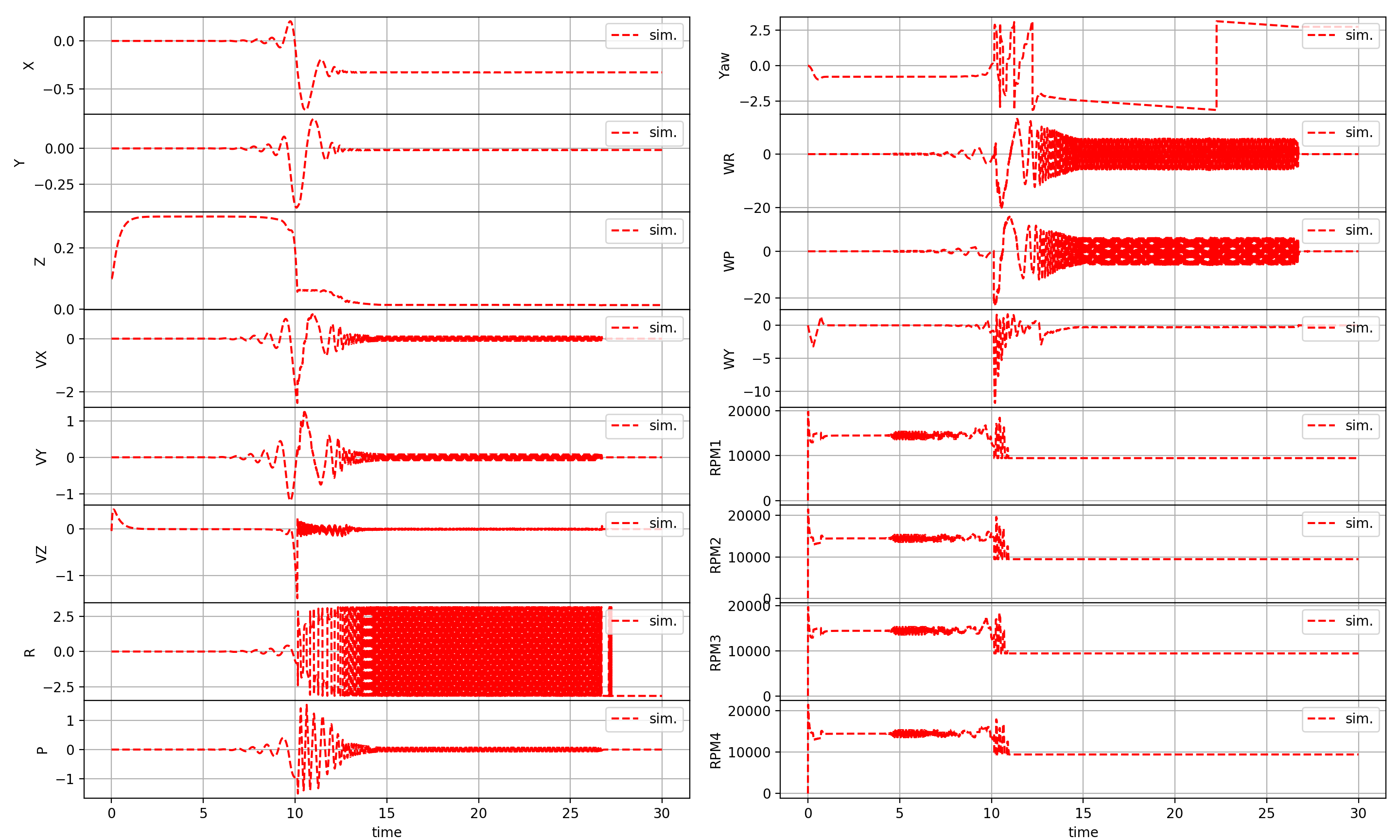

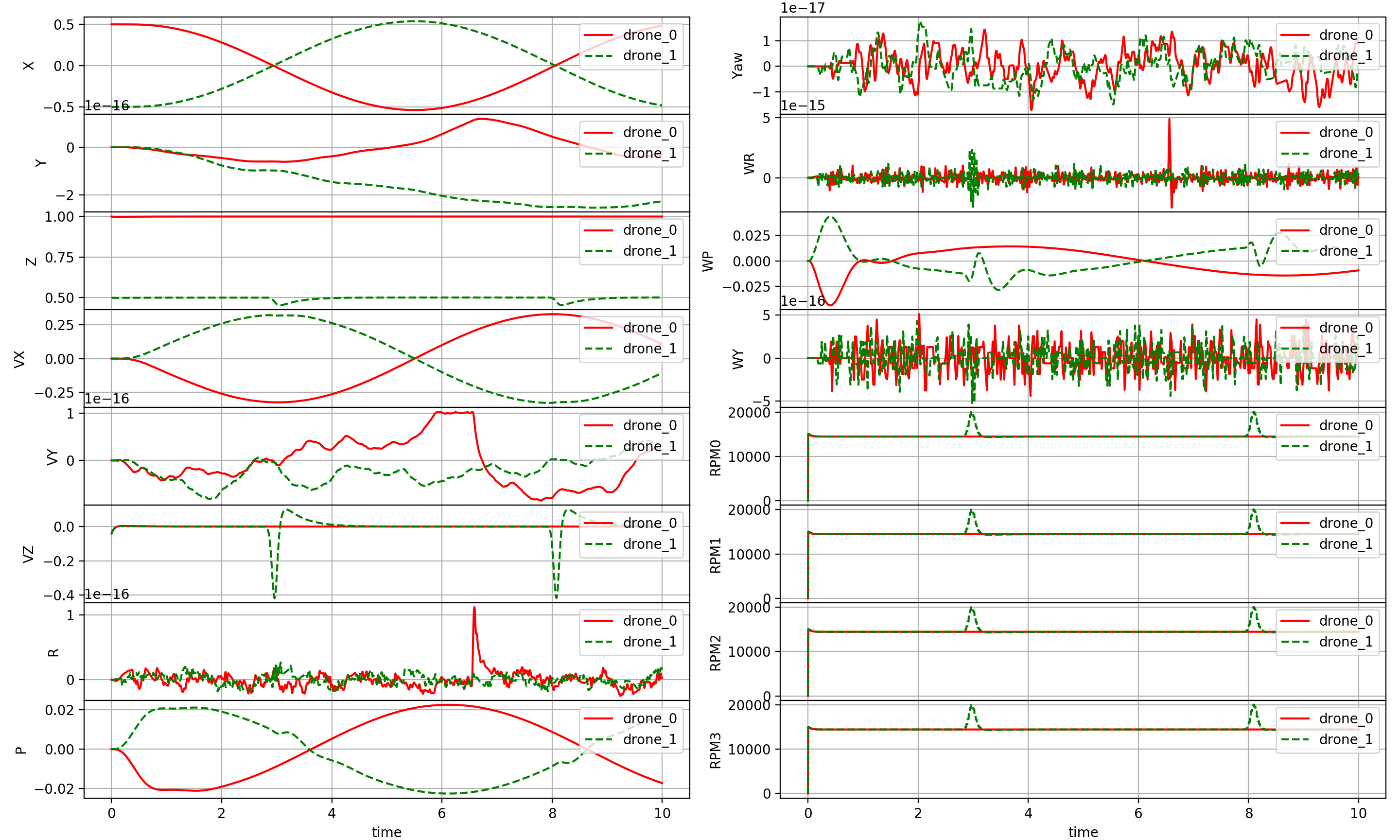

Class Logger contains helper functions to save and plot simulation data, as in this example

>>> logger = Logger(logging_freq_hz=freq, num_drones=num_drones) # Initialize the logger

>>> ...

>>> for i in range(NUM_DRONES): # Log information for each drone

>>> logger.log(drone=i,

>>> timestamp=K/env.SIM_FREQ,

>>> state= obs[str(i)]["state"],

>>> control=np.hstack([ TARGET_POS, np.zeros(9) ]))

>>> ...

>>> logger.save() # Save data to file

>>> logger.plot() # Plot data

If you wish, please refer to this work as

@MISC{gym-pybullet-drones2020,

author = {Panerati, Jacopo and Zheng, Hehui and Zhou, SiQi and Xu, James and Prorok, Amanda and Sch\"{o}llig, Angela P.},

title = {Learning to Fly: a PyBullet-based Gym environment to simulate and learn the control of multiple nano-quadcopters},

howpublished = {\url{https://github.com/JacopoPan/gym-pybullet-drones}},

year = {2020}

}

- Nathan Michael, Daniel Mellinger, Quentin Lindsey, Vijay Kumar (2010) The GRASP Multiple Micro UAV Testbed

- Benoit Landry (2014) Planning and Control for Quadrotor Flight through Cluttered Environments

- Julian Forster (2015) System Identification of the Crazyflie 2.0 Nano Quadrocopter

- Carlos Luis and Jeroome Le Ny (2016) Design of a Trajectory Tracking Controller for a Nanoquadcopter

- Shital Shah, Debadeepta Dey, Chris Lovett, and Ashish Kapoor (2017) AirSim: High-Fidelity Visual and Physical Simulation for Autonomous Vehicles

- Guanya Shi, Xichen Shi, Michael O’Connell, Rose Yu, Kamyar Azizzadenesheli, Animashree Anandkumar, Yisong Yue, and Soon-Jo Chung (2019) Neural Lander: Stable Drone Landing Control Using Learned Dynamics

- Yunlong Song, Selim Naji, Elia Kaufmann, Antonio Loquercio, and Davide Scaramuzza (2020) Flightmare: A Flexible Quadrotor Simulator

University of Toronto's Dynamic Systems Lab / Vector Institute / University of Cambridge's Prorok Lab / Mitacs