Paper | Ziyan Chen, Jiazhen Liu, Junyan Li, Changwen Cao, Changlong Jin, and Hakil Kim

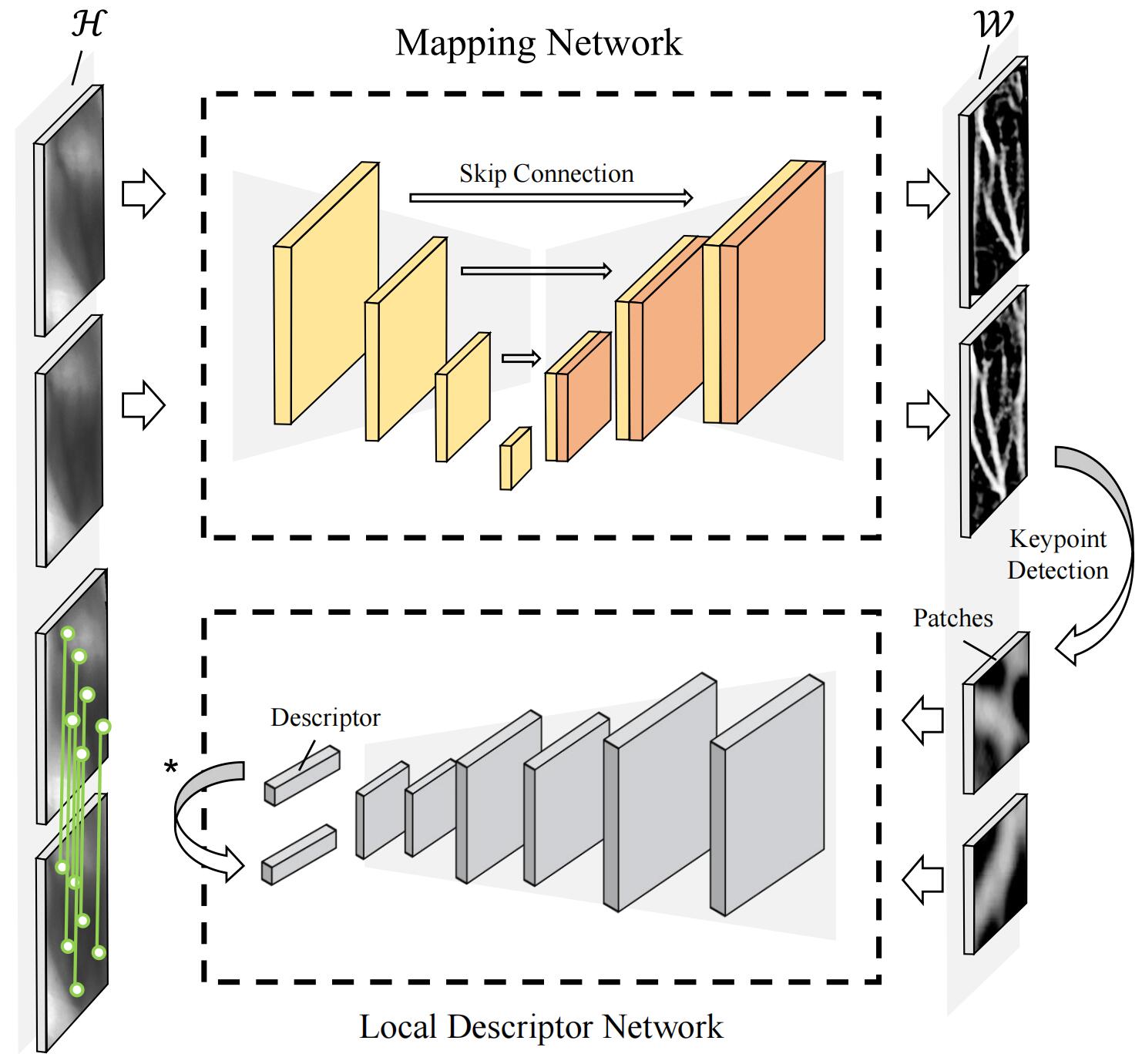

FV-UPatches is a two-stage framework for generic finger vein recognition in unseen domains. We provide a pre-trained U-Net model that can be easily transferred to other datasets without retraining or fine-tuning.

FV-UPatches is based on an open-set recognition setting. Its pipeline is as follows:

- Preprocess
  - Generate meta-info of input pairs - `[[I_i1, I_j1], [I_im, I_jm], ...]`
  - Prepare segmentation maps from raw inputs based on pretrained U-Net - `I_seg = U-Net(I)`
- Detect keypoints from segmentation maps - `kps = Detector(I_seg)`
- Extract local descriptors from segmentation maps on keypoints - `desp = Descriptor(kps, I_seg)`
- Compute distance of the descriptor-set pair to measure their similarity - `result = Matcher(desp_i, desp_j)`
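The last step of the pipeline, `result = Matcher(desp_i, desp_j)`, can be illustrated with a minimal sketch. This README does not specify how the matcher is implemented, so the code below is only an assumption: it uses nearest-neighbour matching with Lowe's ratio test as a stand-in, and the names `l2`, `match_distance`, and `ratio` are hypothetical and not part of this repo.

```python
import math


def l2(a, b):
    """Euclidean distance between two equal-length descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_distance(desp_i, desp_j, ratio=0.8):
    """Hypothetical matcher (an assumption, not the repo's implementation).

    For each descriptor in desp_i, find its nearest neighbour in desp_j and
    keep the match only if it passes Lowe's ratio test. Returns a tuple
    (number_of_kept_matches, mean_distance_of_kept_matches); the mean is
    math.inf when no match survives.
    """
    kept = []
    for d in desp_i:
        dists = sorted(l2(d, e) for e in desp_j)
        if not dists:
            continue
        # Keep the match only if it is clearly better than the runner-up.
        if len(dists) == 1 or dists[0] < ratio * dists[1]:
            kept.append(dists[0])
    if not kept:
        return 0, math.inf
    return len(kept), sum(kept) / len(kept)
```

A smaller mean distance (with enough surviving matches) would suggest that the two descriptor sets come from the same finger; how the two numbers are combined into a final score is left open here.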

✨ Please help star this repo if it is helpful for your work. Thanks! XD.

- June 12, 2023 - New functions (cross-dataset evaluation) added. To use the new features, please download new pairs_meta data.

- Dec 13, 2022 - 🚀 This repo was created.

- Release the preprocessing code (Matlab).

- Release the soft label data (since the finger-vein data require licenses to access, we may provide the Matlab processing code as an alternative).

# 1. Create virtual env

conda create -n fv_upatches python=3.7

conda activate fv_upatches

# 2. Follow PyTorch tutorial to install torch, torchvision (and cudatoolkit) according to your env.

# See https://pytorch.org/get-started/previous-versions/

# e.g. for cu10.2

conda install pytorch==1.5.0 torchvision==0.6.0 cudatoolkit=10.2 -c pytorch

# 3. Install additional dependencies

pip install -r requirements.txt

| U-Net | SOSNet | TFeat |
|---|---|---|
| unet-thu.pth | 32×32-liberty.pth | tfeat-liberty.params |

All weight files should be placed in the `weights/` directory.

You can use the provided models from the above links, or train your custom models.

The weight files listed above can also be downloaded from Baidu Cloud.

Restricted by licenses of the public finger-vein datasets, we provide only 10 samples of three datasets to show how the pipeline works. Download from Google Drive | Baidu Cloud.

The model (weights) and data file tree can look like this:

├── ...
├── models
│   ├── sosnet
│   ├── unet
│   └── weights
└── data
    ├── pairs_meta
    ├── config.yaml
    ├── ...
    ├── MMCBNU
    ├── FVUSM
    └── SDUMLA
        ├── roi
        ├── seg       # pseudo segmentation labels based on traditional methods
        ├── seg-thu   # segmentation from U-Net trained in THU-FV dataset
        └── thi       # skeleton(thinning images) as the keypoint priors

You have to provide the segmented data. The demo file tree shows segmentation data from U-Net as seg-thu and from traditional methods as enh.
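Before running an evaluation, it may help to check that a dataset folder follows the layout shown in the demo tree. The sketch below is not part of this repo: the helper name `missing_subdirs` and the choice of `roi`, `seg-thu`, and `thi` as required sub-folders are assumptions based on the tree above, so adjust them to your own layout.

```python
from pathlib import Path

# Sub-folder names taken from the demo tree; this selection is an assumption.
EXPECTED_SUBDIRS = ("roi", "seg-thu", "thi")


def missing_subdirs(dataset_dir, expected=EXPECTED_SUBDIRS):
    """Return the expected sub-folders that are absent or empty under dataset_dir."""
    root = Path(dataset_dir)
    missing = []
    for name in expected:
        sub = root / name
        # A folder that exists but holds no files is reported as missing too.
        if not sub.is_dir() or not any(sub.iterdir()):
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Dataset folder names as they appear in the demo tree.
    for dataset in ("MMCBNU", "FVUSM", "SDUMLA"):
        print(dataset, missing_subdirs(Path("data") / dataset))
```

An empty list for a dataset means all expected sub-folders exist and contain at least one entry.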

You can evaluate FVR (finger-vein recognition) performance under an open-set setting on any dataset.
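The evaluation reads a list of image pairs (the `-p` argument in the command that follows), and the example mentions the FVC protocol. As a rough illustration, the sketch below enumerates pairs in the usual FVC style: every unordered pair of samples from the same finger is a genuine pair, and the first sample of each finger is compared with the first sample of every other finger as an impostor pair. The function name `fvc_pairs` and the tuple format are hypothetical; the pair-file format used by this repo is not documented here and may differ.

```python
from itertools import combinations


def fvc_pairs(samples_by_finger):
    """Enumerate (sample_a, sample_b, is_genuine) tuples in the FVC style.

    samples_by_finger maps a finger ID to its ordered list of sample paths.
    This is an illustrative sketch, not the repo's pair generator.
    """
    fingers = sorted(samples_by_finger)

    # Genuine pairs: all unordered pairs of samples from the same finger.
    genuine = [
        (a, b, True)
        for finger in fingers
        for a, b in combinations(samples_by_finger[finger], 2)
    ]

    # Impostor pairs: first sample of each finger vs. first sample of the others.
    firsts = [samples_by_finger[f][0] for f in fingers if samples_by_finger[f]]
    impostor = [(a, b, False) for a, b in combinations(firsts, 2)]

    return genuine + impostor
```

With n fingers and m samples per finger, this yields n·m·(m−1)/2 genuine pairs and n·(n−1)/2 impostor pairs.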

# exec in the proj root directory

# e.g. eval in SDUMLA based on FVC protocol and <Ridge Det+SOSNet Desp>

python eval.py -d ./data/SDUMLA -p ./data/pair/SDUMLA-FVC.txt -s ./results -desp SOSNet -det RidgeDet

# see descriptions of the arguments

python eval.py -h

To evaluate the pretrained U-Net, prepare the enhanced finger vein data as the input and execute the script:

python models/unet/eval.py \
    -i data/MMCBNU/roi \
    -s ./outputs/unet/MMCBNU

We also provide implementations of the baselines.

| Detector | Descriptor |
|---|---|
| RidgeDet (Skeleton) | SOSNet, TFeat |
| - | SURF, SIFT, FAST |

An example of running a baseline:

# e.g. eval in SDUMLA based on FVC protocol(default args) and <SIFT det+desp>

python eval.py -desp SIFT -det None

You can train a U-Net model on your custom data (including the grayscale ROI finger images and labels). The default input size is [128, 256].

cd models/unet

# cd target dir

python split_data.py

# see help of the arguments

# python split_data.py -h

python train.py

- Dataset - MMCBNU | HKPU | UTFVP | SDUMLA | FVUSM | THU-FV

- SOSNet - Project Page | Paper | Source Code

- U-Net - Paper | PyTorch Implementation

If you have any questions about this work, please feel free to reach out to me at chen.ziyan@outlook.com.

For business or other formal purposes, please contact Prof. King at cljin@sdu.edu.cn.