Project Page | Paper | OpenReview | Poster

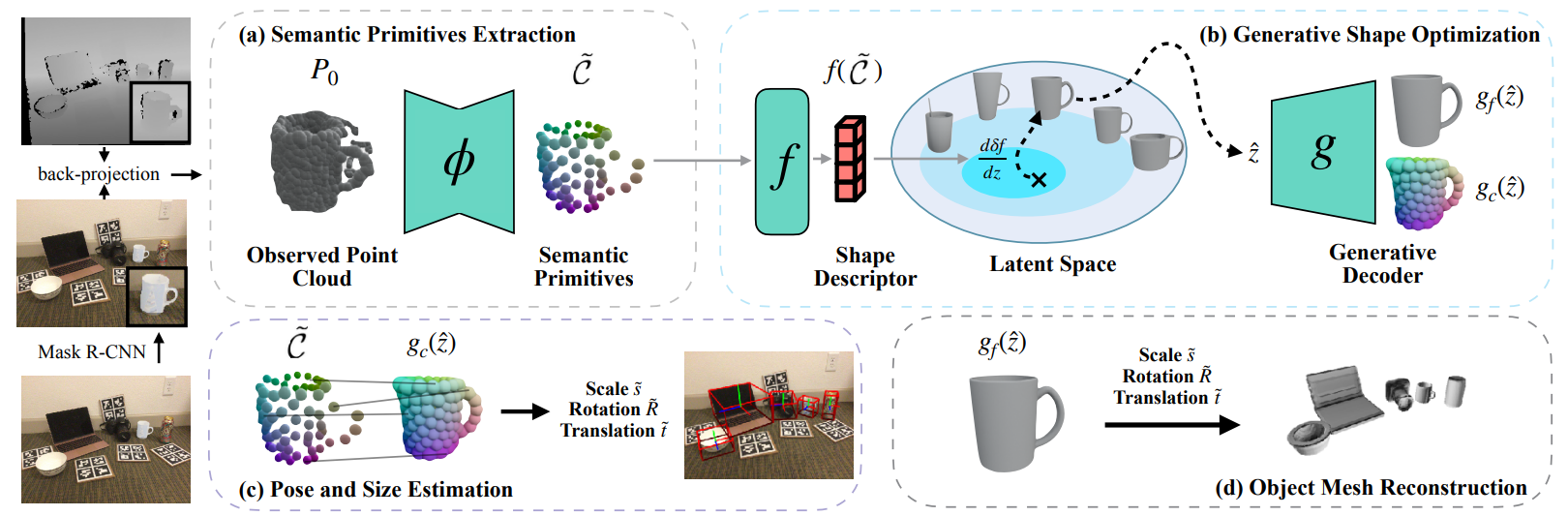

Generative Category-Level Shape and Pose Estimation with Semantic Primitives

Guanglin Li, Yifeng Li, Zhichao Ye, Qihang Zhang, Tao Kong, Zhaopeng Cui, Guofeng Zhang.

CoRL 2022

Follow the instructions in this repo, then place the pretrained DualSDF checkpoints in the directory $ROOT/eval_datas/ as follows:

    eval_datas/
        DualSDF_ckpts/

Download the ground truth annotation of test data, Mask R-CNN results, object meshes and real test data. Unzip them and organize these files in $ROOT/eval_datas/ as follows:

    eval_datas/
        DualSDF_ckpts/
        gts/
        obj_models/
        deformnet_eval/
        real/
            test/
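Before running the later steps, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the repository: `EVAL_LAYOUT` and `missing_dirs` are hypothetical names, and it assumes that `test/` is nested inside `real/`.

```python
from pathlib import Path

# Expected sub-directories, relative to eval_datas/.
# Assumption: test/ is nested inside real/.
EVAL_LAYOUT = [
    "DualSDF_ckpts",
    "gts",
    "obj_models",
    "deformnet_eval",
    "real/test",
]


def missing_dirs(base, expected):
    """Return the entries of `expected` that are not directories under `base`."""
    base = Path(base)
    return [rel for rel in expected if not (base / rel).is_dir()]


# Example (run from $ROOT):
#   missing = missing_dirs("eval_datas", EVAL_LAYOUT)
#   if missing:
#       print("Missing under eval_datas/:", ", ".join(missing))
```

The same helper can check any other data directory by passing a different list of relative paths.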

Prepare the primitive annotations with the following script:

    python generate_primitive.py

Download the CAMERA training data, ground truth annotation and Composed_depths, and unzip them. After the above steps, the directory should look like:

    train_datas/
        data_lists/
        NOCS_primitives/
        train/
        camera_full_depths/
            train/

The pretrained model can be downloaded from Google Drive, or you can train the model with:

    python train.py ./config/gcn3d_seg/gcn3d_seg_all_256.yaml

Training takes about 1.5 hours per epoch.

The results presented in the paper can be downloaded from Google Drive, or you can reproduce them with:

    python eval.py ./config/gcn3d_seg/gcn3d_seg_all_256.yaml --pretrained <path_to_segmentation_ckpt> # --draw (save result images)

The evaluation takes about 30 minutes. After the results are saved, you can check the metrics with:

    python SGPA_tools/evaluate.py --result_dir <path_to_result>

The curve image and metric results can be found in the result directory.
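The two evaluation commands can also be chained from Python. This is a minimal sketch under stated assumptions: `eval_command`, `metrics_command` and `run_evaluation` are hypothetical helpers, not part of the repository, and only the flags shown above (`--pretrained`, `--draw`, `--result_dir`) are used. The README does not state where `eval.py` writes its results, so the result directory must be supplied by the caller.

```python
import subprocess
import sys

CONFIG = "./config/gcn3d_seg/gcn3d_seg_all_256.yaml"


def eval_command(ckpt_path, draw=False, config=CONFIG):
    """Build the argument list for eval.py; --draw also saves result images."""
    cmd = [sys.executable, "eval.py", config, "--pretrained", ckpt_path]
    if draw:
        cmd.append("--draw")
    return cmd


def metrics_command(result_dir):
    """Build the argument list for the metric computation step."""
    return [sys.executable, "SGPA_tools/evaluate.py", "--result_dir", result_dir]


def run_evaluation(ckpt_path, result_dir, draw=False):
    """Run both steps in order; check=True stops at the first failing step."""
    subprocess.run(eval_command(ckpt_path, draw), check=True)
    subprocess.run(metrics_command(result_dir), check=True)
```

Calling `run_evaluation(<path_to_segmentation_ckpt>, <path_to_result>)` from `$ROOT` runs the evaluation and then computes the metrics, using the same interpreter for both steps.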

If you find our work useful, please consider citing:

    @inproceedings{li2022generative,
      title={Generative Category-Level Shape and Pose Estimation with Semantic Primitives},
      author={Li, Guanglin and Li, Yifeng and Ye, Zhichao and Zhang, Qihang and Kong, Tao and Cui, Zhaopeng and Zhang, Guofeng},
      booktitle={6th Annual Conference on Robot Learning},
      year={2022},
      url={https://openreview.net/forum?id=N78I92JIqOJ}
    }

In this project we use (parts of) the implementations of the following works:

- DualSDF by zekunhao1995.

- NOCS_CVPR2019 by hughw19.

- SGPA by ck-kai.

- 3dgcn by zhihao-lin.

We thank the respective authors for open sourcing their methods.