MACO: A Modality Adversarial and Contrastive Framework for Modality-missing Multi-modal Knowledge Graph Completion

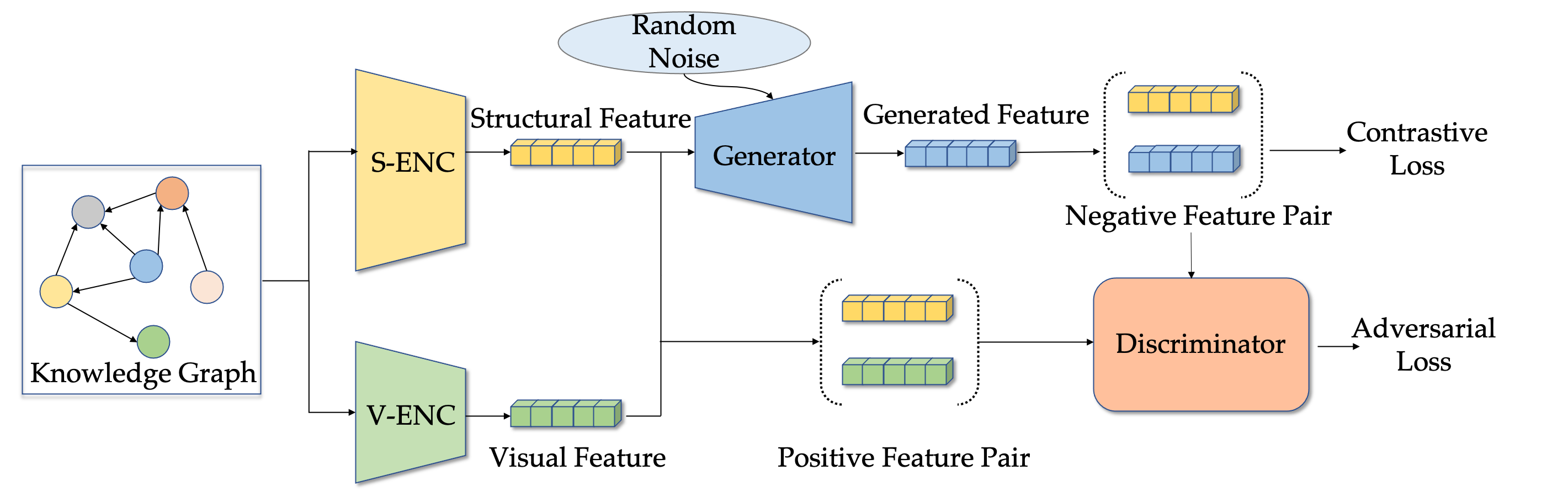

Recent years have seen significant advancements in multi-modal knowledge graph completion (MMKGC). MMKGC enhances knowledge graph completion (KGC) by integrating multi-modal entity information, thereby facilitating the discovery of unobserved triples in large-scale knowledge graphs (KGs). Nevertheless, existing methods emphasize the design of elegant KGC models to facilitate modality interaction, neglecting the real-life problem of missing modalities in KGs. Missing modality information impedes modal interaction and consequently undermines the model's performance. In this paper, we propose a modality adversarial and contrastive framework (MACO) to solve the modality-missing problem in MMKGC. MACO trains a generator and a discriminator adversarially to generate missing modality features that can be incorporated into the MMKGC model. Meanwhile, we design a cross-modal contrastive loss to improve the performance of the generator. Experiments on public benchmarks, together with further analyses, demonstrate that MACO achieves state-of-the-art results and serves as a versatile framework to bolster various MMKGC models.
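To give a rough feel for what a cross-modal contrastive objective rewards, here is a minimal sketch of a generic InfoNCE-style loss in plain Python. This is an illustration only, not necessarily the loss used in MACO: the function name `info_nce`, the temperature value, and the toy vectors are all assumptions made for this example. The loss is low when each generated feature is most similar to the real feature of the same entity and high when the pairing is wrong.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    # Scale a vector to unit length (assumes the vector is non-zero).
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def info_nce(generated, real, temperature=0.5):
    """Average InfoNCE loss over a batch of paired feature vectors.

    generated[i] and real[i] belong to the same entity (positive pair);
    every real[j] with j != i acts as a negative for generated[i].
    The temperature is a hypothetical default chosen for this sketch.
    """
    gen = [normalize(g) for g in generated]
    ref = [normalize(r) for r in real]
    total = 0.0
    for i, g in enumerate(gen):
        logits = [dot(g, r) / temperature for r in ref]
        # Numerically stable log-sum-exp over all candidates.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_denominator - logits[i]
    return total / len(gen)

# Toy batch: two entities with 3-dimensional features (made-up numbers).
real = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
aligned = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0]]
swapped = [aligned[1], aligned[0]]

loss_aligned = info_nce(aligned, real)
loss_swapped = info_nce(swapped, real)
print(loss_aligned < loss_swapped)
```

In this toy batch the correctly paired features receive the smaller loss, which is the signal such an objective would pass back to a generator.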

2024-02: We released a preprint of our survey, Knowledge Graphs Meet Multi-Modal Learning: A Comprehensive Survey [Repo].

- python 3

- torch >= 1.8.0

- numpy

- dgl-cu111 == 0.9.1

- All experiments are performed with one A100-40G GPU.
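A quick way to confirm that an environment satisfies the `torch >= 1.8.0` requirement is to compare version strings numerically. The sketch below is a hypothetical helper (the names `parse_version` and `meets_minimum` are not part of this repository) that ignores local suffixes such as `+cu111`; it relies only on the standard `torch.__version__` attribute.

```python
import re

def parse_version(text):
    """Return the leading numeric components of a version string as a tuple."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no numeric version found in {text!r}")
    return tuple(int(part) for part in match.group().split("."))

def meets_minimum(installed, minimum):
    """True if `installed` is at least `minimum`, comparing numerically."""
    have, need = parse_version(installed), parse_version(minimum)
    width = max(len(have), len(need))
    # Pad with zeros so that "1.8" and "1.8.0" compare as equal.
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need

if __name__ == "__main__":
    try:
        import torch
        print("torch >= 1.8.0:", meets_minimum(torch.__version__, "1.8.0"))
    except ImportError:
        print("torch is not installed")
```

Comparing tuples of integers avoids the pitfall of plain string comparison, where `"1.10.0"` would sort before `"1.8.0"`.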

- `MACO/`: pretrain with MACO to complete the missing modality information. We have prepared the FB15K-237 dataset and the visual embeddings extracted with Vision Transformer (ViT). You should first download them from this link.

- `MMKGC/`: run multi-modal KGC to evaluate the quality of the generated visual features.

- run MACO

cd MACO/

# download the FB15K-237 visual embeddings and put them in data/

# run the training code

python main.py

- run MMKGC

cd MMKGC/

# move the visual embedding generated by MACO into visual/

mv ../MACO/EMBEDDING_NAME visual/

# run the MMKGC model

DATA=FB15K237

NUM_BATCH=1024

KERNEL=transe

MARGIN=6

LR=2e-5

NEG_NUM=32

VISUAL=random-vit

MS=0.6

POSTFIX=2.0-0.01

CUDA_VISIBLE_DEVICES=0 nohup python run_ikrl.py -dataset=$DATA \
    -batch_size=$NUM_BATCH \
    -margin=$MARGIN \
    -epoch=1000 \
    -dim=128 \
    -save=./checkpoint/ikrl/$DATA-New-$KERNEL-$NUM_BATCH-$MARGIN-$LR-$VISUAL-large-$MS \
    -img_grad=False \
    -img_dim=768 \
    -neg_num=$NEG_NUM \
    -kernel=$KERNEL \
    -visual=$VISUAL \
    -learning_rate=$LR \
    -postfix=$POSTFIX \
    -missing_rate=$MS > ./log/IKRL$MS-$DATA-$KERNEL-4score-$MARGIN-$VISUAL-$MS-$POSTFIX.txt &

This is a simple demo for running the IKRL model. The scripts to train other models (TBKGC, RSME) can be found in MMKGC/scripts/.
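The shell variables in the IKRL demo expand into a checkpoint path and a log path, and the output redirection fails if `./log` does not exist. The sketch below mirrors that expansion in Python so the resulting names can be inspected before a run is launched. The helpers `expand_paths` and `ensure_parent_dirs` are hypothetical and not part of this repository, and whether `run_ikrl.py` creates the checkpoint location itself is not stated here, so only the parent directories are created.

```python
import os

# Values copied from the shell variables in the demo. They are kept as
# strings so they appear in the paths exactly as the shell expands them.
params = {
    "DATA": "FB15K237",
    "NUM_BATCH": "1024",
    "KERNEL": "transe",
    "MARGIN": "6",
    "LR": "2e-5",
    "VISUAL": "random-vit",
    "MS": "0.6",
    "POSTFIX": "2.0-0.01",
}

def expand_paths(p):
    """Reproduce the -save argument and the log file name of the demo."""
    save_path = (
        "./checkpoint/ikrl/{DATA}-New-{KERNEL}-{NUM_BATCH}-{MARGIN}"
        "-{LR}-{VISUAL}-large-{MS}"
    ).format(**p)
    log_file = (
        "./log/IKRL{MS}-{DATA}-{KERNEL}-4score-{MARGIN}"
        "-{VISUAL}-{MS}-{POSTFIX}.txt"
    ).format(**p)
    return save_path, log_file

def ensure_parent_dirs(paths, root="."):
    """Create the parent directory of each path under `root` if missing."""
    created = []
    for path in paths:
        parent = os.path.normpath(os.path.join(root, os.path.dirname(path)))
        os.makedirs(parent, exist_ok=True)
        created.append(parent)
    return created

save_path, log_file = expand_paths(params)
print(save_path)
print(log_file)
# To create ./checkpoint/ikrl and ./log before launching the demo:
# ensure_parent_dirs([save_path, log_file])
```

Because the missing rate and the visual embedding name are embedded in both paths, runs with different `MS` or `VISUAL` settings write to separate checkpoints and logs.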

The ZJUKG team has published several other works on multi-modal knowledge graphs. If you are interested in multi-modal knowledge graphs, you may want to have a look at them:

- (ACM MM 2023) MEAformer: Multi-modal Entity Alignment Transformer for Meta Modality Hybrid

- (ISWC 2023) Rethinking Uncertainly Missing and Ambiguous Visual Modality in Multi-Modal Entity Alignment

- (SIGIR 2022) Hybrid Transformer with Multi-level Fusion for Multimodal Knowledge Graph Completion

- (IJCNN 2023) Modality-Aware Negative Sampling for Multi-modal Knowledge Graph Embedding

Please consider citing this paper if you use the code from our work. Thanks a lot :)

@article{zhang2023maco,
  title={MACO: A Modality Adversarial and Contrastive Framework for Modality-missing Multi-modal Knowledge Graph Completion},
  author={Zhang, Yichi and Chen, Zhuo and Zhang, Wen},
  journal={arXiv preprint arXiv:2308.06696},
  year={2023}
}