- 2024.7.25: Released the inference code and checkpoint weights of DiffCalib in this repo.

- 2024.7.25: Released the arXiv paper, with supplementary material.

conda create -n diffcalib python=3.10

conda activate diffcalib

pip install -r requirements.txt

pip install -e .

Download the intrinsic data from MonoCalib.

First, download stable-diffusion-2-1 and convert it to 8 input channels using modify. Put the converted checkpoint under ./checkpoint/ and pass its path via --checkpoint.
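As a rough illustration of what converting to 8 input channels can involve, the sketch below widens a 4-channel input convolution by tiling its pretrained kernels and halving them, as is done in Marigold-style fine-tuning. This is an assumption about the general technique, not a copy of the repo's modify step, which remains the authoritative route. The helper name `expand_conv_in` is hypothetical, and the stand-in layer only mimics the shape of the first UNet convolution in Stable Diffusion 2.1.

```python
import torch
from torch import nn


def expand_conv_in(conv: nn.Conv2d, factor: int = 2) -> nn.Conv2d:
    """Return a copy of `conv` that accepts `factor` times as many input channels.

    Hypothetical helper for illustration; not part of the DiffCalib code base.
    """
    new_conv = nn.Conv2d(
        conv.in_channels * factor,
        conv.out_channels,
        kernel_size=conv.kernel_size,
        stride=conv.stride,
        padding=conv.padding,
        bias=conv.bias is not None,
    )
    with torch.no_grad():
        # Tile the pretrained kernels along the input-channel axis and rescale,
        # so that feeding the same latent into every slot reproduces the
        # original layer's output.
        new_conv.weight.copy_(conv.weight.repeat(1, factor, 1, 1) / factor)
        if conv.bias is not None:
            new_conv.bias.copy_(conv.bias)
    return new_conv


# Stand-in with the shape of the first UNet layer (4 latent channels in).
conv_in = nn.Conv2d(4, 320, kernel_size=3, padding=1)
conv_in_8 = expand_conv_in(conv_in)
print(conv_in_8.weight.shape)
```

Rescaling by the tiling factor keeps the widened layer's activations at the same scale as the pretrained one, which is why this initialization is a common starting point for fine-tuning.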

Then, download the pre-trained model diffcalib-best.zip from BaiduNetDisk (extraction code: xn5p). Unzip the package, put the checkpoints under ./checkpoint/, and pass their path via --unet_ckpt_path.
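Before launching evaluation, it can help to confirm that both downloads actually landed under ./checkpoint/. The following is a minimal sketch using only the standard library. The function name `describe_checkpoint_dir` is hypothetical, and the script deliberately assumes nothing about the names of the unpacked folders; it only lists what is there.

```python
from pathlib import Path


def describe_checkpoint_dir(root="./checkpoint"):
    """Return the sorted entry names directly under `root`, or None if `root` is not a directory."""
    root = Path(root)
    if not root.is_dir():
        return None
    return sorted(p.name for p in root.iterdir())


if __name__ == "__main__":
    entries = describe_checkpoint_dir()
    if entries is None:
        print("./checkpoint/ not found: create it and place both checkpoints there")
    elif not entries:
        print("./checkpoint/ is empty")
    else:
        print("Found under ./checkpoint/:", ", ".join(entries))
```

Run it from the repository root so that the relative path resolves to the same ./checkpoint/ directory the evaluation script reads.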

Finally, run the following script to evaluate our model on the benchmark.

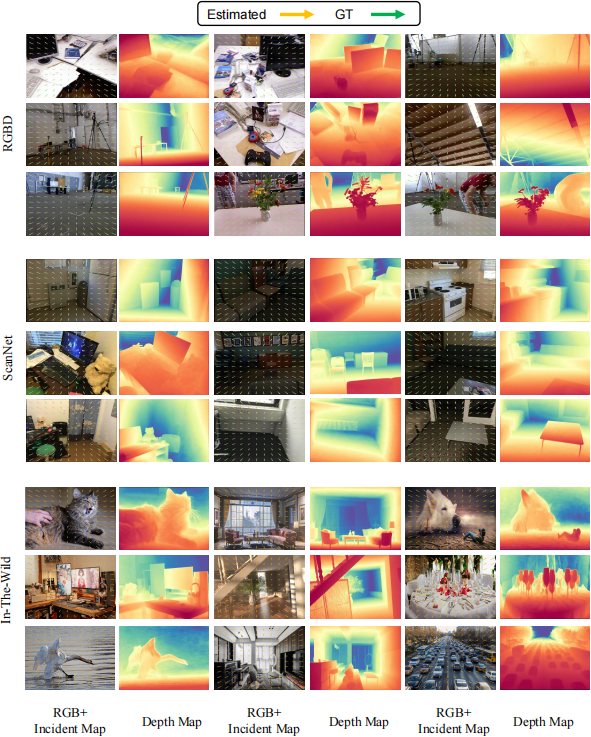

sh scripts/run_incidence_mutidata.sh

For depth and incident map visualization, download the shift model from res101 (extraction code: g3yi) and diffcalib-pcd.zip from BaiduNetDisk (extraction code: 20z6), then install the torchsparse package as follows:

sudo apt-get install libsparsehash-dev

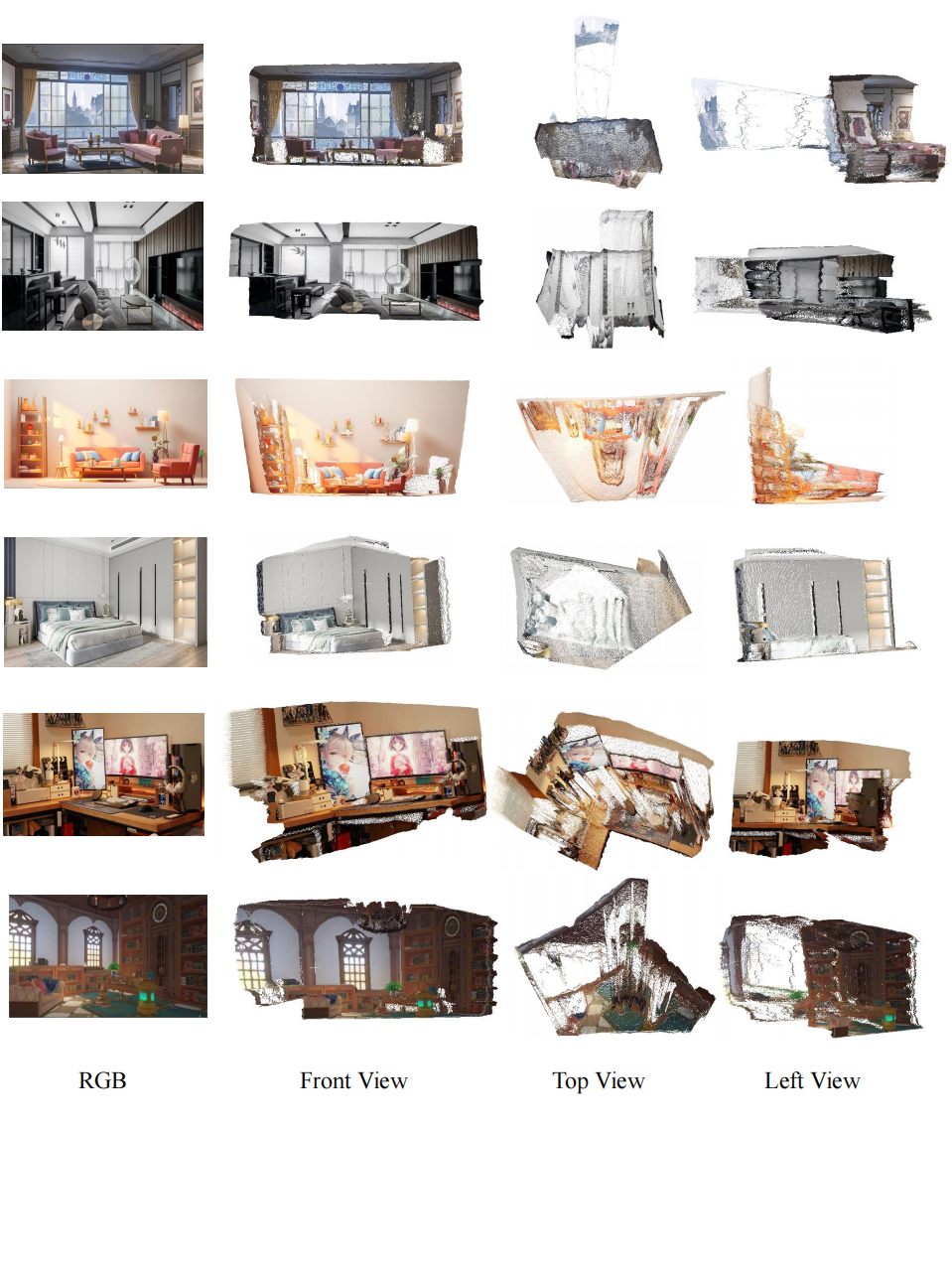

pip install --upgrade git+https://github.com/mit-han-lab/torchsparse.git@v1.2.0

Then we can reconstruct a 3D shape from a single image.

bash scripts/run_incidence_depth_pcd.sh

- Marigold: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation. arXiv, GitHub.

For non-commercial use, this code is released under the LICENSE.

@article{he2024diffcalib,

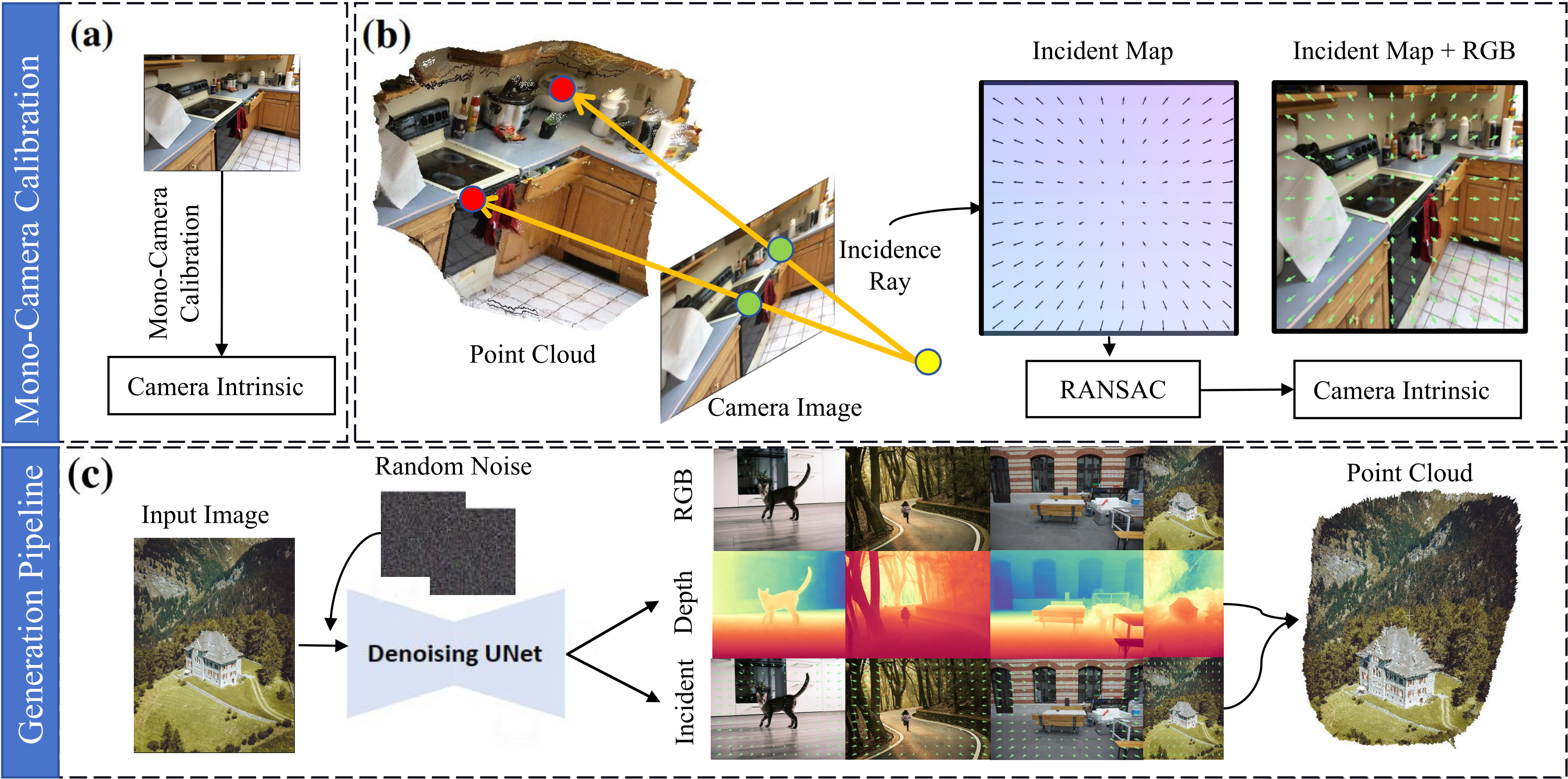

title = {DiffCalib: Reformulating Monocular Camera Calibration as Diffusion-Based Dense Incident Map Generation},

author = {Xiankang He and Guangkai Xu and Bo Zhang and Hao Chen and Ying Cui and Dongyan Guo},

year = {2024},

journal = {arXiv preprint arXiv: 2405.15619}

}