This is a TensorFlow-based rotation detection benchmark, also called UranusDet.

UranusDet is written and maintained by Xue Yang of Shanghai Jiao Tong University, supervised by Prof. Junchi Yan.

Papers and code related to remote sensing/aerial image detection: DOTA-DOAI.

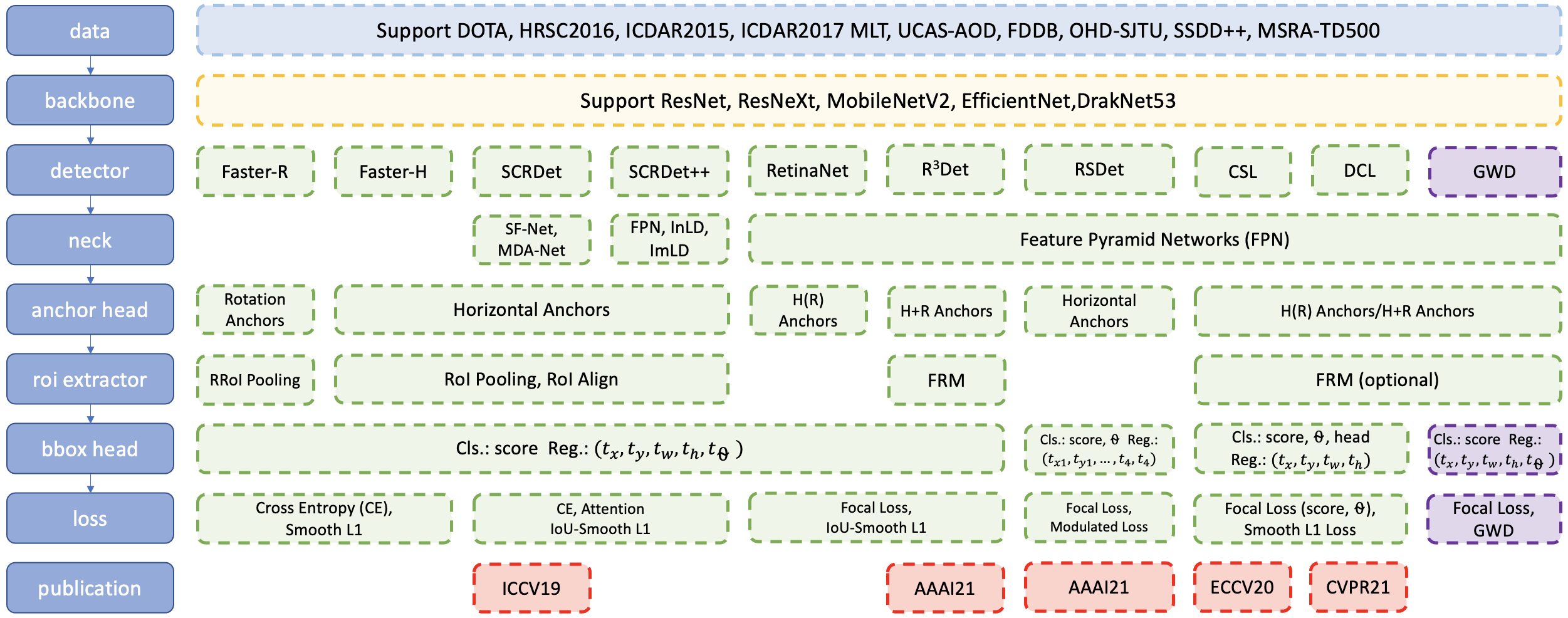

Techniques:

- Dataset: DOTA, HRSC2016, ICDAR2015, ICDAR2017 MLT, MSRA-TD500, UCAS-AOD, FDDB, OHD-SJTU, SSDD++

- Backbone: ResNet, MobileNetV2, EfficientNet, DarkNet53

- Neck: FPN, BiFPN

- Detectors:

  - R2CNN (Faster-RCNN-H): TF code

  - RRPN (Faster-RCNN-R): TF code

  - SCRDet (ICCV19): R2CNN++, IoU-Smooth L1 Loss

  - RetinaNet-H, RetinaNet-R: TF code

  - RefineRetinaNet (CascadeRetinaNet): TF code

  - FCOS: TF code

  - RSDet (AAAI21): TF code

  - R3Det (AAAI21): TF code, PyTorch code

  - Circular Smooth Label (CSL, ECCV20): TF code

  - Densely Coded Label (DCL, CVPR21): TF code

  - GWD: coming soon!

  - Mixed method: R3Det-DCL, R3Det-GWD


- Loss: CE, Focal Loss, Smooth L1 Loss, IoU-Smooth L1 Loss, Modulated Loss

- Others: SWA, exportPb, MMdnn

More results and trained models are available in MODEL_ZOO.md.

| Model | Neck | Backbone | Training/test dataset | mAP | Model Link | Anchor | Angle Pred. | Reg. Loss | Angle Range | Data Augmentation | Configs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| RetinaNet-H | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 64.17 | Baidu Drive (j5l0) | H | Reg. | smooth L1 | 180 | × | cfgs_res50_dota_v15.py |
| RetinaNet-H | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 65.73 | Baidu Drive (jum2) | H | Reg. | smooth L1 | 90 | × | cfgs_res50_dota_v4.py |
| IoU-Smooth L1 | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 66.99 | Baidu Drive (bc83) | H | Reg. | iou-smooth L1 | 90 | × | cfgs_res50_dota_v5.py |
| RSDet | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 67.27 | Baidu Drive (6nt5) | H | Reg. | modulated loss | - | × | cfgs_res50_dota_rsdet_v2.py |
| CSL | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 67.38 | Baidu Drive (g3wt) | H | Cls.: Gaussian (r=1, w=10) | smooth L1 | 180 | × | cfgs_res50_dota_v45.py |
| DCL | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 67.39 | Baidu Drive (p9tu) | H | Cls.: BCL (w=180/256) | smooth L1 | 180 | × | cfgs_res50_dota_dcl_v5.py |
| GWD | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 68.93 | Baidu Drive (nb7w) | H | Reg. | gwd | 90 | × | cfgs_res50_dota_v10.py |
| GWD + SWA | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 69.92 | Baidu Drive (nb7w) | H | Reg. | gwd | 90 | × | cfgs_res50_dota_v10.py |
| R3Det | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 70.66 | Baidu Drive (30lt) | H->R | Reg. | smooth L1 | 90 | × | cfgs_res50_dota_r3det_v1.py |
| R3Det-DCL | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 71.21 | Baidu Drive (jueq) | H->R | Cls.: BCL (w=180/256) | iou-smooth L1 | 90->180 | × | cfgs_res50_dota_r3det_dcl_v1.py |
| R3Det-GWD | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 71.56 | Baidu Drive (8962) | H->R | Reg. | smooth L1->gwd | 90 | × | cfgs_res50_dota_r3det_gwd_v6.py |
| R2CNN (Faster-RCNN) | FPN | ResNet50_v1d 600->800 | DOTA1.0 trainval/test | 72.27 | Baidu Drive (wt2b) | H->R | Reg. | smooth L1 | 90 | × | cfgs_res50_dota_v1.py |

Docker image: docker pull yangxue2docker/yx-tf-det:tensorflow1.13.1-cuda10-gpu-py3

- Python 3.5 (Anaconda recommended)

- cuda 10.0

- OpenCV (cv2)

- tfplot 0.2.0 (optional)

- tensorflow-gpu 1.13

- tqdm 4.54.0

- Shapely 1.7.1

Download the pretrained weights you need from one of the following three options, then put them in $PATH_ROOT/dataloader/pretrained_weights.

- TensorFlow pretrained weights: resnet50_v1, resnet101_v1, resnet152_v1, efficientnet, mobilenet_v2, darknet53 (Baidu Drive (1jg2), Google Drive).

- MXNet pretrained weights (recommended in this repo): resnet_v1d, resnet_v1b; refer to gluon2TF.

- PyTorch pretrained weights: refer to pretrain_zoo.py and Others.

Please download the trained models provided by this project, then put them in $PATH_ROOT/output/pretained_weights.

```
cd $PATH_ROOT/libs/utils/cython_utils
rm *.so
rm *.c
rm *.cpp
python setup.py build_ext --inplace  # or: make

cd $PATH_ROOT/libs/utils/
rm *.so
rm *.c
rm *.cpp
python setup.py build_ext --inplace
```

If you want to train your own dataset, please note:

(1) Select the detector and dataset you want to use, and mark them as #DETECTOR and #DATASET (such as #DETECTOR=retinanet and #DATASET=DOTA).

(2) Modify parameters (such as CLASS_NUM, DATASET_NAME, VERSION, etc.) in $PATH_ROOT/libs/configs/#DATASET/#DETECTOR/cfgs_xxx.py.

(3) Copy $PATH_ROOT/libs/configs/#DATASET/#DETECTOR/cfgs_xxx.py to $PATH_ROOT/libs/configs/cfgs.py.

(4) Add category information in $PATH_ROOT/libs/label_name_dict/label_dict.py.

(5) Add data_name to $PATH_ROOT/data/io/read_tfrecord.py.
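Steps (3) and (4) above can be sketched in Python as follows. This is a minimal illustration and not the repository's own tooling: the helper names `activate_config` and `build_label_maps`, the `back_ground` entry for id 0, and the example class names are all assumptions made for this sketch. Check label_dict.py for the format the project actually expects.

```python
import os
import shutil


def activate_config(path_root, dataset, detector, cfg_name):
    """Copy libs/configs/<dataset>/<detector>/<cfg_name> to libs/configs/cfgs.py."""
    configs = os.path.join(path_root, "libs", "configs")
    src = os.path.join(configs, dataset, detector, cfg_name)
    dst = os.path.join(configs, "cfgs.py")
    if not os.path.isfile(src):
        raise FileNotFoundError("config not found: {}".format(src))
    shutil.copyfile(src, dst)
    return dst


def build_label_maps(class_names, background="back_ground"):
    """Map class names to integer ids, reserving id 0 for the background.

    The background name is an assumption of this sketch.
    """
    name_to_id = {background: 0}
    for idx, name in enumerate(class_names, start=1):
        name_to_id[name] = idx
    id_to_name = {idx: name for name, idx in name_to_id.items()}
    return name_to_id, id_to_name
```

For a hypothetical two-class dataset, `build_label_maps(["plane", "ship"])` gives `plane` the id 1 and `ship` the id 2, so CLASS_NUM in the config would be 2 under this convention.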

Make tfrecord

If the images are very large (as in the DOTA dataset), they need to be cropped first. Take the DOTA dataset as an example:

    cd $PATH_ROOT/dataloader/dataset/DOTA
    python data_crop.py

If the images do not need to be cropped, just convert the annotation files into xml format; refer to example.xml.

Then generate the tfrecord:

    cd $PATH_ROOT/dataloader/dataset/
    python convert_data_to_tfrecord.py --VOC_dir='/PATH/TO/DOTA/' --xml_dir='labeltxt' --image_dir='images' --save_name='train' --img_format='.png' --dataset='DOTA'
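To illustrate what cropping a large image involves, here is a rough sketch of computing overlapping tile coordinates with a sliding window. It is not taken from data_crop.py: the function names and the default `window` and `stride` values are hypothetical, and a real cropping script would also have to shift and clip the box annotations for each tile.

```python
def crop_origins(length, window, stride):
    """Return start offsets of windows that cover [0, length) along one axis."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if length <= window:
        # The image is smaller than one window: a single tile is enough.
        return [0]
    origins = list(range(0, length - window + 1, stride))
    # Add a final window flush with the border if the last one falls short.
    if origins[-1] + window < length:
        origins.append(length - window)
    return origins


def crop_boxes(width, height, window=600, stride=450):
    """List (xmin, ymin, xmax, ymax) tiles that cover a width x height image."""
    boxes = []
    for y in crop_origins(height, window, stride):
        for x in crop_origins(width, window, stride):
            boxes.append((x, y, min(x + window, width), min(y + window, height)))
    return boxes
```

With these assumed defaults, neighbouring tiles overlap by 150 pixels, which reduces the chance that an object is cut in half in every tile that contains it.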

Start training

    cd $PATH_ROOT/tools/#DETECTOR
    python train.py

For large-scale images, take the DOTA dataset as an example (the output files and visualizations are written to $PATH_ROOT/tools/#DETECTOR/test_dota/VERSION):

    cd $PATH_ROOT/tools/#DETECTOR
    python test_dota.py --test_dir='/PATH/TO/IMAGES/' --gpus=0,1,2,3,4,5,6,7 -ms -s

The -ms flag enables multi-scale testing (optional) and the -s flag enables visualization (optional).

Notice: To make it easy to resume an interrupted test, the result files are opened in 'a+' mode. If a model with the same #VERSION needs to be tested again, the original test results must be deleted first.
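The effect of the 'a+' mode can be seen in a small stand-alone sketch. The file name and the detection line below are made up for illustration and do not come from test_dota.py; the point is only that appending to an existing file keeps the old lines, so a second run without cleanup produces duplicated results.

```python
import os
import tempfile


def append_result(path, line):
    """Append one result line, creating the file on first use ('a+' mode)."""
    with open(path, "a+") as f:
        f.write(line + "\n")


def count_lines(path):
    """Count the lines currently stored in a result file."""
    with open(path) as f:
        return sum(1 for _ in f)


def demo():
    # Hypothetical result file and detection line, for illustration only.
    result_file = os.path.join(tempfile.mkdtemp(), "results_demo.txt")
    detection = "P0001 0.98 10 10 50 10 50 40 10 40"

    append_result(result_file, detection)  # first test run
    append_result(result_file, detection)  # second run, old results kept
    duplicated = count_lines(result_file)

    os.remove(result_file)                 # delete the old results first
    append_result(result_file, detection)  # clean re-run
    clean = count_lines(result_file)
    return duplicated, clean
```

Here `demo()` counts two lines after the repeated run and one line after the old file has been removed, which is why stale results should be deleted before re-testing the same version.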

For small-scale images, take the HRSC2016 dataset as an example:

    cd $PATH_ROOT/tools/#DETECTOR
    python test_hrsc2016.py --test_dir='/PATH/TO/IMAGES/' --gpu=0 --image_ext='bmp' --test_annotation_path='/PATH/TO/ANNOTATIONS' -s

The -s flag enables visualization (optional).

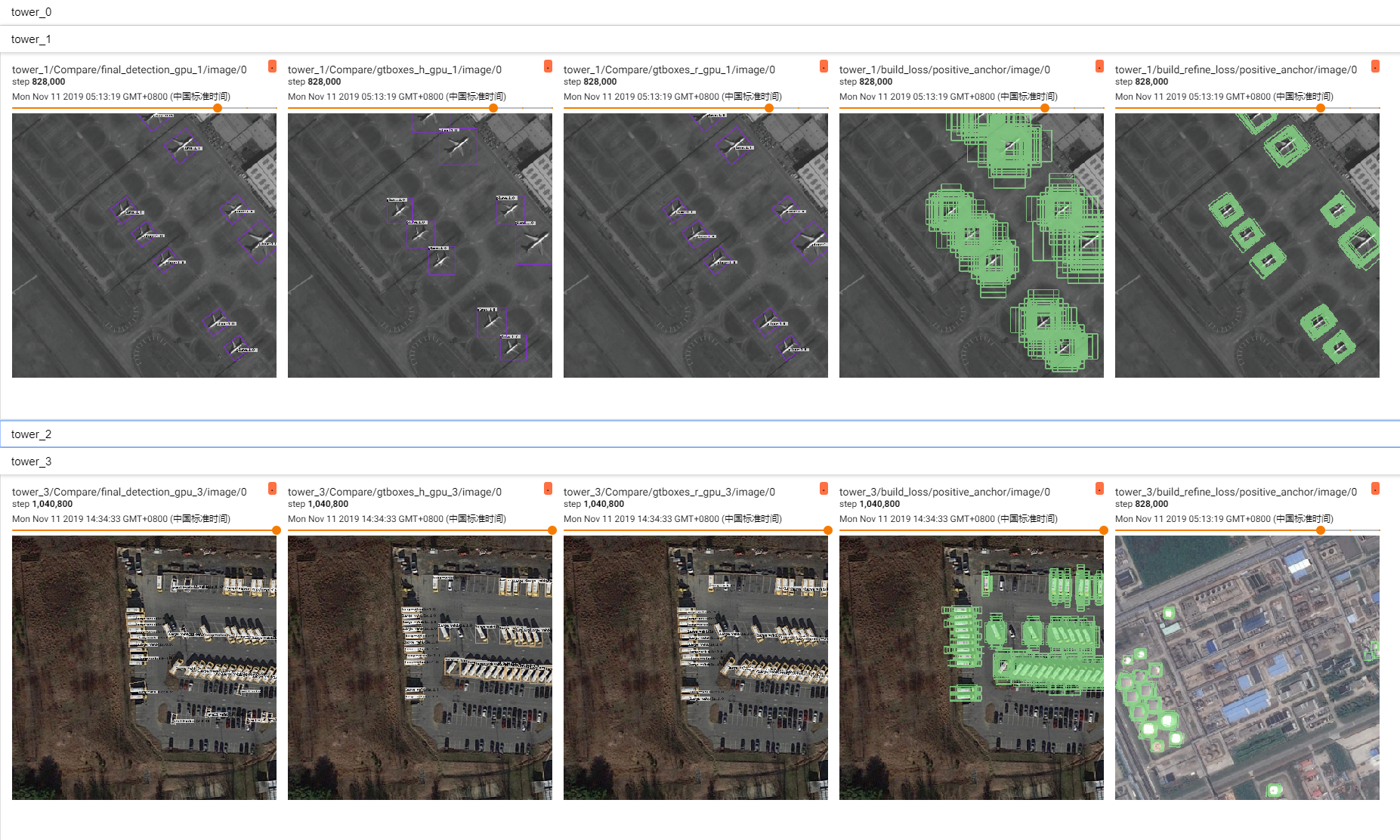

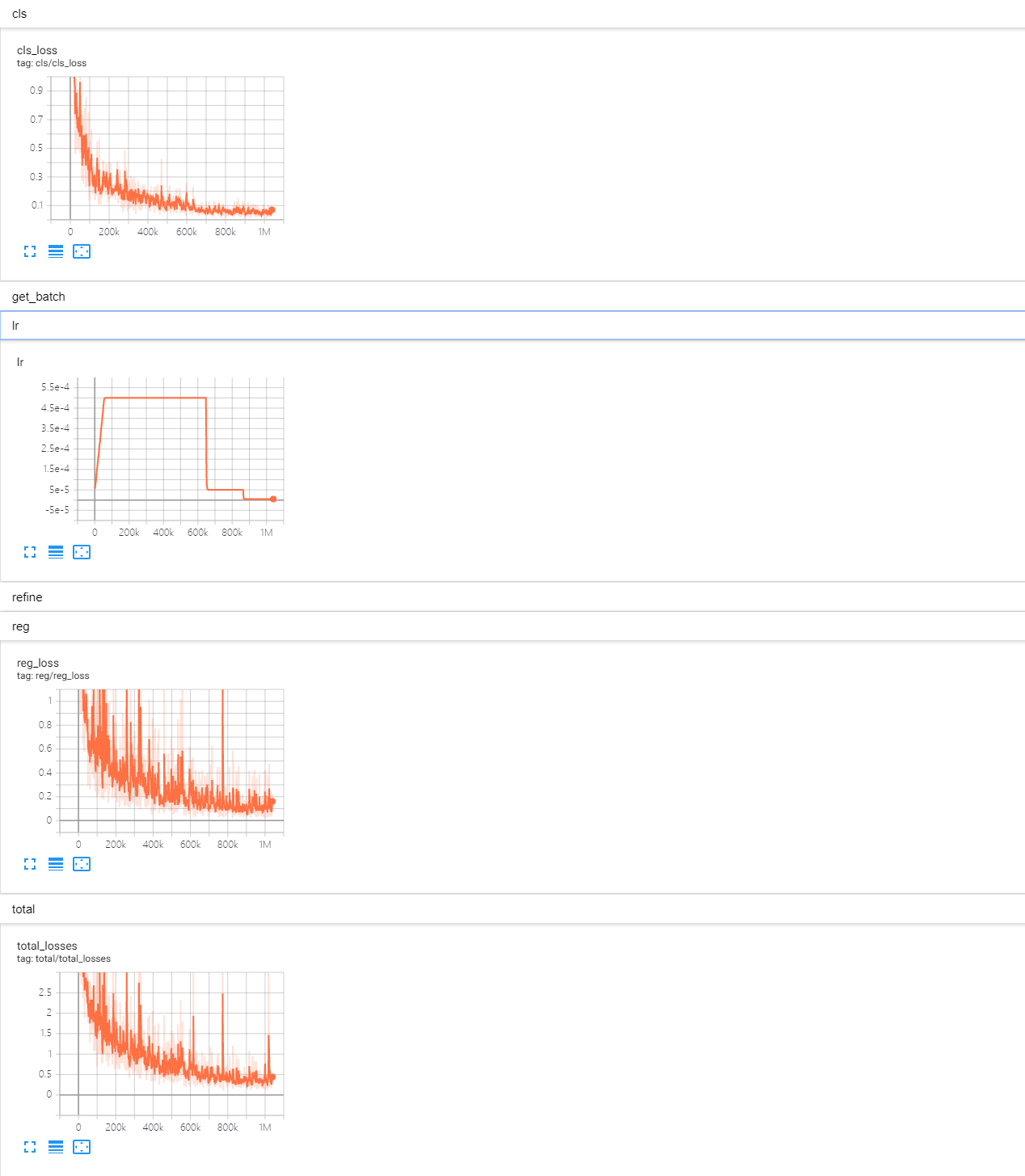

    cd $PATH_ROOT/output/summary
    tensorboard --logdir=.

If you find our code useful for your research, please consider citing the following papers.

@article{yang2021rethinking,

title={Rethinking Rotated Object Detection with Gaussian Wasserstein Distance Loss},

author={Yang, Xue and Yan, Junchi and Ming, Qi and Wang, Wentao and Zhang, Xiaopeng and Tian, Qi},

journal={arXiv preprint arXiv:2101.11952},

year={2021}

}

@inproceedings{yang2020dense,

title={Dense Label Encoding for Boundary Discontinuity Free Rotation Detection},

author={Yang, Xue and Hou, Liping and Zhou, Yue and Wang, Wentao and Yan, Junchi},

booktitle={Proceedings of the IEEE Computer Vision and Pattern Recognition (CVPR)},

year={2021}

}

@inproceedings{yang2020arbitrary,

title={Arbitrary-oriented object detection with circular smooth label},

author={Yang, Xue and Yan, Junchi},

booktitle={European Conference on Computer Vision (ECCV)},

pages={677--694},

year={2020},

organization={Springer}

}

@inproceedings{yang2021r3det,

title={R3Det: Refined Single-Stage Detector with Feature Refinement for Rotating Object},

author={Yang, Xue and Yan, Junchi and Feng, Ziming and He, Tao},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence (AAAI)},

year={2021}

}

@inproceedings{qian2021learning,

title={Learning modulated loss for rotated object detection},

author={Qian, Wen and Yang, Xue and Peng, Silong and Yan, Junchi and Guo, Yue},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence (AAAI)},

year={2021}

}

@article{yang2020scrdet++,

title={SCRDet++: Detecting Small, Cluttered and Rotated Objects via Instance-Level Feature Denoising and Rotation Loss Smoothing},

author={Yang, Xue and Yan, Junchi and Yang, Xiaokang and Tang, Jin and Liao, Wenglong and He, Tao},

journal={arXiv preprint arXiv:2004.13316},

year={2020}

}

@inproceedings{yang2019scrdet,

title={SCRDet: Towards more robust detection for small, cluttered and rotated objects},

author={Yang, Xue and Yang, Jirui and Yan, Junchi and Zhang, Yue and Zhang, Tengfei and Guo, Zhi and Sun, Xian and Fu, Kun},

booktitle={Proceedings of the IEEE International Conference on Computer Vision (ICCV)},

pages={8232--8241},

year={2019}

}

1. https://github.com/endernewton/tf-faster-rcnn

2. https://github.com/zengarden/light_head_rcnn

3. https://github.com/tensorflow/models/tree/master/research/object_detection

4. https://github.com/fizyr/keras-retinanet