KAPAO is an efficient single-stage multi-person human pose estimation method that models keypoints and poses as objects within a dense anchor-based detection framework. KAPAO simultaneously detects pose objects and keypoint objects and fuses the detections to predict human poses:

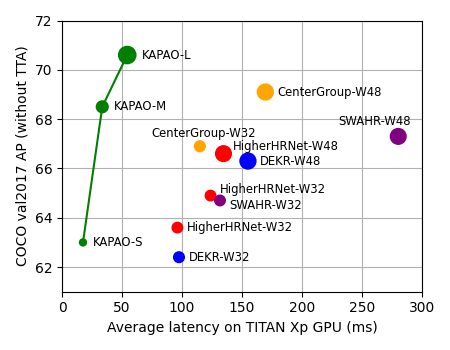
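The fusion of pose objects and keypoint objects can be illustrated with a minimal Python sketch. It is not the repository's implementation: the function name `fuse_detections`, the tuple layouts, and the `max_dist` threshold are assumptions chosen for illustration. Each keypoint object is matched to the pose whose corresponding keypoint is nearest, and it overwrites that keypoint only if it lies within `max_dist` pixels and has a higher confidence.

```python
import math


def fuse_detections(poses, kp_objects, max_dist=25.0):
    """Fuse keypoint-object detections into pose-object detections.

    poses:      list of poses, each a list of (x, y, conf) per keypoint index
    kp_objects: list of (kp_index, x, y, conf) keypoint-object detections
    max_dist:   hypothetical matching radius in pixels (an assumption)
    """
    fused = [list(pose) for pose in poses]  # copy so the inputs stay untouched
    for k, x, y, conf in kp_objects:
        best_i, best_d = None, max_dist
        # Find the pose whose k-th keypoint is closest to this detection.
        for i, pose in enumerate(poses):
            px, py, _ = pose[k]
            d = math.hypot(x - px, y - py)
            if d <= best_d:
                best_i, best_d = i, d
        # Overwrite only when a pose is in range and the detection is more confident.
        if best_i is not None and conf > fused[best_i][k][2]:
            fused[best_i][k] = (x, y, conf)
    return fused
```

In this sketch a keypoint object that is far from every pose is simply ignored, and a low-confidence keypoint object never replaces a more confident pose keypoint.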

When not using test-time augmentation (TTA), KAPAO is much faster and more accurate than previous single-stage methods such as DEKR, HigherHRNet, HigherHRNet + SWAHR, and CenterGroup.

This repository contains the official PyTorch implementation for the paper:

Rethinking Keypoint Representations: Modeling Keypoints and Poses as Objects for Multi-Person Human Pose Estimation.

Our code was forked from ultralytics/yolov5 at commit 5487451.

- If you haven't already, install Anaconda or Miniconda.

- Create a new conda environment with Python 3.6:

$ conda create -n kapao python=3.6

- Activate the environment:

$ conda activate kapao

- Clone this repo:

$ git clone https://github.com/wmcnally/kapao.git

- Install the dependencies:

$ cd kapao && pip install -r requirements.txt

- Download the trained models:

$ python data/scripts/download_models.py

Note: FPS calculations include all processing (image loading, resizing, inference, plotting / tracking, etc.). See the script arguments for inference options.
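As a rough illustration of what an end-to-end FPS figure means, the sketch below times a whole per-frame pipeline rather than inference alone. The names `measure_fps` and `process_frame` are hypothetical and do not come from the demo scripts.

```python
import time


def measure_fps(frames, process_frame):
    """Return the average frames per second over the whole pipeline.

    process_frame is a hypothetical callable that does everything for one
    frame (loading, resizing, inference, plotting), so all of it is timed.
    """
    start = time.perf_counter()
    n = 0
    for frame in frames:
        process_frame(frame)
        n += 1
    elapsed = time.perf_counter() - start
    return n / elapsed if elapsed > 0 else float("inf")
```

Timing only the model's forward pass would yield a higher number than this end-to-end measurement, which is why the two should not be compared directly.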

To generate the four images in the GIF above:

$ python demos/image.py --bbox

$ python demos/image.py --bbox --pose --face --no-kp-dets

$ python demos/image.py --bbox --pose --face --no-kp-dets --kp-bbox

$ python demos/image.py --pose --face

KAPAO runs fastest on low-resolution video with few people in the frame. This demo runs KAPAO-S on a single-person 480p dance video using an input size of 1024. The inference speed is ~9.5 FPS on our CPU and ~60 FPS on our TITAN Xp.

To display the results in real-time:

$ python demos/video.py --face --display

To create the GIF above:

$ python demos/video.py --face --device cpu --gif

This demo runs KAPAO-S on a 720p flash mob video using an input size of 1280.

To display the results in real-time:

$ python demos/video.py --yt-id 2DiQUX11YaY --tag 136 --imgsz 1280 --color 255 0 255 --start 188 --end 196 --display

To create the GIF above:

$ python demos/video.py --yt-id 2DiQUX11YaY --tag 136 --imgsz 1280 --color 255 0 255 --start 188 --end 196 --gif

This demo runs KAPAO-L on a 480p clip from the TV show Squid Game using an input size of 1024. The plotted poses constitute keypoint objects only.

To display the results in real-time:

$ python demos/video.py --yt-id nrchfeybHmw --imgsz 1024 --weights kapao_l_coco.pt --conf-thres-kp 0.01 --kp-obj --face --start 56 --end 72 --display

To create the GIF above:

$ python demos/video.py --yt-id nrchfeybHmw --imgsz 1024 --weights kapao_l_coco.pt --conf-thres-kp 0.01 --kp-obj --face --start 56 --end 72 --gif

This demo runs KAPAO-S on a 1080p slow motion squash video. It uses a simple player tracking algorithm based on the frame-to-frame pose differences.
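One way to track players from frame-to-frame pose differences is sketched below. This is an assumption about how such a tracker could work, not the logic in `demos/squash.py`; the helper names `pose_distance` and `assign_players` are hypothetical, and the sketch assumes the same number of players is detected in consecutive frames.

```python
import math
from itertools import permutations


def pose_distance(a, b):
    """Mean Euclidean distance between corresponding (x, y) keypoints."""
    return sum(math.hypot(ax - bx, ay - by)
               for (ax, ay), (bx, by) in zip(a, b)) / len(a)


def assign_players(prev_poses, curr_poses):
    """Reorder curr_poses so that index i continues track i of prev_poses.

    Tries every assignment and keeps the one with the smallest total pose
    difference, which is cheap for the two players of a squash match.
    """
    best = min(
        permutations(range(len(curr_poses))),
        key=lambda perm: sum(pose_distance(prev_poses[i], curr_poses[j])
                             for i, j in enumerate(perm)),
    )
    return [curr_poses[j] for j in best]
```

Because the video is slow motion, poses change little between frames, so the smallest total difference is a reasonable proxy for identity in this setting.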

To display the inference results in real-time:

$ python demos/squash.py --display --fps

To create the GIF above:

$ python demos/squash.py --start 42 --end 50 --gif --fps

Pose objects generalize well and can even be detected in depth video. Here KAPAO-S was run on a depth video from a fencing action recognition dataset.

The depth video above can be downloaded directly from here.

To create the GIF above:

$ python demos/video.py -p 2016-01-04_21-33-35_Depth.avi --face --start 0 --end -1 --gif --gif-size 480 360

A web demo was integrated into Hugging Face Spaces with Gradio (credit to @AK391). It uses KAPAO-S to run CPU inference on short video clips.

Download the COCO dataset:

$ sh data/scripts/get_coco_kp.sh

Validation without TTA:

- KAPAO-S (63.0 AP):

$ python val.py --rect

- KAPAO-M (68.5 AP):

$ python val.py --rect --weights kapao_m_coco.pt

- KAPAO-L (70.6 AP):

$ python val.py --rect --weights kapao_l_coco.pt

Validation with TTA:

- KAPAO-S (64.3 AP):

$ python val.py --scales 0.8 1 1.2 --flips -1 3 -1

- KAPAO-M (69.6 AP):

$ python val.py --weights kapao_m_coco.pt \
  --scales 0.8 1 1.2 --flips -1 3 -1

- KAPAO-L (71.6 AP):

$ python val.py --weights kapao_l_coco.pt \
  --scales 0.8 1 1.2 --flips -1 3 -1
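The `--scales 0.8 1 1.2 --flips -1 3 -1` arguments appear to pair each scale with a flip code. Assuming the YOLOv5 convention that 3 means a left-right flip and -1 means no flip, predictions from each augmented image must be mapped back to the original frame before they are merged. The sketch below shows that mapping for COCO keypoints; `undo_tta` and `unflip_lr` are hypothetical helpers, not functions from this repository, and the mirroring formula `img_width - x` is an assumption.

```python
# Left/right partner of each of the 17 COCO keypoints
# (nose, eyes, ears, shoulders, elbows, wrists, hips, knees, ankles).
COCO_FLIP_INDEX = [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]


def unflip_lr(keypoints, img_width):
    """Undo a left-right flip: mirror x and swap left/right keypoints."""
    mirrored = [(img_width - x, y) for x, y in keypoints]
    return [mirrored[j] for j in COCO_FLIP_INDEX]


def undo_tta(keypoints, scale, flip, img_width):
    """Map keypoints predicted on a scaled, optionally flipped image back
    to the original image. img_width is the original image width."""
    kps = [(x / scale, y / scale) for x, y in keypoints]
    if flip == 3:  # assumed code for a left-right flip
        kps = unflip_lr(kps, img_width)
    return kps
```

Swapping left and right indices matters because a left wrist in the mirrored image corresponds to a right wrist in the original.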

Testing (`--task test`) with TTA:

- KAPAO-S (63.8 AP):

$ python val.py --scales 0.8 1 1.2 --flips -1 3 -1 --task test

- KAPAO-M (68.8 AP):

$ python val.py --weights kapao_m_coco.pt \
  --scales 0.8 1 1.2 --flips -1 3 -1 --task test

- KAPAO-L (70.3 AP):

$ python val.py --weights kapao_l_coco.pt \
  --scales 0.8 1 1.2 --flips -1 3 -1 --task test

The following commands were used to train the KAPAO models on 4 V100s with 32GB memory each.

KAPAO-S:

python -m torch.distributed.launch --nproc_per_node 4 train.py \
--img 1280 \
--batch 128 \
--epochs 500 \
--data data/coco-kp.yaml \
--hyp data/hyps/hyp.kp-p6.yaml \
--val-scales 1 \
--val-flips -1 \
--weights yolov5s6.pt \
--project runs/s_e500 \
--name train \
--workers 128

KAPAO-M:

python train.py \
--img 1280 \
--batch 72 \
--epochs 500 \
--data data/coco-kp.yaml \
--hyp data/hyps/hyp.kp-p6.yaml \
--val-scales 1 \
--val-flips -1 \
--weights yolov5m6.pt \
--project runs/m_e500 \
--name train \
--workers 128

KAPAO-L:

python train.py \
--img 1280 \
--batch 48 \
--epochs 500 \
--data data/coco-kp.yaml \
--hyp data/hyps/hyp.kp-p6.yaml \
--val-scales 1 \
--val-flips -1 \
--weights yolov5l6.pt \
--project runs/l_e500 \
--name train \
--workers 128

Note: DDP is usually recommended but we found training was less stable for KAPAO-M/L using DDP. We are investigating this issue.

- Install the CrowdPose API to your conda environment:

$ cd .. && git clone https://github.com/Jeff-sjtu/CrowdPose.git

$ cd CrowdPose/crowdpose-api/PythonAPI && sh install.sh && cd ../../../kapao

- Download the CrowdPose dataset:

$ sh data/scripts/get_crowdpose.sh

- KAPAO-S (63.8 AP):

$ python val.py --data crowdpose.yaml \
  --weights kapao_s_crowdpose.pt --scales 0.8 1 1.2 --flips -1 3 -1

- KAPAO-M (67.1 AP):

$ python val.py --data crowdpose.yaml \
  --weights kapao_m_crowdpose.pt --scales 0.8 1 1.2 --flips -1 3 -1

- KAPAO-L (68.9 AP):

$ python val.py --data crowdpose.yaml \
  --weights kapao_l_crowdpose.pt --scales 0.8 1 1.2 --flips -1 3 -1

The following commands were used to train the KAPAO models on 4 V100s with 32GB memory each.

Training was performed on the trainval split with no validation.

The test results above were generated using the last model checkpoint.

KAPAO-S:

python -m torch.distributed.launch --nproc_per_node 4 train.py \
--img 1280 \
--batch 128 \
--epochs 300 \
--data data/crowdpose.yaml \
--hyp data/hyps/hyp.kp-p6.yaml \
--val-scales 1 \
--val-flips -1 \
--weights yolov5s6.pt \
--project runs/cp_s_e300 \
--name train \
--workers 128 \
--noval

KAPAO-M:

python train.py \
--img 1280 \
--batch 72 \
--epochs 300 \
--data data/crowdpose.yaml \
--hyp data/hyps/hyp.kp-p6.yaml \
--val-scales 1 \
--val-flips -1 \
--weights yolov5m6.pt \
--project runs/cp_m_e300 \
--name train \
--workers 128 \
--noval

KAPAO-L:

python train.py \
--img 1280 \
--batch 48 \
--epochs 300 \
--data data/crowdpose.yaml \
--hyp data/hyps/hyp.kp-p6.yaml \
--val-scales 1 \
--val-flips -1 \
--weights yolov5l6.pt \
--project runs/cp_l_e300 \
--name train \
--workers 128 \
--noval