This repository contains the code (in PyTorch) for "Soft Rasterizer: A Differentiable Renderer for Image-based 3D Reasoning" (ICCV'2019 Oral) by Shichen Liu, Tianye Li, Weikai Chen and Hao Li.

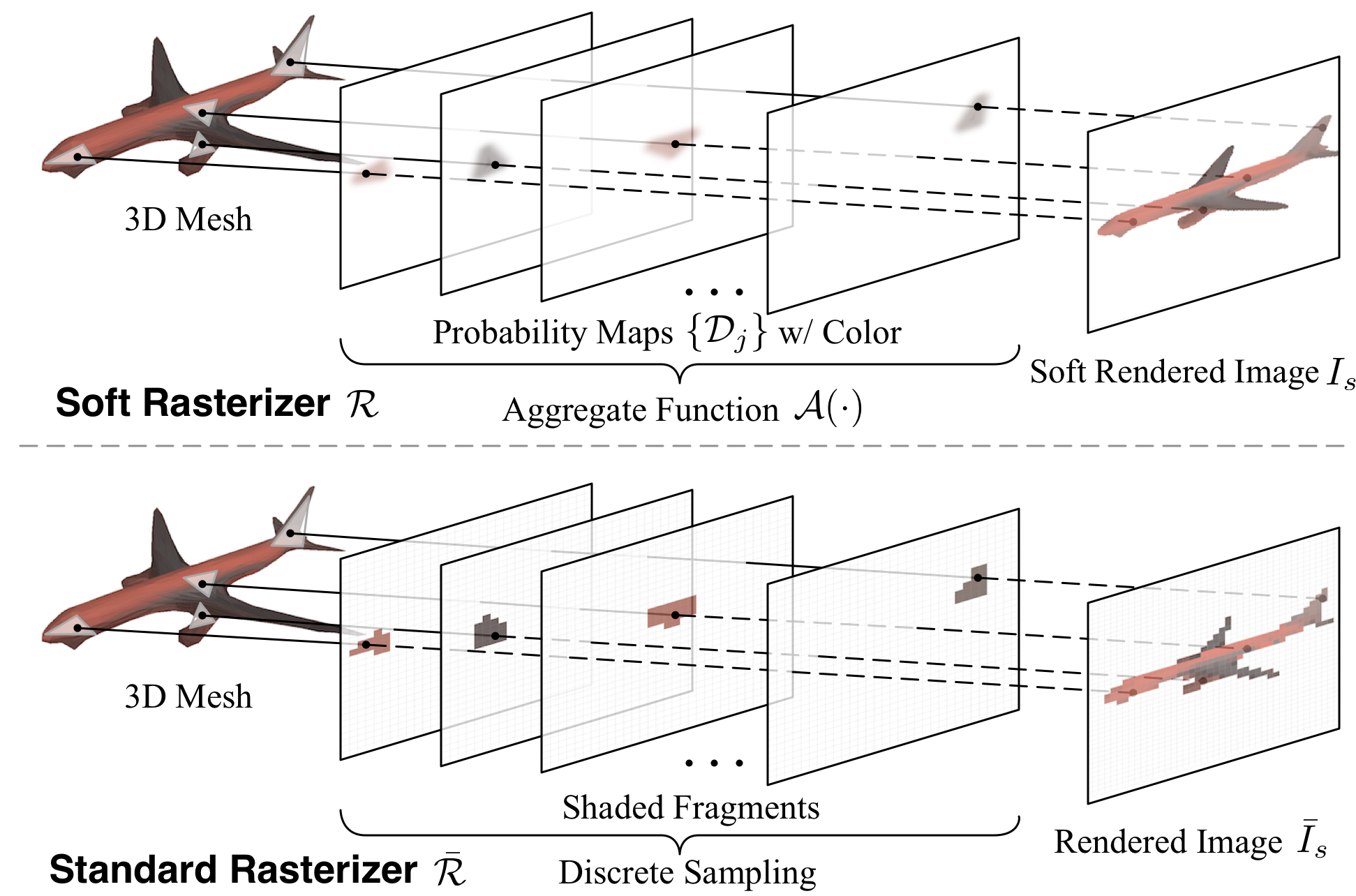

Soft Rasterizer (SoftRas) is a truly differentiable renderer framework with a novel formulation that views rendering as a differentiable aggregation process that fuses the probabilistic contributions of all mesh triangles to the rendered pixels. Thanks to this "soft" formulation, our framework can (1) directly render colorized meshes using differentiable functions and (2) back-propagate efficient supervision signals to mesh vertices and their attributes (color, normal, etc.) from various forms of image representation, including silhouettes, shading and color images.
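To make the "probabilistic contribution" idea concrete, here is a minimal pure-Python sketch of the per-triangle probability map and the silhouette aggregation as we understand them from the paper: for a pixel, D_j = sigmoid(delta * d^2 / sigma), where d is the distance to the closest edge of triangle j and delta is +1 inside and -1 outside; the silhouette is 1 - prod_j (1 - D_j). It works on one pixel and 2D screen-space triangles only, and all function names are hypothetical; it is not the repository's CUDA implementation.

```python
import math

def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def seg_dist2(p, a, b):
    # Squared distance from 2D point p to the segment ab.
    dx, dy = b[0] - a[0], b[1] - a[1]
    len2 = dx * dx + dy * dy
    t = 0.0 if len2 == 0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / len2
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    cx, cy = a[0] + t * dx, a[1] + t * dy
    return (p[0] - cx) ** 2 + (p[1] - cy) ** 2

def inside(p, tri):
    # p is inside (or on) tri if the edge cross products never disagree in sign.
    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])
    s = [cross(tri[k], tri[(k + 1) % 3], p) for k in range(3)]
    return not (min(s) < 0 < max(s))

def soft_probability(p, tri, sigma):
    # D_j = sigmoid(delta * d^2 / sigma); delta = +1 inside, -1 outside,
    # d = distance from the pixel to the closest triangle edge.
    d2 = min(seg_dist2(p, tri[k], tri[(k + 1) % 3]) for k in range(3))
    delta = 1.0 if inside(p, tri) else -1.0
    return sigmoid(delta * d2 / sigma)

def soft_silhouette(p, triangles, sigma):
    # Silhouette aggregation over all triangles: 1 - prod_j (1 - D_j).
    prod = 1.0
    for tri in triangles:
        prod *= 1.0 - soft_probability(p, tri, sigma)
    return 1.0 - prod
```

A pixel exactly on an edge always gets 0.5. As sigma shrinks the map approaches a hard 0/1 rasterization, and as it grows each triangle's influence spreads past its edges, which is what produces the blurrier renderings.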

The code is built on Python 3 and PyTorch 1.6.0. CUDA (10.1) is required to install the module. Our code extends this repo.

Update (6/3/2021): we added test models and reconstructed color meshes (see below) and slightly optimized the code structure. The previous version is archived in the legacy branch.

To install the module, run:

```bash
python setup.py install
```

We demonstrate the rendering effects provided by SoftRas. Realistic rendering results (1st and 2nd columns) can be achieved with a proper setting of sigma and gamma. With larger sigma and gamma, one obtains renderings with stronger transparency and blurriness (3rd and 4th columns).

```bash
CUDA_VISIBLE_DEVICES=0 python examples/demo_render.py
```
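The role of gamma can be illustrated with a small pure-Python sketch of the color aggregation described in the paper, as we understand it: each triangle's color at a pixel is weighted by w_j proportional to D_j * exp(z_j / gamma), plus a background term proportional to exp(eps / gamma), where z_j is a normalized inverse depth (larger means closer). The function name, the default `eps`, and the single-pixel interface are assumptions made for illustration, not the repository's API.

```python
import math

def soft_aggregate(colors, probs, depths, gamma, background=(0.0, 0.0, 0.0), eps=1e-3):
    """Blend per-triangle colors at one pixel (hypothetical helper).

    colors: RGB tuple per triangle; probs: D_j in [0, 1];
    depths: normalized inverse depth z_j in [0, 1] (larger = closer).
    """
    # Logits z_j / gamma for the triangles plus eps / gamma for the background.
    logits = [z / gamma for z in depths] + [eps / gamma]
    m = max(logits)  # subtracting the max keeps exp() from overflowing for small gamma
    exps = [math.exp(l - m) for l in logits]
    numer = [d * e for d, e in zip(probs, exps[:-1])]
    denom = sum(numer) + exps[-1]
    w_tri = [n / denom for n in numer]
    w_bg = exps[-1] / denom
    return tuple(
        sum(w * c[ch] for w, c in zip(w_tri, colors)) + w_bg * background[ch]
        for ch in range(3)
    )
```

With a small gamma the closest covering triangle takes nearly all of the weight, behaving like a z-buffer; with a large gamma the weights even out, so farther triangles (and the background) show through, which is the transparency effect.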

By incorporating SoftRas with a simple mesh generator, one can train the network with multi-view images only, without requiring any 3D supervision. At test time, one can reconstruct the 3D mesh, along with the mesh texture, from a single RGB image. Below we show the results of single-view mesh reconstruction on ShapeNet.
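Supervision from multi-view silhouettes alone comes down to comparing rendered soft silhouettes with the ground-truth masks of each view. The paper uses a negative-IoU silhouette loss, 1 - sum(S * S_hat) / sum(S + S_hat - S * S_hat); the sketch below computes it in pure Python on flattened masks and averages it over views. The helper names are hypothetical, any regularization terms are omitted, and the repository's PyTorch training code should be treated as the reference.

```python
def neg_iou_loss(pred, target, eps=1e-6):
    # pred, target: flattened soft silhouettes with values in [0, 1].
    inter = sum(p * t for p, t in zip(pred, target))
    union = sum(p + t - p * t for p, t in zip(pred, target))
    return 1.0 - inter / (union + eps)

def multiview_silhouette_loss(preds, targets):
    # Average the per-view loss over all supervising views.
    losses = [neg_iou_loss(p, t) for p, t in zip(preds, targets)]
    return sum(losses) / len(losses)
```

Because the rendered silhouette is soft, this loss is differentiable with respect to the rendered values and, through the renderer, the mesh vertices.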

Download the ShapeNet rendering dataset provided by NMR (Neural 3D Mesh Renderer):

```bash
bash examples/recon/download_dataset.sh
```

To train the model:

```bash
CUDA_VISIBLE_DEVICES=0 python examples/recon/train.py -eid recon
```

To test the model:

```bash
CUDA_VISIBLE_DEVICES=0 python examples/recon/test.py -eid recon \
    -d 'data/results/models/recon/checkpoint_0200000.pth.tar'
```

We also provide our trained models here:

- SoftRas trained with silhouette supervision (62+ IoU): Google Drive
- SoftRas trained with shading supervision (64+ IoU; test with the `--shading-model` arg): Google Drive
- SoftRas reconstructed meshes with color (randomly sampled): Google Drive
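As we understand it, the IoU figures quoted for these models are 3D volumetric IoU in percent, averaged over the ShapeNet categories. The sketch below shows that kind of metric under the assumption that each reconstructed and ground-truth mesh has already been voxelized into a set of occupied (i, j, k) indices; the voxelization step and the helper names are hypothetical and not taken from the repository's test script.

```python
def voxel_iou(pred, gt):
    # pred, gt: sets of occupied voxel indices (i, j, k).
    union = pred | gt
    if not union:
        return 1.0  # both empty: treat as a perfect match
    return len(pred & gt) / len(union)

def mean_category_iou(results):
    # results: {category: [(pred_voxels, gt_voxels), ...]}
    # Average IoU within each category, then across categories, in percent.
    per_category = {
        cat: sum(voxel_iou(p, g) for p, g in pairs) / len(pairs)
        for cat, pairs in results.items()
    }
    return 100.0 * sum(per_category.values()) / len(per_category), per_category
```

Averaging per category first keeps categories with many test shapes from dominating the headline number.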

SoftRas provides strong supervision for image-based mesh deformation. We visualize the deformation process from a sphere to a car model and then to a plane given supervision from multi-view silhouette images.

```bash
CUDA_VISIBLE_DEVICES=0 python examples/demo_deform.py
```

The optimized mesh is included in `data/obj/plane/plane.obj`.
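The deformation demo back-propagates a silhouette loss through the renderer to the mesh vertices. As a much smaller, self-contained analogue, the sketch below fits a single shape parameter, the radius of a 2D disc, to a target silhouette by gradient descent on a soft negative-IoU loss. The disc "renderer", learning rate and step count are hypothetical choices for illustration, and central finite differences stand in for the autograd gradients the real PyTorch demo uses.

```python
import math

def sigmoid(x):
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def render_disc(radius, size=32, sigma=1.0):
    # Soft silhouette of a disc centered in a size x size image: each pixel
    # gets sigmoid(sign * d^2 / sigma), d = distance to the disc boundary.
    c = size / 2.0
    img = []
    for y in range(size):
        for x in range(size):
            signed = radius - math.hypot(x + 0.5 - c, y + 0.5 - c)  # > 0 inside
            img.append(sigmoid(math.copysign(signed * signed, signed) / sigma))
    return img

def neg_iou(pred, target, eps=1e-6):
    inter = sum(p * t for p, t in zip(pred, target))
    union = sum(p + t - p * t for p, t in zip(pred, target))
    return 1.0 - inter / (union + eps)

def fit_radius(target, r0=4.0, lr=5.0, steps=150, h=1e-3):
    # Gradient descent on the soft IoU loss; the gradient with respect to
    # the radius is estimated by central differences.
    r = r0
    for _ in range(steps):
        grad = (neg_iou(render_disc(r + h), target)
                - neg_iou(render_disc(r - h), target)) / (2 * h)
        r -= lr * grad
    return r

target = render_disc(10.0, sigma=0.01)  # near-binary "ground truth" silhouette
fitted = fit_radius(target)
print(f"fitted radius: {fitted:.2f}")
```

Because the predicted silhouette is soft, the recovered radius lands close to, but not exactly at, the target's; the point is that the loss gives a usable gradient even when the initial shape is far too small.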

With scheduled blurry renderings, one can obtain a smoother energy landscape that helps avoid local minima. Below we demonstrate how a color cube is fitted to the target image in the presence of large occlusions. The blurry renderings and the corresponding rendering losses are shown in the 3rd and 4th columns, respectively.
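One way to picture a blur schedule is to start with a large sigma, where pixels several units outside a triangle still receive a non-negligible soft coverage (and therefore a gradient), and shrink sigma toward a sharp rendering as the fit improves. The geometric schedule, its endpoints and the helper names below are hypothetical illustrations, not the settings used in the repository's fitting examples.

```python
import math

def sigmoid(x):
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def geometric_schedule(start, end, num_steps):
    # Interpolate geometrically from start to end (inclusive) over num_steps values.
    if num_steps == 1:
        return [start]
    ratio = (end / start) ** (1.0 / (num_steps - 1))
    return [start * ratio ** k for k in range(num_steps)]

def outside_signal(dist, sigma):
    # Soft coverage a pixel receives from a triangle edge `dist` units away
    # (outside the triangle): sigmoid(-dist^2 / sigma).
    return sigmoid(-dist * dist / sigma)

for sigma in geometric_schedule(10.0, 0.01, 4):
    print(f"sigma={sigma:g}  signal at 3 units: {outside_signal(3.0, sigma):.3g}")
```

The printed signal drops by many orders of magnitude as sigma shrinks, which is why early, blurry stages can pull misaligned or occluded parts into place while later, sharp stages refine the details.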

We also fit the SMPL parametric body model to a target image in which a body part (the right hand) is entirely occluded in the input view.

Shichen Liu: liushichen95@gmail.com

Any discussions or concerns are welcome!

If you find our project useful in your research, please consider citing:

```bibtex
@article{liu2019softras,
  title={Soft Rasterizer: A Differentiable Renderer for Image-based 3D Reasoning},
  author={Liu, Shichen and Li, Tianye and Chen, Weikai and Li, Hao},
  journal={The IEEE International Conference on Computer Vision (ICCV)},
  month = {Oct},
  year={2019}
}

@article{liu2020general,
  title={A General Differentiable Mesh Renderer for Image-based 3D Reasoning},
  author={Liu, Shichen and Li, Tianye and Chen, Weikai and Li, Hao},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year={2020},
  publisher={IEEE}
}
```