An extension to the Apache Spark framework that allows easy and fast processing of very large geospatial datasets.

Mosaic was created to simplify the implementation of scalable geospatial data pipelines by binding together common open source geospatial libraries via Apache Spark, with a set of examples and best practices for common geospatial use cases.

Mosaic provides geospatial tools for

- Data ingestion (WKT, WKB, GeoJSON)
- Data processing
    - Geometry and geography ST_ operations (with default JTS or ESRI)
    - Indexing (with default H3 or BNG)
    - Chipping of polygons and lines over an indexing grid co-developed with Ordnance Survey and Microsoft
- Data visualization (Kepler)
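To make the three ingestion formats concrete, here is a minimal pure-Python sketch that encodes the same point as WKT, WKB, and GeoJSON. It uses only the standard library and does not call Mosaic; the helper names (`point_to_wkt`, `point_to_wkb`, `point_to_geojson`, `wkb_to_point`) are hypothetical and exist only for this illustration.

```python
import json
import struct


def point_to_wkt(x, y):
    """Encode a 2D point as Well-Known Text."""
    return f"POINT ({x} {y})"


def point_to_wkb(x, y):
    """Encode a 2D point as little-endian Well-Known Binary.

    Layout: 1 byte order flag (1 = little-endian), a 4-byte geometry
    type (1 = Point), then x and y as 8-byte doubles.
    """
    return struct.pack("<BIdd", 1, 1, x, y)


def point_to_geojson(x, y):
    """Encode a 2D point as a GeoJSON geometry string."""
    return json.dumps({"type": "Point", "coordinates": [x, y]})


def wkb_to_point(data):
    """Decode a little-endian WKB point back into an (x, y) tuple."""
    byte_order, geom_type, x, y = struct.unpack("<BIdd", data)
    if byte_order != 1 or geom_type != 1:
        raise ValueError("this sketch only handles little-endian WKB points")
    return x, y


if __name__ == "__main__":
    lon, lat = -73.98, 40.75  # illustrative coordinates only
    print(point_to_wkt(lon, lat))
    print(point_to_wkb(lon, lat).hex())
    print(point_to_geojson(lon, lat))
```

All three strings describe the same geometry. WKT and GeoJSON are human-readable, while WKB is the compact binary form.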

The supported languages are Scala, Python, R, and SQL.

The Mosaic library is written in Scala to guarantee maximum performance with Spark and, where possible, it uses code generation for an extra performance boost.

The other supported languages (Python, R and SQL) are thin wrappers around the Scala code.

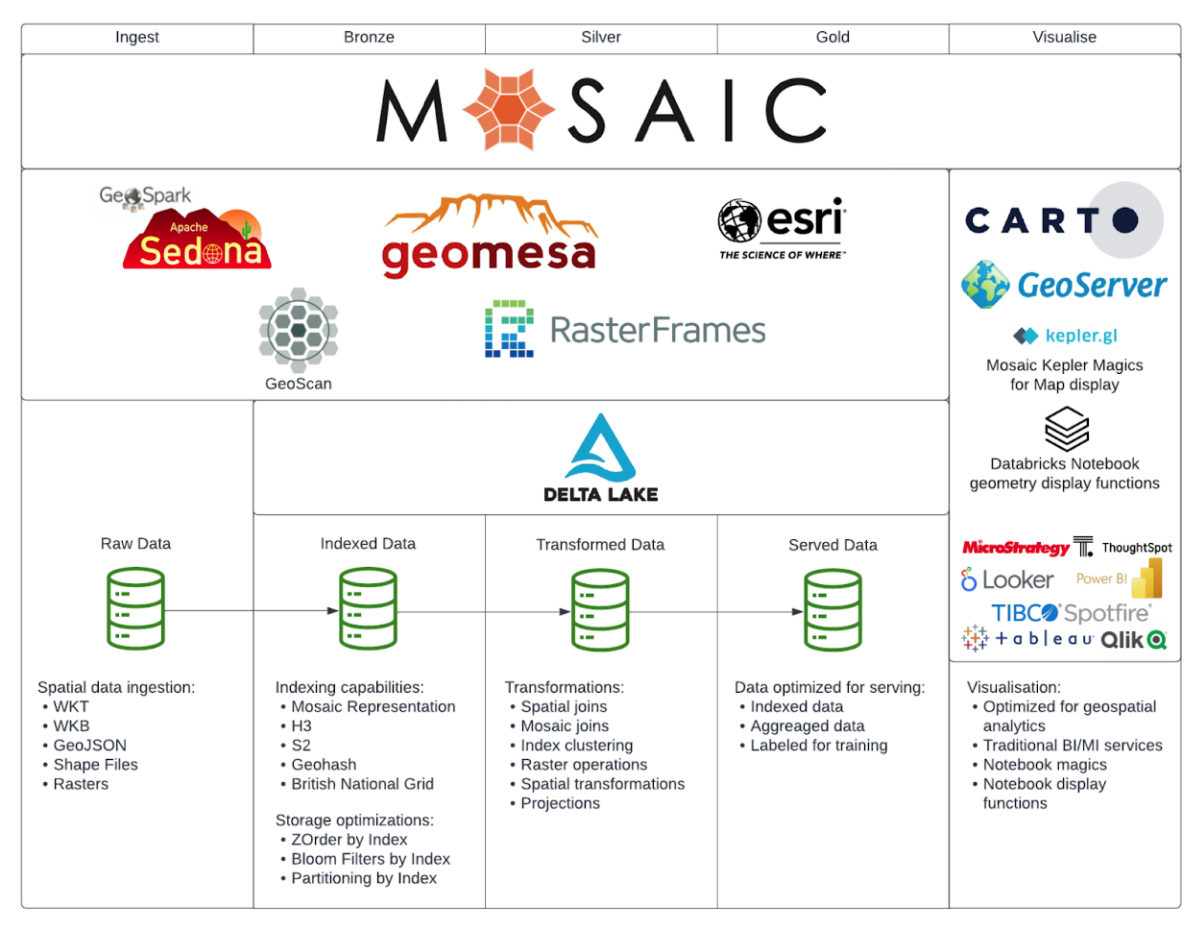

Image1: Mosaic logical design.

We recommend using Databricks Runtime versions 11.3 LTS or 12.2 LTS with Photon enabled; this will leverage the Databricks H3 expressions when using the H3 grid system.

As of the 0.3.11 release, Mosaic issues the following warning when initialized on a cluster that is neither Photon Runtime nor Databricks Runtime ML [ADB | AWS | GCP]:

DEPRECATION WARNING: Mosaic is not supported on the selected Databricks Runtime. Mosaic will stop working on this cluster after v0.3.x. Please use a Databricks Photon-enabled Runtime (for performance benefits) or Runtime ML (for spatial AI benefits).

If you receive this warning in v0.3.11+, start planning a move to a supported runtime. We are making this change to streamline Mosaic internals and align them with future product APIs, which are powered by Photon. As part of this change, Mosaic will standardize on JTS as its default and supported Vector Geometry Provider.
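If you want to flag unsupported clusters in your own tooling before the warning appears, a rough heuristic is to inspect the runtime version string. The sketch below is not Mosaic's actual check. It assumes version strings shaped like `11.3.x-photon-scala2.12` or `12.2.x-cpu-ml-scala2.12`, and the function name `looks_like_supported_runtime` is hypothetical.

```python
import re


def looks_like_supported_runtime(runtime_version: str) -> bool:
    """Heuristic: True if the version string names a Photon or ML runtime.

    Assumption: the string is a dash-separated key such as
    '11.3.x-photon-scala2.12'. Clusters where Photon is enabled through a
    separate setting rather than the version key would not be detected.
    """
    tokens = re.split(r"[-_.]", runtime_version.lower())
    return "photon" in tokens or "ml" in tokens


if __name__ == "__main__":
    for version in (
        "11.3.x-photon-scala2.12",
        "12.2.x-cpu-ml-scala2.12",
        "11.3.x-scala2.12",
    ):
        print(version, looks_like_supported_runtime(version))
```

Treat the result as a hint only; the warning Mosaic prints at initialization remains the authoritative signal.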

Check out the documentation pages.

For Python, install databricks-mosaic as a cluster library, or run the following from a Databricks notebook:

    %pip install databricks-mosaic

Then enable it with

    from mosaic import enable_mosaic
    enable_mosaic(spark, dbutils)

For Scala, get the JAR from the releases page and install it as a cluster library.

Then enable it with

    import com.databricks.labs.mosaic.functions.MosaicContext
    import com.databricks.labs.mosaic.H3
    import com.databricks.labs.mosaic.JTS

    val mosaicContext = MosaicContext.build(H3, JTS)
    import mosaicContext.functions._

For R, get the Scala JAR and the R bindings package from the releases page. Install the JAR as a cluster library, and copy sparkrMosaic.tar.gz to DBFS (this example uses the /FileStore location, but you can put it anywhere on DBFS).

    library(SparkR)
    install.packages('/FileStore/sparkrMosaic.tar.gz', repos=NULL)

Enable the R bindings

    library(sparkrMosaic)
    enableMosaic()

For SQL, configure the Automatic SQL Registration or follow the Scala installation process and register the Mosaic SQL functions in your SparkSession from a Scala notebook cell:

    %scala
    import com.databricks.labs.mosaic.functions.MosaicContext
    import com.databricks.labs.mosaic.H3
    import com.databricks.labs.mosaic.JTS

    val mosaicContext = MosaicContext.build(H3, JTS)
    mosaicContext.register(spark)

| Example | Description | Links |
|---|---|---|
| Quick Start | Example of performing spatial point-in-polygon joins on the NYC Taxi dataset | python, scala, R, SQL |
| Spatial KNN | Runnable notebook-based example using the Mosaic SpatialKNN model | python |
| Open Street Maps | Ingesting and processing the Open Street Maps dataset with Delta Live Tables to extract building polygons and calculate aggregation statistics over H3 indexes | python |
| STS Transfers | Detecting Ship-to-Ship transfers at scale by leveraging Mosaic to process AIS data | python, blog |

You can import these examples into a Databricks workspace using these instructions.
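The Quick Start example centers on point-in-polygon joins. As a conceptual illustration only, the sketch below implements a naive version in plain Python using the ray casting test: every point is compared with every polygon. It does not use Mosaic or Spark, and the names `point_in_polygon` and `count_points_per_zone` are hypothetical. At scale, a grid index such as H3 or BNG is what narrows the candidate pairs so that this all-pairs loop is avoided.

```python
from collections import Counter


def point_in_polygon(x, y, ring):
    """Ray casting test: True if (x, y) lies inside the polygon ring.

    `ring` is a list of (x, y) vertices; the closing edge back to the
    first vertex is implied. Points exactly on an edge are not handled
    specially in this sketch.
    """
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point count.
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_points_per_zone(points, zones):
    """Naive join: count how many points fall inside each named zone."""
    counts = Counter()
    for x, y in points:
        for name, ring in zones.items():
            if point_in_polygon(x, y, ring):
                counts[name] += 1
    return counts


if __name__ == "__main__":
    # Two adjacent square zones and a handful of made-up points.
    zones = {
        "a": [(0, 0), (4, 0), (4, 4), (0, 4)],
        "b": [(4, 0), (8, 0), (8, 4), (4, 4)],
    }
    points = [(1, 1), (2, 3), (5, 1), (9, 9)]
    print(dict(count_points_per_zone(points, zones)))
```

The cost of this loop grows with the number of points times the number of polygons, which is the reason indexed joins matter for very large datasets.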

Mosaic is intended to augment existing systems and unlock their potential by integrating Spark, Delta, and third-party frameworks into the Lakehouse architecture.

Image2: Mosaic ecosystem - Lakehouse integration.

Please note that all projects in the databrickslabs GitHub space are provided for your exploration only, and are not formally supported by Databricks with Service Level Agreements (SLAs). They are provided AS-IS and we do not make any guarantees of any kind. Please do not submit a support ticket relating to any issues arising from the use of these projects.

Any issues discovered through the use of this project should be filed as GitHub Issues on the Repo. They will be reviewed as time permits, but there are no formal SLAs for support.