MAP-NEO is a fully open-source large language model whose release includes the pretraining data, a data processing pipeline (Matrix), pretraining scripts, and alignment code. It is trained from scratch on 4.5T English and Chinese tokens and performs comparably to LLaMA2 7B. On challenging tasks such as reasoning, mathematics, and coding, MAP-NEO approaches the performance of proprietary models and outperforms its peers of similar size. To support research, we aim for full transparency in the LLM training process. To this end, we have released MAP-NEO comprehensively, including the final and intermediate checkpoints, a self-trained tokenizer, the pretraining corpus, and an efficient, stable, optimized pretraining codebase.

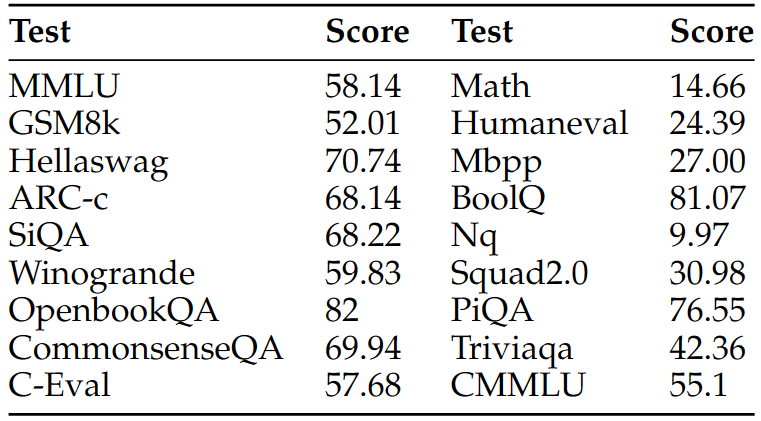

We evaluate MAP-NEO 7B on a range of benchmarks, as shown below.

We are pleased to announce the public release of MAP-NEO 7B, including the base models and a series of intermediate checkpoints. This release aims to support a broader and more diverse range of research in the academic and commercial communities. Please note that use of this model is subject to the terms outlined in the License section. Commercial usage is permitted under these terms.

| Model | Download |
|---|---|
| MAP-NEO 7B Base | 🤗 HuggingFace |
| MAP-NEO 7B intermediate | 🤗 HuggingFace |
| MAP-NEO 7B decay | 🤗 HuggingFace |
| MAP-NEO 2B Base | 🤗 HuggingFace |
| MAP-NEO scalinglaw 980M | 🤗 HuggingFace |
| MAP-NEO scalinglaw 460M | 🤗 HuggingFace |
| MAP-NEO scalinglaw 250M | 🤗 HuggingFace |
| MAP-NEO DATA Matrix | 🤗 HuggingFace |
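As a rough illustration of how one of the released checkpoints might be loaded, here is a minimal sketch. It assumes the checkpoints are stored in a format that the Hugging Face `transformers` Auto classes can read, which this README does not state explicitly. The helper names `load_checkpoint` and `generate` are hypothetical, and `repo_id` is a placeholder: take the real repository ID from the Download links above. Some checkpoints may need extra loading arguments not shown here.

```python
from typing import Optional


def load_checkpoint(repo_id: str, revision: Optional[str] = None):
    """Load a tokenizer and causal LM from a Hugging Face Hub repository.

    `repo_id` is supplied by the caller (assumption: it points at one of the
    repositories linked in the Download table). `revision` optionally selects
    a branch, tag, or commit of that repository.
    """
    # Imported lazily so this module can be imported without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(repo_id, revision=revision)
    model = AutoModelForCausalLM.from_pretrained(repo_id, revision=revision)
    return tokenizer, model


def generate(tokenizer, model, prompt: str, max_new_tokens: int = 32) -> str:
    """Tokenize a prompt, run generation, and decode the first returned sequence."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A call would look like `tokenizer, model = load_checkpoint("<org>/<repo>")` followed by `generate(tokenizer, model, "Hello")`, with `<org>/<repo>` replaced by the actual repository ID. Keeping loading and generation in separate functions lets the same `generate` helper be reused across the base, intermediate, and scaling-law checkpoints.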

This code repository is licensed under the MIT License.

For further communication, please scan the following WeChat and Discord QR codes:

| Discord | |
