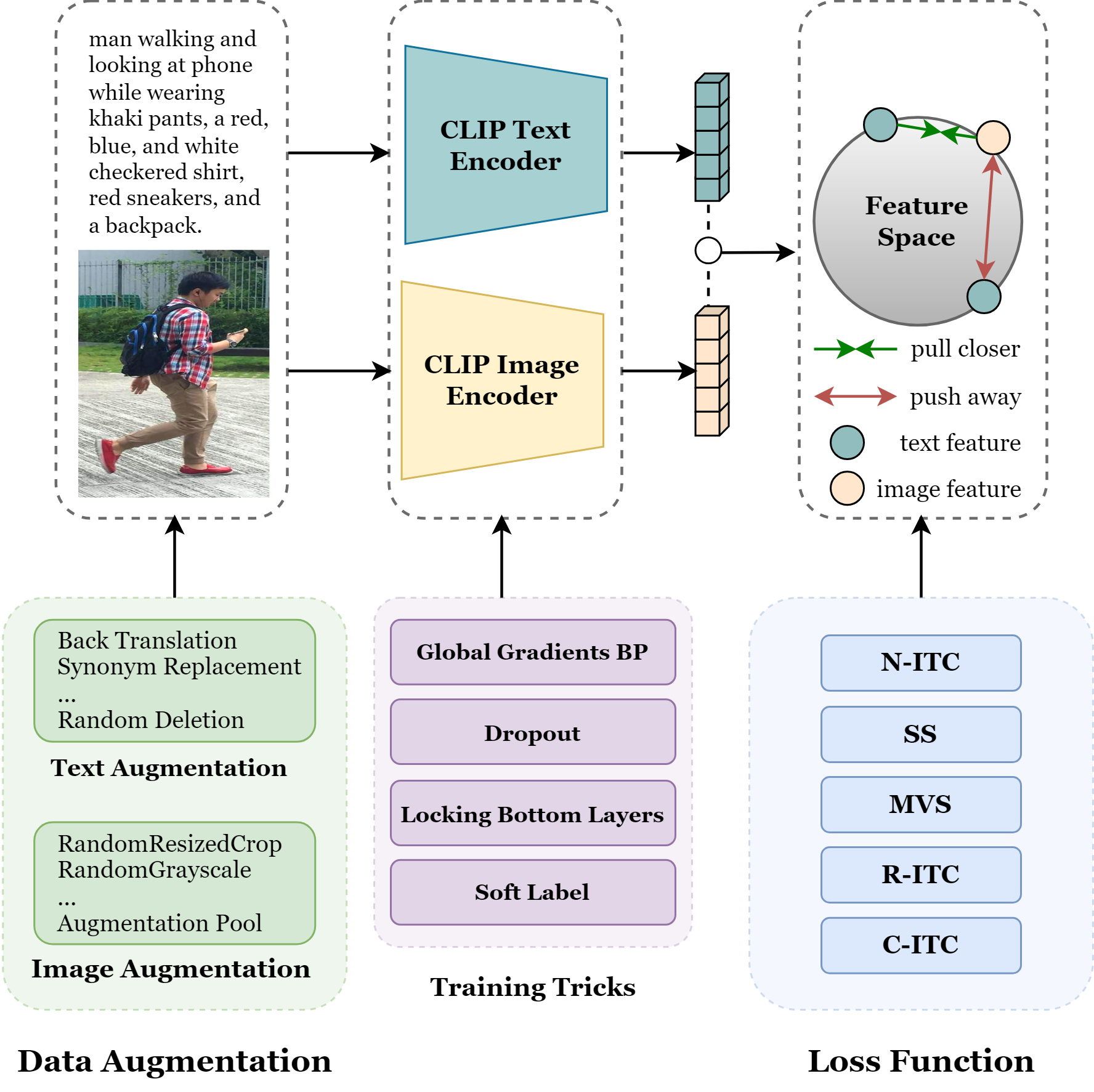

This repository contains the code for the paper *An Empirical Study of CLIP for Text-based Person Search*.

All experiments were conducted on 4 NVIDIA A40 (48 GB) GPUs with CUDA 11.7.

The required packages are listed in `requirements.txt`. You can install them using:

```
pip install -r requirements.txt
```

- Download the CUHK-PEDES dataset from here, the ICFG-PEDES dataset from here, and the RSTPReid dataset from here.

- Download the annotation JSON files from here.

- Download the pretrained CLIP checkpoint from here.
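If an import fails later, it can help to first confirm that everything in `requirements.txt` is actually installed. The sketch below is a hypothetical helper, not part of this repository, and uses only the standard library's `importlib.metadata`. It handles simple `name==version` style lines and skips comments and pip options such as `-r` or `-e`; environment markers and VCS URLs are not handled.

```python
import re
from importlib import metadata
from pathlib import Path

# Captures the distribution name at the start of lines like "torch==1.13.1" or "numpy>=1.21".
_REQ_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def parse_requirement_names(text: str) -> list[str]:
    """Return the distribution names listed in a requirements.txt body."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop inline and full-line comments
        if not line or line.startswith("-"):  # skip blanks and pip options (-r, -e, ...)
            continue
        match = _REQ_NAME.match(line)
        if match:
            names.append(match.group(1))
    return names


def missing_packages(names: list[str]) -> list[str]:
    """Return the names that importlib.metadata cannot find in this environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    req = Path("requirements.txt")
    if req.exists():
        names = parse_requirement_names(req.read_text(encoding="utf-8"))
        print("missing:", missing_packages(names) or "none")
```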

In `config/config.yaml` and `config/s.config.yaml`, set the paths to the annotation files, the images, and the CLIP checkpoint.
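Before launching a run, a quick check that the configured paths exist can save a failed job. The key names inside `config/config.yaml` and `config/s.config.yaml` are not documented here, so this hypothetical sketch does not assume any: it walks the parsed YAML and reports every string value containing `/` (URLs excluded) that does not exist on disk. This is a heuristic, so a non-path value written with a slash (for example a model name) would also be reported. Loading the files assumes PyYAML is installed.

```python
import os
from typing import Any, Iterator


def iter_path_like(node: Any, prefix: str = "") -> Iterator[tuple[str, str]]:
    """Yield (dotted_key, value) for every string value that looks like a filesystem path."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from iter_path_like(value, f"{prefix}.{key}" if prefix else str(key))
    elif isinstance(node, list):
        for i, value in enumerate(node):
            yield from iter_path_like(value, f"{prefix}[{i}]")
    elif isinstance(node, str) and "/" in node and "://" not in node:
        yield prefix, node


def missing_paths(config: Any) -> list[tuple[str, str]]:
    """Return the path-like entries of a parsed config that do not exist on disk."""
    return [
        (key, value)
        for key, value in iter_path_like(config)
        if not os.path.exists(os.path.expanduser(value))
    ]


def load_yaml(path: str) -> Any:
    """Parse a YAML file; PyYAML is imported lazily so the helpers above need only the stdlib."""
    import yaml  # assumption: PyYAML is installed

    with open(path, encoding="utf-8") as f:
        return yaml.safe_load(f)


if __name__ == "__main__":
    for name in ("config/config.yaml", "config/s.config.yaml"):
        if not os.path.exists(name):
            continue
        for key, value in missing_paths(load_yaml(name) or {}):
            print(f"{name}: {key} -> {value} (not found)")
```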

You can start training with PyTorch's `torchrun`:

```
CUDA_VISIBLE_DEVICES=0,1,2,3 \
torchrun --rdzv_id=3 --rdzv_backend=c10d --rdzv_endpoint=localhost:0 --nnodes=1 --nproc_per_node=4 \
main.py
```

You can run the simplified TBPS-CLIP using:

```
CUDA_VISIBLE_DEVICES=0,1,2,3 \
torchrun --rdzv_id=3 --rdzv_backend=c10d --rdzv_endpoint=localhost:0 --nnodes=1 --nproc_per_node=4 \
main.py --simplified
```

This code is distributed under the MIT License.
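The two training commands differ only in the trailing `--simplified` flag. The hypothetical helper below, not part of this repository, assembles the same `torchrun` invocation from a list of GPU ids and keeps `--nproc_per_node` equal to the number of GPUs exposed through `CUDA_VISIBLE_DEVICES`, i.e. one worker process per GPU. It reuses only the flags shown in the commands; note that the reported experiments used four GPUs, so a different GPU count may not reproduce them exactly.

```python
import os
import subprocess
from typing import Sequence


def build_launch(
    gpu_ids: Sequence[int], simplified: bool = False, rdzv_id: int = 3
) -> tuple[dict[str, str], list[str]]:
    """Return (env, argv) equivalent to the torchrun commands in this README."""
    if not gpu_ids:
        raise ValueError("need at least one GPU id")
    # Copy the current environment and restrict the visible GPUs.
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=",".join(map(str, gpu_ids)))
    argv = [
        "torchrun",
        f"--rdzv_id={rdzv_id}",
        "--rdzv_backend=c10d",
        "--rdzv_endpoint=localhost:0",
        "--nnodes=1",
        f"--nproc_per_node={len(gpu_ids)}",  # one worker process per visible GPU
        "main.py",
    ]
    if simplified:
        argv.append("--simplified")
    return env, argv


def launch(gpu_ids: Sequence[int], simplified: bool = False) -> None:
    """Run the assembled command and raise CalledProcessError on a non-zero exit."""
    env, argv = build_launch(gpu_ids, simplified)
    subprocess.run(argv, env=env, check=True)


if __name__ == "__main__":
    env, argv = build_launch([0, 1, 2, 3])
    print(f"CUDA_VISIBLE_DEVICES={env['CUDA_VISIBLE_DEVICES']}", " ".join(argv))
```

For example, `build_launch([0, 1], simplified=True)` yields a two-process launch of `main.py --simplified` restricted to GPUs 0 and 1.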