This project walks you through the following scenario:

- Start with 3 ScyllaDB nodes

- The number of requests spikes

- Add 3 more ScyllaDB nodes to handle the extra requests

- Request volume goes down

- Remove 3 nodes


Clone the repository

`git clone https://github.com/zseta/tablets-scaling.git`

`cd tablets-scaling`

Create EC2 instances in AWS (takes 4+ minutes)

`terraform init`

`terraform plan`

`terraform apply`


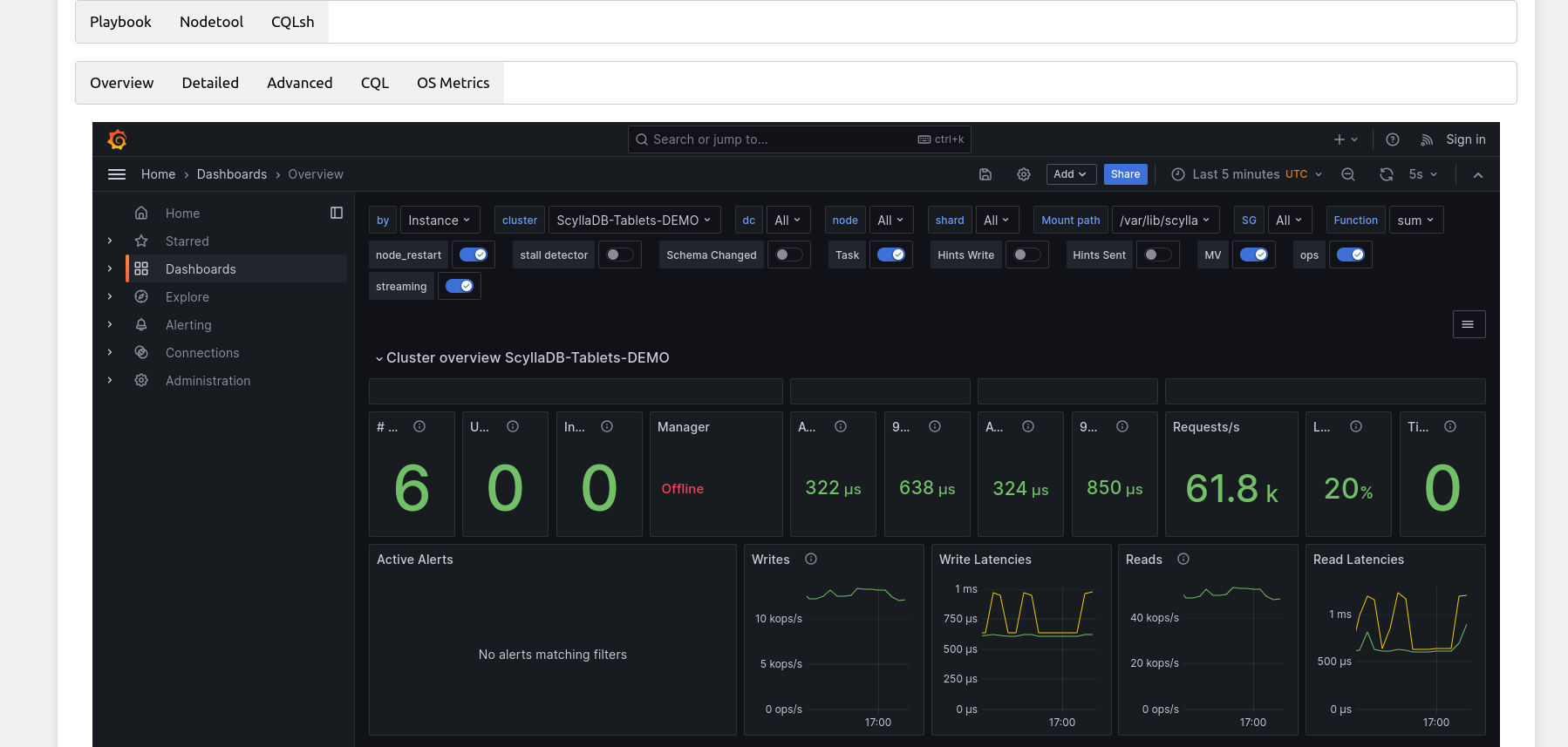

Open ScyllaDB Monitoring

One of the Terraform outputs is the link to Monitoring, similar to this:

`Outputs: monitoring_url = "http://<IP-ADDRESS>:3000" [...]`

There should be 1 active node and 5 unreachable nodes on the dashboard.
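If you want the link in a script rather than copying it from the terminal, Terraform can print a single output value with `terraform output -raw monitoring_url` (Terraform 0.14 or later). When you only have the saved text of the outputs, a small `sed` filter also works. `extract_monitoring_url` below is a hypothetical helper, not part of the repository, and it assumes the output line has the same form as the sample above.

```shell
# Hypothetical helper (not part of the repository): read `terraform output`
# text on stdin and print only the value of the monitoring_url output.
# Assumes the line has the form: monitoring_url = "http://<IP-ADDRESS>:3000"
extract_monitoring_url() {
  sed -n 's/^[[:space:]]*monitoring_url[[:space:]]*=[[:space:]]*"\(.*\)"[[:space:]]*$/\1/p'
}
```

For example, `terraform output | extract_monitoring_url`, run from the folder where you ran `terraform apply`, prints just the URL.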


Change into the `ansible` folder to run the playbooks

`cd ansible`

Start a 3-node cluster

`ansible-playbook 1_original_cluster.yml`


Create schema and restore snapshot (takes 5+ minutes)

`ansible-playbook 2_restore_snapshot.yml`

Start cql-stress

`ansible-playbook 3_stress.yml`

Scale out (start 3 more nodes)

`ansible-playbook 4_scale_out.yml`
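Besides watching the Monitoring dashboard, you can confirm the scale-out with `nodetool status` on any ScyllaDB node: once the new nodes have joined, all 6 should be listed with the state `UN` (Up/Normal). The helpers below are a hypothetical sketch, not part of the repository, that count those rows; how you open a shell on a node (for example over SSH) depends on the Terraform setup and is assumed here.

```shell
# Hypothetical helper: count rows whose first column is "UN" (Up/Normal)
# in `nodetool status` output read from stdin.
count_up_normal() {
  awk '$1 == "UN" { n++ } END { print n + 0 }'
}

# Hypothetical helper: succeed only if exactly the expected number of
# nodes is Up/Normal; otherwise report the mismatch and return non-zero.
expect_up_normal() {
  local expected="$1" actual
  actual=$(count_up_normal)
  if [ "$actual" -eq "$expected" ]; then
    echo "OK: $actual nodes are UN"
  else
    echo "Expected $expected UN nodes, found $actual" >&2
    return 1
  fi
}
```

For example, piping the output of `nodetool status` from one node into `expect_up_normal 6` fails if any of the 6 nodes is not yet Up/Normal.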

Stop cql-stress

`ansible-playbook 5_stop_stress.yml`

Scale in (stop 3 nodes)

`ansible-playbook 6_scale_in.yml`

Stop the demo and remove the AWS infrastructure

`cd ..`

`terraform destroy`
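The steps above can be chained into one script. This is a minimal sketch, not part of the repository, assuming it is run from the root of the cloned repository (the folder that contains `ansible`). By default it only prints each command (`DRY_RUN=1`, a hypothetical variable introduced here); with `DRY_RUN=0` it executes them and waits for Enter between phases so you can watch the Monitoring dashboards.

```shell
#!/usr/bin/env bash
# Sketch: run the whole demo from the root of the cloned repository.
# Assumption: DRY_RUN=1 (the default here) only prints each command;
# run with DRY_RUN=0 to actually execute them against AWS.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"

# Print or execute a command, depending on DRY_RUN.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run a playbook from inside the ansible folder, as the steps above do.
playbook() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ (cd ansible && ansible-playbook $1)"
  else
    (cd ansible && ansible-playbook "$1")
  fi
}

# Wait for Enter between phases (skipped in dry-run mode).
pause() {
  if [ "$DRY_RUN" != "1" ]; then
    read -r -p "$1 Press Enter to continue... "
  fi
}

run_demo() {
  run terraform init
  run terraform plan
  run terraform apply
  playbook 1_original_cluster.yml
  playbook 2_restore_snapshot.yml
  playbook 3_stress.yml
  pause "Load is running on 3 nodes."
  playbook 4_scale_out.yml
  pause "Cluster scaled out to 6 nodes."
  playbook 5_stop_stress.yml
  playbook 6_scale_in.yml
  pause "Cluster scaled back in."
  run terraform destroy
}

run_demo
```

Running the playbooks in subshells keeps the script in the repository root, so no `cd ..` is needed before `terraform destroy`. Both `terraform apply` and `terraform destroy` still ask for interactive confirmation.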

You can also run the demo with a user interface. To access the UI, run Terraform as usual, then open `index.html` (the link to this file is also one of the Terraform outputs).