This is the PyTorch and Lightning implementation of our VLDB 2024 paper, A Multi-Scale Decomposition MLP-Mixer for Time Series Analysis (https://arxiv.org/abs/2310.11959).

If you find this repo useful, please consider citing our paper:

@article{10.14778/3654621.3654637,

author = {Zhong, Shuhan and Song, Sizhe and Zhuo, Weipeng and Li, Guanyao and Liu, Yang and Chan, S.-H. Gary},

title = {A Multi-Scale Decomposition MLP-Mixer for Time Series Analysis},

year = {2024},

issue_date = {March 2024},

publisher = {VLDB Endowment},

volume = {17},

number = {7},

issn = {2150-8097},

doi = {10.14778/3654621.3654637},

journal = {Proc. VLDB Endow.},

month = {may},

pages = {1723–1736},

numpages = {14}

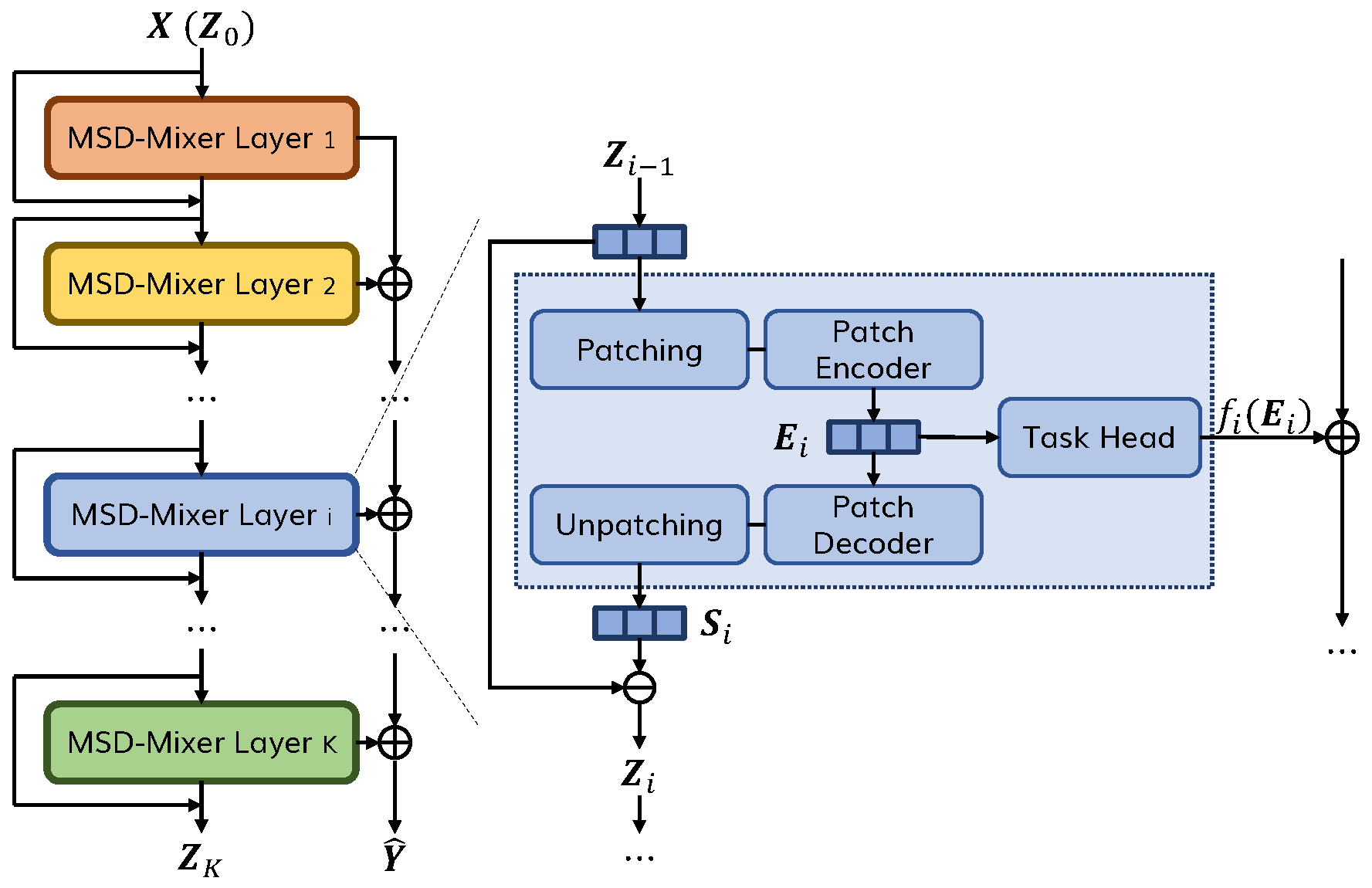

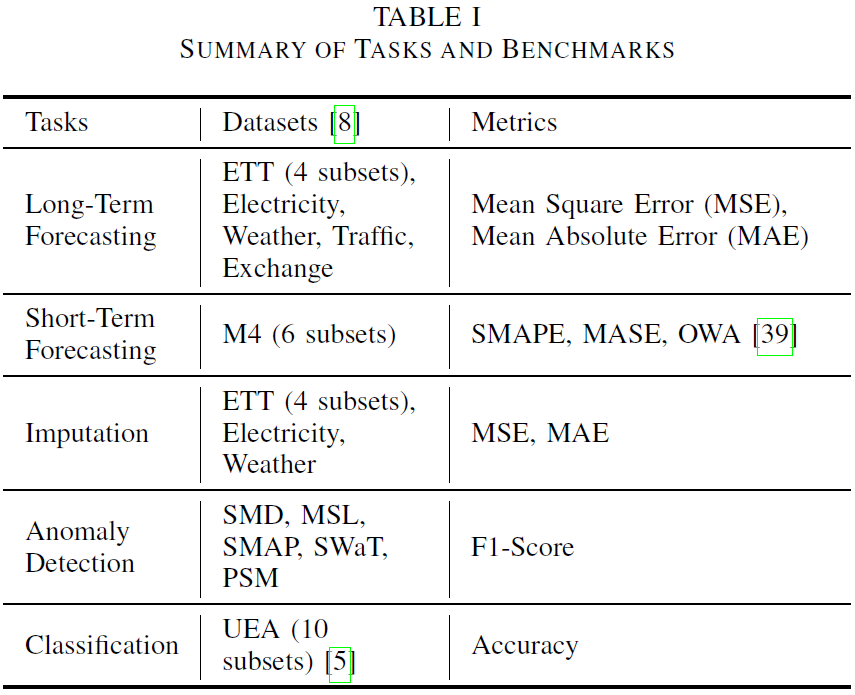

}Time series data, including univariate and multivariate ones, are characterized by unique composition and complex multi-scale temporal variations. They often require special consideration of decomposition and multi-scale modeling to analyze. Existing deep learning methods on this best fit to univariate time series only, and have not sufficiently considered sub-series level modeling and decomposition completeness. To address these challenges, we propose MSD-Mixer, a Multi-Scale Decomposition MLP-Mixer, which learns to explicitly decompose the input time series into different components, and represent the components in different layers. To handle the multi-scale temporal patterns and multivariate dependencies, we propose a novel temporal patching approach to model the time series as multi-scale sub-series (i.e., patches), and employ MLPs to capture intra- and inter-patch variations and channel-wise correlations. In addition, we propose a novel loss function to constrain both the magnitude and autocorrelation of the decomposition residual for better decomposition completeness. Through extensive experiments on various real-world datasets for five common time series analysis tasks, we demonstrate that MSD-Mixer consistently and significantly outperforms other state-of-the-art algorithms.
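To make two ideas from the abstract concrete, here is a minimal pure-Python sketch. It is not the authors' implementation: `patchify` and `autocorrelation` are hypothetical helper names, and the left zero-padding and the plain lag-k sample autocorrelation are assumptions chosen only for illustration.

```python
from typing import List


def patchify(series: List[float], patch_size: int) -> List[List[float]]:
    """Split a 1-D series into non-overlapping patches of length patch_size.

    Assumption: the series is left-padded with zeros when its length is not
    a multiple of patch_size. The paper may use a different padding scheme.
    """
    if patch_size <= 0:
        raise ValueError("patch_size must be positive")
    pad = (-len(series)) % patch_size
    padded = [0.0] * pad + list(series)
    return [padded[i:i + patch_size] for i in range(0, len(padded), patch_size)]


def autocorrelation(residual: List[float], lag: int) -> float:
    """Sample autocorrelation of a residual at the given lag.

    Returns 0.0 for a constant residual, whose variance is zero.
    """
    n = len(residual)
    if not 0 <= lag < n:
        raise ValueError("lag must satisfy 0 <= lag < len(residual)")
    mean = sum(residual) / n
    centered = [r - mean for r in residual]
    var = sum(c * c for c in centered)
    if var == 0.0:
        return 0.0
    cov = sum(centered[t] * centered[t + lag] for t in range(n - lag))
    return cov / var


# Toy series with period 4, viewed at two patch sizes (two "scales").
series = [float(t % 4) for t in range(10)]
for size in (2, 4):
    print(size, patchify(series, size))

# A periodic series keeps a clearly nonzero autocorrelation at its period.
print(autocorrelation(series, 4))
```

A residual that behaves like white noise has autocorrelation near zero at every nonzero lag, which is the intuition behind constraining the autocorrelation of the residual in addition to its magnitude.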

- Create a conda virtual environment

conda create -n msd-mixer python=3.10

conda activate msd-mixer

- Install Python Packages

pip install -r requirements.txt
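After installation, a quick sanity check can confirm that the environment looks as expected. The sketch below is only illustrative: `check_packages` is a hypothetical helper, and the module names `torch` and `lightning` are assumptions based on the description of this repo as a PyTorch and Lightning implementation; `requirements.txt` remains the authoritative list.

```python
import importlib.util
import sys
from typing import Dict, Iterable


def check_packages(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether it can be found for import."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


# Assumed module names; adjust to match requirements.txt.
ASSUMED_MODULES = ["torch", "lightning"]

# The conda command above pins Python 3.10.
version_ok = sys.version_info[:2] == (3, 10)
print(f"Python {sys.version_info.major}.{sys.version_info.minor}",
      "(matches 3.10)" if version_ok else "(differs from 3.10)")

for module, found in check_packages(ASSUMED_MODULES).items():
    print(f"{module}: {'found' if found else 'missing'}")
```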

Please download the datasets from dataset.zip, unzip the content into the dataset folder and structure the directory as follows:

/path/to/MSD-Mixer/dataset/

electricity/

ETT-small/

exchange_rate/

m4/

MSL/

Multivariate_ts/

PSM/

SMAP/

SMD/

SWaT/

traffic/

weather/
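Before launching experiments, it can help to verify that the layout above is in place. This is a small sketch, assuming it is run from the repository root so that `dataset` resolves to the folder shown above; `missing_dataset_dirs` is a hypothetical helper and not part of this repo.

```python
from pathlib import Path
from typing import List, Sequence

# Folder names copied from the directory listing above.
EXPECTED = [
    "electricity", "ETT-small", "exchange_rate", "m4", "MSL",
    "Multivariate_ts", "PSM", "SMAP", "SMD", "SWaT", "traffic", "weather",
]


def missing_dataset_dirs(root: Path, expected: Sequence[str] = EXPECTED) -> List[str]:
    """Return the expected sub-folder names that do not exist under root."""
    return [name for name in expected if not (root / name).is_dir()]


# Assumption: the current working directory is the repository root.
missing = missing_dataset_dirs(Path("dataset"))
if missing:
    print("Missing dataset folders:", ", ".join(missing))
else:
    print("All expected dataset folders are present.")
```

Folder names are compared exactly as listed, so on a case-sensitive file system `swat/` would not count as `SWaT/`.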

Please use python main.py to run the experiments. Please use the -h or --help argument for details.

Example training commands for long-term forecasting (ltf):

- Run all benchmarks

python main.py ltf # equivalent to: python main.py ltf --dataset all --pred_len all

- Run specific benchmarks

python main.py ltf --dataset etth1 etth2 --pred_len 96 192 336

Logs, results, and model checkpoints will be saved in /path/to/MSD-Mixer/logs/ltf

Example training commands for short-term forecasting (stf):

- Run all benchmarks

python main.py stf # equivalent to: python main.py stf --dataset all

- Run specific benchmarks

python main.py stf --dataset yearly quarterly monthly

Logs, results, and model checkpoints will be saved in /path/to/MSD-Mixer/logs/stf

Example training commands for imputation (imp):

- Run all benchmarks

python main.py imp # equivalent to: python main.py imp --dataset all --mask_rate all

- Run specific benchmarks

python main.py imp --dataset ecl ettm1 --mask_rate 0.25 0.5

Logs, results, and model checkpoints will be saved in /path/to/MSD-Mixer/logs/imp

Example training commands for anomaly detection (ad):

- Run all benchmarks

python main.py ad # equivalent to: python main.py ad --dataset all

- Run specific benchmarks

python main.py ad --dataset smd msl swat

Logs, results, and model checkpoints will be saved in /path/to/MSD-Mixer/logs/ad

Example training commands for classification (cls):

- Run all benchmarks

python main.py cls # equivalent to: python main.py cls --dataset all

- Run specific benchmarks

python main.py cls --dataset awr scp1 scp2

Logs, results, and model checkpoints will be saved in /path/to/MSD-Mixer/logs/cls
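All of the commands above share one shape: a task name followed by optional flags that each take one or more values. For scripted sweeps, a small wrapper like the following may be convenient. It is a sketch only: `build_command` is a hypothetical helper, it uses only the task names and flags shown in the examples above, and it does not validate dataset names or values.

```python
import shlex
from typing import List, Sequence

# Task names taken from the example commands above.
TASKS = ("ltf", "stf", "imp", "ad", "cls")


def build_command(task: str, **options: Sequence[object]) -> List[str]:
    """Build the argument list for `python main.py <task> --flag v1 v2 ...`."""
    if task not in TASKS:
        raise ValueError(f"unknown task {task!r}; expected one of {TASKS}")
    argv = ["python", "main.py", task]
    for flag, values in options.items():
        argv.append(f"--{flag}")
        argv.extend(str(value) for value in values)
    return argv


cmd = build_command("ltf", dataset=["etth1", "etth2"], pred_len=[96, 192, 336])
print(shlex.join(cmd))
# prints: python main.py ltf --dataset etth1 etth2 --pred_len 96 192 336
```

To launch a built command, pass the list to `subprocess.run(cmd, check=True)` from the repository root.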

- For task-general baselines, including TimesNet, PatchTST, DLinear, LightTS, ETSformer, FEDformer, and NST, as well as N-BEATS and N-HiTS for short-term forecasting, and Anomaly Transformer for anomaly detection, we follow and reuse the unified implementations from Time Series Library (TSlib).

- For Scaleformer for long-term forecasting, we follow the official implementation from the Scaleformer paper.

- For TARNet, DTWD, TapNet, MiniRocket, and TST for classification, we follow the implementations from the TARNet paper. For FormerTime for classification, we follow the implementation from the FormerTime paper.

- Time Series Library (TSlib): datasets, experiment settings, and data processing

- UEA Time Series Classification datasets: datasets