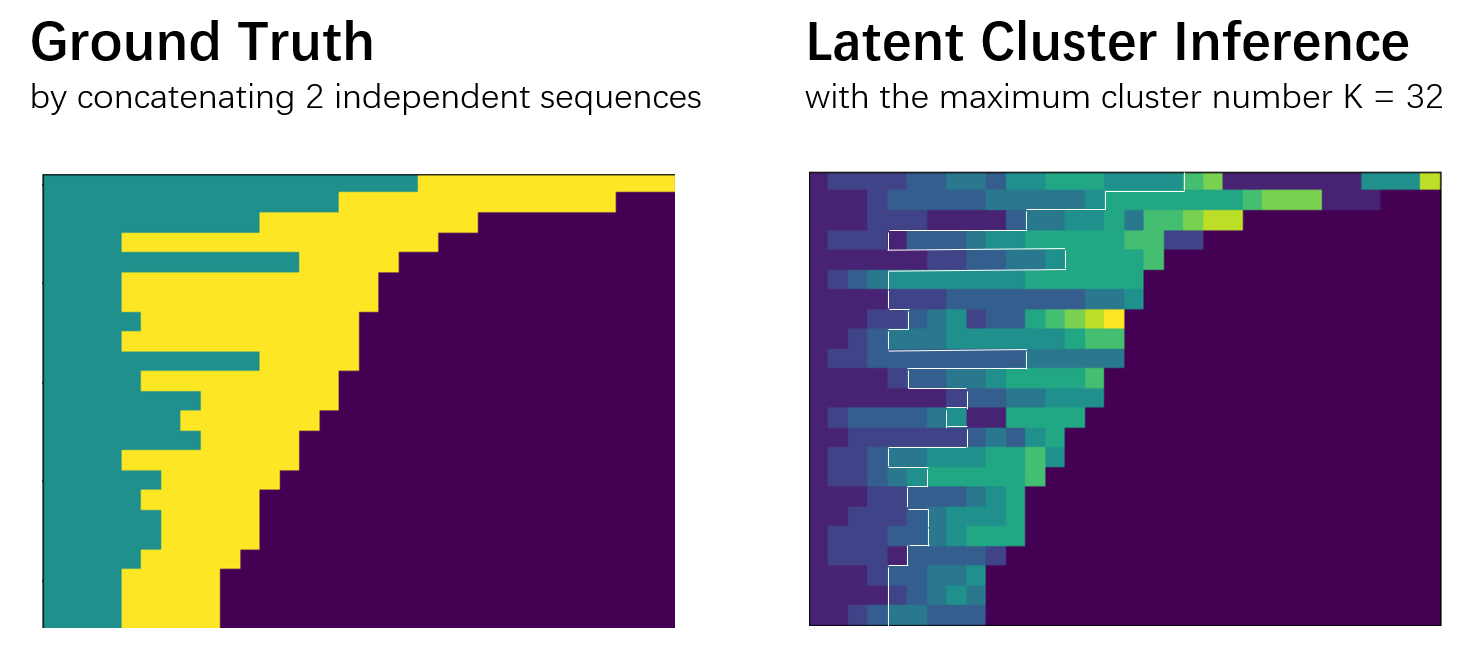

We propose a fully differentiable, VAE-based deep clustering framework for sequential data, using the (neural) temporal point process (TPP) as the mathematical tool that models the probability of event sequences. Within this framework, an event sequence is decomposed without supervision into different highly correlated subsequences (i.e., clusters). The encoder (inference, or posterior), the regularization (prior), the decoder (reconstruction, or likelihood), and the learning objective (the ELBO in the VAE context) can be abstracted as follows:

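$$q(Z \mid X) = \mathrm{Seq2Seq}(X)$$

$$p(Z) = \mathrm{Uniform}(K)$$

$$p(X \mid Z) = \prod_{k=1}^{K} \mathrm{TPP}(X_k)$$

$$\mathrm{ELBO} = \mathbb{E}_{Z \sim q(Z \mid X)}\left[\log p(X \mid Z)\right] - \mathrm{KL}\left[q(Z \mid X) \,\|\, p(Z)\right]$$

where $X$ is the input sequence, $Z$ is the categorical 1-of-$K$ decomposition plan, and $X_k$ is the $k$-th subsequence.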
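To make the objective concrete, here is a minimal NumPy sketch of the ELBO under simplifying assumptions that are ours, not the repo's: each event is assigned independently to one of $K$ clusters by a factorized categorical $q(Z \mid X)$ (an `(N, K)` matrix of probabilities), and a homogeneous Poisson process with rate $\lambda_k$ on $[0, T]$ stands in for each neural TPP. The reconstruction term is estimated by sampling hard plans, which is not differentiable; a fully differentiable version would need a relaxation such as Gumbel-softmax. All function names here are hypothetical.

```python
import numpy as np


def decompose(times, z, K):
    """Split event times into K subsequences X_1..X_K using hard labels z (a 1-of-K plan)."""
    return [times[z == k] for k in range(K)]


def poisson_loglik(sub, rate, T):
    """Log-likelihood of a homogeneous Poisson process on [0, T].

    Stand-in (assumption) for a neural TPP: n * log(rate) - rate * T.
    """
    return len(sub) * np.log(rate) - rate * T


def kl_to_uniform(q):
    """KL[q(Z|X) || Uniform(K)] for a factorized categorical q of shape (N, K)."""
    K = q.shape[1]
    # sum_ik q_ik * log(q_ik * K), treating 0 * log 0 as 0
    log_term = np.log(q * K, out=np.zeros_like(q), where=q > 0)
    return float(np.sum(q * log_term))


def elbo_mc(times, q, rates, T, n_samples=200, seed=0):
    """Monte Carlo ELBO: sample hard plans Z ~ q, score each subsequence, subtract KL."""
    rng = np.random.default_rng(seed)
    N, K = q.shape
    recon = 0.0
    for _ in range(n_samples):
        # Sample each event's cluster independently from its row of q
        z = np.array([rng.choice(K, p=q[i]) for i in range(N)])
        subs = decompose(times, z, K)
        # log p(X|Z) = sum_k log TPP(X_k)
        recon += sum(poisson_loglik(subs[k], rates[k], T) for k in range(K))
    return recon / n_samples - kl_to_uniform(q)


def elbo_exact_poisson(q, rates, T):
    """Closed-form ELBO for the Poisson stand-in (its likelihood factorizes over events)."""
    recon = float(np.sum(q @ np.log(rates)) - T * np.sum(rates))
    return recon - kl_to_uniform(q)
```

Because the Poisson log-likelihood factorizes over events, `elbo_exact_poisson` gives the expectation in closed form and the Monte Carlo estimate converges to it, which is a handy check on the sampler. With a history-dependent TPP (e.g. a Hawkes or Transformer-based intensity) no such shortcut exists and the sampled (or relaxed) estimate is needed.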

This repo implements the following modules and methods:

- neural event embedding (models/eemb.py)

- neural temporal point process (models/tpp.py)

- cluster aware self attention & transformer (models/ctfm.py)

- Auto-Encoding Variational Bayes (ICLR 2014)

- Neural Relational Inference for Interacting Systems (ICML 2018)

- Transformer Hawkes Process (ICML 2020)

- c-NTPP: Learning Cluster-Aware Neural Temporal Point Process (AAAI 2023)
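This README does not describe how `models/ctfm.py` realizes cluster-aware self-attention. As a hypothetical illustration only (our assumption, not the repo's code), one differentiable way to make a causal attention layer cluster-aware is to add $\log \sum_k q_{ik} q_{jk}$, the log-probability that events $i$ and $j$ share a cluster, to each attention logit, so that each event mostly attends to the history of its own subsequence. The function names, the single-head setup, and the `eps` smoothing are all hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax; -inf entries get zero weight (each row needs one finite entry)."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def cluster_aware_attention(H, q, Wq, Wk, Wv, eps=1e-9):
    """Single-head causal self-attention biased toward events in the same cluster.

    H: (N, d) embeddings of N time-ordered events.
    q: (N, K) soft cluster assignments (rows sum to 1).
    Wq, Wk, Wv: (d, d_k) projection matrices.
    """
    N = H.shape[0]
    Q, Km, V = H @ Wq, H @ Wk, H @ Wv
    logits = Q @ Km.T / np.sqrt(Km.shape[1])
    # Probability that events i and j share a cluster: sum_k q_ik * q_jk
    same = q @ q.T
    # Adding log(same + eps) scales each unnormalized weight by (same + eps)
    logits = logits + np.log(same + eps)
    # Causal mask: event i only attends to events j <= i
    causal = np.tril(np.ones((N, N), dtype=bool))
    logits = np.where(causal, logits, -np.inf)
    A = softmax(logits, axis=-1)
    return A @ V, A
```

With hard (one-hot) assignments this nearly reduces to masking out cross-cluster pairs, so each event sees only its own subsequence's history; with soft assignments the bias stays differentiable in `q`, which fits a fully differentiable training objective.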

Please refer to the Google Drive link for the real-world event sequence datasets.