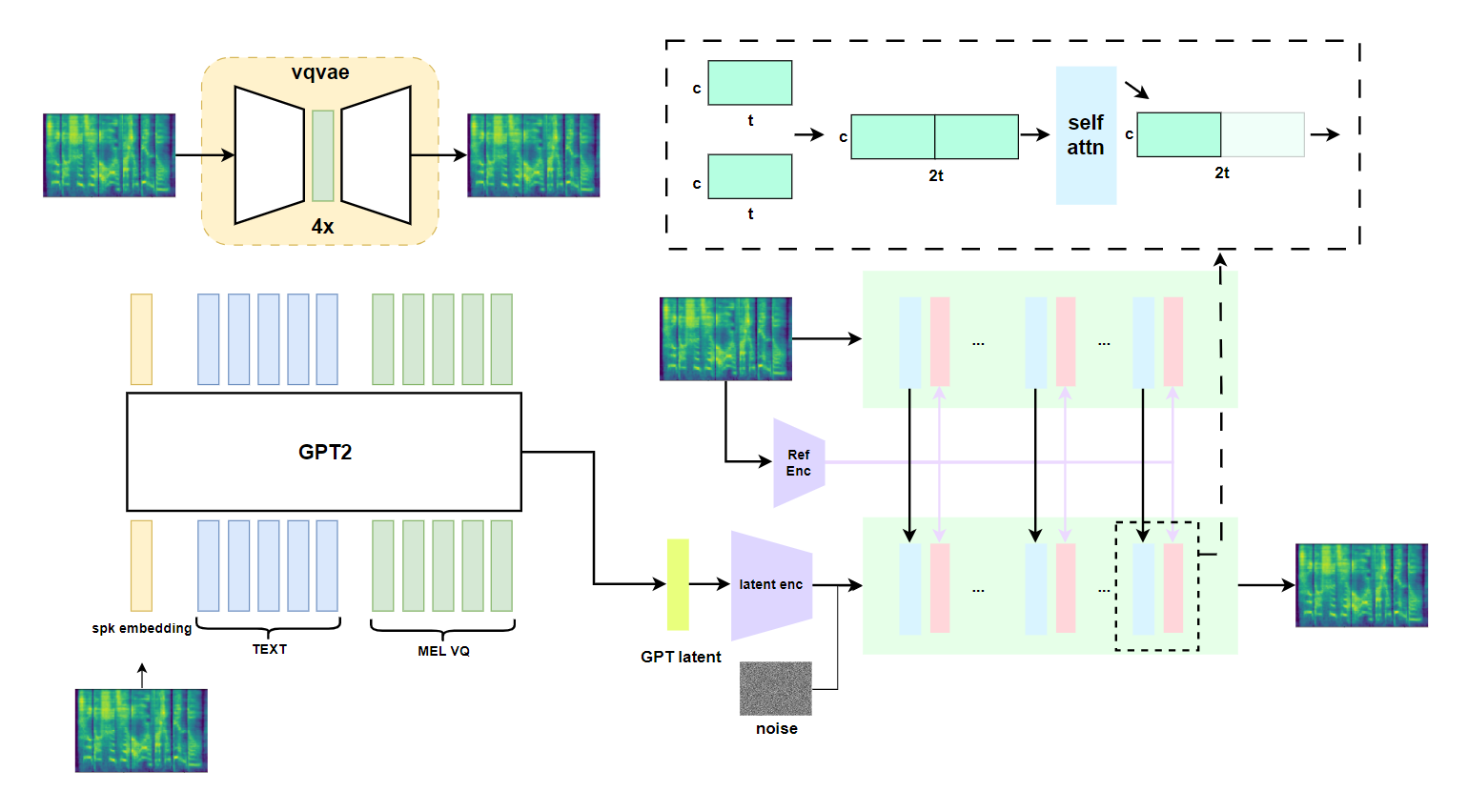

This project is for training a tortoise-tts-like model. Most of the code comes from tortoise-tts and xtts. The differences lie in certain training details and in the diffusion model. This repository uses the same architecture as animate-anyone, incorporating a ReferenceNet for better zero-shot performance.

Currently only Mandarin is supported. A pretrained model can be found here, and you can use Colab to generate speech with it.

| refer | input | output |
|---|---|---|
| refer.webm | 四是四,十是十,十四是十四,四十是四十。 | out1.webm |
| refer.webm | 八百标兵奔北坡,炮兵并排北边跑。炮兵怕把标兵碰,标兵怕碰炮兵炮。 | out2.webm |
| refer.webm | 黑化肥发灰,灰化肥发黑。黑化肥挥发会发灰,灰化肥挥发会发黑。 | out3.webm |

pip install -e .

Training the model involves several steps.

Use ttts/prepare/bpe_all_text_to_one_file.py to merge all the text you have collected into one file. To train the tokenizer, see ttts/gpt/voice_tokenizer for more information.
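As a rough illustration of the merge step, here is a minimal sketch that concatenates several UTF-8 text files into one file with one non-empty line per row. The function name `merge_text_files` and the choice to drop blank lines are assumptions made for this example; the repository's own bpe_all_text_to_one_file.py may behave differently.

```python
from pathlib import Path
from typing import Iterable


def merge_text_files(paths: Iterable[Path], out_path: Path) -> int:
    """Concatenate non-empty lines from several UTF-8 text files.

    Hypothetical helper for illustration only; not the repository's script.
    Returns the number of lines written to out_path.
    """
    count = 0
    with out_path.open("w", encoding="utf-8") as out:
        for path in paths:
            with path.open("r", encoding="utf-8") as f:
                for line in f:
                    line = line.strip()
                    if line:  # skip blank lines
                        out.write(line + "\n")
                        count += 1
    return count
```

A call such as `merge_text_files(sorted(Path("texts").glob("*.txt")), Path("all_text.txt"))` would produce a single corpus file; both paths here are placeholders.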

Use vad_asr_save_to_jsonl.py and save_mel_to_disk.py to preprocess the dataset.
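The script name suggests that the preprocessing output is a JSONL file, that is, one JSON object per line. The sketch below shows how such a file can be written and read back. The field names `path` and `text` are assumptions made for this example and may not match what vad_asr_save_to_jsonl.py actually emits.

```python
import json
from pathlib import Path
from typing import Dict, Iterable, List


def write_jsonl(records: Iterable[Dict[str, str]], out_path: Path) -> None:
    """Write one JSON object per line, keeping Chinese text readable."""
    with out_path.open("w", encoding="utf-8") as f:
        for rec in records:
            # ensure_ascii=False keeps Mandarin characters unescaped
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")


def read_jsonl(path: Path) -> List[Dict[str, str]]:
    """Read a JSONL file back into a list of dicts, skipping blank lines."""
    with path.open("r", encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

Keeping one record per line makes it easy to stream or shard a large dataset without loading the whole file at once.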

Use the following command to train the VQ-VAE.

accelerate launch ttts/vqvae/train.py

Use save_mel_vq_to_disk.py to preprocess the mel VQ data. Then run

accelerate launch ttts/gpt/train.py

to train the GPT model.

You can choose any one of the following vocoders. A vocoder is required because the output reconstructed by the VQ-VAE has low sound quality.

WIP

I chose the pretrained vocos as the vocoder for this project for no particular reason. You can swap in any other vocoder, such as univnet.

After changing config.json appropriately, run

accelerate launch ttts/diffusion/train.py
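The three training commands above run in a fixed order: VQ-VAE, then GPT, then diffusion. The sketch below collects them in a small driver script. The helper names `build_commands` and `run_all` are hypothetical and not part of the repository, and the preprocessing steps between stages (save_mel_vq_to_disk.py before GPT training, editing config.json before diffusion training) still have to be done by hand.

```python
import subprocess
from typing import List, Sequence

# Training entry points, in the order this README runs them.
TRAIN_SCRIPTS = (
    "ttts/vqvae/train.py",      # 1. VQ-VAE
    "ttts/gpt/train.py",        # 2. GPT (after save_mel_vq_to_disk.py)
    "ttts/diffusion/train.py",  # 3. diffusion (after editing config.json)
)


def build_commands(scripts: Sequence[str] = TRAIN_SCRIPTS) -> List[List[str]]:
    """Return one `accelerate launch <script>` command per training stage."""
    return [["accelerate", "launch", script] for script in scripts]


def run_all(dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in build_commands():
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the pipeline if a stage fails
            subprocess.run(cmd, check=True)
```

Running `run_all()` with the default `dry_run=True` only prints the commands, which is a cheap way to confirm the stage order before starting a long training run.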