Zongsheng Yue, Hongwei Yong, Qian Zhao, Lei Zhang, Deyu Meng, Kwan-Yee K. Wong

Note that this work is an extended version of our earlier work VDN (paper, code), which was published at NeurIPS 2019. In this extended version, we further improve the method in both model construction and algorithm design, and make it capable of handling blind image super-resolution.

⭐ If our work is helpful to your research, please help star this repo. Thanks! 🤗

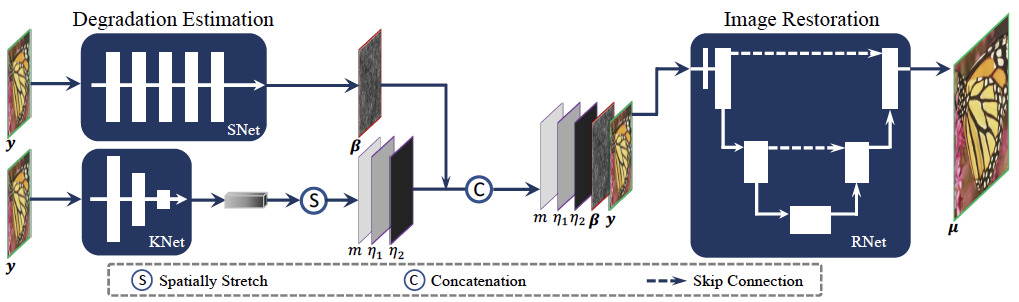

Blind image restoration (IR) is a common yet challenging problem in computer vision. Classical model-based methods and recent deep learning (DL)-based methods represent two different methodologies for this problem, each with its own merits and drawbacks. In this paper, we propose a novel blind image restoration method that aims to integrate the advantages of both. Specifically, we construct a general Bayesian generative model for blind IR, which explicitly depicts the degradation process. In this model, a pixel-wise non-i.i.d. Gaussian distribution is employed to fit the image noise. It is more flexible than the simple i.i.d. Gaussian or Laplacian distributions adopted in most conventional methods, and can therefore handle the more complicated noise types found in image degradation. To solve the model, we design a variational inference algorithm in which all the expected posterior distributions are parameterized as deep neural networks to increase their modeling capability. Notably, this inference algorithm induces a unified framework that jointly handles degradation estimation and image restoration. Further, the degradation information estimated in the former task is used to guide the latter IR process. Experiments on two typical blind IR tasks, namely image denoising and super-resolution, demonstrate that the proposed method achieves superior performance over current state-of-the-art methods.
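To make the noise assumption above concrete, here is a minimal sketch of what "pixel-wise non-i.i.d. Gaussian" means: each pixel gets zero-mean Gaussian noise with its own standard deviation, instead of one shared value. This only illustrates the noise model, not the Bayesian generative model or the variational inference of the paper. The function name, the 2D-list image layout, and the toy values are assumptions made for this illustration.

```python
import random


def add_niid_gaussian_noise(image, sigma_map, seed=0):
    """Add zero-mean Gaussian noise whose std varies per pixel.

    `image` and `sigma_map` are equally sized 2D lists of floats
    (a hypothetical layout chosen for this sketch).
    """
    rng = random.Random(seed)
    noisy = []
    for img_row, sig_row in zip(image, sigma_map):
        # Each pixel x receives noise drawn from N(0, s^2) with its own s.
        noisy.append([x + rng.gauss(0.0, s) for x, s in zip(img_row, sig_row)])
    return noisy


# Toy 2x3 "image" with constant gray intensity.
clean = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]

# i.i.d. case: every pixel shares the same sigma.
sigma_iid = [[0.1] * 3 for _ in range(2)]
# Non-i.i.d. case: sigma differs from pixel to pixel (0.0 means noise-free).
sigma_niid = [[0.0, 0.05, 0.1], [0.2, 0.0, 0.3]]

noisy_iid = add_niid_gaussian_noise(clean, sigma_iid)
noisy_niid = add_niid_gaussian_noise(clean, sigma_niid)
```

The i.i.d. Gaussian case is the special case in which the sigma map is constant, which is why the non-i.i.d. form can fit spatially varying noise that a single noise level cannot.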

- Python 3.7.5, PyTorch 1.3.1

- More details: see environment.yml

A suitable conda environment named "virnet" can be created and activated with:

conda env create -f environment.yaml

conda activate virnet

Before testing, please download the checkpoints from this link and put them in the folder "model_zoo".

- General testing demo.

python script/testing_demo.py --task task_name --in_path input_path --out_path output_path --sf sr_scale

- --task: task name, "denoising-syn", "denoising-real", "sisr"

- --in_path: input path of the low-quality images, image path or folder

- --out_path: output folder

- --sf: scale factor for image super-resolution, 2, 3, or 4

- Reproduce the results in Table 1 of our paper.

python script/denoising_virnet_syn.py --save_dir output_path --noise_type niid

- Reproduce the results in Table 2 of our paper.

python script/denoising_virnet_syn.py --save_dir output_path --noise_type iid

- Reproduce the results on the SIDD dataset in Table 4 of our paper.

python script/denoising_virnet_real_sidd.py --save_dir output_path --sidd_dir sidd_data_path

- Reproduce the results on the DND dataset in Table 4 of our paper.

python script/denoising_virnet_real_dnd.py --save_dir output_path --dnd_dir dnd_data_path

- Reproduce the results of super-resolution in Table 5 of our paper.

python script/sisr_virnet_syn.py --save_dir output_path --sf 4 --nlevel 0.1

- --nlevel: noise level, 0.1, 2.55 or 7.65
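The commands above can also be assembled programmatically, which helps when sweeping several settings. The following is a hedged sketch: the helper names `demo_command` and `sisr_sweep` are hypothetical and not part of this repository, the placeholder paths are made up, and treating `--sf` as required only for the "sisr" task is an assumption. The flag names and allowed values are taken from the option lists above.

```python
import itertools

# Values documented in the option lists above.
TASKS = ("denoising-syn", "denoising-real", "sisr")
SCALES = (2, 3, 4)
NOISE_LEVELS = (0.1, 2.55, 7.65)


def demo_command(task, in_path, out_path, sf=None):
    """Return the argv list for script/testing_demo.py after basic checks."""
    if task not in TASKS:
        raise ValueError(f"unknown task {task!r}; expected one of {TASKS}")
    cmd = ["python", "script/testing_demo.py", "--task", task,
           "--in_path", in_path, "--out_path", out_path]
    if task == "sisr":
        # Assumption: the scale factor only matters for super-resolution.
        if sf not in SCALES:
            raise ValueError(f"--sf must be one of {SCALES}, got {sf!r}")
        cmd += ["--sf", str(sf)]
    return cmd


def sisr_sweep(save_dir, scales=(4,), nlevels=NOISE_LEVELS):
    """Return one argv list per (scale, noise level) pair for the SISR script."""
    return [
        ["python", "script/sisr_virnet_syn.py", "--save_dir", save_dir,
         "--sf", str(sf), "--nlevel", str(nl)]
        for sf, nl in itertools.product(scales, nlevels)
    ]


print(" ".join(demo_command("sisr", "lq_images", "results", sf=4)))
# prints: python script/testing_demo.py --task sisr --in_path lq_images --out_path results --sf 4
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root. Building the command as a list rather than a single string avoids shell quoting problems with paths that contain spaces.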

- Download the source images from Waterloo, CBSD432, Flickr2K and DIV2K as ground truth, and fill in the data path in the config file.

- Begin training:

CUDA_VISIBLE_DEVICES=gpu_id python train_denoising_syn.py --save_dir path_for_log

- Download the SIDD training dataset and the validation data (noisy, groundtruth).

- Crop the training images into small patches using this script, and fill in the data path in the config file.

- Begin training:

CUDA_VISIBLE_DEVICES=gpu_id python train_denoising_real.py --save_dir path_for_log
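For readers unfamiliar with the patch-cropping step above, the idea can be sketched as a sliding window over each image. This is an illustrative sketch only: the function names are hypothetical, the image is a plain 2D list, and the patch size and stride are toy values. The repository's own cropping script may use different settings and border handling.

```python
def patch_origins(height, width, patch_size, stride):
    """Top-left (row, col) of each patch on a regular grid.

    A final row/column of patches flush with the bottom/right edge is
    added when the stride does not land there, so borders are covered.
    """
    if patch_size > height or patch_size > width:
        return []
    rows = list(range(0, height - patch_size + 1, stride))
    cols = list(range(0, width - patch_size + 1, stride))
    if rows[-1] != height - patch_size:
        rows.append(height - patch_size)
    if cols[-1] != width - patch_size:
        cols.append(width - patch_size)
    return [(r, c) for r in rows for c in cols]


def crop_patches(image, patch_size, stride):
    """Cut a 2D list `image` into square patches of side `patch_size`."""
    height, width = len(image), len(image[0])
    return [
        [row[c:c + patch_size] for row in image[r:r + patch_size]]
        for r, c in patch_origins(height, width, patch_size, stride)
    ]


# Toy 4x4 image whose pixel value encodes its position (row * 4 + col).
toy = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = crop_patches(toy, patch_size=2, stride=2)
```

Pre-cropping large images into patches once, rather than decoding full-resolution images at every training step, typically reduces data-loading time.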

- Download the high-resolution images of DIV2K and Flickr2K, and crop them into small image patches using this script.

- Fill in the data path in the config file.

- Begin training:

CUDA_VISIBLE_DEVICES=gpu_id python train_SISR.py --save_dir path_for_log --config configs/sisr_x4.json

@article{yue2024variational,
  title={Deep Variational Network Toward Blind Image Restoration},
  author={Yue, Zongsheng and Yong, Hongwei and Zhao, Qian and Zhang, Lei and Meng, Deyu and Wong, Kwan-Yee K},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year={2024}
}

If you have any questions, please feel free to contact me via zsyzam@gmail.com.