Code for the SIGIR 2022 paper "Hybrid Transformer with Multi-level Fusion for Multimodal Knowledge Graph Completion"

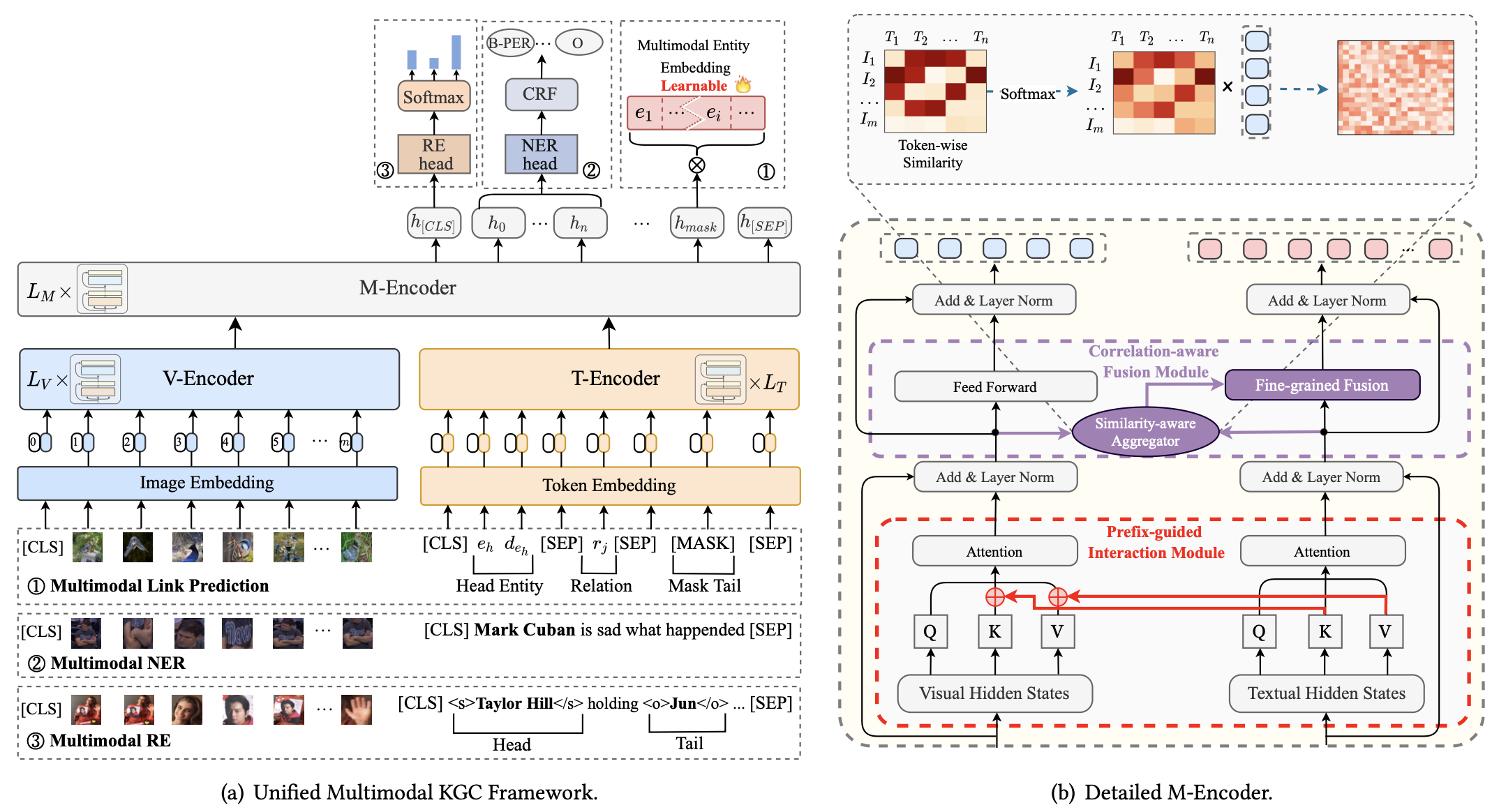

Illustration of MKGformer for (a) Unified Multimodal KGC Framework and (b) Detailed M-Encoder.

To run the code, first install the requirements:

pip install -r requirements.txt

The datasets that we used in our experiments are as follows:

- Twitter2017

You can download the Twitter2017 dataset from this link: https://drive.google.com/file/d/1ogfbn-XEYtk9GpUECq1-IwzINnhKGJqy/view?usp=sharing

For more information regarding the dataset, please refer to the UMT repository.

- MRE

The MRE dataset comes from MEGA; many thanks to its authors.

You can download the MRE dataset, including the detected visual objects, with the following commands:

    cd MRE
    wget 120.27.214.45/Data/re/multimodal/data.tar.gz
    tar -xzvf data.tar.gz

- MKG
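Where `wget` is unavailable, the MRE download commands listed above can also be scripted in Python. This is a minimal sketch, not part of the repository: `fetch_mre_data` and `safe_extract` are hypothetical names, and it assumes the server is reachable over plain `http://` (the `wget` command gives no scheme, and `wget` falls back to HTTP). As a basic guard, it refuses archive members whose paths would resolve outside the target directory.

```python
"""Hypothetical Python equivalent of: cd MRE; wget ...; tar -xzvf data.tar.gz"""
import tarfile
import urllib.request
from pathlib import Path

# Assumption: the wget command has no scheme, so http:// is added here.
MRE_DATA_URL = "http://120.27.214.45/Data/re/multimodal/data.tar.gz"


def safe_extract(archive: Path, dest: Path) -> list[str]:
    """Extract a .tar.gz into dest; raise ValueError if a member path escapes dest."""
    dest = dest.resolve()
    with tarfile.open(archive, "r:gz") as tar:
        members = tar.getmembers()
        for member in members:
            target = (dest / member.name).resolve()
            # Basic path-traversal check on member names (e.g. "../x" or absolute paths).
            if target != dest and dest not in target.parents:
                raise ValueError(f"unsafe path in archive: {member.name}")
        tar.extractall(dest, members=members)
    return [m.name for m in members]


def fetch_mre_data(dest: Path = Path("MRE")) -> list[str]:
    """Download data.tar.gz into dest and unpack it there; returns the member names."""
    dest.mkdir(parents=True, exist_ok=True)
    archive = dest / "data.tar.gz"
    urllib.request.urlretrieve(MRE_DATA_URL, archive)
    return safe_extract(archive, dest)
```

Calling `fetch_mre_data()` from the `MKGFormer` root would leave the unpacked files under `MRE/`, mirroring the shell commands.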

The expected structure of files is:

    MKGFormer
    |-- MKG          # Multimodal Knowledge Graph
    |   |-- dataset      # task data
    |   |-- data         # data process file
    |   |-- lit_models   # lightning model
    |   |-- models       # mkg model
    |   |-- scripts      # running script
    |   |-- main.py
    |-- MNER         # Multimodal Named Entity Recognition
    |   |-- data         # task data
    |   |-- models       # mner model
    |   |-- modules      # running script
    |   |-- processor    # data process file
    |   |-- utils
    |   |-- run_mner.sh
    |   |-- run.py
    |-- MRE          # Multimodal Relation Extraction
    |   |-- data         # task data
    |   |-- models       # mre model
    |   |-- modules      # running script
    |   |-- processor    # data process file
    |   |-- run_mre.sh
    |   |-- run.py
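Before launching a task, it can help to confirm that a checkout matches the expected structure. The sketch below is a hypothetical helper, not shipped with the repository: `missing_entries` and `EXPECTED_LAYOUT` are illustrative names, and the entry list is transcribed by hand from the tree, so it would need updating if the repository layout changes.

```python
"""Hypothetical helper: report which entries of the expected MKGFormer layout are missing."""
from pathlib import Path

# Transcribed from the expected file structure; a trailing "/" marks a directory.
EXPECTED_LAYOUT = [
    "MKG/dataset/", "MKG/data/", "MKG/lit_models/", "MKG/models/", "MKG/scripts/",
    "MKG/main.py",
    "MNER/data/", "MNER/models/", "MNER/modules/", "MNER/processor/", "MNER/utils/",
    "MNER/run_mner.sh", "MNER/run.py",
    "MRE/data/", "MRE/models/", "MRE/modules/", "MRE/processor/",
    "MRE/run_mre.sh", "MRE/run.py",
]


def missing_entries(root: Path, expected: list[str] = EXPECTED_LAYOUT) -> list[str]:
    """Return expected entries that are absent, or of the wrong kind, under root."""
    missing = []
    for entry in expected:
        path = root / entry.rstrip("/")
        # Directories must be directories and files must be regular files.
        present = path.is_dir() if entry.endswith("/") else path.is_file()
        if not present:
            missing.append(entry)
    return missing
```

For example, `missing_entries(Path("MKGFormer"))` returns an empty list when everything is in place. Because each entry is checked by kind, a file sitting where a directory is expected is also reported.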

- First, run Image-text Incorporated Entity Modeling to train the entity embeddings:

      cd MKG
      bash scripts/pretrain_fb15k-237-image.sh

- Then run Missing Entity Prediction:

      bash scripts/fb15k-237-image.sh

- To run the MNER task, run this script:

      cd MNER
      bash run_mner.sh

- To run the MRE task, run this script:

      cd MRE
      bash run_mre.sh
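The task commands above can be chained in a single driver. This sketch is hypothetical and not part of the repository: it assumes the MNER and MRE entry points are the `run_mner.sh` and `run_mre.sh` scripts shown in the file tree, that `bash` is on the `PATH`, and that it is launched from the `MKGFormer` root. `STEPS` and `run_steps` are illustrative names. Only the two MKG stages depend on each other (pretraining must finish first); MNER and MRE are independent and can be dropped from the list.

```python
"""Hypothetical driver: run the task scripts in order, stopping at the first failure."""
import subprocess
from pathlib import Path

# (working directory, script) pairs; MKG pretraining must precede Missing Entity Prediction.
STEPS = [
    ("MKG", "scripts/pretrain_fb15k-237-image.sh"),
    ("MKG", "scripts/fb15k-237-image.sh"),
    ("MNER", "run_mner.sh"),
    ("MRE", "run_mre.sh"),
]


def run_steps(root: Path, steps=STEPS, runner=subprocess.run) -> list[str]:
    """Run each script with bash from its task directory; return the completed steps."""
    done = []
    for workdir, script in steps:
        # check=True raises CalledProcessError on a non-zero exit, so later steps never run.
        runner(["bash", script], cwd=root / workdir, check=True)
        done.append(f"{workdir}/{script}")
    return done
```

The `runner` parameter defaults to `subprocess.run`; passing a stub instead lets you dry-run the step order without starting any training.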

The image data for the multimodal link prediction task is acquired following the code from https://github.com/wangmengsd/RSME; many thanks.

If you use or extend our work, please cite the paper as follows:

    @article{DBLP:journals/corr/abs-2205-02357,
      author    = {Xiang Chen and
                   Ningyu Zhang and
                   Lei Li and
                   Shumin Deng and
                   Chuanqi Tan and
                   Changliang Xu and
                   Fei Huang and
                   Luo Si and
                   Huajun Chen},
      title     = {Hybrid Transformer with Multi-level Fusion for Multimodal Knowledge
                   Graph Completion},
      journal   = {CoRR},
      volume    = {abs/2205.02357},
      year      = {2022},
      url       = {https://doi.org/10.48550/arXiv.2205.02357},
      doi       = {10.48550/arXiv.2205.02357},
      eprinttype = {arXiv},
      eprint    = {2205.02357},
      timestamp = {Wed, 11 May 2022 17:29:40 +0200},
      biburl    = {https://dblp.org/rec/journals/corr/abs-2205-02357.bib},
      bibsource = {dblp computer science bibliography, https://dblp.org}
    }