This is an open-source implementation of the 3DV 2018 paper "MVDepthNet: real-time multiview depth estimation neural network" by Kaixuan Wang and Shaojie Shen (arXiv link). If you find the project useful for your research, please cite:

```bibtex
@InProceedings{mvdepthnet,
  author    = "K. Wang and S. Shen",
  title     = "MVDepthNet: real-time multiview depth estimation neural network",
  booktitle = "International Conference on 3D Vision (3DV)",
  month     = "Sep.",
  year      = "2018",
}
```

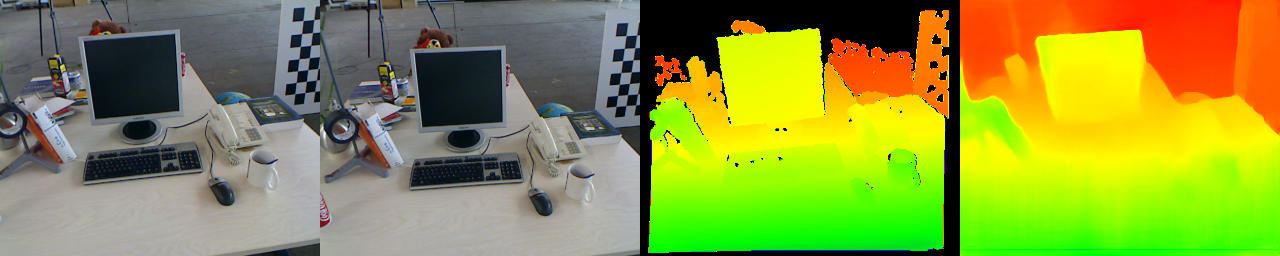

Given multiple images and the corresponding camera poses, a cost volume is firstly calculated and then combined with the reference image to generate the depth map. An example is

From left to right is: the left image, the right image, the "ground truth" depth from RGB-D cameras and the estimated depth map.
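The cost-volume step can be illustrated with a generic plane-sweep sketch in NumPy. It assumes a pinhole intrinsic matrix `K` shared by both cameras, a 4x4 transform `T_ref_to_meas` that maps reference-camera points into the measurement camera, a list of inverse-depth hypotheses, nearest-neighbour sampling, and the absolute intensity difference as the matching cost. The function name `plane_sweep_cost_volume` is hypothetical, and this is not the `getVolume` function from depthNet_model.py, whose sampling, interpolation, and handling of out-of-view pixels may differ.

```python
import numpy as np


def plane_sweep_cost_volume(ref_img, meas_img, K, T_ref_to_meas, inv_depths):
    """Plane-sweep cost volume with absolute-difference cost (illustrative sketch).

    ref_img, meas_img: (H, W) or (H, W, C) arrays of the same size.
    K: (3, 3) intrinsic matrix, assumed shared by both cameras.
    T_ref_to_meas: (4, 4) transform from the reference to the measurement camera
        frame (assumed convention).
    inv_depths: (D,) non-zero inverse-depth hypotheses.
    Returns a (D, H, W) volume; pixels that project behind the camera or outside
    the measurement image get cost 0 (an arbitrary choice for this sketch).
    """
    H, W = ref_img.shape[:2]
    ref = ref_img.reshape(H, W, -1).astype(np.float64)
    meas = meas_img.reshape(H, W, -1).astype(np.float64)
    ref_flat = ref.reshape(-1, ref.shape[2])

    # Homogeneous pixel coordinates of the reference image, shape (3, H*W).
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u.ravel(), v.ravel(), np.ones(H * W)])
    rays = np.linalg.inv(K) @ pix  # back-projected rays, scaled to depth 1

    R, t = T_ref_to_meas[:3, :3], T_ref_to_meas[:3, 3:4]
    volume = np.zeros((len(inv_depths), H, W))
    for d, inv_d in enumerate(inv_depths):
        pts = rays / inv_d              # 3D points at depth 1 / inv_d
        proj = K @ (R @ pts + t)        # project into the measurement camera
        z = proj[2]
        valid = z > 1e-6                # keep points in front of the camera
        safe_z = np.where(valid, z, 1.0)
        x = np.round(proj[0] / safe_z).astype(int)
        y = np.round(proj[1] / safe_z).astype(int)
        valid &= (x >= 0) & (x < W) & (y >= 0) & (y < H)

        # Sum of absolute differences over channels at valid pixels.
        cost = np.zeros(H * W)
        diff = np.abs(ref_flat[valid] - meas[y[valid], x[valid]])
        cost[valid] = diff.sum(axis=1)
        volume[d] = cost.reshape(H, W)
    return volume
```

For each hypothesis, every reference pixel is back-projected to depth `1 / inv_d` and re-projected into the measurement image; when the hypothesis matches the true depth, the two intensities should agree and the cost is low. Sampling uniformly in inverse depth places more hypotheses close to the camera, where a small depth change causes a large pixel shift.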

The following video illustrates the performance of our system:

The code requires:

- PyTorch (the implementation uses version 0.3; only small changes are needed to run the network with newer versions)
- OpenCV
- NumPy

We provide the trained model used in the evaluation of our paper, together with some sample images for running the example code.

Please download the model and the sample images via the links. Put the model file opensource_model.pth.tar under the project folder and extract sample_data.pkl.tar.gz there as well.
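If you prefer to do this setup from Python, the following sketch extracts the archive with the standard `tarfile` module and checks that both files are in place. The helper names are hypothetical, and the extracted file name `sample_data.pkl` is an assumption inferred from the archive name; the shell equivalent is `tar -xzf sample_data.pkl.tar.gz`.

```python
import tarfile
from pathlib import Path

# File names from this README; "sample_data.pkl" as the extracted name is an
# assumption based on the archive name.
MODEL_FILE = "opensource_model.pth.tar"
SAMPLE_FILE = "sample_data.pkl"


def extract_sample_data(archive="sample_data.pkl.tar.gz", dest="."):
    """Extract the gzip-compressed tar archive into dest and return its member names."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        names = tar.getnames()
        tar.extractall(path=dest)
    return names


def missing_files(project_dir="."):
    """Return the expected files that are not yet present in project_dir."""
    project_dir = Path(project_dir)
    return [name for name in (MODEL_FILE, SAMPLE_FILE) if not (project_dir / name).exists()]
```

Calling `missing_files()` from the project folder returns an empty list once both the model and the extracted sample data are in place.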

To run the example, simply execute:

```bash
python example.py
```

Please refer to example.py. To use the network, you need to provide a left image, a right image, the camera intrinsic parameters, and the relative camera pose. Images are normalized using a mean of 81.0 and a standard deviation of 35.0, for example:

```python
normalized_image = (image - 81.0) / 35.0
```
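The inputs listed above can be prepared as in the following NumPy sketch. The normalization constants come from this README; the HWC-to-CHW layout with a leading batch dimension, the pinhole form of the intrinsic matrix, and the convention that both poses are 4x4 camera-to-world matrices are assumptions, so check example.py for the exact conventions. All function names are hypothetical.

```python
import numpy as np

MEAN, STD = 81.0, 35.0  # normalization constants stated in this README


def normalize_image(image):
    """Normalize an (H, W, 3) image and return a (1, 3, H, W) float32 array.

    The channel-first layout and batch dimension are assumptions about what the
    network expects.
    """
    img = (np.asarray(image, dtype=np.float32) - MEAN) / STD
    return img.transpose(2, 0, 1)[np.newaxis]


def intrinsic_matrix(fx, fy, cx, cy):
    """Pinhole intrinsic matrix from focal lengths and principal point (in pixels)."""
    return np.array([[fx, 0.0, cx],
                     [0.0, fy, cy],
                     [0.0, 0.0, 1.0]])


def relative_pose(left_cam_to_world, right_cam_to_world):
    """4x4 transform mapping points from the left camera frame to the right one.

    Assumes both inputs are camera-to-world poses: a left-camera point is first
    moved to world coordinates, then into the right camera frame.
    """
    return np.linalg.inv(right_cam_to_world) @ left_cam_to_world
```

If your poses are stored as world-to-camera matrices instead, the relative transform becomes `right_world_to_cam @ np.linalg.inv(left_world_to_cam)`; getting this convention wrong silently produces a meaningless cost volume.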

To use more than two images, refer to depthNet_model.py: use the function getVolume to construct one cost volume for each measurement image and average them. Then feed the model with the reference image and the averaged cost volume to get the estimated depth map.
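Averaging the per-image volumes amounts to an element-wise mean. The sketch below assumes each volume returned by getVolume has been converted to a NumPy array of shape (D, H, W), that is, depth hypotheses by height by width; that layout and the helper name `average_cost_volumes` are assumptions, not part of the repository.

```python
import numpy as np


def average_cost_volumes(volumes):
    """Element-wise mean of per-image cost volumes, each shaped (D, H, W).

    All volumes must share one shape; np.stack raises ValueError otherwise.
    """
    if not volumes:
        raise ValueError("need at least one cost volume")
    stacked = np.stack([np.asarray(v, dtype=np.float32) for v in volumes])
    return stacked.mean(axis=0)
```

A plain mean weights every measurement image equally. A masked mean that ignores pixels projecting outside a given measurement image is a possible variant, but this README does not say whether getVolume handles such pixels specially.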

Most of the training and test data were collected by DeMoN; we thank the authors for their work.