PyTorch implementation of deep person re-identification models.

We support:

- multi-GPU training.

- both image-based and video-based reid.

- unified interface for different reid models.

- easy dataset preparation.

- end-to-end training and evaluation.

- standard dataset splits used by most papers.

- fast Cython-based evaluation.

- `cd` to the folder where you want to download this repo.

- Run `git clone https://github.com/KaiyangZhou/deep-person-reid`.

- Install dependencies with `pip install -r requirements.txt`.

- To accelerate evaluation (10x faster), you can use the Cython-based evaluation code (developed by luzai). First `cd` to `eval_lib`, then run `make` or `python setup.py build_ext -i`. After that, run `python test_cython_eval.py` to check that the package was installed successfully.

Image reid datasets:

- Market1501 [7]

- CUHK03 [13]

- DukeMTMC-reID [16, 17]

- MSMT17 [22]

- VIPeR [28]

- GRID [29]

- CUHK01 [30]

- PRID450S [31]

- SenseReID [32]

Video reid datasets:

- MARS [8]

- iLIDS-VID [11]

- PRID2011 [12]

- DukeMTMC-VideoReID [16, 23]

Instructions on how to prepare (and evaluate on) these datasets can be found here.
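Dataset preparation mostly comes down to recovering an identity and a camera for every image. As an illustration, Market1501 file names encode both. For example, `0002_c1s1_000451_03.jpg` is identity 2 seen by camera 1, and junk images carry identity `-1`. The minimal sketch below parses such names and relabels training identities to consecutive integers. The function names are hypothetical, and this is not the repo's own dataset code.

```python
import re
from typing import List, Optional, Tuple

# Assumed Market1501 naming: "<pid>_c<cam>s<seq>_<frame>_<bbox>.jpg".
# The pid can be "-1" for junk images, so the pattern allows a minus sign.
PATTERN = re.compile(r"([-\d]+)_c(\d)")


def parse_market1501_name(filename: str) -> Optional[Tuple[int, int]]:
    """Return (pid, camid) from a Market1501 file name, or None for junk (pid == -1)."""
    match = PATTERN.search(filename)
    if match is None:
        raise ValueError(f"unexpected file name: {filename}")
    pid, camid = int(match.group(1)), int(match.group(2))
    if pid == -1:
        return None  # junk images are skipped
    return pid, camid


def build_index(filenames: List[str]) -> Tuple[List[Tuple[str, int, int]], int]:
    """Collect (filename, label, camid) records, relabeling pids to 0..N-1."""
    parsed = [(f, parse_market1501_name(f)) for f in filenames]
    kept = [(f, p) for f, p in parsed if p is not None]
    # Sort the distinct pids so the relabeling is deterministic.
    pid2label = {pid: label for label, pid in enumerate(sorted({p[0] for _, p in kept}))}
    records = [(f, pid2label[p[0]], p[1]) for f, p in kept]
    return records, len(pid2label)
```

Relabeling is only needed for the training split, where identities index the classifier outputs. Query and gallery images keep their original identities so that they can be matched against each other.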

Models:

- `torchreid/models/resnet.py`: ResNet50 [1], ResNet101 [1], ResNet50M [2].

- `torchreid/models/resnext.py`: ResNeXt101 [26].

- `torchreid/models/seresnet.py`: SEResNet50 [25], SEResNet101 [25], SEResNeXt50 [25], SEResNeXt101 [25].

- `torchreid/models/densenet.py`: DenseNet121 [3].

- `torchreid/models/mudeep.py`: MuDeep [10].

- `torchreid/models/hacnn.py`: HACNN [15].

- `torchreid/models/squeezenet.py`: SqueezeNet [18].

- `torchreid/models/mobilenetv2.py`: MobileNetV2 [19].

- `torchreid/models/shufflenet.py`: ShuffleNet [20].

- `torchreid/models/xception.py`: Xception [21].

- `torchreid/models/inceptionv4.py`: InceptionV4 [24].

- `torchreid/models/inceptionresnetv2.py`: InceptionResNetV2 [24].

See `torchreid/models/__init__.py` for the keys used to instantiate these models.
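The `-a` flag in the training commands selects a model by such a key (e.g. `resnet50`). A common way to implement this is a dictionary that maps keys to constructors, and the minimal sketch below assumes that pattern. `init_model`, `MODEL_FACTORY` and the two stub classes are hypothetical placeholders. The real keys and constructor arguments are whatever `torchreid/models/__init__.py` defines.

```python
from typing import Callable, Dict


class ResNet50:  # hypothetical stand-in for a real model class
    def __init__(self, num_classes: int, loss: str = "xent"):
        self.num_classes = num_classes
        self.loss = loss


class DenseNet121:  # hypothetical stand-in for a real model class
    def __init__(self, num_classes: int, loss: str = "xent"):
        self.num_classes = num_classes
        self.loss = loss


# Hypothetical registry: lowercase keys (as passed to -a) mapped to constructors.
MODEL_FACTORY: Dict[str, Callable[..., object]] = {
    "resnet50": ResNet50,
    "densenet121": DenseNet121,
}


def init_model(name: str, *args, **kwargs):
    """Build a model from its key, failing loudly on unknown keys."""
    if name not in MODEL_FACTORY:
        raise KeyError(f"unknown model: {name}; available: {sorted(MODEL_FACTORY)}")
    return MODEL_FACTORY[name](*args, **kwargs)
```

With this design, adding a model only requires a new dictionary entry. The command-line parser can also validate `-a` against `sorted(MODEL_FACTORY)`.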

Benchmarks can be found here.

Training code is implemented in:

- `train_imgreid_xent.py`: trains an image model with cross entropy loss.

- `train_imgreid_xent_htri.py`: trains an image model with a combination of cross entropy loss and hard triplet loss.

- `train_vidreid_xent.py`: trains a video model with cross entropy loss.

- `train_vidreid_xent_htri.py`: trains a video model with a combination of cross entropy loss and hard triplet loss.
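Hard triplet loss here refers to the batch-hard variant of Hermans et al. [4]. For each anchor in a batch, only its farthest positive (same identity) and its closest negative (different identity) are used. The pure-Python sketch below shows the idea together with the cross entropy term. The margin of 0.3 and the equal weighting of the two losses are illustrative assumptions, not values read from `train_imgreid_xent_htri.py`. A real implementation would also operate on batched tensors.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplet_loss(features: List[Sequence[float]], labels: List[int],
                            margin: float = 0.3) -> float:
    """Mean over anchors of max(0, margin + hardest positive dist - hardest negative dist)."""
    n = len(features)
    losses = []
    for i in range(n):
        pos = [euclidean(features[i], features[j]) for j in range(n)
               if j != i and labels[j] == labels[i]]
        neg = [euclidean(features[i], features[j]) for j in range(n)
               if labels[j] != labels[i]]
        if not pos or not neg:
            continue  # this anchor has no valid triplet in the batch
        losses.append(max(0.0, margin + max(pos) - min(neg)))
    return sum(losses) / len(losses) if losses else 0.0


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross entropy for one sample, using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def combined_loss(logits_batch, targets, features, margin: float = 0.3) -> float:
    """Mean cross entropy plus batch-hard triplet loss, weighted equally (an assumption)."""
    xent = sum(cross_entropy(z, t) for z, t in zip(logits_batch, targets)) / len(targets)
    return xent + batch_hard_triplet_loss(features, targets, margin)
```

Batch-hard mining only works if each batch holds several images of every identity it contains. This is why triplet training usually relies on an identity-balanced sampler rather than plain random batches.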

For example, to train an image reid model using ResNet50 and cross entropy loss, run:

```bash
python train_imgreid_xent.py -d market1501 -a resnet50 --optim adam --lr 0.0003 \
    --max-epoch 60 --stepsize 20 40 --train-batch 32 --test-batch 100 \
    --save-dir log/resnet50-xent-market1501 --gpu-devices 0
```

To use multiple GPUs, set `--gpu-devices 0,1,2,3`.
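The sketch below assumes that `--stepsize 20 40` lists the epochs at which the learning rate is decayed. Two values suggest milestones rather than a fixed period. It computes the rate at a given epoch under a multi-step schedule. The decay factor `gamma = 0.1` is an assumption, so check the script's arguments for the actual value. PyTorch's `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[20, 40], gamma=0.1)` yields the same schedule, though whether the scripts use it is not stated here.

```python
from bisect import bisect_right
from typing import Sequence


def step_lr(epoch: int, base_lr: float, stepsize: Sequence[int], gamma: float = 0.1) -> float:
    """Learning rate at a 0-indexed epoch under multi-step decay.

    The rate is multiplied by gamma once for every milestone in stepsize that
    the epoch has reached. gamma = 0.1 is an assumed default.
    """
    num_decays = bisect_right(sorted(stepsize), epoch)
    return base_lr * gamma ** num_decays
```

With `--lr 0.0003` this gives 3e-4 for epochs 0 to 19, 3e-5 for epochs 20 to 39 and 3e-6 for epochs 40 to 59, under the assumed gamma.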

Note: To resume training, use `--resume path/to/.pth.tar` to load a checkpoint. Both the saved model weights and `start_epoch` are restored from it. Set the learning rate carefully when resuming. If you just want to load a pretrained model and discard layers whose sizes do not match (e.g., the classification layer), use `--load-weights path/to/.pth.tar` instead.
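`--load-weights` keeps only the pretrained parameters that fit the current model. The minimal sketch below shows that filtering step. It assumes both the checkpoint and the model are available as name-to-tensor dictionaries, as returned by PyTorch's `Module.state_dict()`. The function name is hypothetical, and the repo's own loader may differ. The classifier is the typical casualty, because its output size equals the number of training identities and so changes between datasets.

```python
from typing import Any, Callable, Dict, List, Tuple


def filter_matching_weights(
    pretrained: Dict[str, Any],
    model_state: Dict[str, Any],
    shape_of: Callable[[Any], Any] = lambda t: tuple(t.shape),
) -> Tuple[Dict[str, Any], List[str]]:
    """Merge pretrained parameters into model_state where name and shape agree.

    Returns the merged dictionary and the names of pretrained entries that were
    discarded (missing from the model or mismatched in shape).
    """
    merged = dict(model_state)
    discarded = []
    for name, value in pretrained.items():
        if name in model_state and shape_of(model_state[name]) == shape_of(value):
            merged[name] = value
        else:
            discarded.append(name)
    return merged, discarded


# Hypothetical PyTorch usage (the "state_dict" key inside the checkpoint is an assumption):
# checkpoint = torch.load("path/to/.pth.tar", map_location="cpu")
# merged, skipped = filter_matching_weights(checkpoint["state_dict"], model.state_dict())
# model.load_state_dict(merged)
```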

Please refer to the code for more details.

Suppose you have downloaded a ResNet50 model trained with cross entropy (xent) on Market1501 and saved it to `saved-models/resnet50_xent_market1501.pth.tar`. Create the directory beforehand with `mkdir saved-models/`. Then run the following command to test it:

```bash
python train_imgreid_xent.py -d market1501 -a resnet50 --evaluate \
    --resume saved-models/resnet50_xent_market1501.pth.tar \
    --save-dir log/resnet50-xent-market1501 --test-batch 100 --gpu-devices 0
```

Likewise, to test a video reid model, you should have a pretrained model saved under `saved-models/`, e.g. `saved-models/resnet50_xent_mars.pth.tar`. Then run:

```bash
python train_vidreid_xent.py -d mars -a resnet50 --evaluate \
    --resume saved-models/resnet50_xent_mars.pth.tar \
    --save-dir log/resnet50-xent-mars --test-batch 2 --gpu-devices 0
```

Note that in video reid, `--test-batch` is the number of tracklets per batch. If you set this argument to 2 and sample 15 images per tracklet, each batch contains 2 × 15 = 30 images. Adjust this argument according to your GPU memory.
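For video reid, each test batch holds `--test-batch` tracklets, and the frames of each tracklet are pooled into a single feature. The sketch below shows the batch-size arithmetic and one common aggregation, temporal average pooling. The pooling choice and the helper names are assumptions, since the aggregation actually used depends on the model.

```python
from typing import Iterator, List, Sequence


def images_per_batch(test_batch: int, seq_len: int) -> int:
    """Frames per forward pass when a batch holds test_batch tracklets of seq_len frames."""
    return test_batch * seq_len


def pool_tracklet(frame_features: Sequence[Sequence[float]]) -> List[float]:
    """Aggregate per-frame features into one tracklet feature by averaging over time."""
    n = len(frame_features)
    dim = len(frame_features[0])
    return [sum(f[d] for f in frame_features) / n for d in range(dim)]


def batch_tracklets(tracklets: Sequence, test_batch: int) -> Iterator[Sequence]:
    """Yield successive groups of at most test_batch tracklets."""
    for start in range(0, len(tracklets), test_batch):
        yield tracklets[start:start + test_batch]
```

Because memory grows with `test_batch * seq_len`, lowering either value reduces GPU usage.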

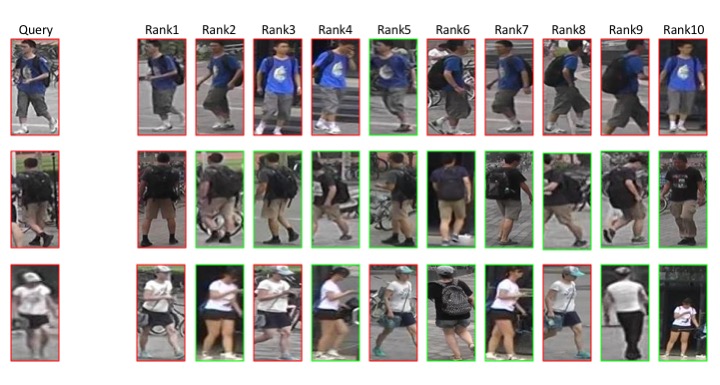

Ranked results can be visualized with `--visualize-ranks`, which works together with `--evaluate`. Ranked images are saved in `save_dir/ranked_results`, where `save_dir` is the directory specified by `--save-dir`.
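For each query, the ranked results list the gallery sorted by distance. Evaluation then summarizes these rankings with CMC and mAP. The pure-Python sketch below assumes a precomputed query-by-gallery distance matrix. It also assumes the Market1501-style rule of ignoring gallery images that share both the query's identity and its camera. The function name and signature are hypothetical, and the repo's Cython and Python evaluation code may differ in details such as junk handling.

```python
from typing import Dict, Sequence


def evaluate(distmat: Sequence[Sequence[float]],
             q_pids: Sequence[int], g_pids: Sequence[int],
             q_camids: Sequence[int], g_camids: Sequence[int],
             max_rank: int = 5) -> Dict[str, object]:
    """CMC curve (ranks 1..max_rank) and mAP for a query-by-gallery distance matrix."""
    all_cmc, all_ap = [], []
    for i, row in enumerate(distmat):
        # Ranked list: gallery indices sorted by ascending distance to query i.
        order = sorted(range(len(row)), key=lambda j: row[j])
        # Drop gallery images with the same identity AND the same camera as the query.
        keep = [j for j in order if not (g_pids[j] == q_pids[i] and g_camids[j] == q_camids[i])]
        matches = [1 if g_pids[j] == q_pids[i] else 0 for j in keep]
        if not any(matches):
            continue  # query identity absent from the remaining gallery
        first_hit = matches.index(1)
        all_cmc.append([1 if k >= first_hit else 0 for k in range(max_rank)])
        # Average precision: mean of precision@k at each position k holding a true match.
        hits, precisions = 0, []
        for k, m in enumerate(matches, start=1):
            if m:
                hits += 1
                precisions.append(hits / k)
        all_ap.append(sum(precisions) / len(precisions))
    if not all_ap:
        raise ValueError("no query has a valid match in the gallery")
    n = len(all_ap)
    cmc = [sum(c[k] for c in all_cmc) / n for k in range(max_rank)]
    return {"cmc": cmc, "mAP": sum(all_ap) / n}
```

Here `cmc[0]` is the rank-1 accuracy. The `keep` list for a query is the ranking one would draw when visualizing its top matches.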

Before raising an issue, please check past issues, where you may find answers. If those answers do not solve your problem, open a new issue with an informative title and include the following details: (1) environment settings, e.g. Python version, torch/torchvision versions, etc.; (2) the command that leads to the error; (3) a screenshot of the error log, if available. If you find any errors in the code, please let me know by opening a new issue.
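A small script like the one below can gather the environment settings for an issue. It is a hypothetical helper, not part of the repo. It uses only the standard library (`importlib.metadata` requires Python 3.8+) and reports "not installed" for packages it cannot find.

```python
import platform
import sys
from importlib import metadata
from typing import Dict, Sequence


def environment_report(packages: Sequence[str] = ("torch", "torchvision", "numpy", "Cython")) -> Dict[str, str]:
    """Collect Python, OS and package versions to paste into an issue."""
    report = {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "not installed"
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```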

If you want to contribute to this project, e.g. by implementing new losses, please open an issue for discussion or email me directly.

Please link to this project in your paper.

[1] He et al. Deep Residual Learning for Image Recognition. CVPR 2016.

[2] Yu et al. The Devil is in the Middle: Exploiting Mid-level Representations for Cross-Domain Instance Matching. arXiv:1711.08106.

[3] Huang et al. Densely Connected Convolutional Networks. CVPR 2017.

[4] Hermans et al. In Defense of the Triplet Loss for Person Re-Identification. arXiv:1703.07737.

[5] Szegedy et al. Rethinking the Inception Architecture for Computer Vision. CVPR 2016.

[6] Kingma and Ba. Adam: A Method for Stochastic Optimization. ICLR 2015.

[7] Zheng et al. Scalable Person Re-identification: A Benchmark. ICCV 2015.

[8] Zheng et al. MARS: A Video Benchmark for Large-Scale Person Re-identification. ECCV 2016.

[9] Wen et al. A Discriminative Feature Learning Approach for Deep Face Recognition. ECCV 2016.

[10] Qian et al. Multi-scale Deep Learning Architectures for Person Re-identification. ICCV 2017.

[11] Wang et al. Person Re-Identification by Video Ranking. ECCV 2014.

[12] Hirzer et al. Person Re-Identification by Descriptive and Discriminative Classification. SCIA 2011.

[13] Li et al. DeepReID: Deep Filter Pairing Neural Network for Person Re-identification. CVPR 2014.

[14] Zhong et al. Re-ranking Person Re-identification with k-reciprocal Encoding. CVPR 2017.

[15] Li et al. Harmonious Attention Network for Person Re-identification. CVPR 2018.

[16] Ristani et al. Performance Measures and a Data Set for Multi-Target, Multi-Camera Tracking. ECCVW 2016.

[17] Zheng et al. Unlabeled Samples Generated by GAN Improve the Person Re-identification Baseline in vitro. ICCV 2017.

[18] Iandola et al. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv:1602.07360.

[19] Sandler et al. MobileNetV2: Inverted Residuals and Linear Bottlenecks. CVPR 2018.

[20] Zhang et al. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. CVPR 2018.

[21] Chollet. Xception: Deep Learning with Depthwise Separable Convolutions. CVPR 2017.

[22] Wei et al. Person Transfer GAN to Bridge Domain Gap for Person Re-Identification. CVPR 2018.

[23] Wu et al. Exploit the Unknown Gradually: One-Shot Video-Based Person Re-Identification by Stepwise Learning. CVPR 2018.

[24] Szegedy et al. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. ICLRW 2016.

[25] Hu et al. Squeeze-and-Excitation Networks. CVPR 2018.

[26] Xie et al. Aggregated Residual Transformations for Deep Neural Networks. CVPR 2017.

[27] Chen et al. Dual Path Networks. NIPS 2017.

[28] Gray et al. Evaluating appearance models for recognition, reacquisition, and tracking. PETS 2007.

[29] Loy et al. Multi-camera activity correlation analysis. CVPR 2009.

[30] Li et al. Human Reidentification with Transferred Metric Learning. ACCV 2012.

[31] Roth et al. Mahalanobis Distance Learning for Person Re-Identification. PR 2014.

[32] Zhao et al. Spindle Net: Person Re-identification with Human Body Region Guided Feature Decomposition and Fusion. CVPR 2017.