OT-VP: Optimal Transport-guided Visual Prompting for Test-Time Adaptation

Yunbei Zhang, Akshay Mehra, Jihun Hamm

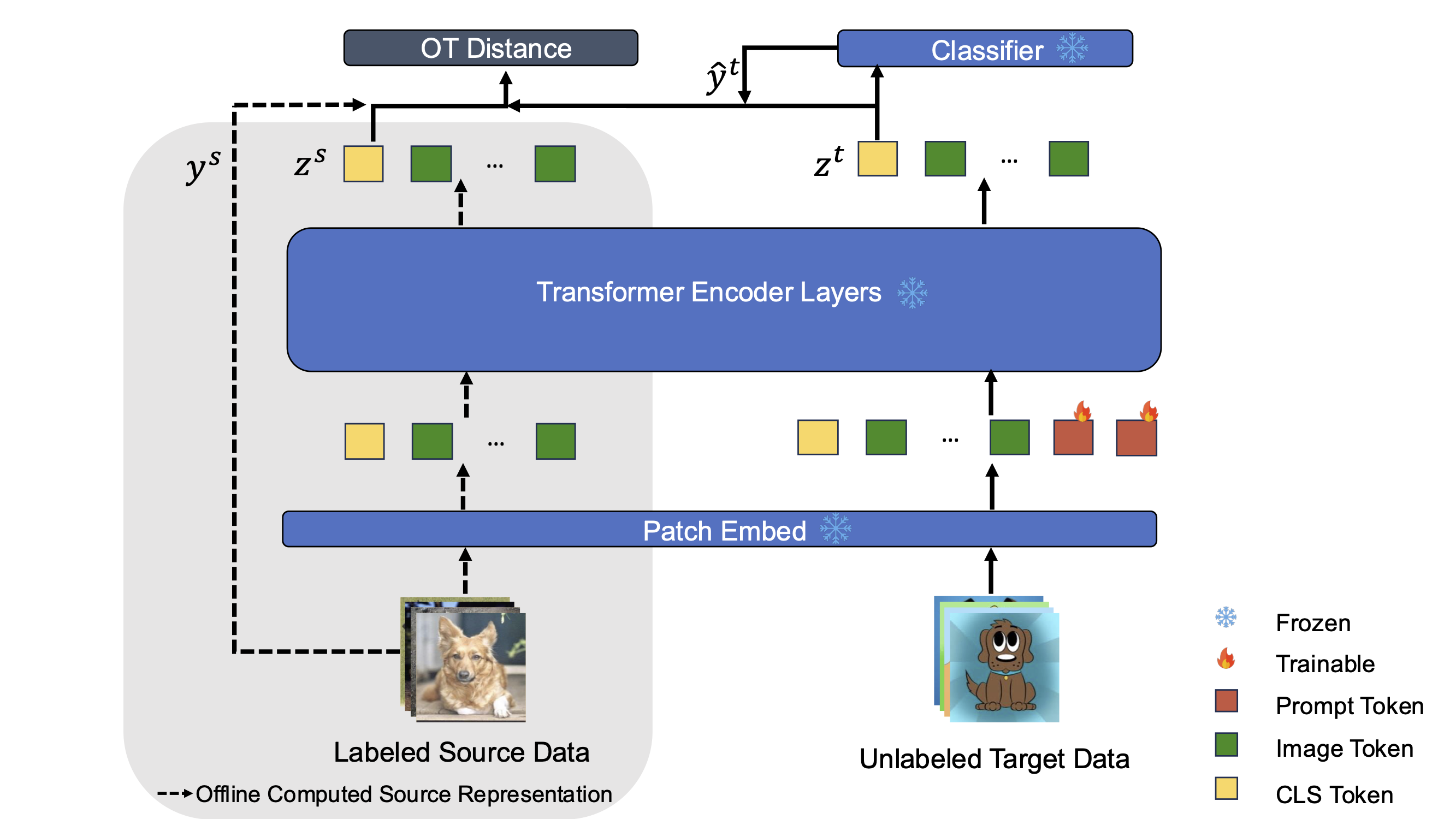

Illustration of OT-VP

pip install -r requirements.txt

We use the ImageNet pre-trained ViT model from timm. ImageNet-C can be downloaded here.

The corruption is selected by an index from 0 to 14, corresponding respectively to 'gaussian_noise', 'shot_noise', 'impulse_noise', 'defocus_blur', 'glass_blur', 'motion_blur', 'zoom_blur', 'snow', 'frost', 'fog', 'brightness', 'contrast', 'elastic_transform', 'pixelate', 'jpeg_compression'.
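The index-to-name mapping can be sketched in a few lines of Python. This is only an illustrative helper, not part of the repository: the names `CORRUPTIONS`, `corruption_name`, and `build_adapt_command` are hypothetical, and the command arguments simply mirror the adaptation command given in this README.

```python
from typing import List

# Corruption names in the order listed above; the list position is the
# value passed to --corruption (0 to 14).
CORRUPTIONS = [
    "gaussian_noise", "shot_noise", "impulse_noise",
    "defocus_blur", "glass_blur", "motion_blur", "zoom_blur",
    "snow", "frost", "fog", "brightness", "contrast",
    "elastic_transform", "pixelate", "jpeg_compression",
]


def corruption_name(index: int) -> str:
    """Return the corruption name for an index in the range 0 to 14."""
    if not 0 <= index < len(CORRUPTIONS):
        raise ValueError(f"corruption index must be in 0..14, got {index}")
    return CORRUPTIONS[index]


def build_adapt_command(data_dir: str, index: int) -> List[str]:
    """Build the argument list for one adaptation run (hypothetical helper).

    The flags are copied from the README command; data_dir is whatever
    path the ImageNet-C download was extracted to.
    """
    corruption_name(index)  # validates the index, raises ValueError if invalid
    return [
        "python", "-m", "domainbed.scripts.adapt",
        "--dataset", "ImageNetC",
        "--data_dir", data_dir,
        "--algorithm", "OTVP",
        "--corruption", str(index),
    ]


if __name__ == "__main__":
    # Print one command per corruption type; the path is a placeholder.
    for i, name in enumerate(CORRUPTIONS):
        print(f"# {i}: {name}")
        print(" ".join(build_adapt_command("[path/to/ImageNet-C]", i)))
```

Printing the commands rather than executing them keeps the sketch free of side effects; each printed line could be passed to a shell or to `subprocess.run` to sweep all 15 corruption types.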

python -m domainbed.scripts.adapt --dataset ImageNetC --data_dir [path/to/ImageNet-C] --algorithm OTVP --corruption [0-14]

Please cite our work if you find it useful.

@article{zhang2024ot,
  title={OT-VP: Optimal Transport-guided Visual Prompting for Test-Time Adaptation},
  author={Zhang, Yunbei and Mehra, Akshay and Hamm, Jihun},
  journal={arXiv preprint arXiv:2407.09498},
  year={2024}
}

This repository relies heavily on the DomainBed code.

DoPrompt is used to implement Visual Prompting.