Domain Enhanced Arbitrary Image Style Transfer via Contrastive Learning (CAST)

A Unified Arbitrary Style Transfer Framework via Adaptive Contrastive Learning (UCAST)

We provide our PyTorch implementation of the paper "Domain Enhanced Arbitrary Image Style Transfer via Contrastive Learning" (SIGGRAPH 2022), a simple yet powerful model for arbitrary image style transfer, and of "A Unified Arbitrary Style Transfer Framework via Adaptive Contrastive Learning" (ACM Transactions on Graphics), an improved arbitrary style transfer method.

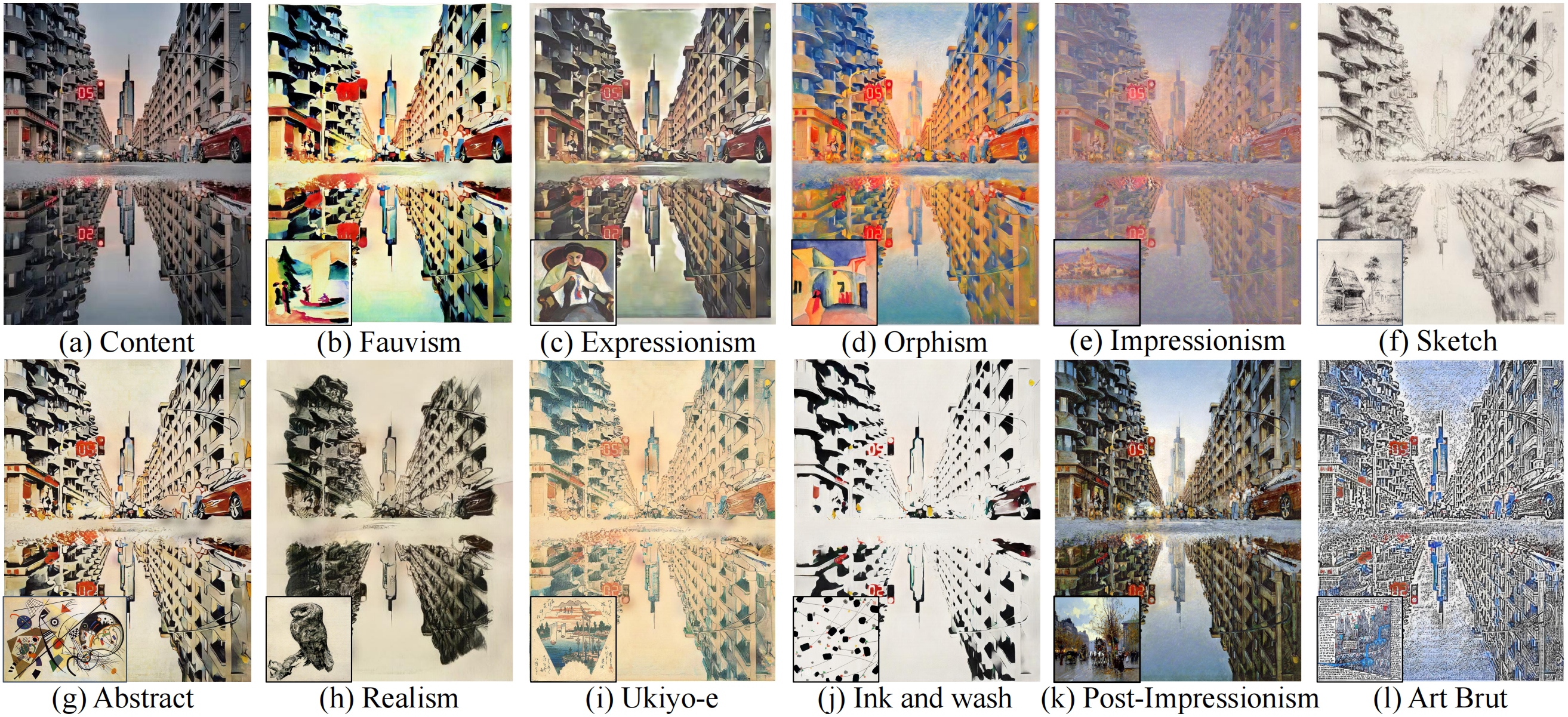

In this work, we tackle the challenging problem of arbitrary image style transfer using a novel style feature representation learning method. A suitable style representation is a key component of image stylization and is essential for satisfactory results. Existing approaches based on deep neural networks achieve reasonable results with guidance from second-order statistics, such as the Gram matrix of content features. However, they do not exploit enough style information, which leads to artifacts such as local distortions and style inconsistency. To address these issues, we propose to learn the style representation directly from image features rather than from their second-order statistics, by analyzing the similarities and differences between multiple styles and taking the style distribution into account.
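To make the contrast between the two kinds of style representation concrete, here is a minimal plain-Python sketch. `gram_matrix` computes the second-order statistic mentioned above for a toy C x N feature map, and `info_nce` is a generic InfoNCE contrastive loss that pulls a style code toward a positive (another sample of the same style) and pushes it away from negatives (other styles). Both functions, the toy vectors, and the temperature of 0.2 are our own illustrative assumptions; this is not the CAST/UCAST training loss, which is defined in the papers and operates on VGG features in PyTorch.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Gram matrix of a C x N feature map (C channels, N spatial positions).

    G[i][j] = (1 / N) * sum_k F[i][k] * F[j][k]: channel co-activations,
    with all spatial layout averaged away.
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(row_i, row_j)) / n for row_j in features]
        for row_i in features
    ]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def info_nce(anchor, positive, negatives, temperature: float = 0.2) -> float:
    """Generic InfoNCE loss over style codes (illustrative, not the papers' loss).

    loss = -log( exp(s_pos / t) / (exp(s_pos / t) + sum_n exp(s_n / t)) ),
    where s is cosine similarity to the anchor and t is the temperature.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    top = max(logits)  # subtract the max before exponentiating for numerical stability
    log_sum = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum - logits[0]


if __name__ == "__main__":
    print(gram_matrix([[1.0, 2.0], [3.0, 4.0]]))  # [[2.5, 5.5], [5.5, 12.5]]
    anchor = [1.0, 0.0]
    negatives = [[0.0, 1.0], [-1.0, 0.0]]
    near = info_nce(anchor, [0.9, 0.1], negatives)  # positive close to the anchor
    far = info_nce(anchor, [0.1, 0.9], negatives)   # positive far from the anchor
    print(near < far)  # True
```

The Gram matrix summarizes a single image by its channel co-activations, while a contrastive objective shapes the representation by comparing many styles against each other, which is the distinction drawn above.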

For details, see the CAST and UCAST papers and the video.

Python 3.6 or above.

PyTorch 1.6 or above.

Clone the repo:

git clone https://github.com/zyxElsa/CAST_pytorch.git

Install the packages listed in requirements.txt:

pip install -r requirements.txt

Then put your content images in ./datasets/{dataset_name}/testA and your style images in ./datasets/{dataset_name}/testB.

Example directory hierarchy:

CAST_pytorch
|--- datasets
     |--- {dataset_name}
          |--- trainA
          |--- trainB
          |--- testA
          |--- testB
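The layout above can also be created with a short script. This is a sketch using only the Python standard library; `make_dataset_dirs` and the placeholder name my_dataset are hypothetical, and treating trainA/trainB as content/style folders is an assumption carried over from testA/testB.

```python
from pathlib import Path

# Sub-folders from the hierarchy above. testA holds content images and testB style
# images; trainA/trainB are assumed to follow the same content/style convention.
SPLITS = ("trainA", "trainB", "testA", "testB")


def make_dataset_dirs(repo_root: str, dataset_name: str) -> Path:
    """Create datasets/{dataset_name}/{trainA,trainB,testA,testB} under repo_root.

    Existing folders are left untouched; the dataset path is returned.
    """
    dataset_dir = Path(repo_root) / "datasets" / dataset_name
    for split in SPLITS:
        (dataset_dir / split).mkdir(parents=True, exist_ok=True)
    return dataset_dir
```

For example, running `make_dataset_dirs(".", "my_dataset")` from inside CAST_pytorch creates the four folders, after which the scripts take --dataroot ./datasets/my_dataset. Because of exist_ok=True, running it again is harmless.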

Then pass --dataroot ./datasets/{dataset_name} to train.py and test.py.

Train the CAST model:

python train.py --dataroot ./datasets/{dataset_name} --name {model_name}

The pretrained style classification model is saved at ./models/style_vgg.pth.

Google Drive: Check here

The pretrained content encoder is saved at ./models/vgg_normalised.pth.

Google Drive: Check here
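Before training, you may want to confirm that both pretrained files are where this README says they belong. A minimal standard-library check; the helper `missing_weights` is hypothetical and not part of the repository, and it only tests that the files exist, not that they load.

```python
from pathlib import Path
from typing import List

# Pretrained weights that this README places under ./models (download links above).
REQUIRED_WEIGHTS = ("models/style_vgg.pth", "models/vgg_normalised.pth")


def missing_weights(repo_root: str = ".") -> List[str]:
    """Return the required weight files that are not present under repo_root."""
    root = Path(repo_root)
    return [rel for rel in REQUIRED_WEIGHTS if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_weights()
    if missing:
        print("Missing pretrained weights:", ", ".join(missing))
    else:
        print("All pretrained weights found.")
```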

Test the CAST or UCAST model:

python test.py --dataroot ./datasets/{dataset_name} --name {model_name}

The pretrained model is saved at ./checkpoints/CAST_model/*.pth.

BaiduNetdisk: Check CAST model (password: cast)

Google Drive: Download CAST model and UCAST model (for video style transfer).
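The train and test commands can also be launched from Python, for example in a batch script. The sketch below passes only the two flags shown above, --dataroot and --name, and assumes (as the path ./checkpoints/CAST_model/*.pth suggests) that --name selects the folder under ./checkpoints; `build_command`, `find_checkpoints`, and the dataset name my_dataset are hypothetical. For UCAST, substitute the folder name of the checkpoint you downloaded.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_command(script: str, dataset_name: str, model_name: str) -> List[str]:
    """Argument list for the train.py / test.py calls above (only --dataroot and --name)."""
    return [sys.executable, script, "--dataroot", f"./datasets/{dataset_name}", "--name", model_name]


def find_checkpoints(model_name: str, checkpoints_dir: str = "./checkpoints") -> List[Path]:
    """Sorted .pth files in checkpoints_dir/model_name.

    Assumption: --name selects this folder, as ./checkpoints/CAST_model/*.pth suggests.
    """
    return sorted(Path(checkpoints_dir, model_name).glob("*.pth"))


if __name__ == "__main__":
    model = "CAST_model"    # folder name of the downloaded CAST checkpoint
    dataset = "my_dataset"  # hypothetical folder under ./datasets
    if find_checkpoints(model):
        subprocess.run(build_command("test.py", dataset, model), check=True)
    else:
        print(f"No .pth files in ./checkpoints/{model}; download the pretrained model first.")
```

Passing an argument list to subprocess.run avoids shell quoting issues, and sys.executable runs the scripts with the same interpreter (and installed packages) as the calling script. The same builder works for training with "train.py".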

@inproceedings{zhang2020cast,
  author = {Zhang, Yuxin and Tang, Fan and Dong, Weiming and Huang, Haibin and Ma, Chongyang and Lee, Tong-Yee and Xu, Changsheng},
  title = {Domain Enhanced Arbitrary Image Style Transfer via Contrastive Learning},
  booktitle = {ACM SIGGRAPH},
  year = {2022}
}

@article{zhang2023unified,
  title = {A Unified Arbitrary Style Transfer Framework via Adaptive Contrastive Learning},
  author = {Zhang, Yuxin and Tang, Fan and Dong, Weiming and Huang, Haibin and Ma, Chongyang and Lee, Tong-Yee and Xu, Changsheng},
  journal = {ACM Transactions on Graphics},
  year = {2023},
  publisher = {ACM New York, NY}
}

Please feel free to open an issue or contact us directly if you have questions or need help or explanations. Write to one of the following email addresses, and consider putting another one in cc: