This repository showcases building task-oriented bots at scale from a handful of examples by fine-tuning a pre-trained model with the SOLOIST framework. It contains the dataset, source code, and pre-trained model for the following paper:

SOLOIST: Building Task Bots at Scale with Transfer Learning and Machine Teaching

Baolin Peng, Chunyuan Li, Jinchao Li, Shahin Shayandeh, Lars Liden, Jianfeng Gao

Transactions of the Association for Computational Linguistics 2021

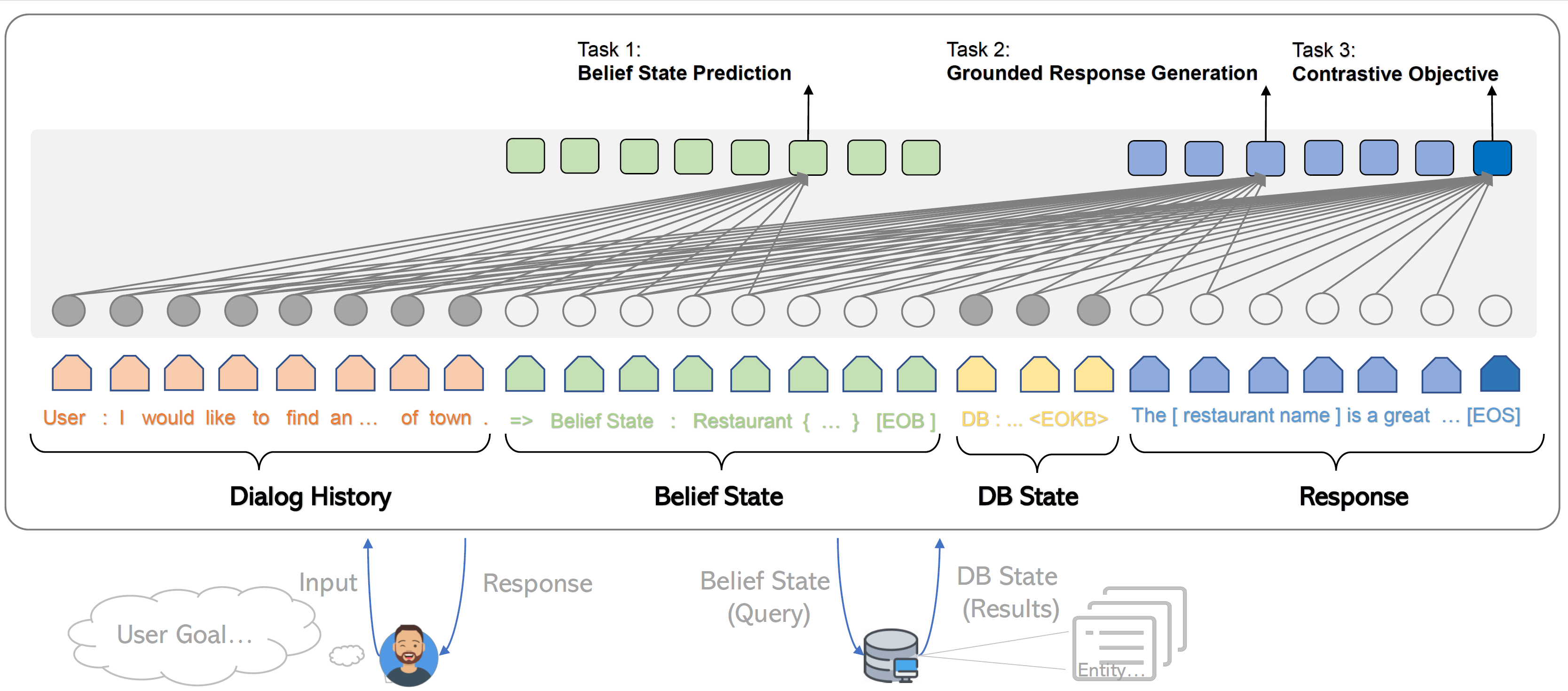

SOLOIST is a new pre-training and fine-tuning paradigm for building task-oriented dialog systems. We parameterize a dialog system using a Transformer-based auto-regressive language model, which subsumes different dialog modules (e.g. state tracker, dialog policy, response generator) into a single neural model. We pre-train, on large heterogeneous dialog corpora, a large-scale Transformer model that can generate dialog responses grounded in user goals and real-world knowledge for task completion. The pre-trained model can be efficiently adapted to a new dialog task with a handful of task-specific dialogs via fine-tuning and machine teaching.

This repository is based on Hugging Face Transformers and OpenAI GPT-2. Some evaluation scripts and datasets are adapted from MultiWOZ, the Schema-Guided Dataset, and others.

The included scripts can be used to reproduce the results reported in the paper. Project and demo webpage: https://aka.ms/soloist

Requires Python 3.6.

The interactive interface requires the Vue framework. Please refer to the Vue documentation for installation.

Use the commands below to clone the repo and install the required packages.

git clone

pip install -r requirements.txt

Fetch and unzip the pre-trained model, from which you can continue fine-tuning on your own data. (More versions of the pre-trained model will be released; stay tuned.)

cd soloist

wget https://bapengstorage.blob.core.windows.net/soloist/gtg_pretrained.tar.gz

tar -xvf gtg_pretrained.tar.gz

Data format

{

"history": [

"user : later I have to call Jessie"

],

"belief": "belief : name = Jessie",

"kb": "",

"reply": "system : Sure, added to your reminder list. FOLLOWUP action_set_reminder"

},

We use JSON to represent a training example. As shown in the example above, it contains the following fields:

- history - The dialog context from the beginning of the session up to the current turn.

- belief - The belief state.

- kb - Database query results; leave it blank if no database query is required.

- reply - The target system response. It can be a template, an API call, or natural language.
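The fields above can be assembled and sanity-checked with a few lines of Python. This is a minimal sketch, not part of the repository: the helper names `make_example` and `validate_example` are hypothetical, the type checks are assumptions inferred from the example, and writing the file as a JSON list of such objects is also an assumption (the trailing comma in the example suggests it, but the text does not state it).

```python
import json

# Field names come from the example above; the checks below are assumptions.
REQUIRED_FIELDS = ("history", "belief", "kb", "reply")


def make_example(history, belief, reply, kb=""):
    """Build one training example as a plain dict (hypothetical helper)."""
    return {"history": list(history), "belief": belief, "kb": kb, "reply": reply}


def validate_example(example):
    """Return a list of problems found in one example (empty if none)."""
    problems = []
    for field in REQUIRED_FIELDS:
        if field not in example:
            problems.append(f"missing field: {field}")
    if "history" in example and not isinstance(example["history"], list):
        problems.append("history must be a list of utterance strings")
    for field in ("belief", "kb", "reply"):
        if field in example and not isinstance(example[field], str):
            problems.append(f"{field} must be a string")
    return problems


example = make_example(
    history=["user : later I have to call Jessie"],
    belief="belief : name = Jessie",
    reply="system : Sure, added to your reminder list. FOLLOWUP action_set_reminder",
)

# Assumption: the training file is a JSON list of example objects.
serialized = json.dumps([example], indent=2)
print(serialized)
```

Running `validate_example` over every entry before training is a cheap way to catch a missing `kb` key or a `history` that was accidentally stored as a single string.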

Training

python train.py --output_dir=MODEL_SAVE_PATH --model_type=gpt2 --model_name_or_path=PRE_TRAINED_MODEL_PATH --do_train --train_data_file=TRAIN_FILE --per_gpu_train_batch_size 4 --num_train_epochs EPOCH --learning_rate 5e-5 --overwrite_cache --use_tokenize --save_steps 10000 --max_seq 500 --overwrite_output_dir --max_turn 15 --num_candidates 1 --mc_loss_efficient 0.2 --add_special_action_tokens --with_code_loss --add_belief_prediction --add_response_prediction --add_same_belief_response_prediction

output_dir : Path where the trained model is saved.

model_name_or_path : Initial checkpoint.

num_train_epochs : Number of training epochs; 5, 10, or 20 are enough for reasonable performance.

learning_rate : Learning rate; 5e-5, 1e-5, or 1e-4.

num_candidates : Number of candidates; 1 is recommended.

mc_loss_efficient : Contrastive loss coefficient; 0.1 to 1.

add_belief_prediction : Whether to add a contrastive loss term for the belief span.

add_response_prediction : Whether to add a contrastive loss term for response prediction.

add_same_belief_response_prediction : Whether to add a contrastive loss term for the belief span and response.
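Since several epoch counts and learning rates are suggested above, it can be convenient to generate the training commands for a small sweep. The sketch below only assembles and prints command lines using the flags shown in the training command; the function `build_train_command`, the output directory naming scheme, and the idea of sweeping at all are assumptions, and `PRE_TRAINED_MODEL_PATH`, `TRAIN_FILE`, and `MODEL_SAVE_PATH` remain placeholders to fill in.

```python
import itertools
import shlex


def build_train_command(output_dir, pretrained_path, train_file,
                        epochs, learning_rate, mc_loss_coefficient=0.2):
    """Assemble the train.py argument list from the flags documented above."""
    return [
        "python", "train.py",
        f"--output_dir={output_dir}",
        "--model_type=gpt2",
        f"--model_name_or_path={pretrained_path}",
        "--do_train",
        f"--train_data_file={train_file}",
        "--per_gpu_train_batch_size", "4",
        "--num_train_epochs", str(epochs),
        "--learning_rate", str(learning_rate),
        "--overwrite_cache", "--use_tokenize",
        "--save_steps", "10000",
        "--max_seq", "500",
        "--overwrite_output_dir",
        "--max_turn", "15",
        "--num_candidates", "1",
        "--mc_loss_efficient", str(mc_loss_coefficient),
        "--add_special_action_tokens", "--with_code_loss",
        "--add_belief_prediction", "--add_response_prediction",
        "--add_same_belief_response_prediction",
    ]


# Values suggested in the parameter notes above.
EPOCHS = (5, 10, 20)
LEARNING_RATES = (5e-5, 1e-5, 1e-4)

commands = [
    build_train_command(
        output_dir=f"MODEL_SAVE_PATH/ep{epochs}_lr{lr}",  # hypothetical naming scheme
        pretrained_path="PRE_TRAINED_MODEL_PATH",
        train_file="TRAIN_FILE",
        epochs=epochs,
        learning_rate=lr,
    )
    for epochs, lr in itertools.product(EPOCHS, LEARNING_RATES)
]

for command in commands:
    # Quote each argument so the printed line can be pasted into a shell.
    print(" ".join(shlex.quote(arg) for arg in command))
```

Each printed line can be run as is, or passed to `subprocess.run(command, check=True)` to launch the runs one after another.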

Generation

python generate.py --model_type=gpt2 --model_name_or_path=SAVED_MODEL --num_samples NS --input_file=TEST_FILE --top_p TOP_P --temperature TEMP --output_file=OUTPUT_FILE --max_turn 15

model_name_or_path : Path of the saved model.

num_samples : Number of samples; 1, or 5 for reranking.

top_p : Nucleus sampling threshold; 0.2 to 0.5.

temperature : Sampling temperature; 0.7 to 1.5.

input_file : Path to input file.

output_file : Path to save results.
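To make the `top_p` and `temperature` options concrete, here is a minimal, dependency-free sketch of temperature scaling followed by nucleus (top-p) sampling over a toy vocabulary. It illustrates the general technique only and is not the code in generate.py; the function names and the toy logits are made up for the example.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature flattens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose total mass reaches top_p.

    Returns a dict mapping token index to renormalized probability.
    """
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_token(logits, top_p=0.5, temperature=1.0, rng=random):
    """Sample one token index from the temperature-scaled, top-p-truncated distribution."""
    dist = top_p_filter(softmax(logits, temperature), top_p)
    tokens = list(dist)
    weights = [dist[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


toy_logits = [2.0, 1.0, 0.1]  # made-up scores for a three-token vocabulary
print(sample_token(toy_logits, top_p=0.5, temperature=1.0))
```

A small `top_p` keeps only the few most likely tokens, which makes responses close to deterministic; raising `temperature` spreads probability mass and lets more tokens enter the nucleus, which is why more diverse settings pair naturally with `num_samples` greater than 1 for reranking.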

Interaction

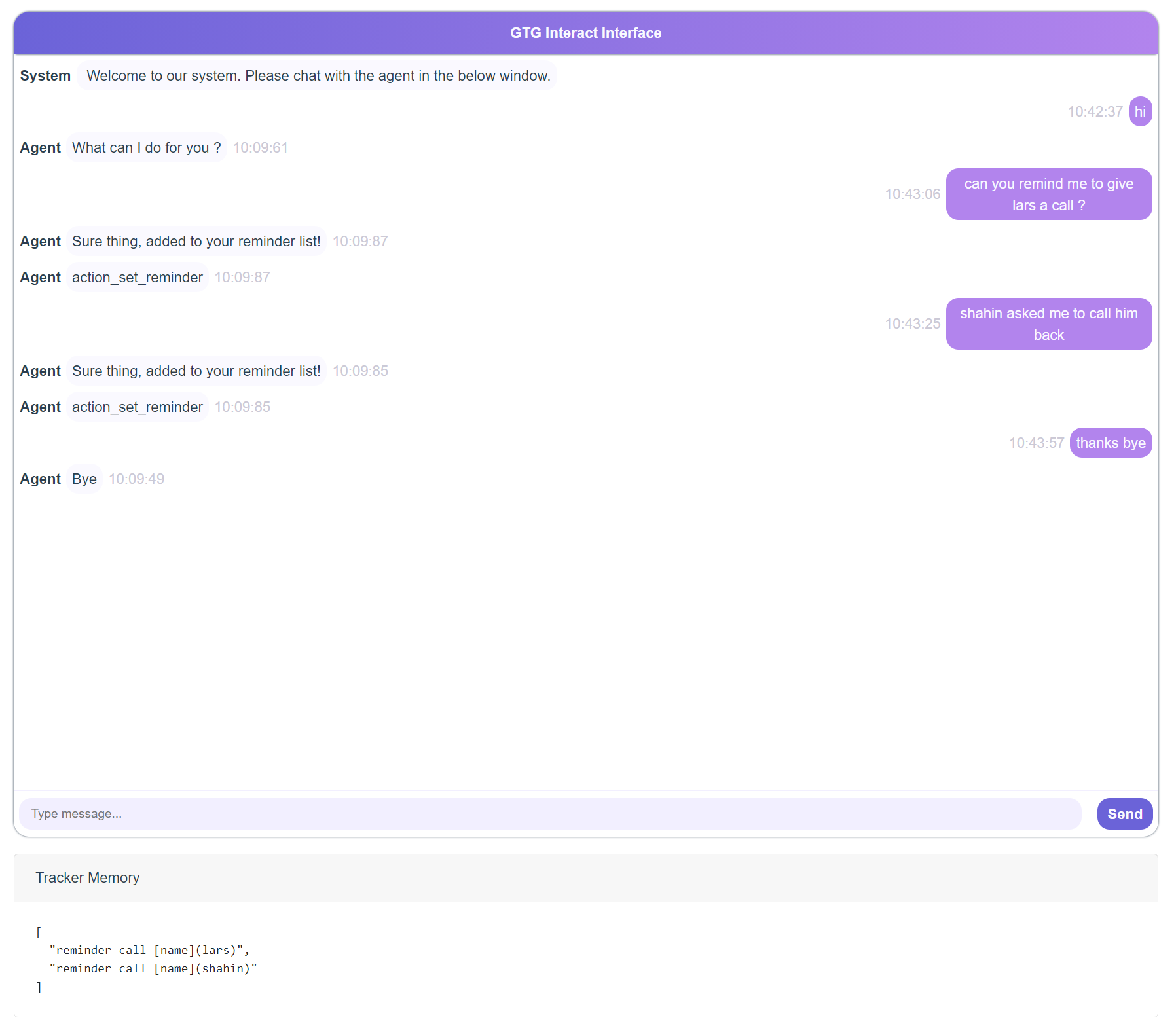

We provide a demo interface for chatting with fine-tuned models. The backend server is based on Flask, and the interface is based on Vue, bootstrap-vue, and BasicVueChat.

Start the backend server:

python EXAMPLE_server.py # start the server and expose port 8081; write your own server code following examples/reminderbot/reminderbot_server.py

Start serving the frontend page:

npm install

npm run serve

Open localhost:8080 to see the chat page. Note that the backend port must match the port used in html/compoents/chat.vue.

In principle, any dialog-related or domain-specific corpus, such as dialog logs from the insurance or banking domain, can be used to pre-train SOLOIST. For simplicity, this repo showcases pre-training with publicly available corpora. Incorporating other datasets is straightforward.

sh scripts/pretrain_preprocessing.sh

sh scripts/soloist_pretrain.sh

With 8 V100 GPUs, checkpoints at steps 300k to 500k demonstrate good transfer learning capability.

With our framework, it is not necessary to explicitly define and annotate user intents and system policy. If logged dialog sessions are available, the only annotation required is the dialog state. Otherwise, training examples can be obtained with the following steps: 1) the user drafts several rules/templates as seeds; 2) a tree traversal algorithm generates seed dialogs from them; 3) optionally, our previous work SC-GPT is used to augment the training corpus (related papers can be found here and here).
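Steps 1 and 2 above can be sketched as follows. This is a minimal illustration under stated assumptions, not the repository's generator: the dialog flow `FLOW`, its follow-up templates, the functions `walk` and `generate_seed_examples`, and the choice to append the system reply verbatim to the history are all hypothetical. Only the first turn and the output fields are taken from the data format shown earlier.

```python
# Hypothetical dialog flow: each node is one turn template; children are
# the possible next turns. Only the root turn comes from the README example.
FLOW = {
    "user": "later I have to call {name}",
    "belief": "belief : name = {name}",
    "reply": "system : Sure, added to your reminder list. FOLLOWUP action_set_reminder",
    "children": [
        {
            "user": "thanks, that is all",  # invented template
            "belief": "belief : name = {name}",
            "reply": "system : You are welcome.",  # invented template
            "children": [],
        },
        {
            "user": "forget all my reminders",  # invented template
            "belief": "belief : name = {name}",
            "reply": "system : Okay, I removed all reminders.",  # invented template
            "children": [],
        },
    ],
}


def walk(node, history, slots):
    """Depth-first traversal that yields one training example per visited turn."""
    user = "user : " + node["user"].format(**slots)
    turn_history = history + [user]
    reply = node["reply"].format(**slots)
    yield {
        "history": turn_history,
        "belief": node["belief"].format(**slots),
        "kb": "",
        "reply": reply,
    }
    for child in node["children"]:
        # Assumption: the system reply is appended to the history verbatim.
        yield from walk(child, turn_history + [reply], slots)


def generate_seed_examples(flow, slot_values):
    """Expand the flow once per slot assignment and collect all examples."""
    examples = []
    for slots in slot_values:
        examples.extend(walk(flow, [], slots))
    return examples


examples = generate_seed_examples(FLOW, [{"name": "Jessie"}, {"name": "Paul"}])
print(len(examples), "seed examples")
```

Each root-to-leaf path in the tree is one seed dialog, and every turn along the path becomes one training example whose `history` holds the turns before it. Adding slot values or branches multiplies the number of examples without any manual annotation.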

In this tutorial, you will build a simple assistant that you can chat with to add call reminders or remove all reminders. The example is adapted from the RASA Reminderbot.

We use rules and tree traversal to generate 34 examples as the training set.

#under examples/reminderbot

python convert_rasa.py

Fine-tune with the pre-trained SOLOIST model

#under soloist folder

sh script/train_reminderbot.sh

Interact with the model trained above

#under examples/reminderbot

python reminderbot_server.py

#under soloist/html

npm install

npm run serve

A demo video of chatting with the reminder bot is available here.

In this tutorial, you will build a simple knowledge base query bot. The example is adapted from RASA knowledgebasebot.

The procedure is similar to how you build reminderbot.

python convert.py

Fine-tune with the pre-trained SOLOIST model

sh train_kbbot.sh

Interact with the model trained above

#under examples/reminderbot

python reminderbot_server.py

#under soloist/html

npm run serve

Check here for details.

This repository aims to facilitate research on a paradigm shift in building task bots at scale. This toolkit contains only part of the modeling machinery needed to actually produce a model weight file in a running dialog. On its own, this model provides only information about the weights of various text spans; in order for a researcher to actually use it, they will need to bring in-house conversational data of their own for further pre-training and decode the response generation from the pre-trained/fine-tuned system. Microsoft is not responsible for any generation from third-party use of the pre-trained system.

If you use this code and data in your research, please cite our arXiv paper:

@article{peng2020soloist,

title={SOLOIST: Building Task Bots at Scale with Transfer Learning and Machine Teaching},

author={Peng, Baolin and Li, Chunyuan and Li, Jinchao and Shayandeh, Shahin and Liden, Lars and Gao, Jianfeng},

journal={arXiv preprint arXiv:2005.05298},

year={2020}

}