20210625 Installing the latest PyTorch 1.9 failed with "ERROR: Unexpected segmentation fault encountered in worker", possibly a data-loading problem. Switching to PyTorch 1.7 fixed it.

20201126 The forward() function of a torch.nn.Module must not be decorated with @staticmethod; otherwise the call fails with: forward() missing 1 required positional argument: 'x'
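One plausible way to hit this error, assuming the decorated method still declares a `self` parameter, can be reproduced without PyTorch. The sketch below uses a plain-Python stand-in (`TinyModule` is hypothetical, not PyTorch code) that, like `torch.nn.Module`, routes a call on the object to `self.forward`.

```python
class TinyModule:
    """Hypothetical stand-in for torch.nn.Module: calling the object runs forward()."""

    def __call__(self, *args):
        return self.forward(*args)


class BrokenNet(TinyModule):
    @staticmethod  # no instance is bound, so `self` receives the input instead
    def forward(self, x):
        return x * 2


class FixedNet(TinyModule):
    def forward(self, x):  # regular instance method
        return x * 2


def call_and_report(net, value):
    """Return net(value), or the TypeError message if the call fails."""
    try:
        return net(value)
    except TypeError as err:
        return str(err)


broken_result = call_and_report(BrokenNet(), 3)
fixed_result = call_and_report(FixedNet(), 3)
print(broken_result)
print(fixed_result)
```

Removing the decorator (as in `FixedNet`) lets Python bind the instance to `self` again, so the input lands in `x`.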

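20201112 Migrated the code from Python 2 to Python 3 and moved to PyTorch 1.7. Changes: (1) converted the Python code directly with Python's built-in 2to3 tool; (2) the way a torch.autograd.Function is constructed changed in newer PyTorch versions, so the MyTripletLossFunc class in torch_functions.py was modified accordingly.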
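For reference, the new-style `torch.autograd.Function` interface uses static `forward` and `backward` methods that receive a context object, and the function is invoked through `.apply`. The sketch below shows that pattern on a toy squaring function; `SquareFunc` is a hypothetical example and not the `MyTripletLossFunc` from this repository.

```python
import torch


class SquareFunc(torch.autograd.Function):
    """Toy new-style autograd function computing y = x * x."""

    @staticmethod
    def forward(ctx, x):
        # Save the input on the context object for use in backward().
        ctx.save_for_backward(x)
        return x * x

    @staticmethod
    def backward(ctx, grad_output):
        (x,) = ctx.saved_tensors
        # d(x^2)/dx = 2x, chained with the incoming gradient.
        return 2 * x * grad_output


x = torch.tensor([3.0], requires_grad=True)
y = SquareFunc.apply(x)  # new style: call .apply, do not instantiate the class
y.sum().backward()
print(y.item(), x.grad.item())
```

Note that `@staticmethod` is required here, in contrast to `forward()` on a `torch.nn.Module`.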

This is the code for the paper:

Composing Text and Image for Image Retrieval - An Empirical Odyssey

Nam Vo, Lu Jiang, Chen Sun, Kevin Murphy, Li-Jia Li, Li Fei-Fei, James Hays

CVPR 2019.

Please note that this is not an officially supported Google product. This repository is a reproduction, not the original code.

If you find this code useful in your research, please cite:

    @inproceedings{vo2019composing,
      title={Composing Text and Image for Image Retrieval-An Empirical Odyssey},
      author={Vo, Nam and Jiang, Lu and Sun, Chen and Murphy, Kevin and Li, Li-Jia and Fei-Fei, Li and Hays, James},
      booktitle={CVPR},
      year={2019}
    }

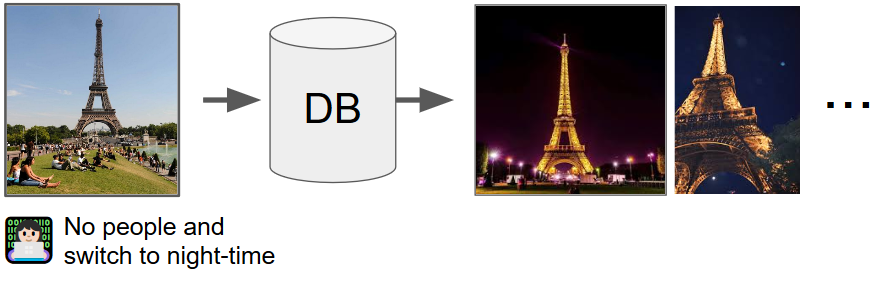

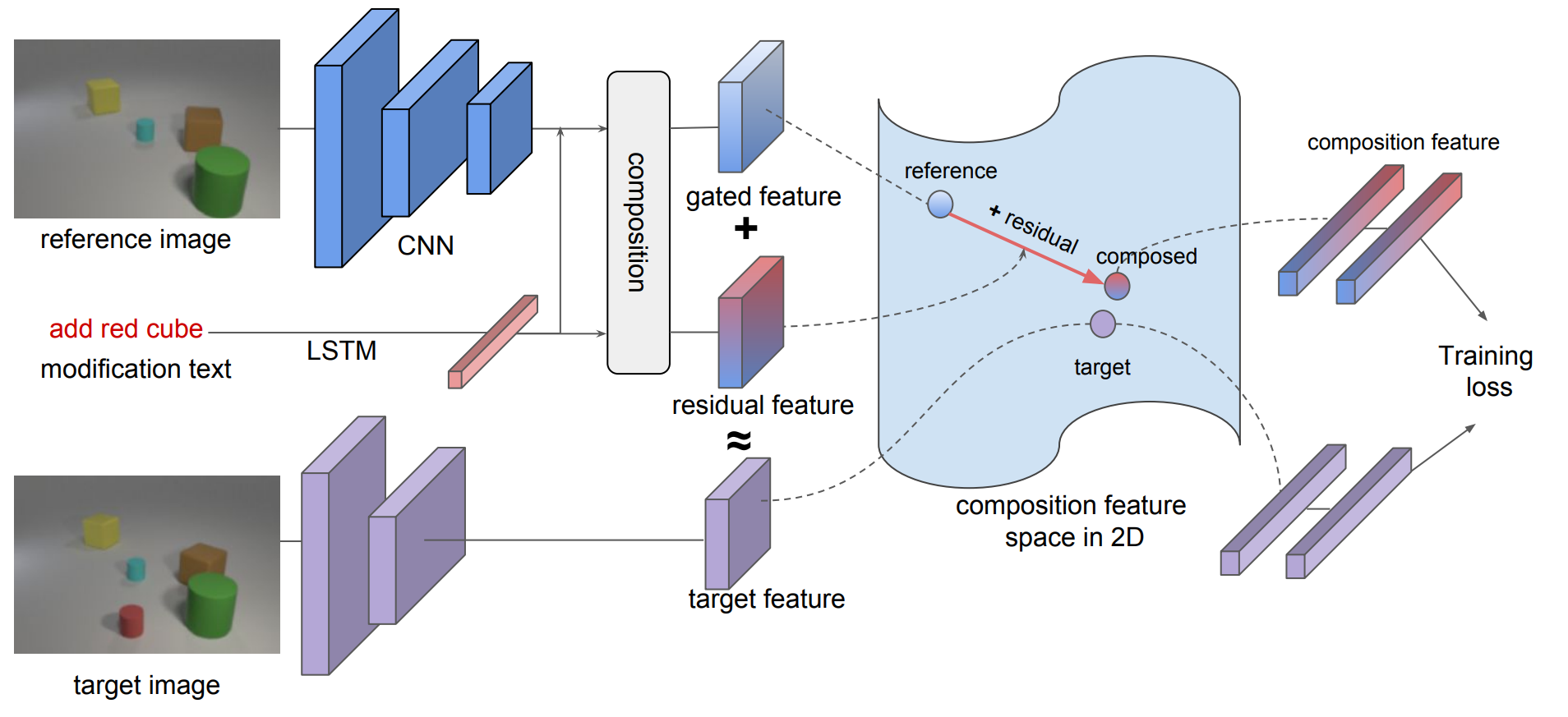

In this paper, we study the task of image retrieval, where the input query is specified in the form of an image plus some text that describes desired modifications to the input image.

We propose a new way to combine image and text for the retrieval task using the TIRG function, and show that it outperforms existing approaches on different datasets.
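For orientation, the TIRG composition described in the paper has a gated residual form. The notation below is a paraphrase, with $\phi_x$ the image feature, $\phi_t$ the text feature, $w_g, w_r$ learnable scalar weights, and $W_{\cdot}$ learned linear or convolutional maps; please refer to the paper for the exact definitions.

```latex
\phi_{xt} = w_g \, f_{\mathrm{gate}}(\phi_x, \phi_t) + w_r \, f_{\mathrm{res}}(\phi_x, \phi_t)

f_{\mathrm{gate}}(\phi_x, \phi_t) = \sigma\!\big( W_{g2} * \mathrm{ReLU}( W_{g1} * [\phi_x, \phi_t] ) \big) \odot \phi_x

f_{\mathrm{res}}(\phi_x, \phi_t) = W_{r2} * \mathrm{ReLU}( W_{r1} * [\phi_x, \phi_t] )
```

Here $\sigma$ is the sigmoid, $\odot$ is the element-wise product, and $[\phi_x, \phi_t]$ is the concatenation of the two features.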

Required packages:

- torchvision

- pytorch

- numpy

- tqdm

- tensorboardX

- main.py: driver script to run training/testing
- datasets.py: Dataset classes for loading images and generating training retrieval queries
- text_model.py: LSTM model to extract text features
- img_text_composition_models.py: various image-text composition models (described in the paper)
- torch_functions.py: contains the soft triplet loss function and the feature normalization function
- test_retrieval.py: functions to perform the retrieval test and compute recall performance

Download the CSS dataset from this external website.

Make sure the dataset includes these files:

    <dataset_path>/css_toy_dataset_novel2_small.dup.npy
    <dataset_path>/images/*.png
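Before starting a long training run it can help to verify this layout. The sketch below is one way to do so with the standard library; `missing_css_files` is a hypothetical helper (not part of this repository), and `./CSSDataset` is just the path used in the commands in this README.

```python
from pathlib import Path


def missing_css_files(dataset_path):
    """Return the expected CSS dataset entries that are absent.

    Hypothetical helper; the expected layout is the one listed in the README:
      <dataset_path>/css_toy_dataset_novel2_small.dup.npy
      <dataset_path>/images/*.png
    """
    root = Path(dataset_path)
    missing = []
    if not (root / "css_toy_dataset_novel2_small.dup.npy").is_file():
        missing.append("css_toy_dataset_novel2_small.dup.npy")
    images = root / "images"
    # At least one PNG must exist directly under images/.
    if not images.is_dir() or not any(images.glob("*.png")):
        missing.append("images/*.png")
    return missing


problems = missing_css_files("./CSSDataset")
print("dataset looks complete" if not problems else f"missing: {problems}")
```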

To run our training & testing:

    python main.py --dataset=css3d --dataset_path=./CSSDataset --num_iters=160000 \
      --model=tirg --loss=soft_triplet --comment=css3d_tirg
    python main.py --dataset=css3d --dataset_path=./CSSDataset --num_iters=160000 \
      --model=tirg_lastconv --loss=soft_triplet --comment=css3d_tirgconv

The first command applies TIRG to the fully connected layer and the second applies it to the last conv layer. To run the baseline:

    python main.py --dataset=css3d --dataset_path=./CSSDataset --num_iters=160000 \
      --model=concat --loss=soft_triplet --comment=css3d_concat

Download the MITStates dataset from this external website.

Make sure the dataset includes these files:

    <dataset_path>/images/<adj noun>/*.jpg

For training & testing:

    python main.py --dataset=mitstates --dataset_path=./mitstates \
      --num_iters=160000 --model=concat --loss=soft_triplet \
      --learning_rate_decay_frequency=50000 --weight_decay=5e-5 \
      --comment=mitstates_concat
    python main.py --dataset=mitstates --dataset_path=./mitstates \
      --num_iters=160000 --model=tirg --loss=soft_triplet \
      --learning_rate_decay_frequency=50000 --weight_decay=5e-5 \
      --comment=mitstates_tirg

Download the Fashion200k dataset from this external website. Download our generated test_queries.txt from here.

Make sure the dataset includes these files:

    <dataset_path>/labels/*.txt
    <dataset_path>/women/<category>/<caption>/<id>/*.jpeg
    <dataset_path>/test_queries.txt

Run training & testing:

    python main.py --dataset=fashion200k --dataset_path=./Fashion200k \
      --num_iters=160000 --model=concat --loss=batch_based_classification \
      --learning_rate_decay_frequency=50000 --comment=f200k_concat
    python main.py --dataset=fashion200k --dataset_path=./Fashion200k \
      --num_iters=160000 --model=tirg --loss=batch_based_classification \
      --learning_rate_decay_frequency=50000 --comment=f200k_tirg

Our pretrained models can be downloaded below, together with the best single-model accuracy for each. The numbers differ slightly from those reported in the paper because this is a re-implementation.

- CSS Model: 0.760

- Fashion200k Model: 0.161

- MITStates Model: 0.132
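As a rough illustration of how a retrieval score of this kind can be computed, the sketch below calculates recall at rank k in plain Python. It assumes the numbers above are recall-style scores (the list does not name the metric), and `recall_at_k` is a hypothetical helper, not the code in test_retrieval.py. The toy data is invented for illustration only.

```python
def recall_at_k(ranked_labels, target_labels, k):
    """Fraction of queries whose target label appears among the top-k results.

    ranked_labels: one list per query, retrieved labels ordered best-first.
    target_labels: the correct label for each query.
    """
    if len(ranked_labels) != len(target_labels):
        raise ValueError("need exactly one target per query")
    if not target_labels:
        return 0.0
    hits = sum(
        1
        for ranked, target in zip(ranked_labels, target_labels)
        if target in ranked[:k]
    )
    return hits / len(target_labels)


# Toy retrieval results for three queries, all looking for "cat".
ranked = [["cat", "dog", "bird"], ["dog", "cat", "bird"], ["bird", "dog", "cat"]]
targets = ["cat", "cat", "cat"]
r1 = recall_at_k(ranked, targets, k=1)
r2 = recall_at_k(ranked, targets, k=2)
print(r1, r2)
```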

All log files will be saved at ./runs/<timestamp><comment>.
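To locate the newest run directory programmatically, something like the following sketch may be enough. `latest_run_dir` is a hypothetical helper; it sorts by modification time because the exact timestamp format in the directory name is not assumed, and it assumes only that the name ends with the `--comment` value.

```python
from pathlib import Path


def latest_run_dir(runs_root="./runs", comment=None):
    """Return the most recently modified run directory, or None if there is none.

    Hypothetical helper. Directory names are assumed to end with the --comment
    value, following the <timestamp><comment> pattern.
    """
    root = Path(runs_root)
    if not root.is_dir():
        return None
    candidates = [
        p
        for p in root.iterdir()
        if p.is_dir() and (comment is None or p.name.endswith(comment))
    ]
    # Modification time is used because the timestamp format is not assumed.
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)


print(latest_run_dir("./runs", comment="css3d_tirg"))
```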

Monitor with tensorboard (training loss, training retrieval performance, testing retrieval performance):

    tensorboard --logdir ./runs/ --port 8888

PyTorch's data loader can consume a lot of memory. If that is an issue, add --loader_num_workers=0 to disable parallel data loading.