iS-MAP: Neural Implicit Mapping and Positioning for Structural Environments (ACCV 2024) [Paper]

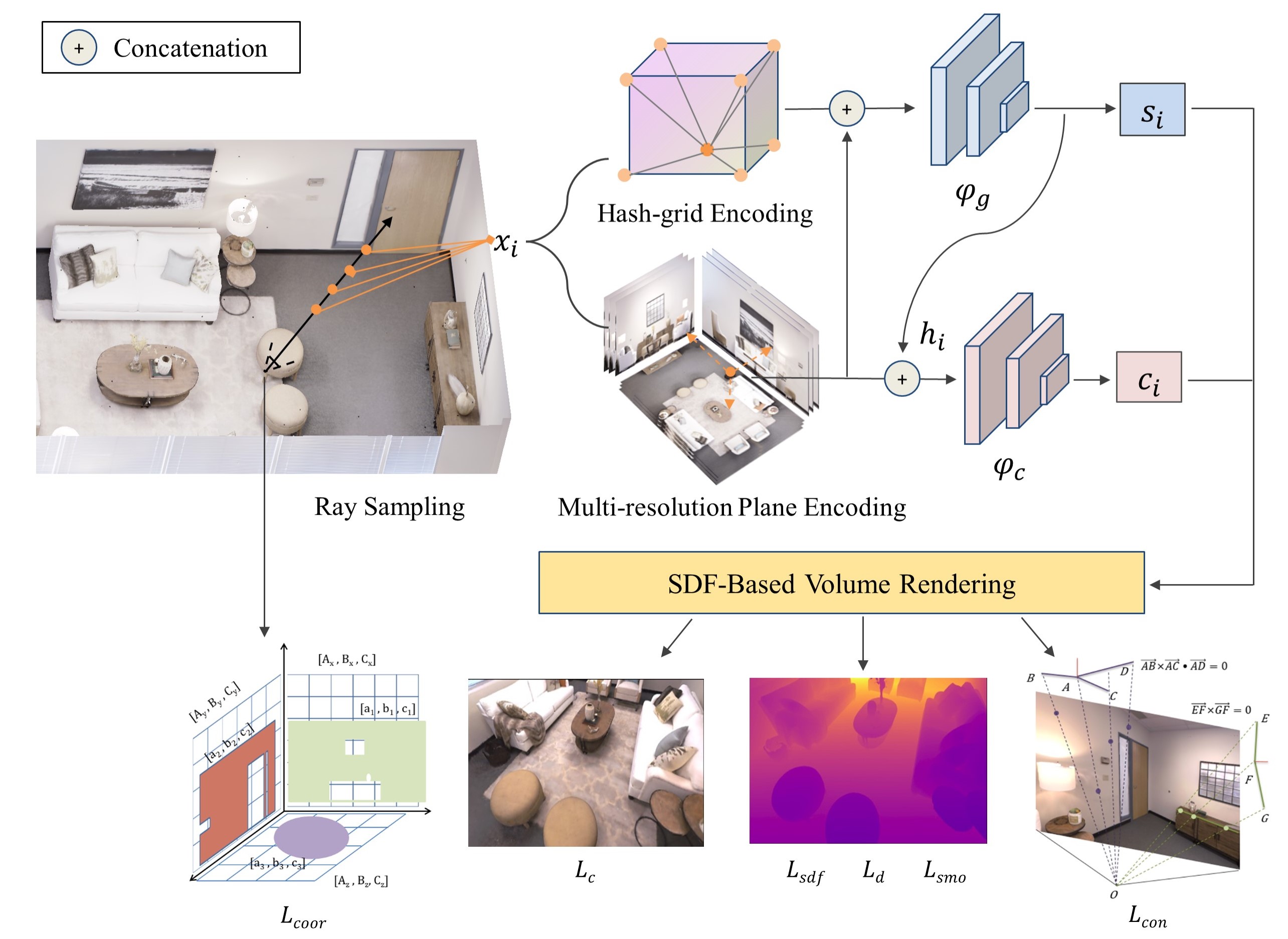

Overview of the system: we sample 3D points along the ray cast from each pixel, encode the sampled points with a hybrid representation that combines a hash grid and multi-scale feature planes, and decode the resulting features into TSDF values.
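The first two stages of the overview (sampling points on a pixel ray, then querying multi-scale feature planes) can be illustrated with a minimal NumPy sketch. It is not the iS-MAP implementation: it assumes an OpenCV-style pinhole camera, stratified depth sampling, and axis-aligned xy/xz/yz planes whose per-plane features are simply concatenated. The function names and the plane layout are hypothetical, and the hash-grid branch, the feature fusion, and the TSDF decoder are omitted.

```python
import numpy as np


def sample_points_on_ray(u, v, fx, fy, cx, cy, near, far, n_samples, rng=None):
    """Stratified sampling of 3D points along the camera ray through pixel (u, v).

    Assumes an OpenCV-style pinhole camera (x right, y down, z forward);
    the returned points are in camera coordinates.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Ray direction scaled so that its z component is 1, i.e. the ray parameter equals depth.
    direction = np.array([(u - cx) / fx, (v - cy) / fy, 1.0])
    # Split [near, far] into n_samples equal bins and draw one depth per bin.
    edges = np.linspace(near, far, n_samples + 1)
    depths = edges[:-1] + (edges[1:] - edges[:-1]) * rng.random(n_samples)
    return depths[:, None] * direction[None, :]  # shape (n_samples, 3)


def bilinear(plane, x, y):
    """Bilinearly interpolate an (H, W, C) feature plane at coordinates in [0, 1]."""
    h, w, _ = plane.shape
    px, py = x * (w - 1), y * (h - 1)
    x0 = np.clip(np.floor(px).astype(int), 0, w - 2)
    y0 = np.clip(np.floor(py).astype(int), 0, h - 2)
    tx, ty = (px - x0)[:, None], (py - y0)[:, None]
    return (plane[y0, x0] * (1 - tx) * (1 - ty)
            + plane[y0, x0 + 1] * tx * (1 - ty)
            + plane[y0 + 1, x0] * (1 - tx) * ty
            + plane[y0 + 1, x0 + 1] * tx * ty)


def encode_multiscale_planes(points, scales, bound_min, bound_max):
    """Query xy, xz and yz planes at every scale and concatenate the features.

    `scales` is a list of dicts {"xy": ..., "xz": ..., "yz": ...}, each an
    (H, W, C) array (a hypothetical layout chosen for this sketch).
    """
    # Normalize points into the unit cube spanned by the scene bounds.
    p = np.clip((points - bound_min) / (bound_max - bound_min), 0.0, 1.0)
    feats = []
    for planes in scales:
        feats.append(bilinear(planes["xy"], p[:, 0], p[:, 1]))
        feats.append(bilinear(planes["xz"], p[:, 0], p[:, 2]))
        feats.append(bilinear(planes["yz"], p[:, 1], p[:, 2]))
    return np.concatenate(feats, axis=-1)
```

The sampled points are in camera coordinates; in a full pipeline they would be transformed to world coordinates with the current camera pose before the feature lookup.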


You can create an Anaconda environment named ismap. Install libopenexr-dev before creating the environment:

sudo apt-get install libopenexr-dev

conda env create -f environment.yaml

conda activate ismap
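To confirm that the activated environment provides PyTorch with CUDA support, a small standard-library check can help. This is a hypothetical helper, not part of the repository; it assumes environment.yaml installs PyTorch under the module name torch. Once tiny-cuda-nn is built, its PyTorch bindings are importable as tinycudann and can be added to the list.

```python
import importlib.util

# Assumed module names: environment.yaml is expected to install PyTorch (`torch`).
# After building tiny-cuda-nn, "tinycudann" can be appended to this tuple.
REQUIRED_MODULES = ("torch",)


def missing_modules(names=REQUIRED_MODULES):
    """Return the names in `names` that cannot be located by the import system."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        import torch  # imported only once we know it is installed

        print("All modules found; CUDA available:", torch.cuda.is_available())
```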

Next, install tiny-cuda-nn, which provides the hash grid encoding. We recommend building it from source:

cd tiny-cuda-nn/bindings/torch

python setup.py install
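The hash grid in tiny-cuda-nn implements the multiresolution hash encoding of Instant-NGP. As background, the sketch below reproduces only its hashing step in NumPy: per-level resolutions N_l = floor(N_min * b^l) and the spatial hash h(x) = (x_1 * pi_1 XOR x_2 * pi_2 XOR x_3 * pi_3) mod T with the primes from the Instant-NGP paper. The parameter names mirror tiny-cuda-nn's HashGrid options (base_resolution, per_level_scale, n_levels), but the functions are hypothetical illustrations; trilinear interpolation, trainable feature tables, and the dense indexing used at coarse levels whose grid fits in the table are omitted.

```python
import numpy as np

# Per-dimension primes used by the Instant-NGP spatial hash (pi_1 = 1).
PRIMES = (1, 2654435761, 805459861)


def spatial_hash(coords, table_size):
    """Hash integer grid coordinates of shape (N, 3) into [0, table_size)."""
    coords = np.asarray(coords, dtype=np.uint64)
    h = np.zeros(coords.shape[0], dtype=np.uint64)
    for d, prime in enumerate(PRIMES):
        # Unsigned multiplication wraps around modulo 2**64, as intended.
        h ^= coords[:, d] * np.uint64(prime)
    return h % np.uint64(table_size)


def level_resolutions(base_resolution, per_level_scale, n_levels):
    """Grid resolution N_l = floor(N_min * b**l) for each level l."""
    return [int(np.floor(base_resolution * per_level_scale ** l)) for l in range(n_levels)]


def corner_hashes(x, resolution, table_size):
    """Hash-table indices of the 8 grid corners surrounding a point x in [0, 1]^3."""
    base = np.floor(np.asarray(x, dtype=np.float64) * resolution).astype(np.int64)
    offsets = np.array([[i, j, k] for i in (0, 1) for j in (0, 1) for k in (0, 1)])
    return spatial_hash(base + offsets, table_size)
```

tiny-cuda-nn evaluates the hash with 32-bit arithmetic; for power-of-two table sizes (2^log2_hashmap_size) the low bits, and therefore the indices, agree with this 64-bit version.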

Download the Replica dataset with the script below; the data is saved to the ./Datasets/Replica folder.

bash scripts/download_replica.sh
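After the download finishes, a quick consistency check can catch incomplete scenes. The sketch below assumes the Replica layout distributed with NICE-SLAM (results/frame*.jpg, results/depth*.png, and a traj.txt with one flattened 4x4 camera-to-world matrix per line); this layout and the helper names are assumptions, so adjust the patterns if your copy differs.

```python
import glob
import os

import numpy as np


def load_poses(traj_path):
    """Read a traj.txt file with one flattened 4x4 camera-to-world matrix per line."""
    poses = []
    with open(traj_path) as f:
        for line in f:
            if line.strip():
                values = [float(v) for v in line.split()]
                poses.append(np.array(values).reshape(4, 4))
    return poses


def check_replica_scene(scene_dir):
    """Count RGB frames, depth frames and poses for one scene; raise on mismatch.

    Assumed layout: <scene>/results/frame*.jpg, <scene>/results/depth*.png,
    and <scene>/traj.txt.
    """
    colors = sorted(glob.glob(os.path.join(scene_dir, "results", "frame*.jpg")))
    depths = sorted(glob.glob(os.path.join(scene_dir, "results", "depth*.png")))
    poses = load_poses(os.path.join(scene_dir, "traj.txt"))
    if not (len(colors) == len(depths) == len(poses)):
        raise ValueError(
            f"mismatch: {len(colors)} rgb, {len(depths)} depth, {len(poses)} poses"
        )
    return len(colors)
```

For example, `check_replica_scene("./Datasets/Replica/room0")` returns the number of frames when the three counts agree.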

Before running iS-MAP, you need to generate line masks with the Line Segment Detector (LSD). We provide a simple preprocessing script that performs LSD segmentation. For example, for the room0 scene, after downloading the Replica dataset, run:

python preprocess_line.py

The line mask images are written to a folder named ./line_seg under the root path of the dataset.
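Turning detected segments into a mask image is essentially rasterization. The NumPy sketch below draws segments given as (x1, y1, x2, y2) into a binary uint8 mask; the segments could come, for instance, from the first element returned by OpenCV's LineSegmentDetector `detect` method (shape (N, 1, 4), or None when nothing is found). It is a hypothetical helper, not preprocess_line.py: the mask value 255, the min_length filter, and the one-pixel line width are all assumptions.

```python
import numpy as np


def rasterize_segments(segments, height, width, min_length=0.0, value=255):
    """Draw line segments (x1, y1, x2, y2) into a single-channel uint8 mask.

    Segments shorter than `min_length` pixels are skipped (a hypothetical
    filter, since very short detections are often noise).
    """
    mask = np.zeros((height, width), dtype=np.uint8)
    if segments is None:  # e.g. no lines detected in the image
        return mask
    for x1, y1, x2, y2 in np.asarray(segments, dtype=np.float64).reshape(-1, 4):
        if np.hypot(x2 - x1, y2 - y1) < min_length:
            continue
        # One sample per pixel step along the longer axis, rounded to pixel centers.
        n = int(np.ceil(max(abs(x2 - x1), abs(y2 - y1)))) + 1
        xs = np.rint(np.linspace(x1, x2, n)).astype(int)
        ys = np.rint(np.linspace(y1, y2, n)).astype(int)
        # Drop samples that fall outside the image instead of clamping them.
        keep = (xs >= 0) & (xs < width) & (ys >= 0) & (ys < height)
        mask[ys[keep], xs[keep]] = value
    return mask
```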

Alternatively, we recommend directly downloading the preprocessed dataset, which already includes the ./line_seg folder, here.

After downloading the data to the ./Datasets folder, you can run iS-MAP:

python -W ignore run.py configs/Replica/room0.yaml

The rendered images and reconstructed meshes are saved in $OUTPUT_FOLDER/mapping_vis and $OUTPUT_FOLDER/mesh, respectively. final_mesh_eval_rec_culled.ply is the final mesh with unseen and occluded regions culled.
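Culling unseen and occluded regions amounts to a visibility test against the input views. The sketch below marks a vertex as observed if, in at least one frame, it lies in front of the camera, projects inside the image, and is not behind the recorded depth by more than a tolerance; faces are then kept only if all three vertices are observed. It is a simplified illustration rather than the repository's culling code: OpenCV-style camera-to-world poses, a shared intrinsic matrix K, the 5 cm default tolerance, and the face rule are assumptions.

```python
import numpy as np


def visible_vertex_mask(vertices, c2w_poses, depth_maps, K, depth_tol=0.05):
    """Return a boolean mask of mesh vertices observed by at least one camera.

    A vertex counts as observed in a frame if it lies in front of the camera,
    projects inside the image, and is not behind the observed depth by more
    than `depth_tol` (same units as the depth maps).
    """
    vertices = np.asarray(vertices, dtype=np.float64)
    homo = np.hstack([vertices, np.ones((len(vertices), 1))])
    seen = np.zeros(len(vertices), dtype=bool)
    for c2w, depth in zip(c2w_poses, depth_maps):
        h, w = depth.shape
        cam = (homo @ np.linalg.inv(c2w).T)[:, :3]  # world -> camera coordinates
        z = cam[:, 2]
        in_front = z > 1e-6
        z_safe = np.where(in_front, z, 1.0)  # avoid dividing by ~0 or negative depth
        u = np.rint(K[0, 0] * cam[:, 0] / z_safe + K[0, 2]).astype(int)
        v = np.rint(K[1, 1] * cam[:, 1] / z_safe + K[1, 2]).astype(int)
        in_image = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
        observed = np.zeros(len(vertices))
        observed[in_image] = depth[v[in_image], u[in_image]]
        # Occlusion test: the vertex must not lie far behind the observed surface.
        not_occluded = z <= observed + depth_tol
        seen |= in_image & (observed > 0) & not_occluded
    return seen


def cull_faces(faces, seen):
    """Keep only faces whose three vertices were all observed (one possible rule)."""
    faces = np.asarray(faces)
    return faces[seen[faces].all(axis=1)]
```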

To evaluate the absolute trajectory error (ATE), run the command below with the corresponding config file:

python src/tools/eval_ate.py configs/Replica/room0.yaml
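For reference, ATE is usually reported as the RMSE of camera positions after aligning the estimated trajectory to the ground truth. The NumPy sketch below uses a rigid Kabsch/Umeyama alignment without scale, which suits RGB-D tracking; it assumes both trajectories are (N, 3) arrays of already time-associated camera positions and is not the code in eval_ate.py, whose exact alignment may differ.

```python
import numpy as np


def align_rigid(est, gt):
    """Rotation R and translation t minimizing sum ||R @ est_i + t - gt_i||^2.

    Kabsch/Umeyama alignment without scale; est and gt are (N, 3) positions.
    """
    mu_est, mu_gt = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_est).T @ (gt - mu_gt)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_gt - R @ mu_est
    return R, t


def ate_rmse(est, gt):
    """Root-mean-square absolute trajectory error after rigid alignment."""
    est, gt = np.asarray(est, dtype=np.float64), np.asarray(gt, dtype=np.float64)
    R, t = align_rigid(est, gt)
    errors = np.linalg.norm(est @ R.T + t - gt, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))
```

The positions are the translation columns of the 4x4 camera-to-world poses; a monocular pipeline would additionally estimate a global scale during alignment.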

For reconstruction evaluation, we follow the protocol of Co-SLAM: we remove unseen areas and add some virtual camera positions to balance accuracy against the ability to predict unobserved regions. For the detailed evaluation code, please refer here.
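Reconstruction quality in this line of work is commonly summarized by accuracy, completion, and completion ratio, computed from points sampled on the predicted and ground-truth meshes. The sketch below computes these with brute-force nearest neighbours; it assumes both inputs are (N, 3) point arrays in metres and a 5 cm completion-ratio threshold. It does not reproduce Co-SLAM's culling or virtual-camera steps, and a KD-tree would replace the brute-force search for realistic point counts.

```python
import numpy as np


def nn_distances(src, dst, chunk=1024):
    """Distance from each point in src to its nearest neighbour in dst (brute force)."""
    out = np.empty(len(src))
    for i in range(0, len(src), chunk):
        # Pairwise distances for one chunk of source points: (chunk, len(dst)).
        d = np.linalg.norm(src[i:i + chunk, None, :] - dst[None, :, :], axis=-1)
        out[i:i + chunk] = d.min(axis=1)
    return out


def recon_metrics(pred_pts, gt_pts, threshold=0.05):
    """Accuracy, completion and completion ratio between two point sets."""
    pred_pts = np.asarray(pred_pts, dtype=np.float64)
    gt_pts = np.asarray(gt_pts, dtype=np.float64)
    accuracy = nn_distances(pred_pts, gt_pts).mean()  # predicted -> ground truth
    dist_gt = nn_distances(gt_pts, pred_pts)  # ground truth -> predicted
    return {
        "accuracy": float(accuracy),
        "completion": float(dist_gt.mean()),
        "completion_ratio": float((dist_gt < threshold).mean()),
    }
```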

We thank the authors of the following open-source repositories: ESLAM, Co-SLAM, and NICE-SLAM.

If you find our work useful, please consider citing:

@inproceedings{wang2024map,
  title={iS-MAP: Neural Implicit Mapping and Positioning for Structural Environments},
  author={Wang, Haocheng and Cao, Yanlong and Shou, Yejun and Shen, Lingfeng and Wei, Xiaoyao and Xu, Zhijie and Ren, Kai},
  booktitle={Proceedings of the Asian Conference on Computer Vision},
  pages={747--763},
  year={2024}
}