Paper, Arxiv, Project Page, CoRL 2024

Xiaogang Jia*12, Qian Wang*1, Atalay Donat1, Bowen Xing1, Ge Li1, Hongyi Zhou2, Onur Celik1, Denis Blessing1, Rudolf Lioutikov2, Gerhard Neumann1

1Autonomous Learning Robots, Karlsruhe Institute of Technology

2Intuitive Robots Lab, Karlsruhe Institute of Technology

* indicates equal contribution

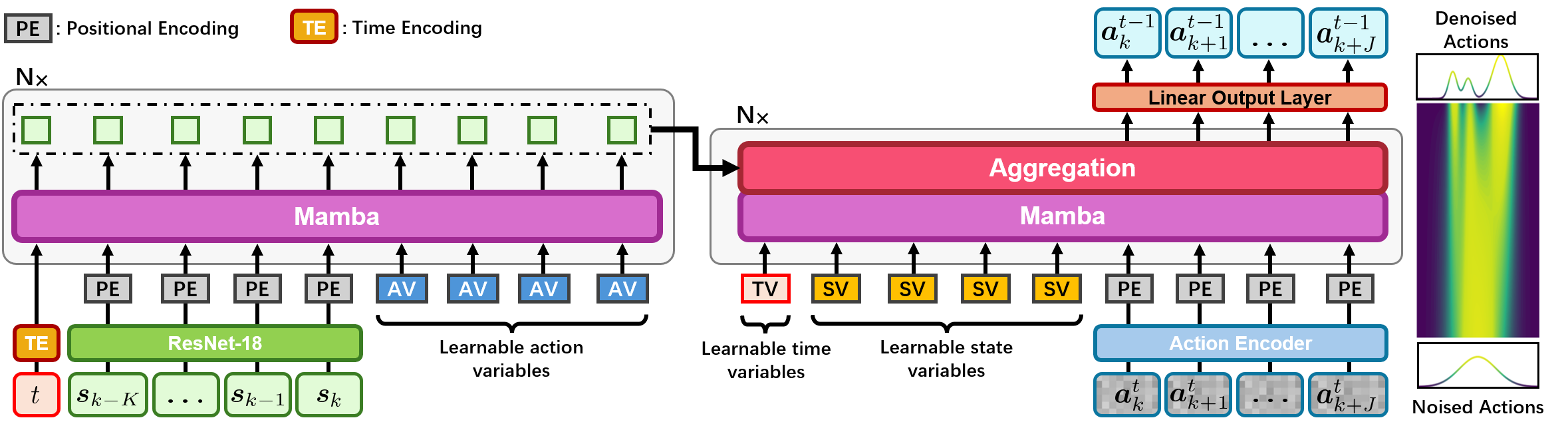

This repository contains the MaIL codebase, including implementations of Decoder-only Mamba and Encoder-Decoder Mamba for behavior cloning (BC) and DDPM models. Highlights of MaIL:

- MaIL outperforms Transformer-based models on the LIBERO benchmark when trained with 20% of the data.

- MaIL can be used as a standalone policy or as a part of advanced methods like diffusion.

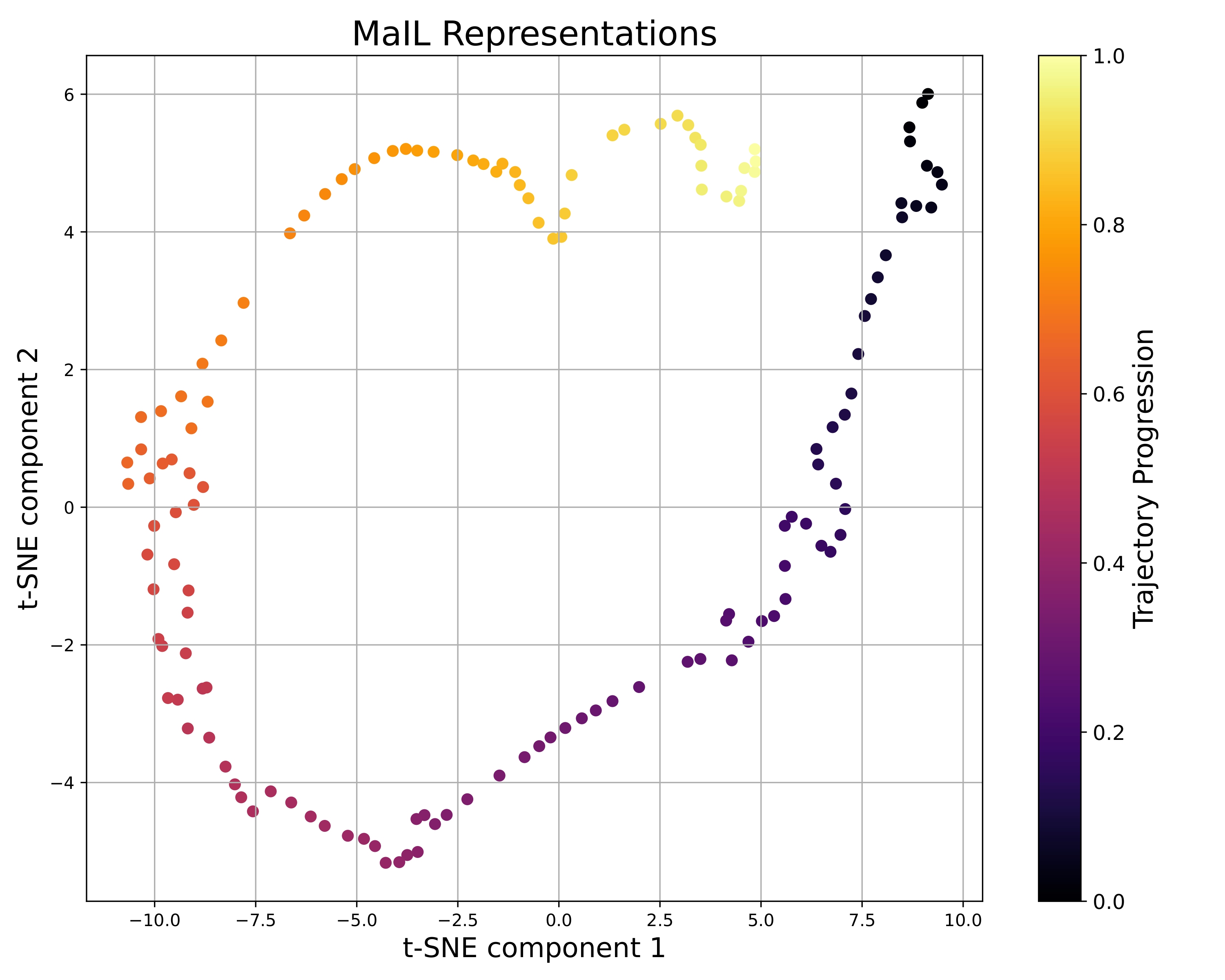

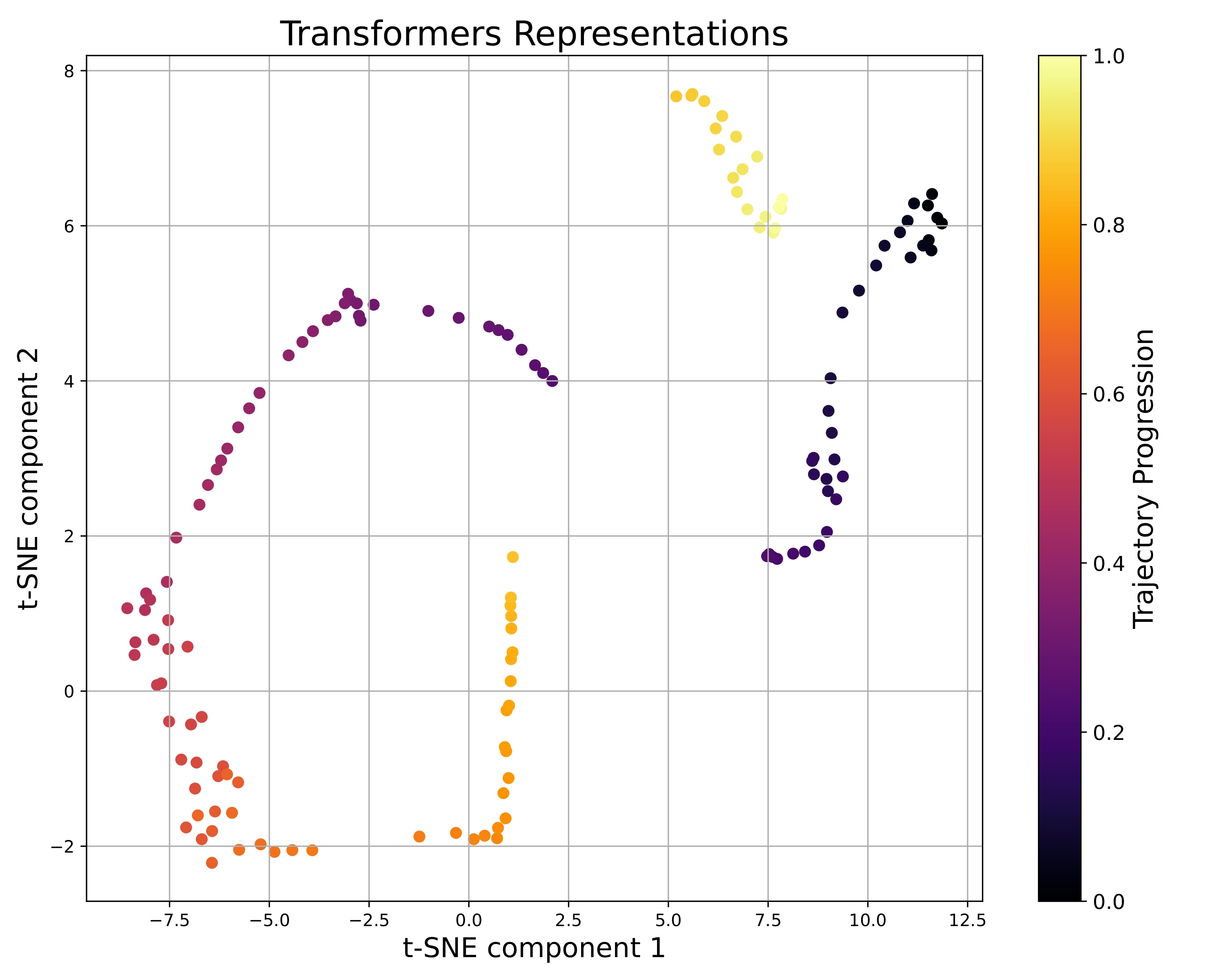

- MaIL has a much more structured latent space than Transformer-based models.
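To make the distinction between the standalone (BC) use and the diffusion (DDPM) use concrete, the two training objectives can be sketched as below. This is a generic textbook formulation, not taken from this repository: the mean-squared-error form of the BC loss, the observation symbol $o$, the policy $\pi_\theta$, the noise predictor $\epsilon_\theta$, and the noise schedule $\bar\alpha_t$ are all assumptions introduced for illustration, and the actual losses in the code may differ.

```latex
% BC: regress the demonstrated action a directly from the observation o (assumed MSE loss)
\mathcal{L}_{\mathrm{BC}}(\theta)
  = \mathbb{E}_{(o,a)\sim\mathcal{D}}
    \left[ \lVert a - \pi_\theta(o) \rVert_2^2 \right]

% DDPM: predict the noise \epsilon that was added to the clean action a_0 at diffusion step t
\mathcal{L}_{\mathrm{DDPM}}(\theta)
  = \mathbb{E}_{(o,a_0)\sim\mathcal{D},\; t,\; \epsilon\sim\mathcal{N}(0,I)}
    \left[ \left\lVert \epsilon - \epsilon_\theta\!\left(
      \sqrt{\bar\alpha_t}\,a_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\; o,\; t
    \right) \right\rVert_2^2 \right]
```

In both cases the same sequence backbone (here, Mamba) can play the role of $\pi_\theta$ or $\epsilon_\theta$; only the prediction target and the sampling procedure change.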

To begin, clone this repository locally and install the dependencies:

git clone git@github.com:ALRhub/MaIL.git

conda create -n mail python=3.8

conda activate mail

# adapt the index URL to your own CUDA version if needed

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

pip install -r requirements.txt

git clone https://github.com/Lifelong-Robot-Learning/LIBERO.git

cd LIBERO

pip install -e .

pip install mamba-ssm==1.2.0.post1
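After installation, a quick sanity check can confirm that the main dependencies are visible to the active `mail` environment. The following is a minimal sketch, not part of the repository: the helper `find_missing` is hypothetical, and the import names in `REQUIRED_MODULES` are assumed to correspond to the packages installed above.

```python
import importlib.util

# Import names assumed to match the packages installed above:
# torch / torchvision / torchaudio (PyTorch wheels),
# libero (LIBERO editable install), mamba_ssm (mamba-ssm).
REQUIRED_MODULES = ["torch", "torchvision", "torchaudio", "libero", "mamba_ssm"]


def find_missing(modules):
    """Return the module names that cannot be located in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

The check only locates the modules and does not import them, so it does not verify that the installed CUDA build matches your GPU driver.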

MaIL

└── agents # model implementation

└── models

...

└── configs # task configs and model hyper parameters

└── environments

...

└── dataset # data saving folder and data process

└── data

...

└── scripts # running scripts and hyper parameters

└── aligning

└── stacking

...

└── task_embeddings # language embeddings

└── simulation # task simulation

...

Train Decoder-only Mamba with BC on the LIBERO-Spatial and LIBERO-Object tasks using 3 seeds:

bash scripts/bc/libero_so_bc_mamba_dec.sh

Train Encoder-Decoder Mamba with DDPM on the LIBERO-Spatial and LIBERO-Object tasks using 3 seeds:

bash scripts/3seed/libero_so_mamba_encdec.sh
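To run both experiments back to back, the two commands above can be wrapped in a small launcher. This is a sketch under assumptions: the helper `run_scripts` and its `dry_run` flag are hypothetical and not part of the repository, and the launcher is assumed to be started from the repository root so that the relative script paths resolve.

```python
import subprocess

# Script paths copied from the commands above; assumed relative to the repository root.
TRAINING_SCRIPTS = [
    "scripts/bc/libero_so_bc_mamba_dec.sh",
    "scripts/3seed/libero_so_mamba_encdec.sh",
]


def run_scripts(scripts, dry_run=True):
    """Run each script with bash, in order, and return the commands that were built."""
    commands = [["bash", script] for script in scripts]
    for command in commands:
        if dry_run:
            # Only show what would be executed.
            print("Would run:", " ".join(command))
        else:
            # check=True stops at the first script that exits with a non-zero status.
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    run_scripts(TRAINING_SCRIPTS, dry_run=True)
```

Calling `run_scripts(TRAINING_SCRIPTS, dry_run=False)` launches the training runs sequentially; the dry-run default avoids starting a long job by accident.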

The code of this repository relies on the following existing codebases:

- [Mamba] https://github.com/state-spaces/mamba

- [D3IL] https://github.com/ALRhub/d3il

- [LIBERO] https://github.com/Lifelong-Robot-Learning/LIBERO

If you find the code useful, please cite our work:

@inproceedings{

jia2024mail,

title={Ma{IL}: Improving Imitation Learning with Selective State Space Models},

author={Xiaogang Jia and Qian Wang and Atalay Donat and Bowen Xing and Ge Li and Hongyi Zhou and Onur Celik and Denis Blessing and Rudolf Lioutikov and Gerhard Neumann},

booktitle={8th Annual Conference on Robot Learning},

year={2024},

url={https://openreview.net/forum?id=IssXUYvVTg}

}