Official support code for LAEO-Net++ paper (IEEE TPAMI, 2021).

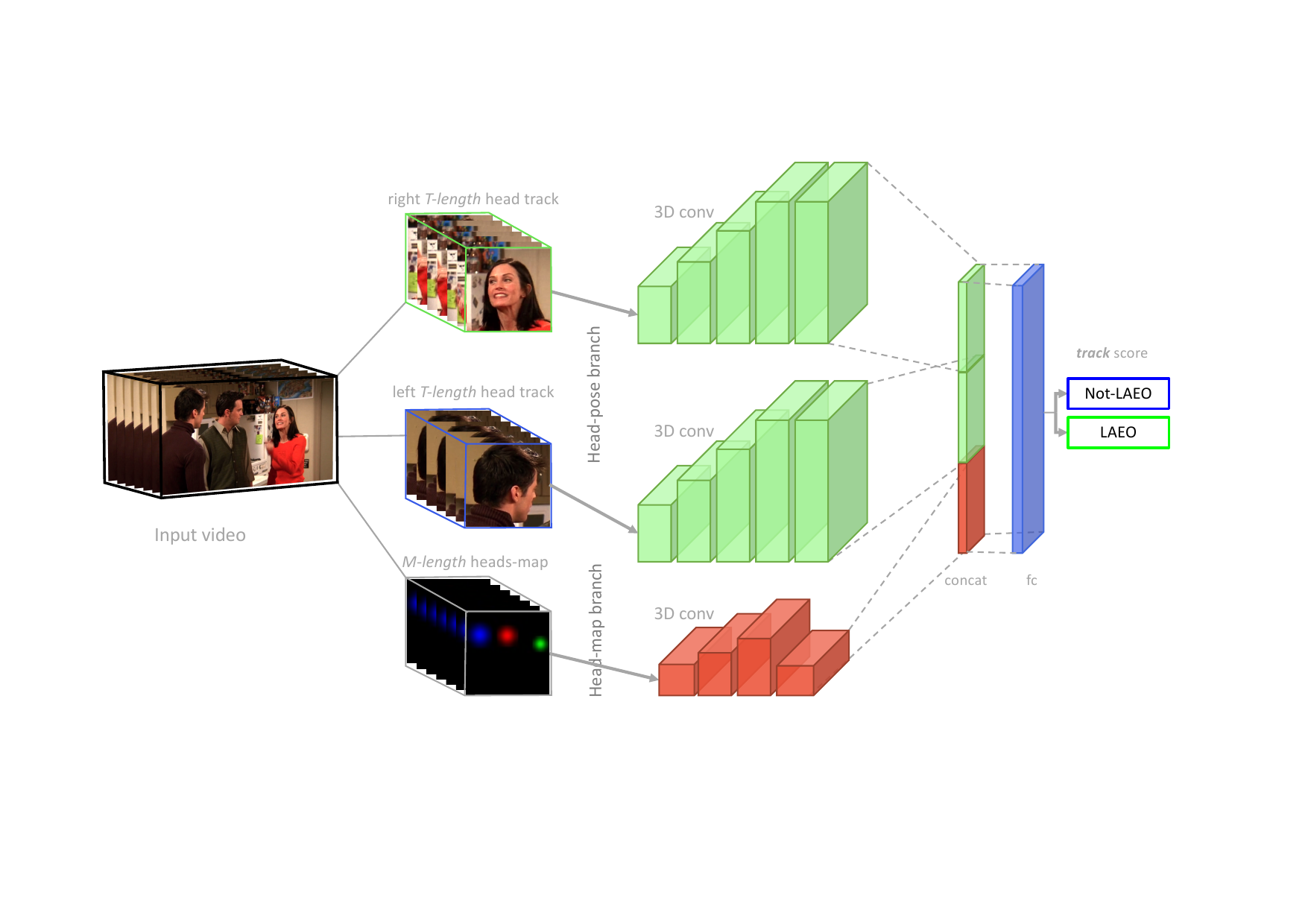

LAEO-Net++ receives as input two tracks of head crops and a track of maps containing the relative position of the heads, and returns the probability that those two heads are looking at each other (LAEO).

See the previous version here
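To make the input layout concrete, here is a minimal NumPy sketch of the three inputs described above: two head-crop tracks and one track of position maps. The track length `T`, the crop and map sizes, the box coordinates, and the helper `make_head_map` are all assumptions chosen for illustration. They are not taken from this repository, and the actual preprocessing may encode the relative position differently.

```python
import numpy as np

# Hypothetical sizes, chosen only for illustration.
T = 10      # frames per track
CROP = 64   # head crop side, in pixels
MAP = 64    # head-map side, in pixels


def make_head_map(box_a, box_b, frame_w, frame_h, size=MAP):
    """Rasterize two head boxes (x1, y1, x2, y2) into one small map.

    Head A is drawn with value 1.0 and head B with value 0.5, so the map
    records where the two heads sit relative to each other in the frame.
    This encoding is an illustrative assumption, not the authors' format.
    """
    head_map = np.zeros((size, size), dtype=np.float32)
    for box, value in ((box_b, 0.5), (box_a, 1.0)):
        x1, y1, x2, y2 = box
        # Scale frame coordinates to map coordinates.
        c1 = int(np.floor(x1 / frame_w * size))
        r1 = int(np.floor(y1 / frame_h * size))
        c2 = max(c1 + 1, int(np.ceil(x2 / frame_w * size)))
        r2 = max(r1 + 1, int(np.ceil(y2 / frame_h * size)))
        head_map[r1:r2, c1:c2] = value
    return head_map


# Placeholder tracks: random pixels stand in for real head crops.
rng = np.random.default_rng(0)
track_a = rng.random((T, CROP, CROP, 3), dtype=np.float32)
track_b = rng.random((T, CROP, CROP, 3), dtype=np.float32)

# One map per frame; the boxes are kept static here for simplicity.
box_a = (100, 80, 180, 160)
box_b = (420, 90, 500, 170)
maps = np.stack([make_head_map(box_a, box_b, 640, 360) for _ in range(T)])

print(track_a.shape, track_b.shape, maps.shape)
```

A trained model would consume these three arrays together and output a single LAEO probability for the pair. That step is left out here because it depends on the model files shipped with the repository.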

The following demo predicts the LAEO label on a pair of heads included in the subdirectory data/ava_val_crop. You can choose to use either a model trained on UCO-LAEO or a model trained on AVA-LAEO.

cd laeonetplus

python mains/ln_demo_test.py

Training code will be available soon.

Try this Google Colab to run LAEO prediction on a target video file: notebook

Try this Google Colab to perform head detection and tracking on a target video file: notebook

Clone this repository:

git clone git@github.com:AVAuco/laeonetplus.git

See library dependencies here. In addition, a requirements.txt file is provided.

We provide an example of training on AVA-LAEO data (using preprocessed samples): see the training document.

Marin-Jimenez, M. J., Kalogeiton, V., Medina-Suarez, P., Zisserman, A. (2021). LAEO-Net++: revisiting people Looking At Each Other in videos. IEEE Transactions on Pattern Analysis and Machine Intelligence, PP. Advance online publication. https://doi.org/10.1109/TPAMI.2020.3048482

@article{marin21pami,
  author  = {Mar\'in-Jim\'enez, Manuel J. and Kalogeiton, Vicky and Medina-Su\'arez, Pablo and Zisserman, Andrew},
  title   = {{LAEO-Net++}: revisiting people {Looking At Each Other} in videos},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},
  doi     = {10.1109/TPAMI.2020.3048482},
  year    = {2021}
}

Conference version:

@inproceedings{marin19cvpr,
  author    = {Mar\'in-Jim\'enez, Manuel J. and Kalogeiton, Vicky and Medina-Su\'arez, Pablo and Zisserman, Andrew},
  title     = {{LAEO-Net}: revisiting people {Looking At Each Other} in videos},
  booktitle = {CVPR},
  year      = {2019}
}