- Contributors: Aman Sharma, Vyshnav Achuthan, Neha Madhekar, Rishikesh Jadhav, Wu Xiyang

Create the conda environment by running:

conda env create -f environment.yml

- Download the full Toyota Woven Planet Perception dataset, which includes the Mini dataset and the Train and Test datasets.

- Extract the tar files to a directory named `lyft2/`. The files should be organized in the following structure:

      lyft2/
      ├──── train/
      │     ├──── maps/
      │     ├──── images/
      │     ├──── train_lidar/
      │     └──── train_data/
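Before training, it can help to confirm that the extracted files match the layout described above. The following is a minimal sketch, not part of the repository: the helper name `missing_dataset_dirs` is hypothetical, and the directory names are taken directly from the structure listed above.

```python
from pathlib import Path

# Sub-directories expected under lyft2/train/, taken from the layout above.
EXPECTED_TRAIN_SUBDIRS = ("maps", "images", "train_lidar", "train_data")


def missing_dataset_dirs(root):
    """Return the expected directories that do not exist under `root`.

    Hypothetical helper for illustration; not part of the repository.
    """
    train_dir = Path(root) / "train"
    return [
        str(train_dir / name)
        for name in EXPECTED_TRAIN_SUBDIRS
        if not (train_dir / name).is_dir()
    ]


if __name__ == "__main__":
    missing = missing_dataset_dirs("lyft2")
    if missing:
        print("Missing directories:")
        for path in missing:
            print("  " + path)
    else:
        print("Dataset layout looks complete.")
```

Run it from the directory that contains `lyft2/`; an empty result means all four sub-directories were found.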

The config file can be found in `powerbev/configs`. You can download the pre-trained models, which are fine-tuned on the nuScenes dataset, using the links below:

| Weights | Dataset | BEV Size | IoU | VPQ |
|---|---|---|---|---|
| PowerBEV_long.ckpt | NuScenes | 100m x 100m (50cm res.) | 39.3 | 33.8 |
| PowerBEV_short.ckpt | NuScenes | 30m x 30m (15cm res.) | 62.5 | 55.5 |
| PowerBEV_static_long.ckpt | None | 100m x 100m (50cm res.) | 39.3 | 33.8 |
| PowerBEV_static_short.ckpt | None | 30m x 30m (15cm res.) | 62.5 | 55.5 |
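As a quick sanity check on the BEV sizes in the table, the sketch below converts extent and resolution into a grid size. It assumes that the BEV size is the full side length of a square grid and that the resolution is the edge length of one cell; the function name `bev_grid_cells` is hypothetical.

```python
def bev_grid_cells(extent_m, resolution_m):
    """Number of cells along one side of a square BEV grid.

    Assumes `extent_m` is the full side length and `resolution_m`
    is the edge length of a single cell, both in metres.
    """
    return round(extent_m / resolution_m)


for name, extent_m, resolution_m in [("long", 100.0, 0.50), ("short", 30.0, 0.15)]:
    cells = bev_grid_cells(extent_m, resolution_m)
    print(f"{name}: {cells} x {cells} cells")
# long: 200 x 200 cells
# short: 200 x 200 cells
```

Under these assumptions both settings produce a grid of the same size; the short-range setting covers a smaller area at a finer resolution.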

To train the model from scratch on Woven, run

python train.py --config powerbev/configs/powerbev.yml

and make sure you make the corresponding changes to the config file inside the `configs` folder.

python train.py --config powerbev/configs/powerbev.yml \
    PRETRAINED.LOAD_WEIGHTS True \
    PRETRAINED.PATH $YOUR_PRETRAINED_STATIC_WEIGHTS_PATH
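The trailing `KEY VALUE` pairs in the command above resemble yacs-style command-line overrides, where a dotted key addresses a nested config entry. How the repository actually parses them is not shown here, so the following is only a pure-Python sketch of the idea. The helper `apply_overrides`, the default values, and the example path `weights/static.ckpt` are all hypothetical.

```python
import ast


def apply_overrides(config, overrides):
    """Apply a flat [KEY, VALUE, KEY, VALUE, ...] list to a nested dict.

    Hypothetical helper for illustration; not the repository's parser.
    """
    if len(overrides) % 2 != 0:
        raise ValueError("overrides must be KEY VALUE pairs")
    for dotted_key, raw_value in zip(overrides[0::2], overrides[1::2]):
        *parents, leaf = dotted_key.split(".")
        node = config
        for name in parents:
            # Walk (or create) the nested section named by each key part.
            node = node.setdefault(name, {})
        try:
            # Turn strings such as "True", "1" or "0.5" into Python values.
            value = ast.literal_eval(raw_value)
        except (ValueError, SyntaxError):
            # Anything else (e.g. a file path) stays a plain string.
            value = raw_value
        node[leaf] = value
    return config


# Assumed defaults, made up for this example.
config = {"PRETRAINED": {"LOAD_WEIGHTS": False, "PATH": ""}, "BATCHSIZE": 2}
apply_overrides(config, [
    "PRETRAINED.LOAD_WEIGHTS", "True",
    "PRETRAINED.PATH", "weights/static.ckpt",
    "BATCHSIZE", "1",
])
print(config)
```

With this reading, values passed on the command line take precedence over whatever the YAML file specifies for the same key.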

To run from a model that was trained from scratch, find the tensorboard log directory, which contains the ckpt file, and use that ckpt file's path as your pretrained weights path.
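Locating the checkpoint by hand can be tedious when there are several runs. The sketch below searches a log directory recursively and returns the most recently modified `.ckpt` file. The directory name `tensorboard_logs` is a placeholder, and the helper `newest_checkpoint` is hypothetical; substitute the log directory your own training run produced.

```python
from pathlib import Path


def newest_checkpoint(log_root):
    """Return the most recently modified .ckpt file under `log_root`, or None.

    Hypothetical helper for illustration; not part of the repository.
    """
    checkpoints = list(Path(log_root).rglob("*.ckpt"))
    if not checkpoints:
        return None
    return max(checkpoints, key=lambda path: path.stat().st_mtime)


if __name__ == "__main__":
    # "tensorboard_logs" is a placeholder; point this at your own log directory.
    print(newest_checkpoint("tensorboard_logs"))
```

The printed path can then be passed as the value of `PRETRAINED.PATH`.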

python test.py --config powerbev/configs/powerbev.yml \
    PRETRAINED.LOAD_WEIGHTS True \
    PRETRAINED.PATH $YOUR_PRETRAINED_WEIGHTS_PATH

To run from a model that was trained from scratch, find the tensorboard log directory, which contains the ckpt file, and use that ckpt file's path as your pretrained weights path.

python visualise.py --config powerbev/configs/powerbev.yml \
    PRETRAINED.LOAD_WEIGHTS True \
    PRETRAINED.PATH $YOUR_PRETRAINED_WEIGHTS_PATH \
    BATCHSIZE 1

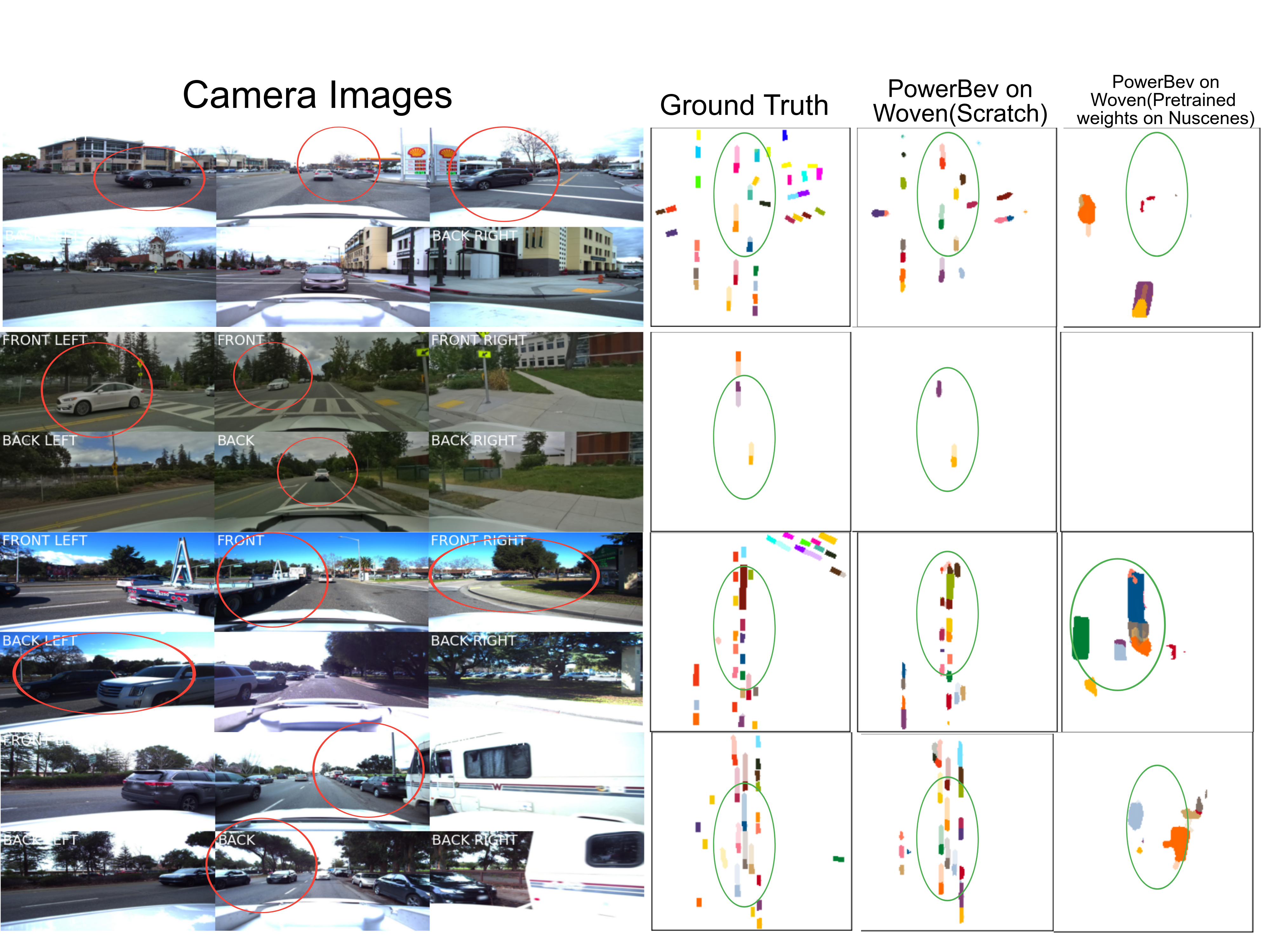

This will render predictions from the network and save them to a `visualization_outputs` folder.

PowerBEV is released under the MIT license. Please see the LICENSE file for more information.

This is the official PyTorch implementation of the paper:

PowerBEV: A Powerful yet Lightweight Framework for Instance Prediction in Bird's-Eye View

Peizheng Li, Shuxiao Ding, Xieyuanli Chen, Niklas Hanselmann, Marius Cordts, Jürgen Gall