Shanghai Jiao Tong University

*equal contribution

While our code does not require any specific packages, each included method has its own environment requirements, which are provided in the References section.

The main directory where all code is stored is referred to as global_path. The structure below is relative to that directory:

.
├── datasets                     # Add Argoverse raw csv files here
│   ├── train
│   │   └── data
│   │       └── ... csv files
│   ├── val
│   │   └── data
│   │       └── ... csv files
│   └── test
│       └── data
│           └── ... csv files
├── datasets_summit              # Add Alignment raw csv files here
│   ├── summit_10HZ
│   │   ├── train
│   │   │   └── data
│   │   │       └── ... csv files
│   │   ├── val
│   │   │   └── data
│   │   │       └── ... csv files
│   │   └── test
│   │       └── data
│   │           └── ... csv files
├── features                     # Preprocessed features are saved here
│   ├── forecasting_features     # Save features for all methods
│   └── ground_truth_data        # Save GT for the dataset
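If you prefer to create this layout programmatically, here is a minimal sketch. It assumes the folders in the tree above are the complete set the scripts expect; the helper names (`expected_dirs`, `create_layout`, `missing_dirs`) are hypothetical and not part of the repository.

```python
from __future__ import annotations

from pathlib import Path

# Split names and sub-folders copied from the tree above
# (assumed to be the full set of folders the scripts expect).
SPLITS = ("train", "val", "test")


def expected_dirs(global_path: str | Path) -> list[Path]:
    """Every data/feature directory the layout above expects under global_path."""
    root = Path(global_path)
    dirs = [root / "datasets" / split / "data" for split in SPLITS]
    dirs += [root / "datasets_summit" / "summit_10HZ" / split / "data" for split in SPLITS]
    dirs += [root / "features" / "forecasting_features",
             root / "features" / "ground_truth_data"]
    return dirs


def create_layout(global_path: str | Path) -> None:
    """Create missing directories; existing ones (and any csv files in them) are kept."""
    for d in expected_dirs(global_path):
        d.mkdir(parents=True, exist_ok=True)


def missing_dirs(global_path: str | Path) -> list[Path]:
    """Directories from the layout that do not exist yet."""
    return [d for d in expected_dirs(global_path) if not d.is_dir()]
```

For example, `create_layout("/path/to/global_path")` followed by `missing_dirs(...)` should return an empty list; you then copy the raw csv files into each `data` folder.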

To train and test a method, run:

python train.py configs/method_folder/config_to_use.py

python test.py configs/method_folder/config_to_use.py

Replace method_folder with the folder of the method you want to use, and config_to_use.py with the config you want to try. All configs for the Alignment dataset have summit in their names.
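Because Alignment configs are identified only by the substring summit in their file names, a small helper can list the configs for one dataset before you pick one. This is a sketch under the assumption that every config sits directly at configs/<method_folder>/*.py; `list_configs` and `run` are hypothetical helpers, not repository code.

```python
from __future__ import annotations

import subprocess
import sys
from pathlib import Path


def list_configs(config_root: str | Path = "configs", alignment: bool = True) -> list[str]:
    """List configs/<method_folder>/*.py files for one dataset.

    Relies on the naming convention above: Alignment-dataset configs contain
    "summit" in their file name; all other configs are treated as Argoverse.
    """
    root = Path(config_root)
    return [
        cfg.as_posix()
        for cfg in sorted(root.glob("*/*.py"))
        if ("summit" in cfg.name) == alignment
    ]


def run(config: str, test: bool = False) -> None:
    """Run train.py (or test.py) on one config with the current Python interpreter."""
    script = "test.py" if test else "train.py"
    subprocess.run([sys.executable, script, config], check=True)
```

Note that `glob("*/*.py")` also lists any helper modules stored next to the configs, so check the output before passing a path to `run`.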

When training KNN, you may encounter the error "BLAS : Program is Terminated. Because you tried to allocate too many memory regions." Limiting the BLAS and OpenMP thread counts before training fixes it:

export OPENBLAS_NUM_THREADS=1

export GOTO_NUM_THREADS=1

export OMP_NUM_THREADS=1
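If you launch KNN training from your own Python entry point instead of a shell, the same variables can be set in-process. OpenBLAS reads them when the library is first loaded, so they must be set before NumPy (or anything that imports it) is imported. A minimal sketch; `limit_blas_threads` is a hypothetical helper.

```python
import os

# The three variables from the export commands above.
BLAS_THREAD_VARS = ("OPENBLAS_NUM_THREADS", "GOTO_NUM_THREADS", "OMP_NUM_THREADS")


def limit_blas_threads(n: int = 1) -> None:
    """Set every BLAS/OpenMP thread-count variable to n (overwriting existing values)."""
    for var in BLAS_THREAD_VARS:
        os.environ[var] = str(n)


# Must run before the first `import numpy` in the process to have any effect.
limit_blas_threads(1)
```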

To train DSP, first run the scripts that generate the preprocessed data.

Go to Summit Release and download the version for your operating system:

- 0.9.8e (non-lite version) for Ubuntu 18.04 (the version used in our experiments)

Unzip it and place it in your home folder as ~/summit.

Run

cd && mkdir whatmatters

cd whatmatters

git clone https://github.com/AdaCompNUS/WhatMatters

cd WhatMatters

mv * ../

mv .git .gitignore ../

cd ..

rm -rf WhatMatters

Then move gamma_crowd_gammaplanner.py into the SUMMIT examples folder:

cd ~/whatmatters

mv gamma_crowd_gammaplanner.py ~/summit/PythonAPI/examples/

cd ~/src/scripts

python3 launch_docker.py --image cppmayo/melodic_cuda10_1_cudnn7_libtorch_opencv4_ws_noentry

cd summit/Scripts

pip3 install requests

python3 download_imagery.py -d meskel_square

python3 download_imagery.py -d beijing

python3 download_imagery.py -d highway

python3 download_imagery.py -d chandni_chowk

python3 download_imagery.py -d magic

python3 download_imagery.py -d shibuya

python3 download_imagery.py -d shi_men_er_lu
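The seven download commands differ only in the map name, so they can also be driven from a loop. This sketch assumes it is run from the same summit/Scripts directory as the commands above; `imagery_commands` and `download_all` are hypothetical helpers, and the map names are copied from the commands.

```python
from __future__ import annotations

import subprocess

# Map names taken from the download commands above.
MAPS = ("meskel_square", "beijing", "highway", "chandni_chowk",
        "magic", "shibuya", "shi_men_er_lu")


def imagery_commands(python: str = "python3") -> list[list[str]]:
    """One download_imagery.py invocation per map, as argument lists."""
    return [[python, "download_imagery.py", "-d", name] for name in MAPS]


def download_all(scripts_dir: str = ".", dry_run: bool = False) -> None:
    """Print each command and, unless dry_run, execute it in scripts_dir."""
    for cmd in imagery_commands():
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops at the first failed download instead of continuing.
            subprocess.run(cmd, cwd=scripts_dir, check=True)
```

Calling `download_all(dry_run=True)` prints the commands without downloading anything, which is a quick way to confirm the map list.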

docker pull cppmayo/melodic_cuda10_1_cudnn7_libtorch_opencv4_ws_noentry

We also need to install nvidia-docker2 by following this guide: https://developer.nvidia.com/blog/gpu-containers-runtime/

cd ~/src/scripts

python3 launch_docker.py --image cppmayo/melodic_cuda10_1_cudnn7_libtorch_opencv4_ws_noentry

Inside docker, run:

catkin config --merge-devel

catkin clean

catkin build

Ignore the type error reported for the signal handler. After building the catkin workspace, exit the docker container with Ctrl+D.

Install either Anaconda or Miniconda. Then, at line 12 of src/scripts/launch_docker.py, change the value to anaconda3 if you installed Anaconda, or to miniconda3 if you installed Miniconda.
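If you are unsure which distribution is installed, a quick check of the home folder can tell you which value to use at line 12. This is a sketch that assumes the default install locations ~/anaconda3 and ~/miniconda3; `detect_conda_dir` is a hypothetical helper and does not edit launch_docker.py for you.

```python
from __future__ import annotations

from pathlib import Path


def detect_conda_dir(home: str | Path | None = None) -> str:
    """Return "anaconda3" or "miniconda3", whichever exists in the home folder.

    Assumes default install locations; Anaconda is preferred if both exist.
    """
    home = Path.home() if home is None else Path(home)
    for name in ("anaconda3", "miniconda3"):
        if (home / name).is_dir():
            return name
    raise FileNotFoundError("neither ~/anaconda3 nor ~/miniconda3 was found")
```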

cd src/moped/moped_impelmentation/

conda env create --name moped -f hivt.yml

cd ~/whatmatters

git clone https://github.com/argoai/argoverse-api.git

pip install -e argoverse-api

pip install Pyro4

pip install mmcv==1.7.1

Note: if installing sklearn fails, edit setup.py inside argoverse-api to replace sklearn with scikit-learn.

If you have issues installing lapsolver, run pip install cmake and then reinstall it.
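The sklearn workaround can be scripted as a one-off patch. This sketch assumes the dependency appears in argoverse-api/setup.py as a quoted string such as "sklearn" or "sklearn>=x.y"; `fix_requirements` and `patch_setup_py` are hypothetical helpers. Only quoted occurrences are rewritten, so names like sklearn_extra or import statements are left alone.

```python
from __future__ import annotations

import re
from pathlib import Path

# A quote, then "sklearn" not followed by a word character or hyphen
# (so "sklearn" and "sklearn>=0.20" match, "sklearn_extra" does not).
_SKLEARN_REQ = re.compile(r"""(["'])sklearn(?![\w-])""")


def fix_requirements(text: str) -> tuple[str, int]:
    """Replace quoted sklearn requirements with scikit-learn; return (text, count)."""
    return _SKLEARN_REQ.subn(r"\1scikit-learn", text)


def patch_setup_py(path: str | Path = "argoverse-api/setup.py") -> int:
    """Rewrite setup.py in place if needed and return the number of replacements."""
    path = Path(path)
    new_text, count = fix_requirements(path.read_text())
    if count:
        path.write_text(new_text)
    return count
```

After patching, rerun pip install -e argoverse-api.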

docker pull cppmayo/melodic_cuda10_1_cudnn7_libtorch_opencv4_ws

cd ~/src/scripts

python server_pipeline.py --gpu <gpu_id> --trials <number of runs>

After running, the data is stored in ~/driving_data. Refer to the next section to report driving performance metrics.
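When launching many runs from a script, it can help to validate the two arguments before starting a long pipeline. This sketch uses only the --gpu and --trials flags shown above; the validation rules (a non-negative GPU index, at least one trial) and the helper names `pipeline_command` and `run_pipeline` are assumptions, not repository code.

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def pipeline_command(gpu_id: int, trials: int) -> list[str]:
    """Build the server_pipeline.py command after basic sanity checks."""
    if gpu_id < 0:
        raise ValueError("gpu_id must be a non-negative GPU index")
    if trials < 1:
        raise ValueError("trials must be at least 1")
    return ["python", "server_pipeline.py", "--gpu", str(gpu_id), "--trials", str(trials)]


def run_pipeline(gpu_id: int, trials: int, scripts_dir: str | Path = "~/src/scripts") -> None:
    """Run the pipeline from scripts_dir; results end up in ~/driving_data."""
    cwd = Path(scripts_dir).expanduser()
    subprocess.run(pipeline_command(gpu_id, trials), cwd=cwd, check=True)
```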

cd ~/src/scripts/experiment_notebook

python Analyze_RVO_DESPOT.py --mode data

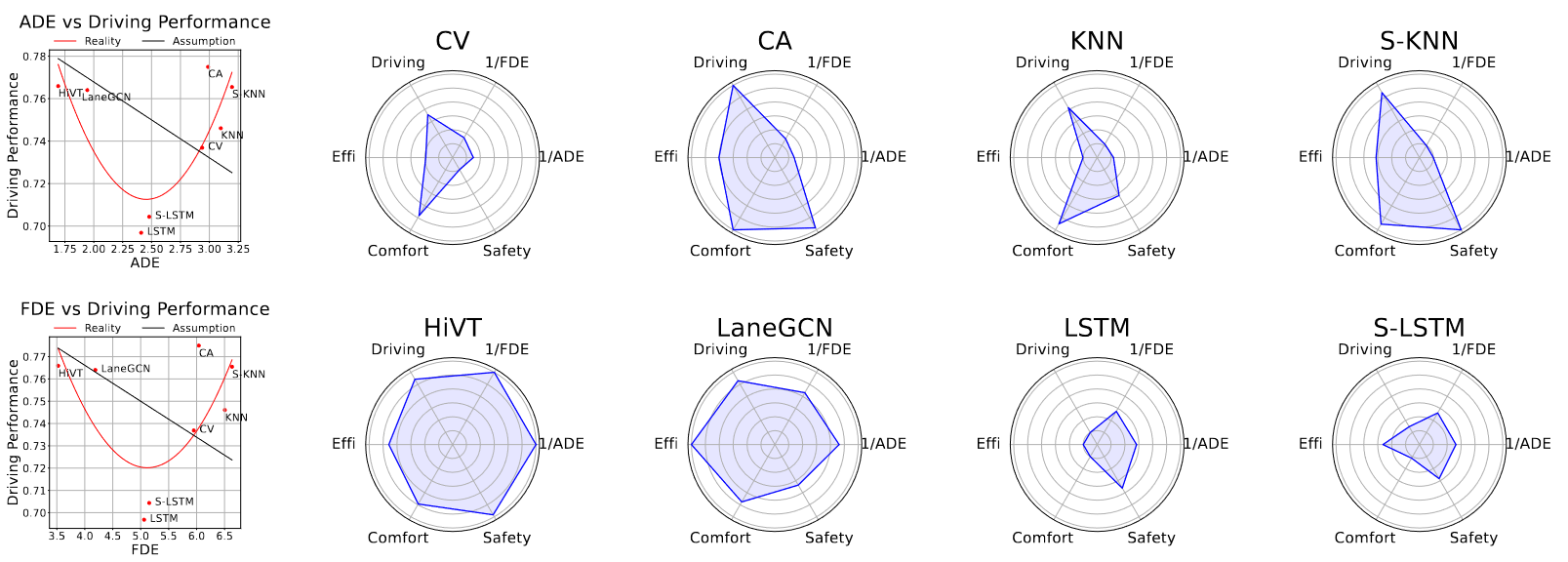

python Analyze_RVO_DESPOT.py

This produces a plot with prediction performance on the x-axis and driving performance on the y-axis.

@misc{wutran2023truly,
  title={What Truly Matters in Trajectory Prediction for Autonomous Driving?},
  author={Haoran Wu and Tran Phong and Cunjun Yu and Panpan Cai and Sifa Zheng and David Hsu},
  year={2023},
  eprint={2306.15136},
  archivePrefix={arXiv},
}

The code base heavily borrows from:

Argoverse Forecasting: https://github.com/jagjeet-singh/argoverse-forecasting

LaneGCN: https://github.com/uber-research/LaneGCN

HiVT: https://github.com/ZikangZhou/HiVT

DSP: https://github.com/HKUST-Aerial-Robotics/DSP

HOME: https://github.com/Robotmurlock/TNT-VectorNet-and-HOME-Trajectory-Forecasting