Writeup / README

Code architecture

```
main.py
   |
pipeline.py  (VehicleDetector)
   |
   +-- data_handler.py
   +-- features.py
   +-- classifier.py
   +-- visualizer.py
```

To run the pipeline, uncomment `process_video()` in main.py so that the entry point reads:

```python
if __name__ == '__main__':
    # unit_tests()
    # train_pipeline()
    process_video()
```

and execute:

```shell
python main.py
```

Histogram of Oriented Gradients (HOG)

1. Explain how (and identify where in your code) you extracted HOG features from the training images.

For every loaded image, the color space is first converted:

```python
import cv2
import numpy as np
from skimage.feature import hog

def convert_color_space(self, image):
    return cv2.cvtColor(image, self.color_space)
```

Then the HOG of each channel is computed, and the three channel descriptors are concatenated into a single feature vector:

```python
def get_HOG(self, image):
    """
    HOG of every channel of the given image is computed and
    concatenated to form one single feature vector.
    """
    # hog() returns only the flattened descriptor when visualization is off
    # (the default), so the old `visualise=False` keyword is not needed;
    # newer scikit-image releases no longer accept that spelling.
    channel_features = [hog(image[:, :, ch],
                            orientations=self.orientations,
                            pixels_per_cell=self.pixels_per_cell,
                            cells_per_block=self.cells_per_block)
                        for ch in range(3)]
    return np.concatenate(channel_features)
```

Feature extraction and preprocessing are handled by the `FeatureDetector` class defined in features.py.
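`convert_color_space` delegates to `cv2.cvtColor` with `cv2.COLOR_RGB2YCrCb`, so the three HOG channels are luma (Y) and two chroma channels (Cr, Cb). For reference, here is a NumPy sketch of the 8-bit formula OpenCV documents for this conversion (Y = 0.299R + 0.587G + 0.114B, Cr = (R - Y)·0.713 + 128, Cb = (B - Y)·0.564 + 128). The function name `rgb_to_ycrcb` is hypothetical, and unlike OpenCV this sketch returns clipped floats instead of rounded `uint8` values.

```python
import numpy as np

def rgb_to_ycrcb(image):
    """Sketch of the 8-bit RGB -> YCrCb conversion (channel order Y, Cr, Cb).

    Returns float values clipped to [0, 255]; OpenCV additionally rounds
    the result back to uint8.
    """
    rgb = np.asarray(image, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    delta = 128.0                            # chroma offset for 8-bit images
    y = 0.299 * r + 0.587 * g + 0.114 * b    # luma: carries most edge information
    cr = (r - y) * 0.713 + delta             # red-difference chroma
    cb = (b - y) * 0.564 + delta             # blue-difference chroma
    return np.clip(np.stack([y, cr, cb], axis=-1), 0.0, 255.0)
```

A neutral gray pixel keeps its brightness in channel 0 and lands on the chroma midpoint (128) in channels 1 and 2, so color information is isolated in the two chroma channels.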

2. Explain how you settled on your final choice of HOG parameters.

The HOG parameters were finalized by trial and error:

```python
self.color_space = cv2.COLOR_RGB2YCrCb
self.orientations = 16
self.pixels_per_cell = (12, 12)
self.cells_per_block = (2, 2)
self.image_size = (32, 32)
self.color_feat_size = (64, 64)
self.no_of_bins = 32
self.color_features = False
self.spatial_features = False
self.HOG_features = True
```

The color-histogram and spatial features added little to the classification and slowed down the computation, so only HOG features were used.
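These parameters fix the length of the feature vector. With `skimage.feature.hog`, blocks slide by one cell, so each channel yields (⌊W/p⌋ - b + 1)·(⌊H/p⌋ - b + 1)·b²·n values for a W×H image, p-pixel cells, b×b-cell blocks and n orientations. A small helper (hypothetical name `hog_length`) makes the arithmetic concrete. The writeup does not say whether HOG runs on the 64×64 training patches or on images resized to `image_size=(32, 32)`, so both are shown.

```python
def hog_length(image_size, pixels_per_cell, cells_per_block, orientations):
    """Length of one channel's HOG vector, assuming blocks step by one cell
    (as in skimage.feature.hog) and leftover border pixels are dropped."""
    cells = [s // p for s, p in zip(image_size, pixels_per_cell)]
    blocks = [c - b + 1 for c, b in zip(cells, cells_per_block)]
    return blocks[0] * blocks[1] * cells_per_block[0] * cells_per_block[1] * orientations

params = dict(pixels_per_cell=(12, 12), cells_per_block=(2, 2), orientations=16)
for size in [(64, 64), (32, 32)]:
    per_channel = hog_length(size, **params)
    # Three channels (Y, Cr, Cb) are concatenated
    print(size, per_channel, 3 * per_channel)
```

For a 64×64 patch this gives 1024 values per channel (3072 in total), while a 32×32 image gives only 64 per channel (192 in total). With 12-pixel cells, the last 4 pixels of a 64-pixel side are not covered by any cell.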

3. Describe how (and identify where in your code) you trained a classifier using your selected HOG features (and color features if you used them).

I found that a random forest classifier gave better accuracy and shorter processing time than an SVM classifier, so I used `RandomForestClassifier` from `sklearn.ensemble`. The resulting accuracy:

[accuracy] 0.9583333333333334
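A minimal sketch of the training step with scikit-learn, assuming labels 0 (non-car) and 1 (car). The function name `train_random_forest`, `n_estimators=100` and the fixed seed are illustrative choices, not the settings used in this project.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

def train_random_forest(X_train, y_train, X_test, y_test, n_estimators=100, seed=0):
    """Fit a random forest on HOG feature vectors and report test accuracy."""
    clf = RandomForestClassifier(n_estimators=n_estimators, random_state=seed)
    clf.fit(X_train, y_train)
    accuracy = clf.score(X_test, y_test)   # mean accuracy on the held-out set
    print("[accuracy]", accuracy)
    return clf, accuracy
```

Besides `predict`, a fitted `RandomForestClassifier` exposes `predict_proba`; with labels 0/1, column 1 of its output is the per-window car probability, which is what a probability threshold on detections would use.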

The feature vector described above was used to train the classifier. I created a classifier class with the following methods:

```python
def train(self, X_train, y_train, X_test, y_test):
    ...

def predict(self, inputX):
    ...

def save_classifier(self):
    ...

def load_classifier(self):
    ...
```

Sliding Window Search

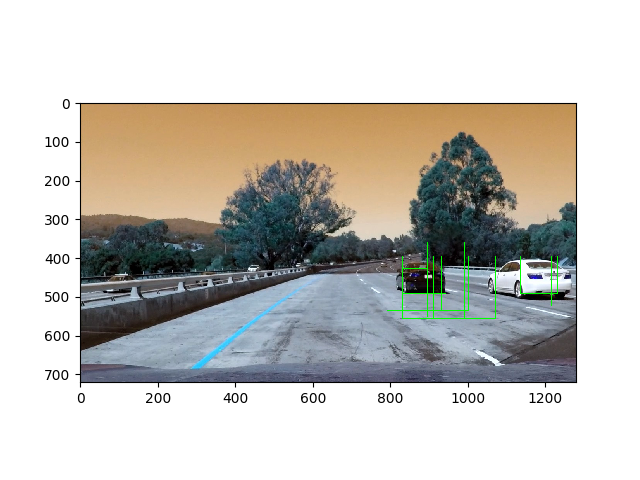

1. Describe how (and identify where in your code) you implemented a sliding window search. How did you decide what scales to search and how much to overlap windows?

scale_factors = [(0.4,1.0,0.55,0.8,64),

(0.4,1.0,0.55,0.8,96),

(0.4,1.0,0.55,0.9,128),

(0.4,1.0,0.55,0.9,140),

(0.4,1.0,0.55,0.9,160),

(0.4,1.0,0.50,0.9,192)]window_1 = self.slide_window(window_image,

x_start_stop=[int(scale_factor[0]*width),

int(scale_factor[1]*width)],

y_start_stop=[int(scale_factor[2]*height),

int(scale_factor[3]*height)],

xy_window=( scale_factor[4],

scale_factor[4]),

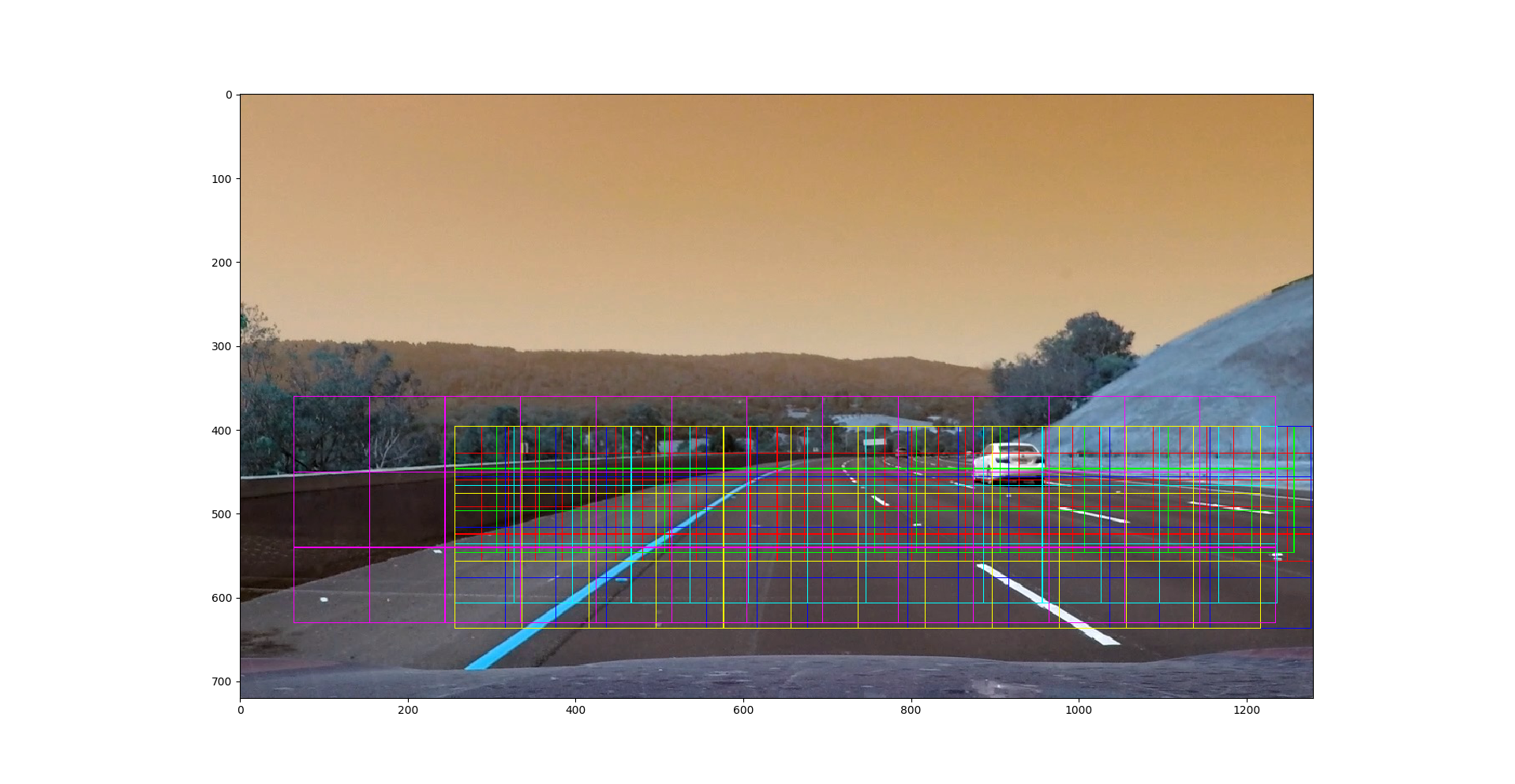

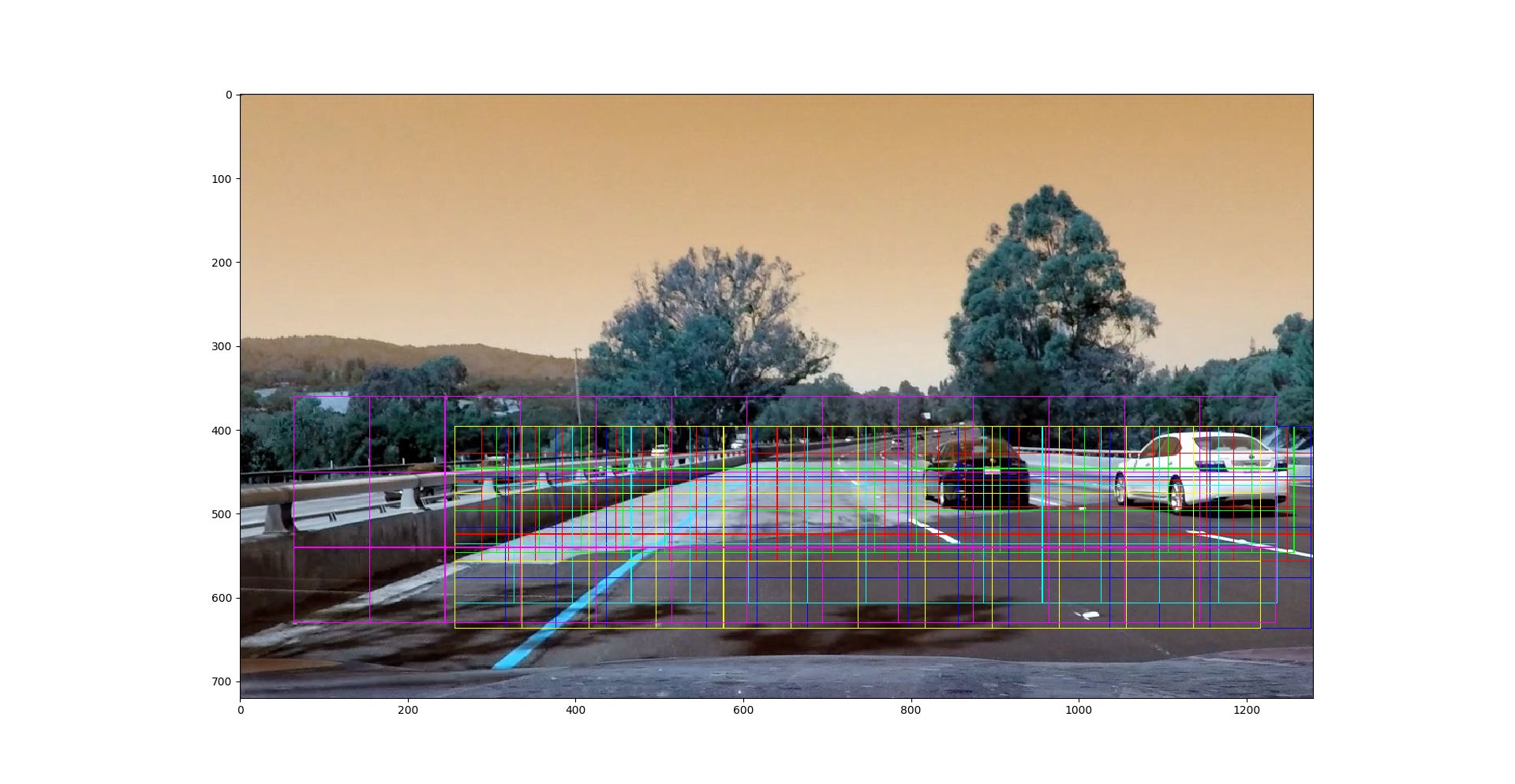

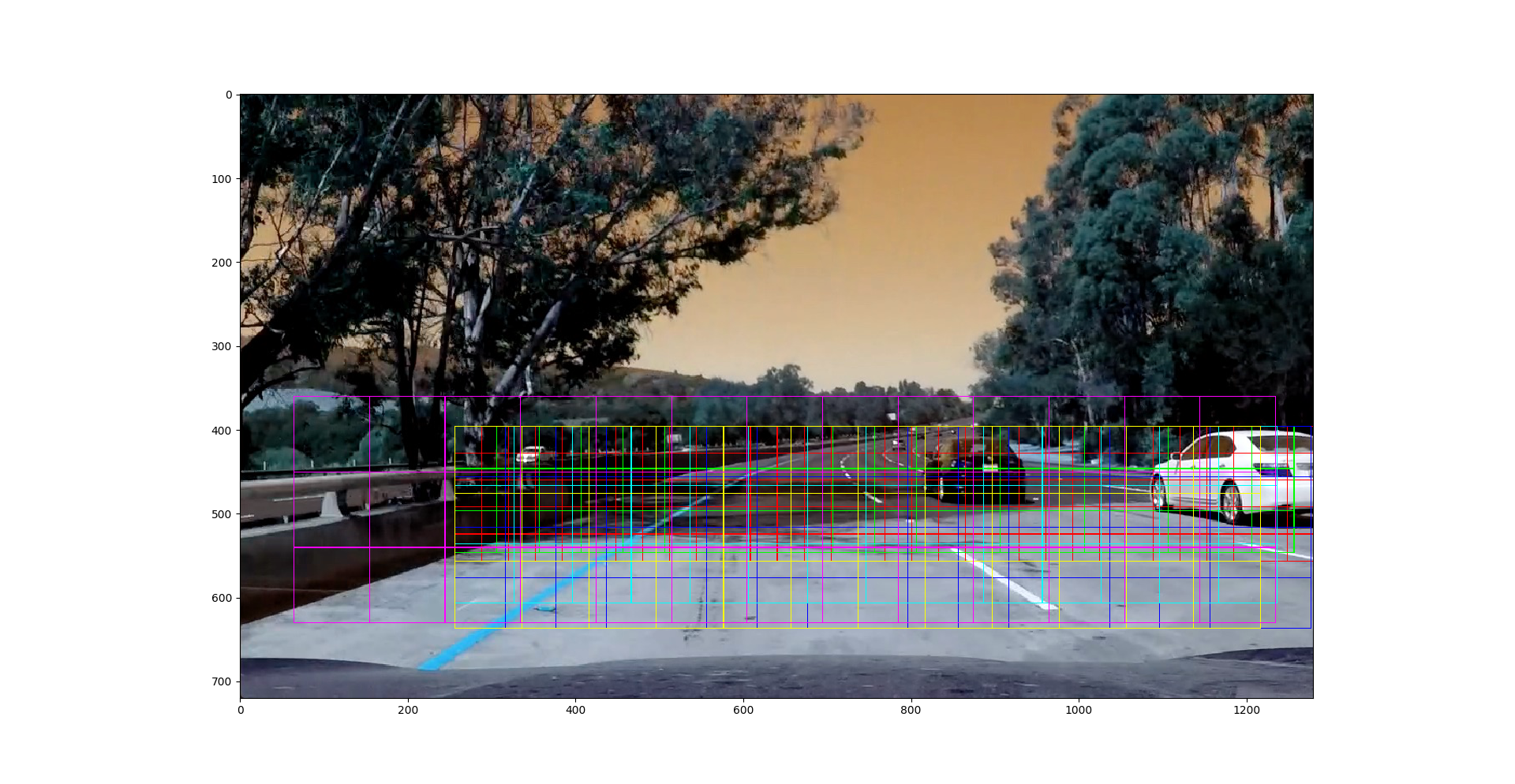

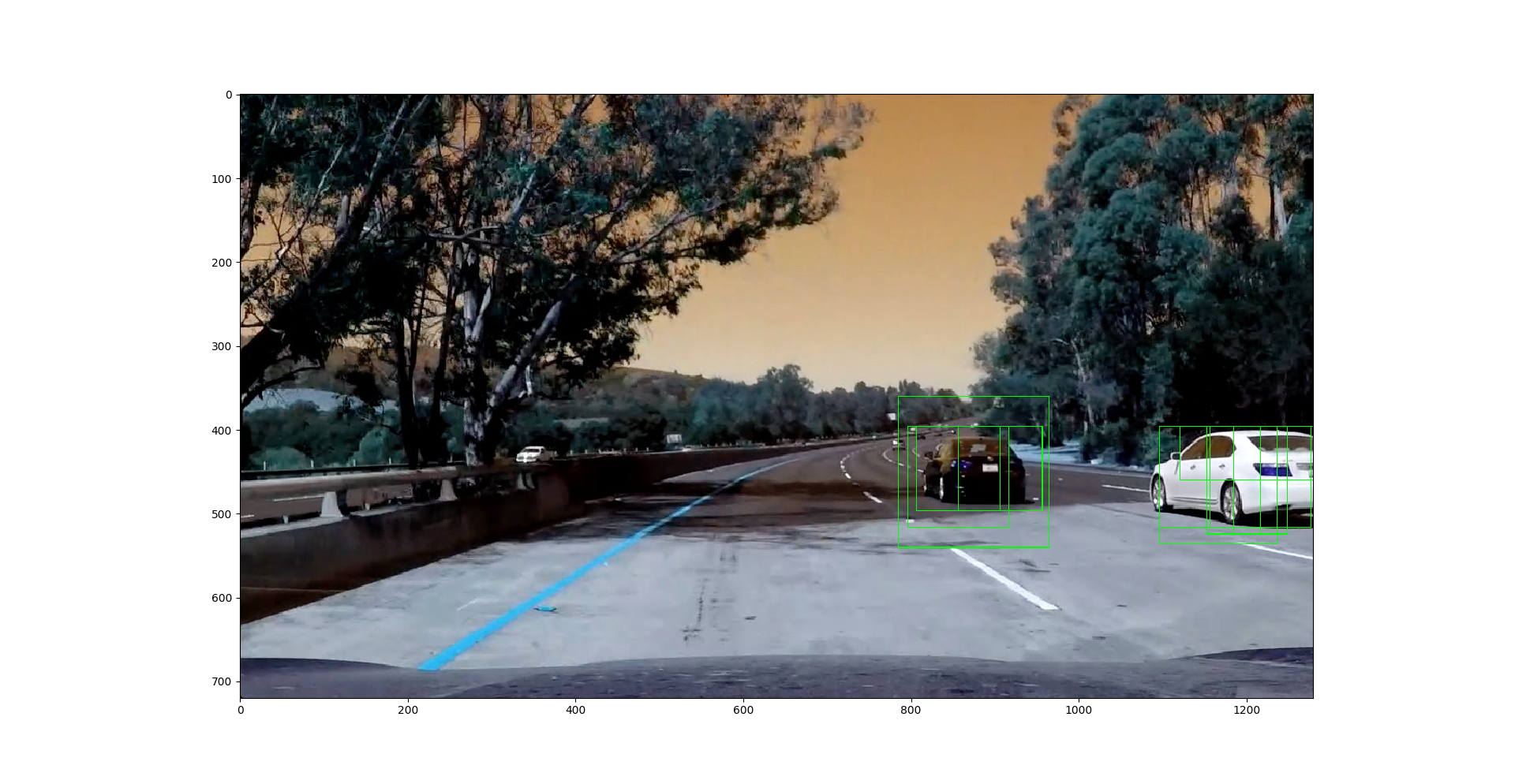

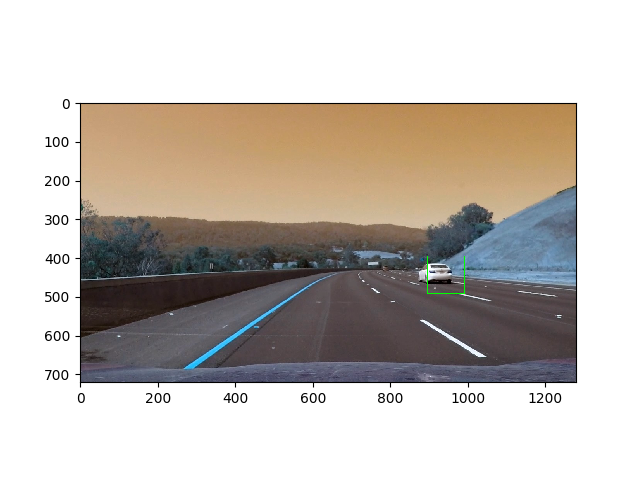

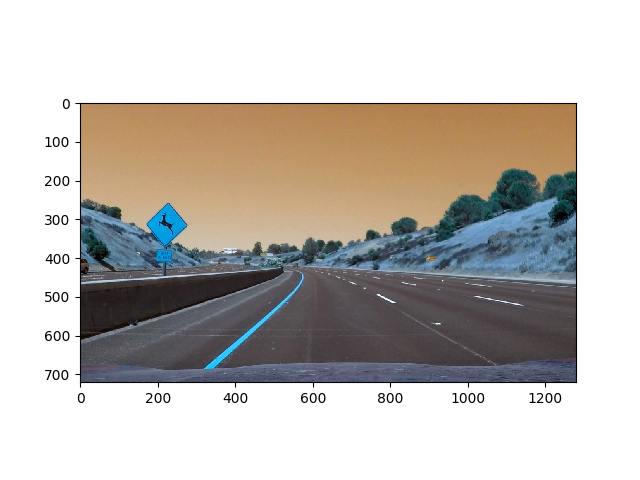

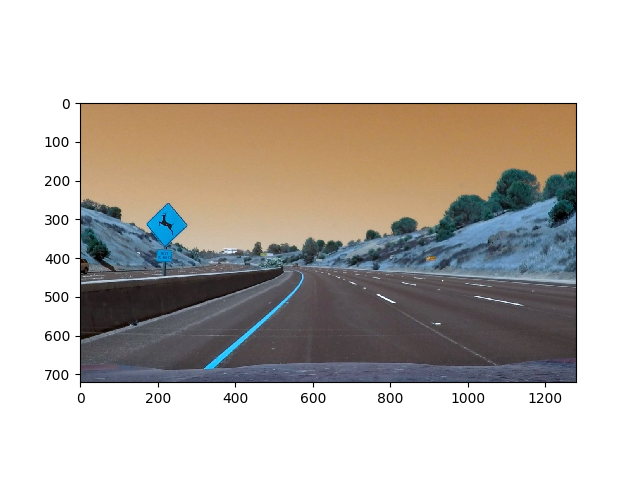

xy_overlap=(0.5, 0.5))Following is the result of sliding windows on the image

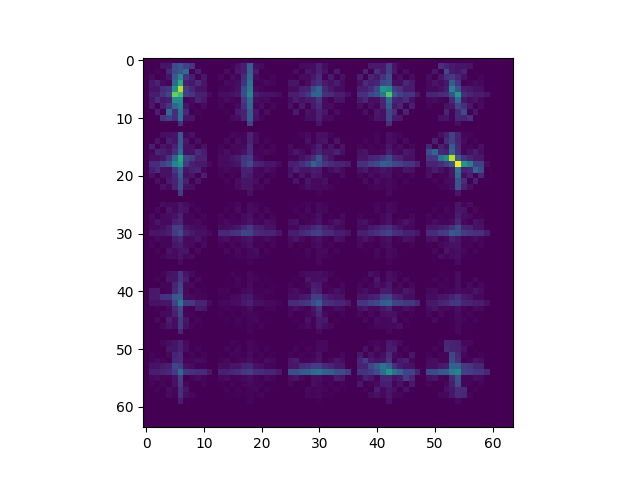

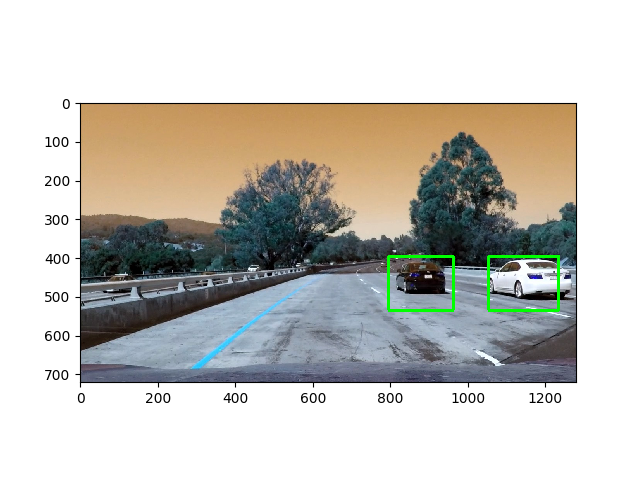

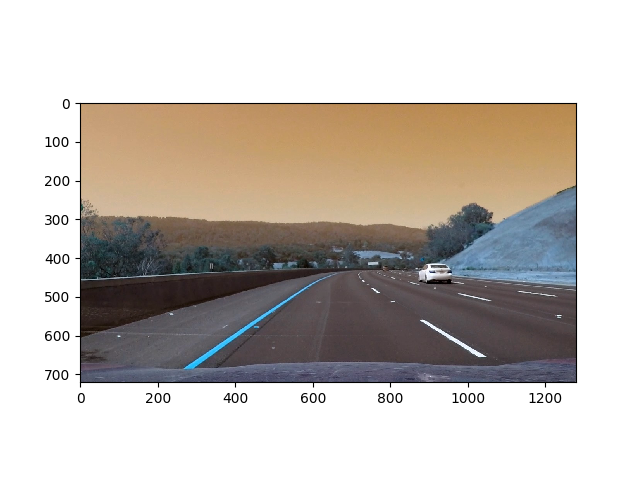

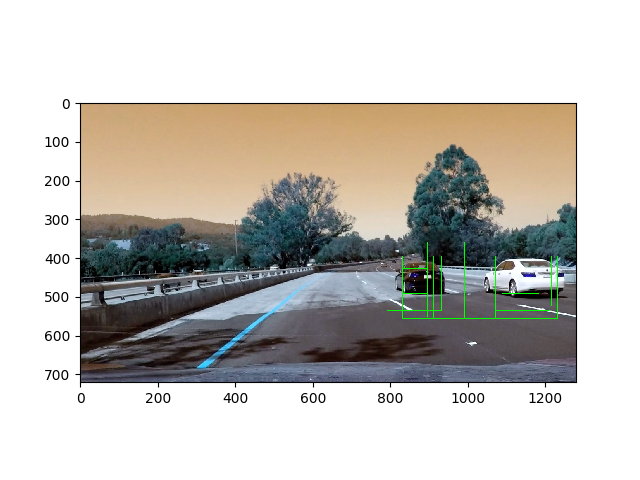

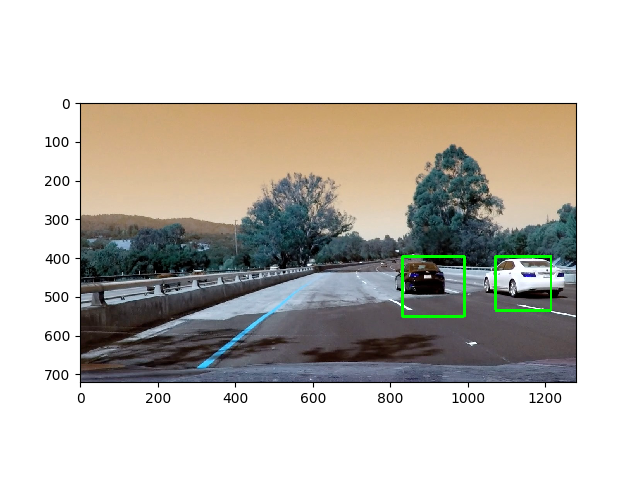

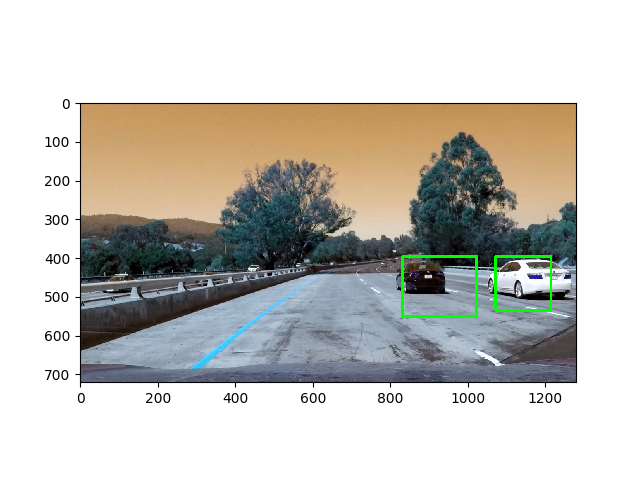

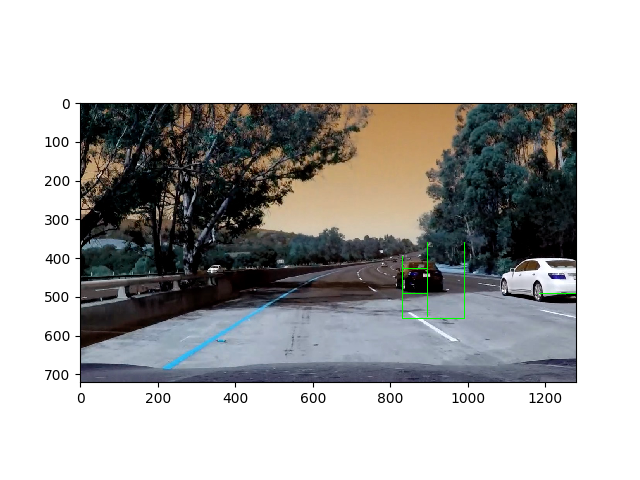

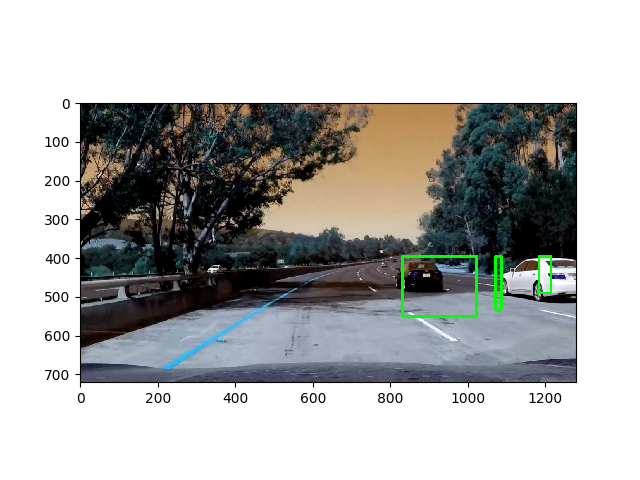

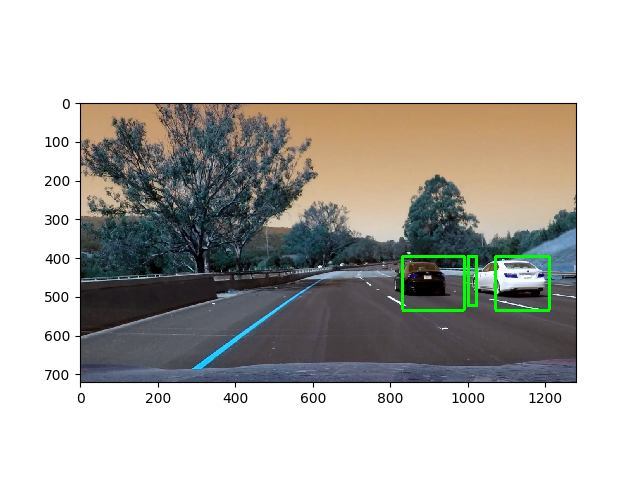
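To show how the scale tuples turn into windows, here is a minimal sliding-window sketch. It assumes a 1280×720 frame and a simplified `slide_window(x_start_stop, y_start_stop, xy_window, xy_overlap)` that takes only the region bounds, unlike the project's method, which also receives the image; both function bodies and the helper `windows_for_scales` are hypothetical.

```python
def slide_window(x_start_stop, y_start_stop, xy_window=(64, 64), xy_overlap=(0.5, 0.5)):
    """Return ((x1, y1), (x2, y2)) windows that fit entirely inside the region."""
    step_x = int(xy_window[0] * (1 - xy_overlap[0]))
    step_y = int(xy_window[1] * (1 - xy_overlap[1]))
    if step_x <= 0 or step_y <= 0:
        raise ValueError("overlap must be < 1 so the window actually moves")
    windows = []
    for y in range(y_start_stop[0], y_start_stop[1] - xy_window[1] + 1, step_y):
        for x in range(x_start_stop[0], x_start_stop[1] - xy_window[0] + 1, step_x):
            windows.append(((x, y), (x + xy_window[0], y + xy_window[1])))
    return windows


def windows_for_scales(width, height, scale_factors, xy_overlap=(0.5, 0.5)):
    """Collect windows for every (x0, x1, y0, y1, size) tuple (fractions + pixels)."""
    windows = []
    for x0, x1, y0, y1, size in scale_factors:
        windows += slide_window(
            x_start_stop=(int(x0 * width), int(x1 * width)),
            y_start_stop=(int(y0 * height), int(y1 * height)),
            xy_window=(size, size),
            xy_overlap=xy_overlap)
    return windows
```

For the first tuple `(0.4, 1.0, 0.55, 0.8, 64)` on a 1280×720 frame, the region spans x = 512..1280 and y = 396..576, and a 32-pixel step yields 23 × 4 = 92 windows. The largest scale (192 px) yields only 14, which is why large windows are cheap to add while small ones dominate the run time.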

2. Show some examples of test images to demonstrate how your pipeline is working. What did you do to optimize the performance of your classifier?

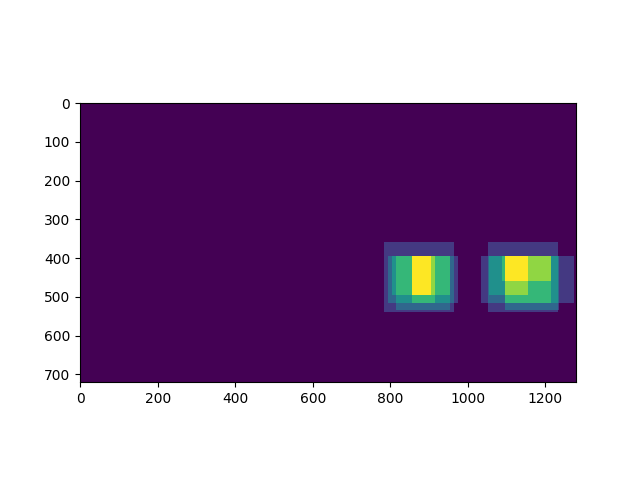

I used the parameters and window sizes described above to extract features. I then built a heatmap from the bounding boxes predicted by the classifier, thresholded it, and labeled the resulting blobs to find the final bounding boxes.
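A minimal sketch of the heatmap, threshold and blob steps, assuming boxes are given as `((x1, y1), (x2, y2))` pixel corners. It uses `scipy.ndimage.label`, the same function that older SciPy code reaches as `scipy.ndimage.measurements.label`. The names `add_heat` and `boxes_from_heatmap` and the threshold value are illustrative.

```python
import numpy as np
from scipy.ndimage import label

def add_heat(heatmap, boxes):
    """Add 1 to every pixel covered by each positive window."""
    for (x1, y1), (x2, y2) in boxes:
        heatmap[y1:y2, x1:x2] += 1
    return heatmap

def boxes_from_heatmap(heatmap, threshold):
    """Threshold the heatmap, label connected blobs, return one box per blob."""
    labels, n_blobs = label(heatmap > threshold)
    boxes = []
    for blob in range(1, n_blobs + 1):
        ys, xs = np.nonzero(labels == blob)
        # Tight (inclusive) bounding box around the blob's pixels
        boxes.append(((int(xs.min()), int(ys.min())), (int(xs.max()), int(ys.max()))))
    return boxes
```

Two overlapping detections on the same car reinforce each other, while an isolated single detection never rises above a threshold of 1 and is dropped: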

Video Implementation

1. Provide a link to your final video output. Your pipeline should perform reasonably well on the entire project video (somewhat wobbly or unstable bounding boxes are ok as long as you are identifying the vehicles most of the time with minimal false positives.)

Here's a link to my video result

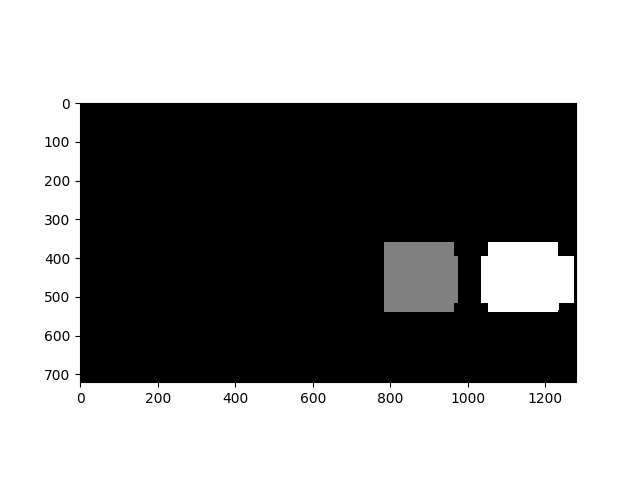

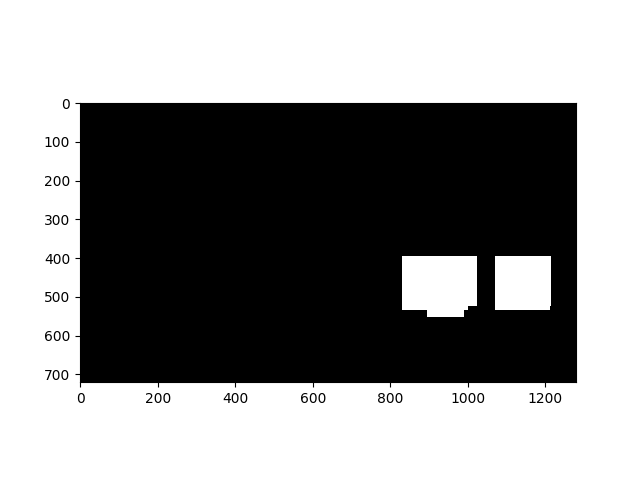

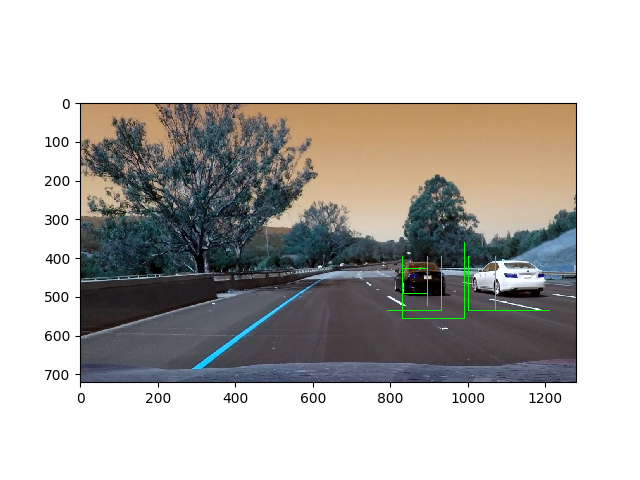

2. Describe how (and identify where in your code) you implemented some kind of filter for false positives and some method for combining overlapping bounding boxes.

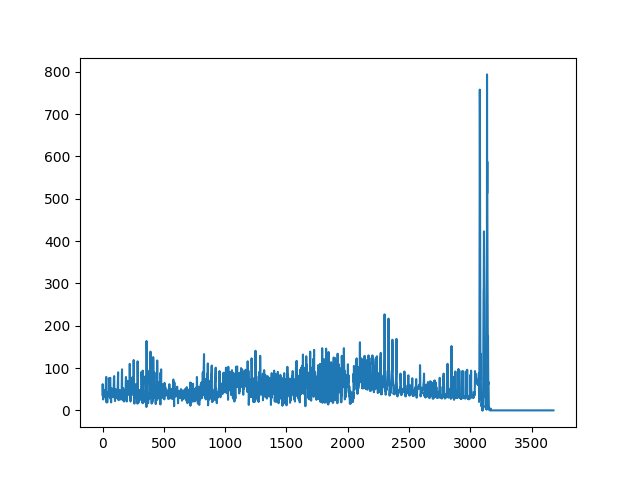

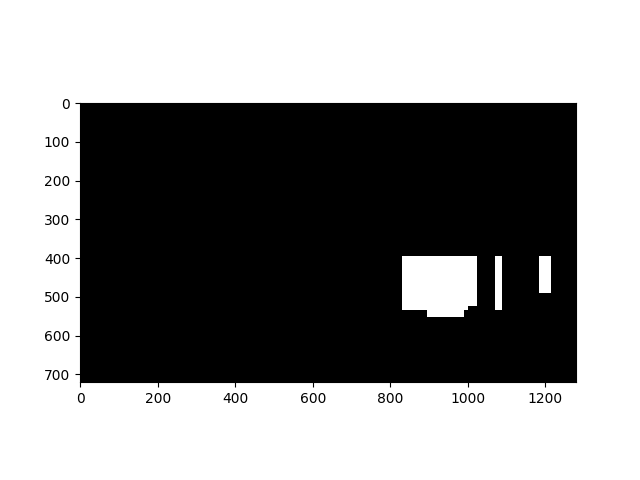

I kept only the predictions whose probability exceeded a given threshold and built a heatmap from their bounding boxes. I then binarized the heatmap by thresholding it and used scipy.ndimage.measurements.label() to find the blobs. To track objects and reduce the effect of false positives, I stored the previous heatmap, added a scaled version of it to the next heatmap, and raised the heatmap threshold. As a result, regions where a car was previously detected accumulate more heat and pass the threshold easily, while detections that appear in only one frame fall below it.
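The probability filter and temporal heatmap blending can be sketched as follows. This is a hypothetical implementation: the class `HeatmapTracker`, the function `confident_windows`, `decay=0.5`, `threshold=2.5` and `prob_threshold=0.7` are illustrative values, and storing the *blended* heatmap (so older detections fade geometrically) is an assumption, since the writeup does not say whether the raw or blended map is kept.

```python
import numpy as np

def confident_windows(windows, car_probs, prob_threshold=0.7):
    """Keep windows whose car probability (e.g. predict_proba(X)[:, 1]) is high."""
    return [w for w, p in zip(windows, car_probs) if p > prob_threshold]

class HeatmapTracker:
    """Blend a scaled copy of the previous heatmap into the current one."""

    def __init__(self, decay=0.5, threshold=2.5):
        self.decay = decay          # weight of the previous frame's heat
        self.threshold = threshold  # raised to compensate for the added heat
        self.previous = None

    def update(self, current):
        current = np.asarray(current, dtype=np.float64)
        if self.previous is None:
            combined = current
        else:
            combined = current + self.decay * self.previous
        self.previous = combined    # assumption: keep the blended map
        return combined > self.threshold  # binary mask, ready for labeling
```

With these numbers a car seen in one frame only (heat 2) is not yet reported, but the same car seen again (2 + 0.5·2 = 3) passes the threshold, while a new one-frame false positive (heat 2) is still rejected. The trade-off is a one-frame delay before a new car is confirmed.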

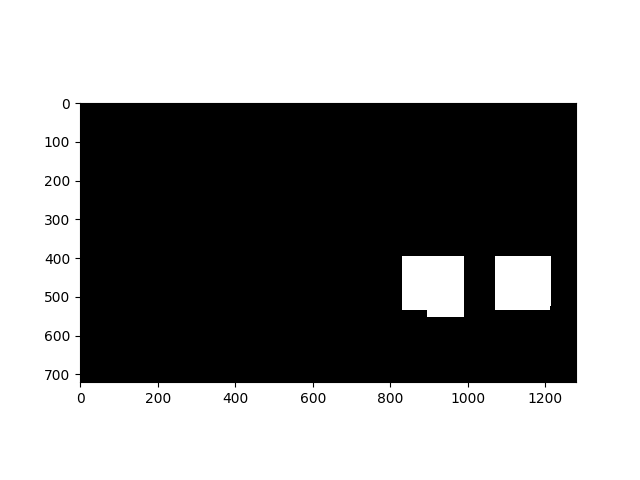

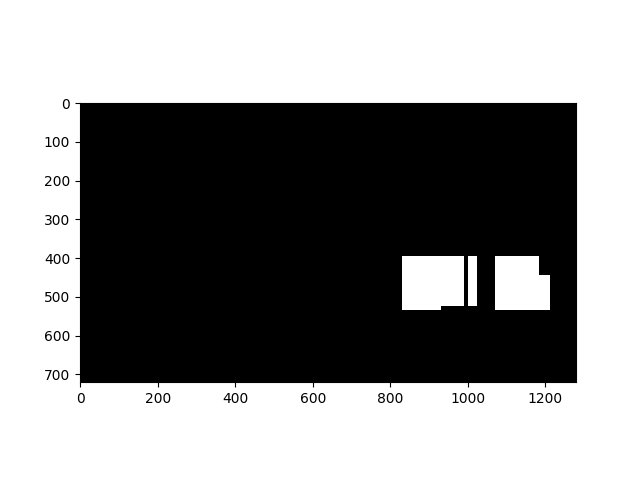

Here is the output of scipy.ndimage.measurements.label() on the integrated heatmap from all six frames:

Discussion

1. Briefly discuss any problems / issues you faced in your implementation of this project. Where will your pipeline likely fail? What could you do to make it more robust?

This implementation is very slow and requires a lot of parameter tuning. A deep learning detector such as Faster R-CNN or YOLO would likely be faster and more robust: a single forward pass over the whole frame takes tens to hundreds of milliseconds on a GPU (YOLO runs in real time), and there is no need to choose features manually. Duplicate and outlier detections from such a network can also be removed with non-maximum suppression.
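Non-maximum suppression is short enough to sketch. A minimal greedy NumPy version, assuming each detection is an `[x1, y1, x2, y2]` box with one confidence score; the name `non_max_suppression` and `iou_threshold=0.5` are illustrative defaults, not tuned values.

```python
import numpy as np

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of boxes kept, highest score first."""
    boxes = np.asarray(boxes, dtype=np.float64)
    scores = np.asarray(scores, dtype=np.float64)
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = np.argsort(scores)[::-1]          # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Intersection rectangle between the best box and every remaining box
        ix1 = np.maximum(x1[best], x1[rest])
        iy1 = np.maximum(y1[best], y1[rest])
        ix2 = np.minimum(x2[best], x2[rest])
        iy2 = np.minimum(y2[best], y2[rest])
        inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
        iou = inter / (areas[best] + areas[rest] - inter)
        order = rest[iou <= iou_threshold]    # drop boxes overlapping the best one
    return keep
```

Two heavily overlapping boxes on the same car (IoU ≈ 0.68) collapse to the higher-scoring one, while a distant box survives; unlike the heatmap approach, this keeps the original box shapes instead of merging them into one blob.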