PyTorch implementation of the supervised learning experiments from the paper *Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks* (MAML): https://arxiv.org/abs/1703.03400

Both the MiniImagenet and Omniglot datasets are supported! Have fun~

For the TensorFlow implementation, please visit HERE. For a first-order implementation, namely Reptile, please visit HERE.
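At its core, MAML is a two-level optimization: an inner loop adapts the shared initialization to each task using its support set, and an outer loop updates that initialization so the adapted weights do well on the task's query set. The sketch below illustrates this on a toy problem and is not the repository's code. It assumes a one-parameter linear regressor `y_hat = w * x` and a hypothetical family of tasks with random slopes, so that the second-order meta-gradient can be written out by hand with the chain rule. In a PyTorch implementation the same gradient would come from autograd instead. All function names here are illustrative.

```python
import random


def make_task(a, n, rng):
    # Hypothetical toy task: n points on the line y = a * x with x in [-1, 1].
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return xs, [a * x for x in xs]


def loss(w, xs, ys):
    # Mean squared error of the one-parameter model y_hat = w * x.
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad(w, xs, ys):
    # dL/dw = mean(2 * x * (w * x - y)).
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def hess(xs):
    # d2L/dw2 = mean(2 * x ** 2); it does not depend on w for this linear model.
    return sum(2 * x * x for x in xs) / len(xs)


def maml_step(w, tasks, inner_lr, outer_lr):
    """One second-order MAML meta-update over a meta-batch of (support, query) pairs."""
    meta_grad = 0.0
    for (xs_s, ys_s), (xs_q, ys_q) in tasks:
        # Inner loop: one gradient step on the support set adapts w to this task.
        w_task = w - inner_lr * grad(w, xs_s, ys_s)
        # Outer loop: gradient of the query loss at w_task w.r.t. the initial w.
        # By the chain rule, d(w_task)/dw = 1 - inner_lr * hess(support).
        meta_grad += grad(w_task, xs_q, ys_q) * (1.0 - inner_lr * hess(xs_s))
    # Average the meta-gradient over the tasks in the meta-batch.
    return w - outer_lr * meta_grad / len(tasks)


def meta_train(steps=200, meta_batchsz=4, seed=0):
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        tasks = []
        for _ in range(meta_batchsz):
            a = rng.uniform(0.5, 1.5)  # each task draws its own slope
            # 5 support points and 10 query points from the same task.
            tasks.append((make_task(a, 5, rng), make_task(a, 10, rng)))
        w = maml_step(w, tasks, inner_lr=0.1, outer_lr=0.1)
    return w
```

Calling `meta_train()` should return an initialization close to 1.0, the mean slope of this task family, from which a single inner step adapts well to any sampled task. Dropping the `(1.0 - inner_lr * hess(xs_s))` factor would turn this into the first-order approximation.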

Requirements:

- Python 3.x
- PyTorch 0.4+

The MiniImagenet dataset is expected in the following layout:

    miniimagenet/
    ├── images
    │   ├── n0210891500001298.jpg
    │   ├── n0287152500001298.jpg
    │   └── ...
    ├── test.csv
    ├── val.csv
    └── train.csv
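Each split file (`train.csv`, `val.csv`, `test.csv`) lists the images belonging to that split. The following is a minimal sketch of reading one into a class-to-images mapping. It assumes, since the layout above does not say so, that each CSV has a header row `filename,label` and that every filename refers to a file inside `images/`. `load_split` is a hypothetical helper, not a function from this repository.

```python
import csv
import os
from collections import defaultdict


def load_split(root, mode):
    """Group the image paths of one split by class label.

    Assumption (hypothetical format): <root>/<mode>.csv has a header row
    'filename,label', and each filename lives in <root>/images/.
    """
    by_label = defaultdict(list)
    with open(os.path.join(root, mode + ".csv"), newline="") as f:
        for row in csv.DictReader(f):
            # Resolve each filename against the shared images/ directory.
            by_label[row["label"]].append(os.path.join(root, "images", row["filename"]))
    return dict(by_label)
```

For example, `load_split('/path/to/miniimagenet/', 'train')` would return a dict whose keys are the training classes, each mapped to its list of image paths.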

- modify the

pathinminiimagenet_train.py:

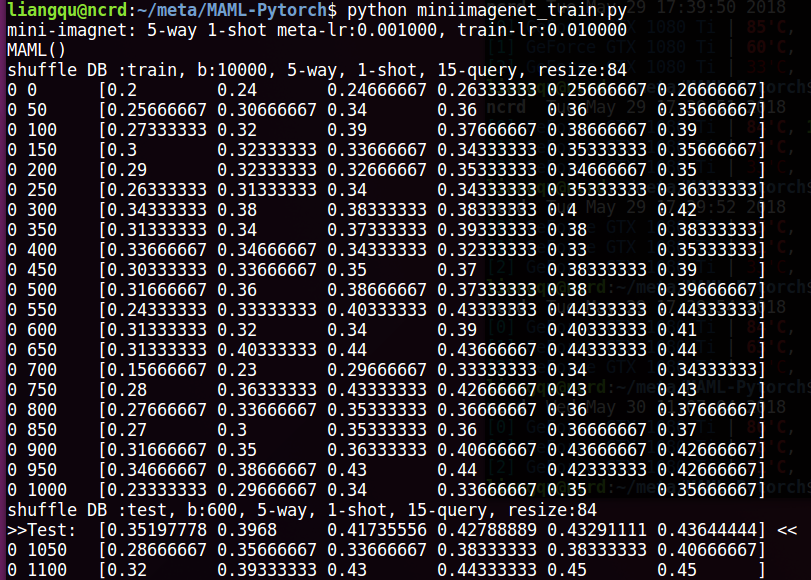

# batchsz here means total episode number

mini = MiniImagenet('/hdd1/liangqu/datasets/miniimagenet/', mode='train', n_way=n_way, k_shot=k_shot, k_query=k_query,

batchsz=10000, resize=imgsz)

...

mini_test = MiniImagenet('/hdd1/liangqu/datasets/miniimagenet/', mode='test', n_way=n_way, k_shot=k_shot, k_query=k_query,

batchsz=600, resize=imgsz)to your actual data path.
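Here `batchsz` is the total number of episodes (tasks) the dataset pre-samples, and each episode is an `n_way`-way, `k_shot`-shot classification problem with `k_query` query images per class. The sketch below shows what such episode sampling typically looks like. It is not the `MiniImagenet` class itself: `sample_episodes` and its `by_label` input (a mapping from class label to image paths) are hypothetical, and relabeling the chosen classes as `0..n_way-1` within each episode is the usual convention rather than something confirmed by this README.

```python
import random


def sample_episodes(by_label, n_way, k_shot, k_query, batchsz, seed=0):
    """Pre-sample `batchsz` N-way K-shot episodes (a hypothetical sketch).

    Each episode is (support, query): support holds n_way * k_shot
    (path, class_index) pairs and query holds n_way * k_query pairs.
    """
    rng = random.Random(seed)
    labels = sorted(by_label)
    episodes = []
    for _ in range(batchsz):
        support, query = [], []
        # Pick n_way distinct classes and relabel them 0..n_way-1 for this episode.
        for idx, label in enumerate(rng.sample(labels, n_way)):
            # Draw support and query images without overlap inside the class.
            picks = rng.sample(by_label[label], k_shot + k_query)
            support += [(p, idx) for p in picks[:k_shot]]
            query += [(p, idx) for p in picks[k_shot:]]
        episodes.append((support, query))
    return episodes
```

Under this reading, `mode='train'` with `batchsz=10000` yields 10,000 training episodes, while `mode='test'` with `batchsz=600` yields 600 evaluation episodes whose accuracies are averaged.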

Few-shot classification accuracy on MiniImagenet:

| Model | Fine Tune | 5-way 1-shot | 5-way 5-shot | 20-way 1-shot | 20-way 5-shot |
|---|---|---|---|---|---|
| Matching Nets | N | 43.56% | 55.31% | 17.31% | 22.69% |
| Meta-LSTM | | 43.44% | 60.60% | 16.70% | 26.06% |
| MAML | Y | 48.7% | 63.11% | 16.49% | 19.29% |
| Ours | Y | 48.1% | 62.2% | - | - |

Run `python omniglot_train.py`; the program will download the Omniglot dataset automatically.

If training runs out of GPU memory, decrease the value of `meta_batchsz` to fit your GPU's memory capacity.
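Why this helps: assuming `meta_batchsz` counts the tasks (episodes) processed in one meta-update, every task contributes `n_way * (k_shot + k_query)` images to the forward and backward pass, so activation memory grows roughly in proportion to `meta_batchsz`. The small helpers below make that arithmetic explicit. They are hypothetical, and the numbers in the usage line are illustrative rather than the script's defaults.

```python
def images_per_meta_batch(meta_batchsz, n_way, k_shot, k_query):
    # Assumes meta_batchsz is the number of tasks per meta-update;
    # each task holds n_way * (k_shot + k_query) images.
    return meta_batchsz * n_way * (k_shot + k_query)


def largest_meta_batchsz(image_budget, n_way, k_shot, k_query):
    # Largest task count whose images fit a hypothetical per-step image budget
    # (at least one task, since a meta-update needs one).
    return max(1, image_budget // (n_way * (k_shot + k_query)))
```

For example, a 5-way 1-shot setup with 15 query images per class uses 80 images per task, so `images_per_meta_batch(32, 5, 1, 15)` gives 2560 images, and halving `meta_batchsz` to 16 halves that to 1280.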