Haozhi Cao1, Yuecong Xu2, Jianfei Yang3*, Pengyu Yin1, Xingyu Ji1, Shenghai Yuan1, Lihua Xie1

1: Centre for Advanced Robotics Technology Innovation (CARTIN), Nanyang Technological University

2: Department of Electrical and Computer Engineering, National University of Singapore

3: School of MAE and School of EEE, Nanyang Technological University

📄 [Arxiv] | 💾 [Project Site] | 📖 [OpenAccess]

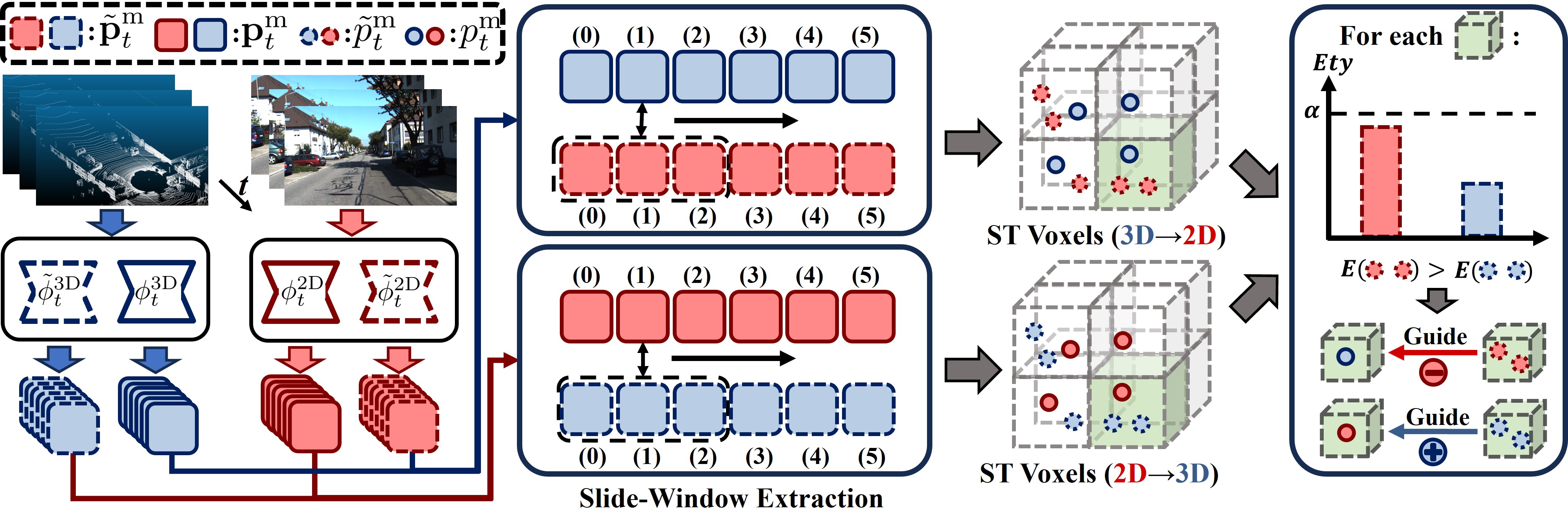

Latte is a Multi-Modal Test-Time Adaptation (MM-TTA) method that leverages estimated 3D poses to retrieve reliable spatial-temporal voxels for test-time adaptation (TTA). The overall structure is shown in the figure above.
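The sketch below illustrates the general idea of pose-based spatial-temporal voxel aggregation. It rests on our own assumptions and does not reproduce Latte's actual retrieval procedure. We assume each scan has an estimated 4×4 sensor-to-world pose, and that per-point class probabilities from a temporal window of scans are averaged inside a shared world-frame voxel grid. A voxel counts as "reliable" when the entropy of its averaged prediction is below a threshold. All names (`reliable_voxels`, `voxel_size`, `max_entropy`) are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Pose = Sequence[Sequence[float]]  # assumed: 4x4 homogeneous sensor-to-world transform
Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def to_world(pose: Pose, point: Point) -> Point:
    """Apply a 4x4 rigid transform to a 3D point."""
    x, y, z = point
    return tuple(pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3] for r in range(3))


def voxel_of(point: Point, voxel_size: float) -> VoxelKey:
    """Integer voxel index of a world-frame point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (natural log) of a class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def reliable_voxels(frames, voxel_size: float = 0.5, max_entropy: float = 0.5) -> Dict[VoxelKey, List[float]]:
    """frames: iterable of (pose, points, probs), one entry per scan in the temporal window.

    Returns the averaged class distribution of every voxel whose entropy is <= max_entropy.
    """
    sums: Dict[VoxelKey, List[float]] = {}
    counts: Dict[VoxelKey, int] = {}
    for pose, points, probs in frames:
        for point, prob in zip(points, probs):
            # Points from different scans that land in the same world voxel are pooled.
            key = voxel_of(to_world(pose, point), voxel_size)
            acc = sums.setdefault(key, [0.0] * len(prob))
            for c, p in enumerate(prob):
                acc[c] += p
            counts[key] = counts.get(key, 0) + 1
    reliable = {}
    for key, acc in sums.items():
        mean = [s / counts[key] for s in acc]
        if entropy(mean) <= max_entropy:  # low entropy: consistent, confident predictions over time
            reliable[key] = mean
    return reliable
```

Consider two scans related by a 1 m shift along x. In one voxel both scans predict class 0 with probability 0.9, so that voxel is kept. In another voxel the scans disagree (0.9 vs 0.1 for class 0). Its averaged prediction is [0.5, 0.5], and it is dropped.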

To ease environment setup, we recommend using Docker and the NVIDIA Container Toolkit. With Docker installed, you can build the Docker image for Latte locally from this Dockerfile. Run `docker build -t latte .` in the directory containing the Dockerfile.

You can then run a container from this image. Pass `--gpus all` to `docker run` so the container can access your GPUs through the NVIDIA Container Toolkit. Next, install the additional prerequisites: go to this repo folder inside the container and run `bash install.sh`. You may ignore warnings saying that some package versions are incompatible.

Please refer to DATA_PREPARE.md for the data preparation and pre-processing details.

We recommend creating a separate directory for saving logs and checkpoints and linking it into this repo with `mkdir latte/exp && ln -sfn /path/to/logs/checkpoints latte/exp/models`.
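You may prefer to script this step, for example from a Python setup script. The sketch below mirrors the `mkdir`/`ln -sfn` step above using only `pathlib`. The helper name `link_checkpoint_dir` is hypothetical. Unlike the shell command, it also creates the storage directory if it is missing.

```python
from pathlib import Path


def link_checkpoint_dir(repo_root, storage_dir):
    """Create latte/exp and point latte/exp/models at storage_dir (like `ln -sfn`)."""
    exp_dir = Path(repo_root) / "latte" / "exp"
    exp_dir.mkdir(parents=True, exist_ok=True)  # mkdir latte/exp (no error if it exists)
    storage = Path(storage_dir).resolve()
    storage.mkdir(parents=True, exist_ok=True)  # assumption: also create the log/checkpoint dir
    link = exp_dir / "models"
    if link.is_symlink():
        link.unlink()  # like -f/-n: replace an existing symlink instead of descending into it
    # Raises FileExistsError if a real file or directory already occupies latte/exp/models.
    link.symlink_to(storage, target_is_directory=True)
    return link
```

For example, `link_checkpoint_dir(".", "/path/to/logs/checkpoints")` run from the repo root has the same effect as the shell command. Calling it again with a different storage path re-points the link.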

To get the pretrained models, the easiest way is to:

- Download our prepared multi-modal networks from the Google Drive links provided in the main results table.

- Update `MODEL_2D.CKPT_PATH` and `MODEL_3D.CKPT_PATH` in the config of each TTA method to point to the downloaded checkpoints before running the TTA process.
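`MODEL_2D.CKPT_PATH` and `MODEL_3D.CKPT_PATH` use dotted notation for nested config entries. The minimal sketch below sets them on an already-loaded config dict. It assumes the YAML configs nest them as `MODEL_2D: {CKPT_PATH: ...}` and `MODEL_3D: {CKPT_PATH: ...}`. The helper name `set_dotted` and the checkpoint paths are placeholders.

```python
def set_dotted(cfg: dict, dotted_key: str, value) -> dict:
    """Set cfg["A"]["B"] = value for dotted_key "A.B", creating missing levels."""
    *parents, leaf = dotted_key.split(".")
    node = cfg
    for name in parents:
        node = node.setdefault(name, {})  # walk (or create) each nested level
    node[leaf] = value
    return cfg


cfg = {"MODEL_2D": {}, "MODEL_3D": {}}  # stand-in for a loaded TTA config
set_dotted(cfg, "MODEL_2D.CKPT_PATH", "/path/to/2d_checkpoint.pth")  # placeholder paths
set_dotted(cfg, "MODEL_3D.CKPT_PATH", "/path/to/3d_checkpoint.pth")
```

To edit a config file on disk, you could load it with PyYAML's `yaml.safe_load`, apply the helper, and write it back with `yaml.safe_dump`. That round trip drops YAML comments, so editing the two lines by hand may be simpler.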

Alternatively, you can pretrain your own models. First, download the pretrained checkpoint `segformer.b1.512x512.ade.160k.pth` from the official SegFormer repo and move it to `latte/models/pretrained/`. Then run the following command:

```bash
# Taking S-to-S as an example
$ CUDA_VISIBLE_DEVICES=0 python latte/train/train_baseline.py --cfg=configs/synthia_semantic_kitti/baseline.yaml
```

Note that you need to specify the GPU ID via the `CUDA_VISIBLE_DEVICES` environment variable (e.g., `CUDA_VISIBLE_DEVICES=0`).
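When running several configs from a script, you can pin the GPU per run by passing a copy of the environment to `subprocess.run`. The helpers below (`build_launch`, `launch`) are a hypothetical sketch, not part of this repo. The script and config paths in the example are the ones from the command above.

```python
import os
import subprocess
import sys


def build_launch(script: str, cfg: str, gpu_id: int = 0):
    """Return (argv, env) equivalent to `CUDA_VISIBLE_DEVICES=<gpu_id> python <script> --cfg=<cfg>`."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu_id))  # copy; os.environ is not mutated
    argv = [sys.executable, script, f"--cfg={cfg}"]  # reuse the caller's Python interpreter
    return argv, env


def launch(script: str, cfg: str, gpu_id: int = 0) -> None:
    argv, env = build_launch(script, cfg, gpu_id)
    subprocess.run(argv, env=env, check=True)  # raises CalledProcessError on a non-zero exit
```

For example, `launch("latte/train/train_baseline.py", "configs/synthia_semantic_kitti/baseline.yaml", gpu_id=0)`, run from the repo root, is equivalent to the command above.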

After updating the paths to the corresponding pretrained checkpoints, you can run test-time adaptation with the respective config. Taking Latte as an example:

```bash
# Taking S-to-S as an example
$ CUDA_VISIBLE_DEVICES=0 python latte/tta/tta.py --cfg=configs/synthia_semantic_kitti/latte.yaml
```

After the TTA process, class-wise results are recorded in a `*.xlsx` file in the model directory `latte/exp/models/RUN_NAME`. The following table lists the overall results of Latte and each compared method, along with links to our pretrained checkpoints.

| Method | MM | USA→Singapore (checkpoints) | | | A2D2→KITTI (checkpoints) | | | Synthia→KITTI (checkpoints) | | | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | 2D | 3D | xM | 2D | 3D | xM | 2D | 3D | xM | |
| TENT | ✘ | 36.8 | 36.5 | 42.1 | 43.3 | 44.4 | 49.1 | 22.4 | 35.2 | 37.3 | 42.8 |
| ETA | ✘ | 36.8 | 34.2 | 43.5 | 43.2 | 42.4 | 49.8 | 22.4 | 30.6 | 32.9 | 42.1 |
| SAR | ✘ | 36.7 | 35.7 | 43.8 | 43.2 | 44.5 | 50.3 | 21.1 | 32.2 | 34.1 | 42.7 |
| SAR-rs | ✘ | 36.7 | 34.8 | 43.7 | 43.3 | 43.1 | 49.9 | 22.2 | 30.6 | 32.8 | 42.1 |
| xMUDA | ✔ | 20.3 | 36.4 | 33.6 | 13.0 | 33.2 | 21.3 | 8.4 | 22.2 | 18.9 | 21.6 |
| xMUDA+PL | ✔ | 36.5 | 40.8 | 45.4 | 42.7 | 48.3 | 50.9 | 28.2 | 32.6 | 34.5 | 43.6 |
| MMTTA | ✔ | 37.2 | 41.6 | 45.6 | 44.4 | 50.6 | 52.3 | 27.3 | 33.5 | 34.4 | 44.1 |
| PsLabel | ✔ | 37.7 | 35.8 | 41.7 | 42.8 | 47.0 | 49.4 | 29.5 | 34.2 | 36.5 | 42.5 |
| Latte (Ours) | ✔ | 37.5 | 41.0 | 46.2 | 46.0 | 53.0 | 54.2 | 33.3 | 39.3 | 41.7 | 47.4 |
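Reading the table, the Avg column appears to be the mean of the three xM scores. For example, this reproduces the TENT, MMTTA and Latte rows. The snippet below is our own check of that arithmetic, not an evaluation script from this repo. The dictionary and function names are hypothetical.

```python
# xM scores copied from the table, in order: USA→Singapore, A2D2→KITTI, Synthia→KITTI
XM_SCORES = {
    "TENT": (42.1, 49.1, 37.3),
    "MMTTA": (45.6, 52.3, 34.4),
    "Latte (Ours)": (46.2, 54.2, 41.7),
}


def xm_average(scores):
    """Mean of the three benchmark xM scores, rounded to one decimal like the table."""
    return round(sum(scores) / len(scores), 1)


for method, scores in XM_SCORES.items():
    print(f"{method}: {xm_average(scores)}")
# prints:
# TENT: 42.8
# MMTTA: 44.1
# Latte (Ours): 47.4
```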

- [2024.09] Released pretraining and TTA details. See you in Milan!

- [2024.09] Installation and data preparation details released. The full release will be available soon!

- [2024.08] We are refactoring our code, which will be available shortly. Stay tuned!

- [2024.07] Our paper has been accepted to ECCV 2024! Check out our paper on arXiv.

We greatly appreciate the contributions of the following public repos:

For any further questions, please contact Haozhi Cao (haozhi002@ntu.edu.sg)

```bibtex
@article{cao2024reliable,
  title={Reliable Spatial-Temporal Voxels For Multi-Modal Test-Time Adaptation},
  author={Cao, Haozhi and Xu, Yuecong and Yang, Jianfei and Yin, Pengyu and Ji, Xingyu and Yuan, Shenghai and Xie, Lihua},
  journal={arXiv preprint arXiv:2403.06461},
  year={2024}
}
```