This repository contains the PyTorch implementation of PromptMR, an unrolled model for multi-coil MRI reconstruction. For more details, see our paper, *Fill the K-Space and Refine the Image: Prompting for Dynamic and Multi-Contrast MRI Reconstruction*.

- [2023/10/15] 🔥 We have released training and inference code, along with pretrained PromptMR models, for both the CMRxRecon and fastMRI multi-coil knee datasets. On these datasets, the models outperform existing state-of-the-art methods (see the paper for quantitative comparisons).

- [2023/10/12] 🥇 We won 1st place in both the Cine and T1/T2 Mapping reconstruction tasks of the CMRxRecon Challenge at MICCAI 2023!

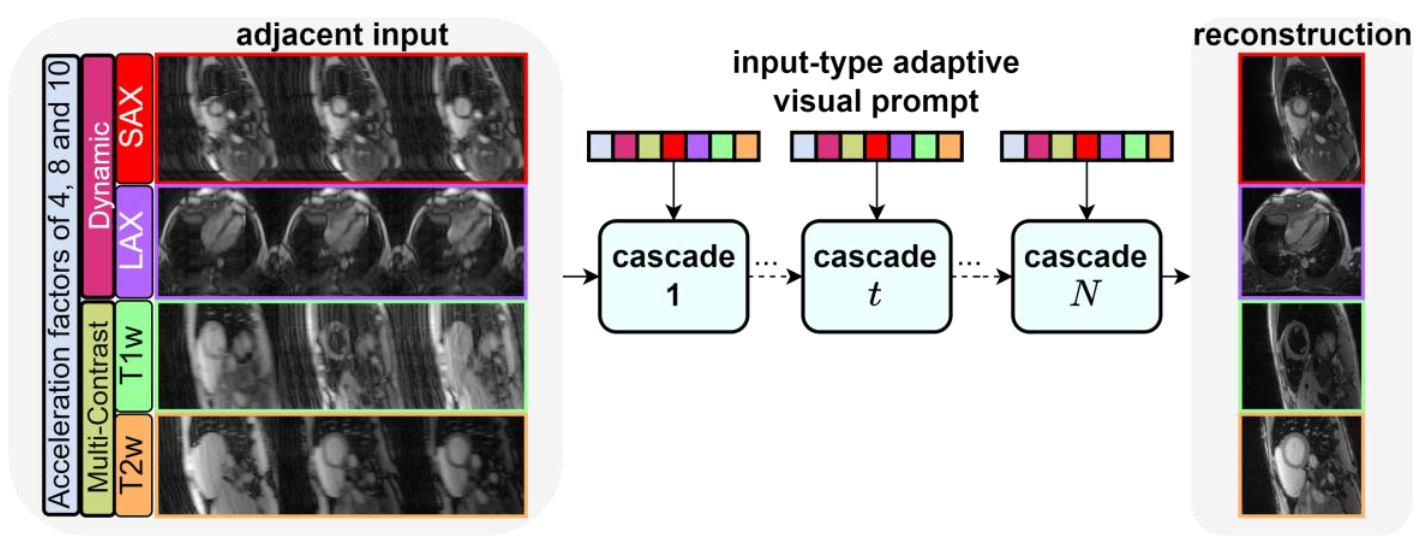

Overview of PromptMR: an all-in-one unrolled model for MRI reconstruction. Adjacent inputs, depicted in the image domain for visual clarity, provide neighboring k-space information for reconstruction. To accommodate different input types, an input-type adaptive visual prompt is integrated into each cascade of the unrolled architecture to guide the reconstruction process.
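To make the unrolled design more concrete, here is a minimal NumPy sketch of a generic unrolled reconstruction loop with soft data consistency, in the style of E2E-VarNet. It is not this repository's implementation. It assumes single-coil data (no coil sensitivity maps), replaces the learned PromptUnet with a hypothetical `toy_denoiser`, and uses hypothetical scalars in `PROMPTS` in place of learned input-type prompts. All names (`unrolled_recon`, `toy_denoiser`, `PROMPTS`, `eta`) are illustrative assumptions. Adjacent frames are stacked along the first axis so the denoiser can draw on neighboring information.

```python
import numpy as np


def fft2c(x):
    """Centered, orthonormal 2D FFT over the last two axes."""
    axes = (-2, -1)
    return np.fft.fftshift(
        np.fft.fft2(np.fft.ifftshift(x, axes=axes), norm="ortho"), axes=axes
    )


def ifft2c(k):
    """Centered, orthonormal 2D inverse FFT over the last two axes."""
    axes = (-2, -1)
    return np.fft.fftshift(
        np.fft.ifft2(np.fft.ifftshift(k, axes=axes), norm="ortho"), axes=axes
    )


# Hypothetical input-type prompts. PromptMR learns prompt tensors; scalars are
# used here only to show the denoiser being conditioned on the input type.
PROMPTS = {"cine": 0.5, "t1_mapping": 0.25, "t2_mapping": 0.25}


def toy_denoiser(images, prompt):
    """Placeholder for the learned denoiser (hypothetical).

    images: (num_adjacent, H, W) complex images of adjacent frames/slices.
    Returns a correction that, once subtracted, pulls every frame toward the
    mean of all adjacent frames, scaled by the prompt.
    """
    return prompt * (images - images.mean(axis=0, keepdims=True))


def unrolled_recon(kspace0, mask, input_type, num_cascades=4, eta=1.0):
    """Generic unrolled loop with soft data consistency (E2E-VarNet style).

    kspace0: (num_adjacent, H, W) undersampled k-space, zeros where unsampled.
    mask: (H, W) boolean sampling mask, broadcast over the adjacent axis.
    """
    prompt = PROMPTS[input_type]
    k = kspace0.copy()
    for _ in range(num_cascades):
        # Soft data consistency: penalize deviation from the measured samples.
        soft_dc = eta * mask * (k - kspace0)
        # Refinement computed in the image domain, then mapped back to k-space.
        model_term = fft2c(toy_denoiser(ifft2c(k), prompt))
        k = k - soft_dc - model_term
    return ifft2c(k)


rng = np.random.default_rng(0)
frames = rng.standard_normal((3, 32, 32)) + 1j * rng.standard_normal((3, 32, 32))
mask = rng.random((32, 32)) < 0.3
recon = unrolled_recon(fft2c(frames) * mask, mask, "cine")
print(recon.shape)  # (3, 32, 32)
```

Each cascade repeats the same two steps: a soft data-consistency term that pulls the measured k-space samples back toward the acquisition, and a prompt-conditioned refinement computed in the image domain. Changing the prompt alters the denoiser's behavior without changing the architecture, which loosely mirrors the role of the input-type prompts described above.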

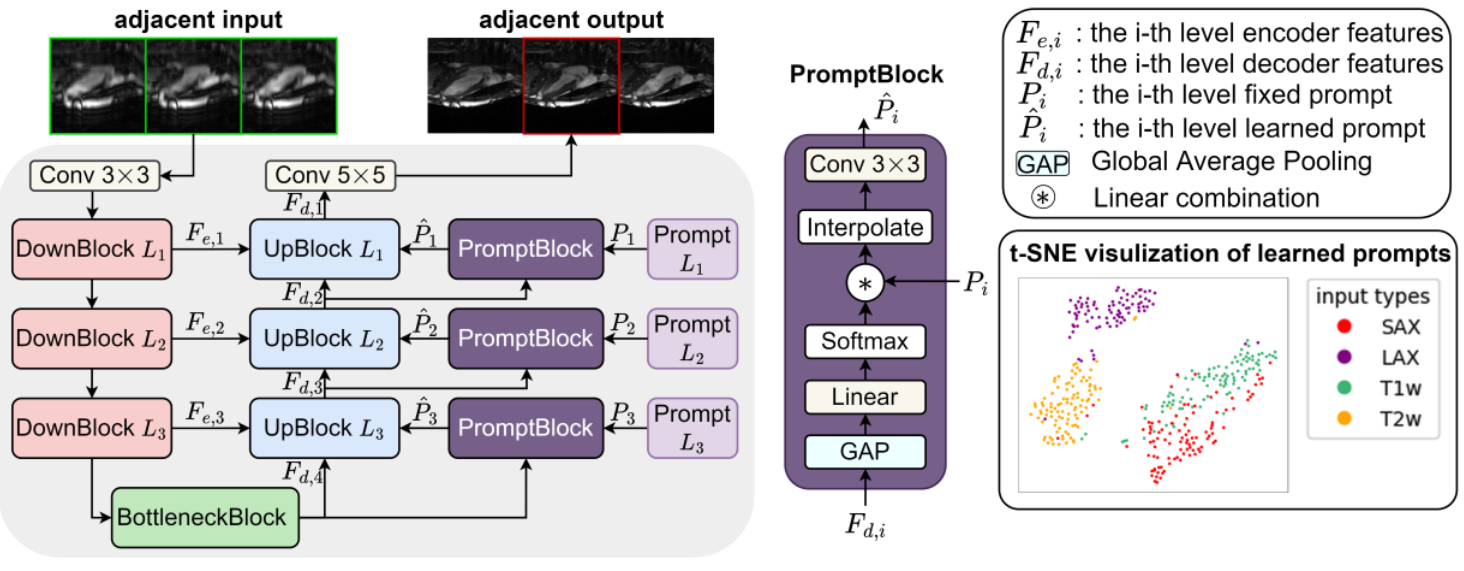

Overview of PromptUnet, the denoiser in each cascade of PromptMR. The PromptBlocks generate adaptively learned prompts at multiple levels, which are integrated with decoder features in the UpBlocks to enable rich hierarchical context learning.
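The prompt-generation idea can be sketched in NumPy as follows, assuming a PromptIR-style design: a set of prompt components is mixed by softmax weights predicted from globally pooled features, resized to the feature resolution, and concatenated with decoder features. `PromptBlockSketch`, `fuse_in_up_block`, and all parameter names are hypothetical. The components and weights are random rather than learned, nearest-neighbour resizing stands in for interpolation, and the learned convolutions of a real PromptBlock and UpBlock are omitted.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1D array."""
    e = np.exp(z - z.max())
    return e / e.sum()


class PromptBlockSketch:
    """Hypothetical PromptIR-style prompt block.

    Stores `num_components` prompt components (random here, learned in a real
    model) and mixes them with weights predicted from the input features.
    """

    def __init__(self, channels, prompt_dim, num_components, prompt_size, rng):
        self.components = rng.standard_normal(
            (num_components, prompt_dim, prompt_size, prompt_size)
        )
        # Linear map from pooled features to one logit per prompt component.
        self.weight = 0.1 * rng.standard_normal((num_components, channels))

    def __call__(self, feat):
        # feat: (C, H, W) feature map from one level of the network.
        pooled = feat.mean(axis=(1, 2))                 # global average pooling -> (C,)
        weights = softmax(self.weight @ pooled)         # (N,) non-negative, sums to 1
        prompt = np.tensordot(weights, self.components, axes=1)  # (D, s, s)
        # Nearest-neighbour resize to the feature resolution (a real block
        # would typically interpolate and then apply a learned convolution).
        h, w = feat.shape[1:]
        rows = np.arange(h) * prompt.shape[1] // h
        cols = np.arange(w) * prompt.shape[2] // w
        return prompt[:, rows][:, :, cols]              # (D, H, W)


def fuse_in_up_block(decoder_feat, prompt):
    """Concatenate the prompt with decoder features along the channel axis.

    In a real UpBlock the result would then pass through learned layers.
    """
    return np.concatenate([decoder_feat, prompt], axis=0)


rng = np.random.default_rng(0)
block = PromptBlockSketch(channels=8, prompt_dim=4, num_components=5, prompt_size=4, rng=rng)
feat = rng.standard_normal((8, 16, 16))
prompt = block(feat)
print(prompt.shape)                          # (4, 16, 16)
print(fuse_in_up_block(feat, prompt).shape)  # (12, 16, 16)
```

Because the mixing weights depend on the incoming features, each input produces its own convex combination of the shared prompt components, which is one way prompts can adapt to the input while their parameters stay shared.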

See INSTALL.md for installation instructions and data preparation required to run this codebase.

fastMRI multi-coil knee dataset

If you find this repository useful, please consider giving it a star ⭐️ and citing our paper:

```bibtex
@article{xin2023fill,
  title={Fill the K-Space and Refine the Image: Prompting for Dynamic and Multi-Contrast MRI Reconstruction},
  author={Xin, Bingyu and Ye, Meng and Axel, Leon and Metaxas, Dimitris N},
  journal={arXiv preprint arXiv:2309.13839},
  year={2023}
}
```